Particle physics @ utopian-io - Objects isolation, histogramming and a first task request

This series of posts aims to offer a way to developers to contribute to state-of-the-art research in particle physics through the reimplementation, in the MadAnalysis 5 framework, of existing searches for new phenomena at the Large Hadron Collider, the LHC, at CERN.

The grand goal of this project is to extend the MadAnalysis 5 Public Analysis Database. The analyses included in this database are used by particle physicists to assess how given new particle physics theories are compatible with the observations at the LHC.

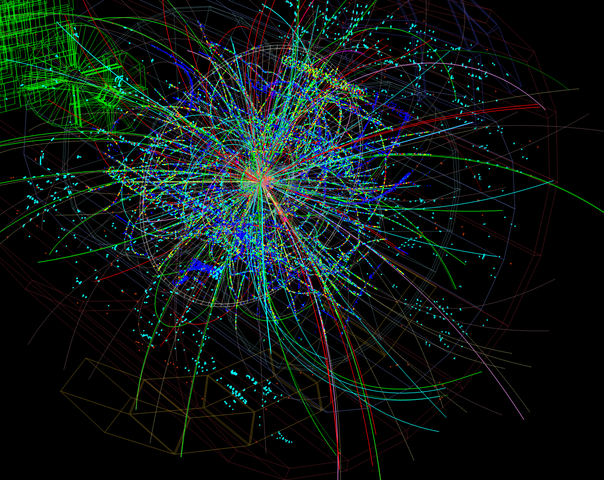

[image credits: Pcharito (CC BY-SA 3.0)]

Among all the concepts introduced so far (please have a look to the end of this post for a table of content), the most important one consists in the notion of events.

An event can be seen as a record in an LHC detector, originating either from a real collision or from a simulation.

Roughly speaking, such events contain a set of objects reconstructed from various hits and tracks left in the detector.

We have for now discussed four of these objects, namely photons, electrons, muons and jets. In parallel with the physics, we have also detailed how to access those objects and their properties in the MadAnalysis 5 framework.

At the end of the last episode of this series, we have introduced the removal of the potential overlap between different objects.

In this post, we will move on with object isolation, and then tackle a totally different topic and discuss how the MadAnalysis 5 framework can be used for histogramming. In other word, I will describe how to focus on a specific property (of an object or even the entire event) and represent it in a figure (a histogram).

This post will end up with a traditional exercise. We will today, for a change, focus on a search for dark matter performed by the CMS collaboration.

A first task request will also be proposed, targeting the development of a new add-on to MadAnalysis 5.

SUMMARY OF THE PREVIOUS EPISODES

[image credits: geralt (CC0)]

In the first post on this project I explained how the MadAnalysis 5 framework could be downloaded from LaunchPad or GitHub.

Moreover, I have also detailed how any necessary external dependencies could be downloaded and linked.

In the next two posts (here and there), we have started dealing with the real work.

I have discussed in some details the nature of four of the different classes of objects that could be reconstructed from the information stored in a detector. Together with their representation in the MadAnalysis 5 data format, those are:

- Electrons, that can be accessed through

event.rec()->electrons(), that is a vector ofRecLeptonFormatobjects. - Muons, that can be accessed through

event.rec()->muons(), that is a vector ofRecLeptonFormatobjects. - Photons, that can be accessed through

event.rec()->photons(), that is a vector ofRecPhotonFormatobjects. - Jets, that can be accessed through

event.rec()->jets(), that is a vector ofRecJetFormatobjects.

We have in parallel started to investigate the main properties of those objects (let us take a generic one called object), namely their transverse momentum (object.pt()), transverse energy (object.et()) and their pseudorapidity (object.eta() or object.abseta() for its absolute value).

The transverse momentum is related to the component of the motion of the object that is transverse to the collision axis. Similarly, the transverse energy consists in the energy deposited transversely to the same axis, and the pseudorapidity describes the object direction with respect to the collision axis.

Because of the remnants of the colliding protons, anything parallel to the collision direction is too messy to get any useful information out of it. For this reason, one restricts the analysis to what is going on in the transverse plane.

In addition, I have introduced the angular distance between two objects object1 and object2 (object1.dr(object2)) that allows us to assess how the two objects are separated from each other. This allows to lift any potential double counting occurring when the same detector signature is tagged as more than one object.

Along these lines, it is good to know that MadAnalysis 5 works in such a way that one must always remove the overlap between electrons and jets. Assuming that one has a jet collection named MyJets and an electron collection named MyElectrons, one must include into the code:

MyJets = PHYSICS->Isol->JetCleaning(MyJets, MyElectrons, 0.2);

This automatically clean the jet collection from any electron candidate.

ISOLATION

The identification, or reconstruction, of the physics objects leaving tracks in a detector is crucial. Any analysis is indeed based on imposing some requirements on those reconstructed objects, so that identifying good-quality reconstructed object is therefore crucial.

For this quality reason, one often requires the objects of interest to be well separated from each other. In other words, one imposes some isolation criteria.

One easy way to implement object isolation is to rely on the angular distance between different objects. For instance, we may impose that no object is reconstructed at a given angular distance of a specific object of interest. Implementing such an isolation is easily doable with the methods yielding object overlap removal (see my previous post).

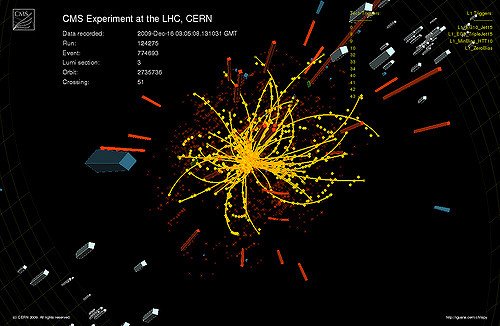

[image credits: Todd Barnard (CC BY-SA 2.0)]

However, there is another, very widely used, way. Let us take for instance this CMS search for dark matter. This analysis relies on the presence of an isolated photon.

Such an isolated photon is defined as a photon for which there is not much activity around it.

In order to assess quantitatively this activity, we consider a cone of a given radius dR centered on the photon.

One next calculates three variables Iπ, In and Iγ, related to the sum of the transverse momenta of all charged hadrons, neutral hadrons and photons present in the cone respectively. In other words, one considers all charged hadrons, neutral hadrons and photons lying at an angular distance smaller than dR of the photon.

Those sums are finally compared to the transverse momentum of the photon. If they are relatively small enough, the photon will then be tagged as isolated.

MadAnalysis 5 comes with predefined methods allowing to calculate those sums,

double Ipi = PHYSICS->Isol->eflow->sumIsolation(myphoton,event.rec(),0.3,0.,

IsolationEFlow::TRACK_COMPONENT);

double In = PHYSICS->Isol->eflow->sumIsolation(myphoton,event.rec(),0.3,0.,

IsolationEFlow::NEUTRAL_COMPONENT);

double Igam = PHYSICS->Isol->eflow->sumIsolation(myphoton,event.rec(),0.3,0.,

IsolationEFlow::PHOTON_COMPONENT);

where myphoton is the photon being tested and we picked a cone of radius dR=0.3.

The first line allows us to compute Iπ from the charged tracks around the photon (IsolationEFlow::TRACK_COMPONENT). The second one is dedicated to In that is evaluated from all neutral hadrons around the photon (IsolationEFlow::NEUTRAL_COMPONENT). The third line is finally tackling Iγ, where any photon component around the considered photon is included (IsolationEFlow::PHOTON_COMPONENT), the considered photon as well.

Such methods exist both for leptons and photons, and it is sometimes convenient to get the sum of all three variables above in one go. This is achievable by using

double Itot = PHYSICS->Isol->eflow->sumIsolation(myphoton,event.rec(),0.3,0.,

IsolationEFlow::ALL_COMPONENT);

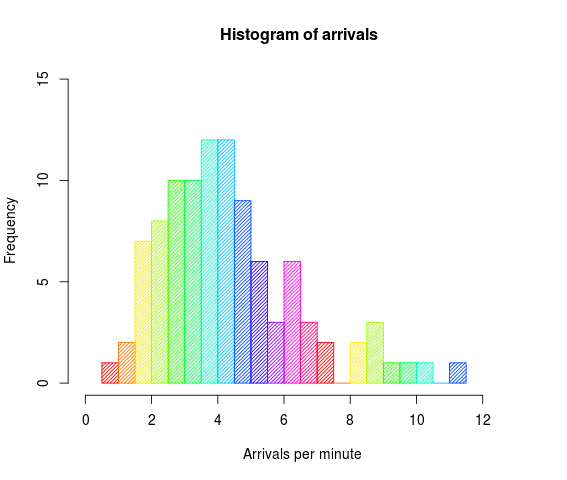

HISTOGRAMMING

In the context of a real analysis, it is often helpful to get histograms representing the distribution in a given variable. Such a variable could be a global property of the events, or a specific property of some object inside the events.

[image credits: DanielPenfield (CC BY-SA 3.0)]

By getting such a distribution for both a potential signal and the background, one can further decide whether this variable could be of any use to increase the signal-over-background ratio (that is the goal of any LHC analysis).

MadAnalysis 5 comes with varied methods making histogramming easy. The procedure is the folllowing.

1- In the Initialize method of the analysis, one needs to declare a signal region by adding

Manager()->AddRegionSelection(“dummy”);

I intentionally skip any detail about that, as this will be addressed in 2 or 3 posts.

2- Still in the Initialize method, one needs to declare the histogram we would like to show,

Manager()->AddHisto("ptj1",15,0,1200);

This declares a histogram named ptj1, containing 15 bins ranging from 0 to 1200.

3- At the beginning of the Execute method, one needs to read of the weight of each event, as not all events are necessarily equal,

Manager()->InitializeForNewEvent(event.mc()->weight());

Those weight will be accounted for when the histogram will be filled, each event therefore entering with the adequate weight.

4- Finally, one still need to fill out histogram, in the Execute method,

Manager()->FillHisto("ptj1”,10.)

The first argument refers to the name of the histogram, and the second one is the value.

5- After running the code on an input file named tth.list, the output file is Output/tth/test_analysis_X/Histograms/histos.saf where the capital X is a number increased every time the code is run. Each declared histogram is present in this XML-like file, within a given <Histo> element,

For a given histograms, one can find information about the number of bins and the range,

# nbins xmin xmax

15 0.000000e+00 1.200000e+03

some statistics and the value of each bin

<Data>

0.000000e+00 0.000000e+00 # underflow

0.000000e+00 0.000000e+00 # bin 1 / 15

3.639279e-04 0.000000e+00 # bin 2 / 15

2.362230e-04 0.000000e+00

…

</Data>

The total value corresponding to each bin is obtained from the sum of the numbers of the two columns. Once again, I will skip any detail concerning the reason behind that;)

A FIRST MADANALYSIS 5 TASK REQUEST

Completely unrelated to the rest, there is no dedicated module allowing one to read a histos.saf file and get plots out of it. I would like to get a Python code (potentially relying on matplotlib) allowing to do so. An exemplary histogram file can be found here.

This will be the first task request of this particle physics @ utopian-io project. Please do not forget to mention me in any post detailing such an implementation.

The deadline is Friday June 29th, 16:00 UTC.

THE EXERCISE

For the exercise of the day, we will move away from our previous analysis and start the study of a CMS search for dark matter. We will come back to the ATLAS analysis in the future.

As usual, one should avoid implementing every single line in the analysis and follow instead the instructions below. Please do not hesitate to shoot questions in the comments.

Create a blank analysis from the MadAnalysis 5 main folder,

./bin/ma5 -E test_cms test_cms

We will modify the filetest_cms/Build/SampleAnalyzer/User/Analyzer/test_cms.cppbelow.In the

Initializemethod of this file, please declare a dummy signal region (see above),

Manager()->AddRegionSelection("dummy");In the

Executemethod of this file, initialize the weights (in the aim of getting histograms),

Manager()->InitializeForNewEvent(event.mc()->weight());Go to section 3 of the CMS analysis note, on page 4. Create a vector with signal jets as described in the middle of the second paragraph. This is similar to anything we have done so far, as we only focus on transverse momentum and pseudorapidity constraints here.

Go the third paragraph on page 3 and create a vector containing all (isolated) signal photons. There is no transverse momentum requirement on the photons, but a pseudorapidity one (see in the second paragraph of section 3 on page 4). Isolation has to be implemented as described above, the thresholds being given in the medium barrel information of table 3 in this research paper. Whilst the σηη criterion can be ignored, the requirement on H/E can be implemented thanks to the

HEoverEE()method of theRecPhotonFormatclass.Implement four histograms of 20 bins each. Two of them will be related to the transverse momentum of the first photon and of the first jet, both covering a pT range from 0 to 1000 GeV. The last two histograms will be related to the pseudorapidity spectra of the same objects, covering the [-1.5,1.5] range for the first photon and the [-5,5] range for the first jet.

Apply the code on the previous sample of 10 simulated LHC collisions (see here).

Don’t hesitate to write a post presenting and detailing your code. Note that posts tagged with utopian-io tags as the first two tags (and steemstem as a third) will be reviewed independently by steemstem and utopian-io. Posts that do not include the utopian-io tags as the first two tags will not be reviewed by the utopian-io team.

The deadline is Friday June 29th, 16:00 UTC.

MORE INFORMATION ON THIS PROJECT

- The idea

- The project roadmap

- Step 1a: implementing electrons, muons and photons

- Step 1b: jets and object properties

- Step 1c: isolation and histograms (this post)

Participant posts (alphabetical order):

- @effofex: exercise 1b, madanalysis 5 on windows 10

- @irelandscape: journey through particle physics (1, 2, 2b, 3), exercise 1b, exercise 1a

- @mactro: exercise 1b, exercise 1a.

STEEMSTEM

SteemSTEM is a community-driven project that now runs on Steem for more than 1.5 year. We seek to build a community of science lovers and to make the Steem blockchain a better place for Science Technology Engineering and Mathematics (STEM).

More information can be found on the @steemstem blog, on our discord server and in our last project report. Please also have a look on this post for what concerns the building of our community.

This caught my attention. I spent some of the spring semester writing custom R code to create histograms (actually ended up with probability distro curves, but histos were created along the way) from the raw per-cell data from some fluid dynamics experiments. For example, plotting the volumetric distribution of the kinetic energy dissipation rate throughout the reactor, rather than relying on an average value.

edit: Forgot to ask my actual question. Do you know wow utopian deals with multiple people implementing answers to task requests?

edit: Forgot to ask my actual question. Do you know wow utopian deals with multiple people implementing answers to task requests?

A few more questions:

To make sure I understand the data format:

For the following data:

The value of bins 1, 2, and 3 are 1, 3x10-4, and 5x10-4, respectively. Further, we don't need to show this split in the histogram. Correct?

Here are answers to your questions. Do not hesitate to come back to me if necessary.

All histograms are concatenated within a given SAF file.

The title is given between quotes, right after the description. The axis names are not passed. You can leave them blank. However, it may be nice to allow the user to give them.

It would be nice to leave this option to the user.

Both can be done quite easily.

Once again, both. The matplotlib file is important to allow the user to tune it to the layout he/she wants.

The total number to put in a bin corresponds to the first column minus the second one.

Thanks for the clarifications. I think I understand it enough to get an implementation coded up.

I will double check the code anyways :)

Hi, sorry if this is strange question but I don't understand the resulting bin data values in the SAF file.

I thought that in the histogram the bin value should be whole numbers representing the number of particles matching a particular value range.

Am I wrong about this?

Apologies for a very late answer... Complicated times for me at the moment...

It depends what you want to plot.

If you are interested in the transverse momentum distribution of all jets, then each jet of the event will contribute to the histogram (to potentially different bins). On the other hand, you may be interested in the transverse momentum distribution of the leading (or first jet) of the events. Then, only the first jet matters and will yield an entry in the histogram.

We can also be interested in plotting global variables, like the total activity in the events, which do not depend on the actual number of particle but instead of the sum of their energy.

Does it clarify?

Thanks, yes I think that clarifies it.

I will submit corrections shortly, probably today.

Cheers!

Cool! Good luck with this!

I've got some good news. This is still janky as heck, but I've got a python tool which parses the saf file, converts it into a pandas dataframe, and allows me to spit out some histograms.

The janky part is that the histograms are still essentially a proof-of-pipeline, matplotlib has turned out to be very different (not bad, just different) from the plotting language (ggplot2) I am used to.

This image will get better between now and Friday. My plan to is push my local repo to a fork of ma5 on my github account, then send you a pull request on Friday. Based on how that goes and your feedback, then write up a post.

The downside is that I probably won't have time for 1c, but I can deal with that. It's been very informative using pandas and matplotlib on non-toy data.

Super! This looks very nice. Just two comments:

I can give one more week for 1c, as I still haven't found the time to start writing 1d ^^

I can certainly make it multiple figures, i was actually pondering that over morning coffee.

The code exists as a standalone thing, but utopian would like your github ma5 to be the main repo, so I am developing it in a new directory under tools. This also helps with the criterion that a task is accepted by an author by incorporating a pull request.

I am not sure to understand the utopian request. This addon was supposed to stay independent of madanalysis, as it is only useful once the working directory has been created. I will see with utopian.

One thing that' been consistently an issue is that the utopian workflow is based on largely monolithic projects. Frameworks which generate code or tiny tools complicate the idea of what the main repo should be.

Actually, I insist on the python option. The reason is that the code is meant to be used by physicists, and physicists do not use R in general (python, c++ and Fortran are the mostly spread programming languages).

I have no idea. I suppose all will be rewarded if they are nicely done. On my side, everything that works well will be advertised on the MadAnalysis 5 website. The best module may even be merged with the main branch of madanalysis 5, provided the author agrees.

No worries on the Python requirement, happy to code in that environment.

Cool! :)

Another nice exercise!

First question: do we need to select baseline jets and employ overlap removal from previous exercise before looking for signal jets?

There is only one type if jets in this analysis. Having two (or sometimes more than two) different jet definitions is not systematics.

Here, only the removal between jets and electrons is necessary, but this is connected to a feature of madanalysis. This can however be done through

MyJets = PHYSICS->Isol->JetCleaning(MyJets, MyElectrons, 0.2);Haven't found the time to do the exercise, but I'm in for the SAF plotting tool task request.

Thanks a lot for contributing. I am testing it right now! :)

Hi @lemouth, I have a few questions regarding the exercise:

With regards to step 4 in the exercise, the paper states:

Are we to ignore this criteria since you wrote:

In table 3 of the photon identification paper, the criteria looks like:

Am I right to think that the pTgamma value is to be retrieved from:

photon.momentum().Gamma() ?

Do we need to care about the hadronic fractionfh?

Thanks!

Concerning the opening angle stuff, we will ignore it entirely, as the full selection strategy in fact. This requires a post on its own as special methods are in order to extract cutflows and things like that. For the moment, we solely focus on object definitions.

This is just an abbrevation for the pt of the photon. The symbol of the photon is the Greek letter gamma. Therefore, one needs to use

myphoton->pt()No. We can't do that with our simulation setup (that approximates the real experimental apparatus).

Ok thanks for the clarification.

You are welcome. By the way, have you noticed the tag requirements? :)

I was kind of wondering about that.

You wrote:

I wasn't too sure about what you meant by a second tag to use for utopian-io, so I went with:

utopian-io utopian steemstem

Is this correct?

Nope, the second tag must consists in one of the utopian-specific subtags. Here, please use "blog".

OK, I wasn't aware of that.

Thanks for the clarification.

OK, I wasn't

Aware of that. Thanks for the

Clarification.

- irelandscape

I'm a bot. I detect haiku.

This comment made me learn what a haiku is... pfff...

I used to think, naively, that dark matter only exists in theory. But over-time, my thoughts have been re-aligned aright.

Well, even though something is theorized, it doesn't also mean it is non-existent

The dark matter hypothesis works extremely well to explain a plethora of cosmological observations. However, dark matter itself is still elusive from the direct detection standpoint. For this reason (i.e. no direct observation), it can still be considered as theoretical. There are other options, like alternative gravities, that work too. Not as well as dark matter, but they are not excluded (for now).

Alternative gravity? I've never heard of it. Where does it apply to? Let me guess; at the sub-atomic level?

Okay, something tells me I'm wrong right now.

No: at the other level, the one of the galaxies. Please check this for instance.

Thanks for the link. Now I have the privilege of reading the post again. When I first saw it 8months ago, I didn't grab much from it, but now my knowledge is enhanced.

Thanks again sir

This is with a lot of repetitions that one becomes expert :D

I absolutely agree with you

Great. Another exciting exercise.

From my first read the instructions appear clear enough.

Happy to contribute to any effort in searching for proof of existence of dark matter. 😀

Is this a hidden comment to request for more dark examples? Noted for the future :D

I am looking forward to your answer :)

Wow this is really interesting "dark matter " am really looking forward to your final proof.

Do you mind to be slightly more specific? My proof for what exactly?

competition ?

http://nautil.us//blog/evidence-found-for-a-new-fundamental-particle

No. The right word is complementarity (neutrino physics). However, the code I am developing is targeting LHC physics only. Nothing less, nothing more :)

the right word in the right place isnt always my strong point as you have probably noticed by now ... lack of time on schoolbenches ... but i still feel that doesnt hold me back from understanding, i sometimes feel i get this unique angle being the cat on the roof looking down at the world, wondering what the hell they are all chasing, but it DOES get in the way of other people understanding what i'm trying to communicate ofcourse

sometimes it does ... especially in exact fields like yours

or 'simple' math lol ... nothing simple about math but math is a language right ? not really a science as such, its a language to express observations in science more like

well then ... see you on the next page , i think i actually got through the replies, a script to filter out replies-only , (leaving out bots and ads) and only those that havent been replied to seems like a very good idea to be a bit more time efficient ( ireally need computers to do that for me) im gonna put that on the to-do list

a pleasure,

as always, sir :)

^^

Hi. Thank you for your contribution, it's amazing to find and support this type of projects here. Despite high academic content, the post is very understandable and well written. Keep up the good work.

Your contribution has been evaluated according to Utopian policies and guidelines, as well as a predefined set of questions pertaining to the category.

To view those questions and the relevant answers related to your post, click here.

Need help? Write a ticket on https://support.utopian.io/.

Chat with us on Discord.

[utopian-moderator]

Thanks a lot for the nice report :)

Hey @lemouth

Thanks for contributing on Utopian.

We’re already looking forward to your next contribution!

Contributing on Utopian

Learn how to contribute on our website or by watching this tutorial on Youtube.

Want to chat? Join us on Discord https://discord.gg/h52nFrV.

Vote for Utopian Witness!