Better learning through spoken words while reading subtitles

Making #BeyondBitcoin more accessible for newbies

And facilitating accelerated learning.

This one is for you, @officialfuzzy. I consider it my contribution to the Whaletank, which aired yesterday, Friday 18th August 2017.

Introduction

I told @officialfuzzy that I found it hard to keep up as someone who is quite new here. And that's even though I consider myself quite a pro: I've developed for iOS, and I know my bash-fu and those crazy regular expressions. I've done graphics and User eXperience, which is maybe what I'm best at. I've composed and produced music. I've seen weird systems in my job on Air Traffic Control software. I loved BeOS, but I used Windows and Linux for work, with fantastic window managers and hefty debates about them. Focus follows mouse! I've seen a lot! But since 2009 I've been on Mac OS, and that's where I've had the most fun and gotten the most work done.

So, having seen so many systems and still not quite being able to follow the specifics of the #BeyondBitcoin show, I figured it could be a good idea to transcribe what was said and possibly summarize the talks.

Yeah, but the Whaletank turned out to last almost the whole day, f***!

Cue the automation, YouTube vs. Watson vs. Google Docs

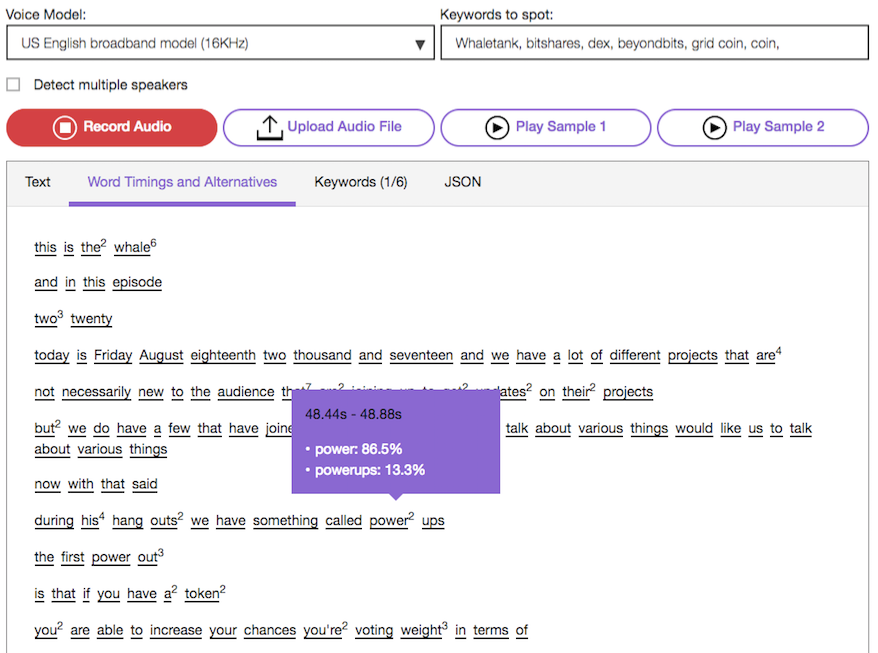

I've been experimenting with some speech-to-text tools today. Right now I'm using IBM's Watson; yes, this text was spoken in. I've also used Google Docs voice input, but Google doesn't produce sentences as nicely as Watson does. You can try it live in Chrome.

Watson

In the backend, YouTube’s sound captioning system is based on a Deep Neural Network model the team trained on a set of weakly labeled data. Whenever a new video is now uploaded to YouTube, the new system runs and tries to identify these sounds. For those of you who want to know more about how the team achieved this (and how it used a modified Viterbi algorithm), Google’s own blog post provides more details.

--- Techcrunch

Memo to Google and YouTube — Don’t rest on your laurels just yet

- only has a single speaker, who speaks clearly and in a slow, consistent, monotonic and deliberate manner

- has good quality audio and it doesn’t have any background noise or sound effects and / or music

...

Most YouTube videos do not have these elements.

Rather they tend to have multiple speakers, rapid fire and often unintelligible dialogue, sound effects and background music and the list goes on and on.

In these circumstances, Google and YouTube’s auto-generated craptions will simply not be able to get anywhere near the magic 95% level that we have demonstrated above.

--- Medium

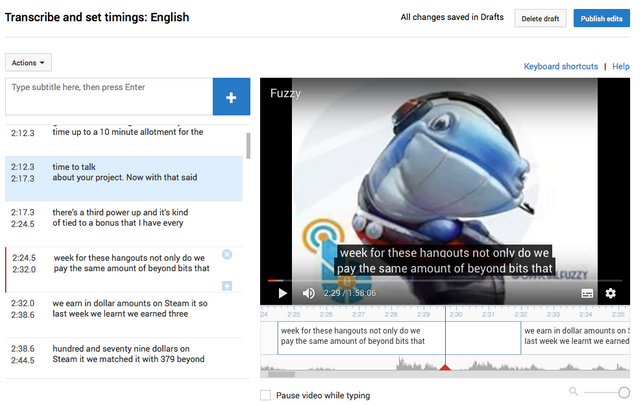

YouTube - editing while listening

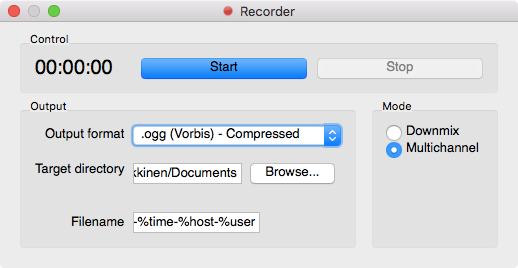

But by far the best and easiest may be YouTube's automatic captioning system. It's quite easy to upload the Whaletank audio, which is hosted on SoundCloud, but you can also make your own recording in Mumble. Mumble even lets you record each speaker individually, I guess so you can match up volume levels afterwards. In Mumble-speak this is called multitrack or multichannel recording.

YouTube's captions are also very easy to edit, and the interface is really well designed. The subtitles / captions can be edited while the video is playing. But make sure you are listening, not reading! Sometimes the visual system takes precedence over the auditory one, and you think you are hearing what you are actually reading. Not good! Still, it's nice that you can type while the audio plays along, and it produced a meaningful piece of text.

The weird way to the finish-line

Before I settled on YouTube, I tried Google Docs voice input to test its speech-to-text quality. For that I needed Google to hear fuzzy's lovely voice. This wasn't a real success, because playing audio over the speakers and back into the mic is clumsy, and any background noise gets transcribed too.

UPDATE: It seems Google now requires you to be using the Chrome browser (on PC or Mac) in order to enable Google Now. If you're having any issues, make sure you're using Chrome!

Routing audio and much cursing

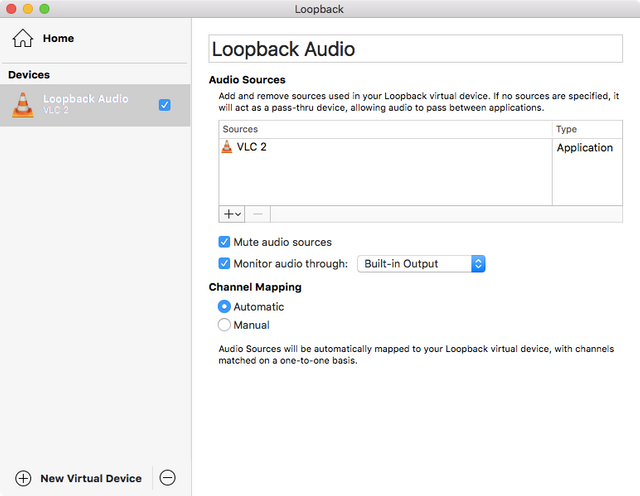

The best thing you can do with pre-recorded audio is to re-route it internally so that Google can hear it directly, instead of sending it through your speakers and back into your microphone. Speech recognition will be much better that way.

But getting the software to work can be painful. I ended up cracking Loopback because Soundflower was buggy and I couldn't get a newer version to compile. Also, Xcode takes up so much disk space... Worried that I might infect my machine with cracks and keygens, I'm trying to find a Mac alternative to Sandboxie, which I highly recommend for such experiments on Windows.

Notice the external microphone from my in-ear headphones

Loopback to reroute pre-recorded audio to a virtual microphone input

Virtual Audio Cable (Windows)

Video

Alternatives for Mac

A post on Quora was also very helpful, listing some alternatives for speech recognition.

Uploading YouTube nightmares - more cursing!

Uploading audio to YouTube can be a nightmare. Either the file isn't in the right format (ogg), or it's too big (I'm looking at you, tunesTube, and your 25 MB limit!), or the software packages are expensive.

FFMPEG to the rescue

Having downloaded quite a few videos from YouTube and other video sites with the excellent youtube-dl command-line application, which uses FFmpeg for its conversions, I wondered whether FFmpeg could also convert audio into a video for uploading to YouTube. It could!

ffmpeg -loop 1 -r 2 -i image.jpg -i input.mp3 -vf scale=-2:380 -c:v libx264 -preset slow -tune stillimage -crf 18 -c:a copy -shortest -pix_fmt yuv420p -threads 0 output.mkv

As you can see, command-line options can be pretty incomprehensible, so I Google them rather than digging through manuals or grepping help output. In the end I found the best command line for the job: it takes an image (replace image.jpg with the name or path of your image; likewise for the audio file input.mp3) and produces output.mkv, which you can upload directly to YouTube.

Credit: http://www.makeuseof.com/tag/3-ways-add-audio-podcast-youtube/
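For repeated uploads, the same command can be wrapped in a small Bash function so that only the file names change. This is a sketch, not the post's or the credited site's script: the function name still_image_video and the DRY_RUN switch (which only prints the command) are made up for this example. It uses scale=-2:380, which keeps the width proportional to the height and rounds it to an even number, since libx264 with yuv420p rejects odd frame sizes.

```shell
#!/usr/bin/env bash
# Sketch: the still-image + audio ffmpeg command wrapped in a function.
# still_image_video and DRY_RUN are hypothetical names invented for this example.
set -euo pipefail

still_image_video() {
  local image="$1" audio="$2" output="$3"
  local cmd=(
    ffmpeg
    -loop 1 -r 2 -i "$image"   # loop the single image as a 2 fps video input
    -i "$audio"                # second input: the hangout / podcast audio
    -vf scale=-2:380           # 380 px high; width follows the aspect ratio, rounded to even
    -c:v libx264 -preset slow -tune stillimage -crf 18
    -c:a copy                  # copy the audio stream without re-encoding
    -shortest                  # stop when the shortest input (the audio) ends
    -pix_fmt yuv420p           # widely compatible pixel format
    -threads 0                 # let ffmpeg choose the number of threads
    "$output"
  )
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s ' "${cmd[@]}"   # only print the command
    echo
  else
    "${cmd[@]}"
  fi
}
```

Usage, with hypothetical file names: still_image_video whaletank.jpg whaletank.mp3 whaletank.mkv, or prefix it with DRY_RUN=1 to check the command before running it.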

Different use cases

Watson and Google transcribe speech to text in real time, so a transcript could be published in near real time. I'm not sure whether YouTube Live can transcribe in real time too.

But imagine if we were hosting the #BeyondBitcoin Whaletank and you could catch up by reading what is being said. Even with a slight lag, you could jump into the conversation on Mumble and ask your questions! How cool is that?

Even better, you can fork their work on GitHub!

Things I didn't try

A paid service, as you might expect PopUp Archive has some distinct advantages over both the YouTube and the Watson-plus-Amara workflows. PopUp Archive will store audio files, can ingest multiple files at once from a variety of sources, supports multiple users on an account, includes robust transcript editing tools, differentiates speakers, adds punctuation, has very precise timestamps, and more. In testing the options for Tuts+ videos, we've found that PopUp Archives transcriptions are more accurate than competing services. In short, you get what you pay for.

I haven't bothered to try it out, but given sufficient funds I might. It could be great for making Coursera-type courses where you watch a video and simultaneously read along, with the currently spoken text highlighted in the transcript! Talking beyond cool-bits!

Thanks to Photography Tutsplus

I've uploaded the whole Whaletank, and I'll post it to @Dtube or elsewhere later, maybe after editing the automatic subtitles. Let's create something great!

More sources:

IBM Watson vs. YouTube: so far so good

Not so good anymore: Watson can't keep up and is confused about who is who

@originalworks


Great initiative!

Thanks! The next thing to try is an interactive transcript, so you can read along with the Whaletank or any other podcast or video and quickly jump to the relevant sections. A TL;DR for people like me who want to get to the point quickly ;)
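As a first step in that direction, one link per caption can already act as a crude interactive transcript. The sketch below assumes YouTube's .sbv caption layout (a h:mm:ss.mmm,h:mm:ss.mmm line, the caption text, then a blank line) and the youtu.be ?t=SECONDS start-time parameter; sbv_to_links and VIDEO_ID are placeholders, not anything from the post.

```shell
#!/usr/bin/env bash
# Sketch: turn a YouTube .sbv caption file into "jump here" links, one per caption.
# Assumed SBV layout: "h:mm:ss.mmm,h:mm:ss.mmm", caption text line(s), blank line.
set -euo pipefail

sbv_to_links() {
  local video_id="$1"   # hypothetical placeholder for the YouTube video ID
  awk -v id="$video_id" '
    # Print the finished caption with a link to its start time, then reset.
    function emit() {
      if (text != "") printf "https://youtu.be/%s?t=%d %s\n", id, secs, text
      text = ""
    }
    # Timestamp line: remember the cue start time in whole seconds.
    /^[0-9]+:[0-9][0-9]:[0-9][0-9]\.[0-9][0-9][0-9],/ {
      emit()
      split($0, t, "[,.:]")
      secs = t[1] * 3600 + t[2] * 60 + t[3]
      next
    }
    # Non-empty line: caption text, joined with spaces.
    NF { text = (text == "" ? $0 : text " " $0); next }
    # Blank line: end of the cue.
    { emit() }
    END { emit() }
  '
}
```

For example, sbv_to_links VIDEO_ID < captions.sbv > links.txt (both names hypothetical) gives a list you could skim and click to jump into the recording.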

Great article @nutela! Thanks for doing all of that research and testing of each of the different options :)

I also did a little googling of that last option, PopUp Archive, and noticed that they also have a GitHub account:

https://github.com/popuparchive

Hey, so you found one for PopUp Archive too! So now, with IBM Watson and PopUp Archive, we have two speech-to-text projects whose source code is on GitHub!

Regarding our conversation yesterday (this morning for me): I realized these projects inspired me to do subtitles for films too. Not only transcripts for learning, with the spoken text highlighted like Coursera does, but also subtitles in other languages, so non-native speakers can learn languages and/or cultures this way. I love Japanese anime; in fact, I hand-pick films and show them to my daughters, who like their tenderness and sophistication. Now that we have @Dtube, I will also look into English subs for Czech films, but maybe they'll have to be hardcoded (i.e. burned into the video) for now.
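Hardcoding subtitles can be done with ffmpeg's subtitles filter, which renders the subtitle text onto every video frame. A minimal sketch, assuming an ffmpeg build with libass and a plain file name (colons, commas or quotes in the path would need escaping inside the filter string); burn_subs and DRY_RUN are invented for this example.

```shell
#!/usr/bin/env bash
# Sketch: burn a subtitle file into a video with ffmpeg's subtitles filter.
# Assumes ffmpeg was built with libass; burn_subs and DRY_RUN are hypothetical names.
set -euo pipefail

burn_subs() {
  local video="$1" subs="$2" output="$3"
  local cmd=(
    ffmpeg -i "$video"
    -vf "subtitles=$subs"   # draw the subtitles onto the frames (video is re-encoded)
    -c:a copy               # copy the audio stream unchanged
    "$output"
  )
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s ' "${cmd[@]}"   # only print the command
    echo
  else
    "${cmd[@]}"
  fi
}
```

Because the text becomes part of the picture, the result plays with subtitles anywhere, at the cost of viewers no longer being able to switch them off.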

The Whaleshares / Whaletank talk. I've edited the subs up to the Gridcoin Q&A.


@originalworks

Whew, what an excellent post!!

I'd really love to see this tech used for the Gridcoin Hangouts.

We definitely could use audio transcription for our hangouts, as we discuss very relevant subjects and important information that needs to be disseminated to everyone.

Because we are fortunate enough to hear what is being said, we don't think of those who need audio transcription as the norm, and sadly we forget those less fortunate.

Thanks for doing all this work, I for one will be "bookmarking" this post for future reference!!

https://steemit.com/gridcoin/@cm-steem/gridcoin-community-hangout-038

You'd do better following the #BeyondBitcoin hangouts on @officialfuzzy's blog.

So actually I used this one:

sed 's/\. /\.\'$'\n/g'

to add a newline after each period followed by a space. But first I had to delete the timestamps from the subtitle file:

sed '/[0-3]:/d'

and the empty lines:

sed '/^$/d'

Another guide: The Basics of Using the Sed Stream Editor to Manipulate Text in Linux. The basics, LOL.
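The three sed steps above can be chained into one pipeline. This is a sketch, assuming YouTube's .sbv caption format with timestamp lines such as 0:00:01.319,0:00:04.350. It uses a stricter timestamp pattern than /[0-3]:/ so caption text like "at 3:30" is kept, strips Windows line endings just in case, and (an addition beyond the commands above) joins the caption lines with tr before splitting at ". ", so each sentence ends up on a single line. clean_subs is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: YouTube .sbv captions -> plain text, one sentence per line.
# Assumes timestamp lines like "0:00:01.319,0:00:04.350"; clean_subs is a hypothetical name.
set -euo pipefail

clean_subs() {
  # 0. Drop carriage returns in case the file has Windows line endings.
  tr -d '\r' |
    # 1. Delete timestamp lines (stricter than '/[0-3]:/d', so "at 3:30" survives).
    # 2. Delete empty lines, as '/^$/d' does.
    sed -E -e '/^[0-9]+:[0-9]{2}:[0-9]{2}\.[0-9]{3},[0-9]+:[0-9]{2}:[0-9]{2}\.[0-9]{3}$/d' \
      -e '/^$/d' |
    # 3. Join the remaining caption lines into one long line.
    tr '\n' ' ' |
    # 4. Break after every ". "; a backslash followed by a real newline inserts
    #    a newline in the replacement in both GNU and BSD sed.
    sed 's/\. /.\
/g'
}
```

Usage, with hypothetical file names: clean_subs < captions.sbv > transcript.txt.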

I'm editing the subtitle output from YouTube with sed. Works pretty well so far:

sed 's/regexp/\'$'\n/g'

Welcome everyone to another beyond

Bitcoin hangout.

This is the whale tank

hangout and this is episode 220.

Today is

Friday August 18 2017 and we have a lot

of different projects that are not

necessarily new to the audience that are

joining up, to give updates on their

projects but we do have a few that have

joined up today that would like to talk

about various things or would like us to

talk about various things.

Now with that

said during these hangouts we have

something called power-ups.

Advanced Bash-Scripting Guide: Appendix C. A Sed and Awk Micro-Primer

This post was resteemed by @resteembot!

Good Luck!

Learn more about the @resteembot project in the introduction post.

Thanks @resteembot! I love your service! Everybody else, please resteem if you like my work! Investigating and writing in-depth articles is real work, actually!

I am glad to be appreciated. Thanks.

I saw that you requested the resteem of this post twice.

Although the comments say that both times were successful, it was only because the payment was valid. It is only possible to resteem once.

To avoid losing money and to avoid repeating comments on your posts, be more careful and only send once.

Keep up the good articles!

Thanks, I thought there was just a time limit for resteeming? Thanks for a great service :)