If Beethoven Were Alive Today, Could He Hear Again?

Yes indeed: if Beethoven, the musical prodigy who eventually became deaf, were among us today, we could give him back his hearing. Not with perfect pitch detection, but he could understand human speech and maybe even enjoy some music, especially music with deep, slow beats.

One of the fascinating technologies with which we already equip, or rather heal, the human body using an electronic, computerized instrument is the cochlear implant. The cochlear implant can provide hearing to a completely (yes, completely) deaf person, whether the deafness is congenital or acquired. Even though this hearing is not as natural as normal hearing, the technology enables its users to live a normal life, become mothers and fathers, complete graduate school, and enjoy the company of other human beings. To my surprise, very few people outside the biomedical engineering community know about this technology, and they are usually surprised when I tell them that I did my master’s research in this area.

So how does this technology work? I will tell you below.

First: super-brief 101 on sounds and hearing

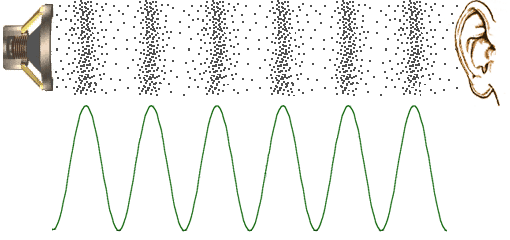

Audible sound is a periodic change in the air pressure around us. We cannot feel the pressure of the air itself because we are accustomed to the atmospheric value of about 101.325 kPa. However, there are small fluctuations around this value that our other senses do not detect, but our hearing does. If you measure the air pressure at a given point in 3D space and plot it over time, and you see a sine wave with a frequency of 440 Hz (i.e. 440 cycles per second), then you hear the standard musical pitch (A440, or A4, or Stuttgart pitch, the musical note A above middle C). In this case we talk about a pure tone, a single frequency. Pure tones can be mixed together (different pure tones summed up), and they can create any kind of graph that you can imagine. Those are the sounds that you hear every day. That pure tone did not change over time, but in real life sounds change continuously. For example, a rising-pitch tone consists of sine waves with higher and higher frequencies from moment to moment.

Figure 1 | Schematic representation of a pure tone. Source: http://www.mediacollege.com/audio/01/sound-waves.html
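To make the idea of pure tones, mixtures, and a rising pitch concrete, here is a minimal Python sketch. The sample rate, the function names, and the 660 Hz second tone are assumptions chosen for illustration; nothing here comes from a real audio library.

```python
import math

SAMPLE_RATE = 8000  # samples per second (an assumed value for this sketch)

def pure_tone(freq_hz, duration_s, amplitude=1.0):
    """Samples of a sine wave with a single frequency."""
    n = round(SAMPLE_RATE * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n)]

def mix(*tones):
    """Sum several equally long tones sample by sample."""
    return [sum(samples) for samples in zip(*tones)]

def rising_tone(start_hz, end_hz, duration_s):
    """A tone whose frequency climbs linearly from start_hz towards end_hz."""
    n = round(SAMPLE_RATE * duration_s)
    phase = 0.0
    out = []
    for i in range(n):
        freq = start_hz + (end_hz - start_hz) * i / n
        phase += 2 * math.pi * freq / SAMPLE_RATE  # advance by the current frequency
        out.append(math.sin(phase))
    return out

a4 = pure_tone(440, 0.01)                                      # 10 ms of A440
chord = mix(pure_tone(440, 0.01), pure_tone(660, 0.01, 0.5))   # two tones summed
```

Plotting `a4` would give the clean sine wave of Figure 1, while `chord` already looks like a more irregular curve even though it is built from only two pure tones.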

A short but important mathematical detour: Joseph Fourier was the one to show that every function that satisfies a few conditions (which are typically satisfied by the functions describing our everyday life and mid-sized physics) can be decomposed into a finite or infinite number of sine and cosine waves with different frequencies. So each sound wave at a given moment can be represented by a plot that tells you how strongly a given frequency (e.g. 440 Hz) is present in the sound, if at all. A pure tone, as discussed above, looks like a single thin column on such a plot (“infinitely thin”, in non-rigorous terms).
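The decomposition can be tried out with a naive discrete Fourier transform. This is only a sketch under assumed values: the 1000 Hz sample rate, the 100-sample window, and the 220 Hz companion tone are picked so that every tone fits a whole number of cycles into the window, which keeps the columns "thin".

```python
import cmath
import math

SAMPLE_RATE = 1000            # samples per second (assumed)
N = 100                       # window length, so each bin is 10 Hz wide
BIN_HZ = SAMPLE_RATE / N

def dft_magnitudes(samples):
    """Naive DFT: strength of each frequency bin from 0 Hz up to half the sample rate."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        mags.append(abs(acc) * 2 / n)  # scaled so a sine of amplitude A shows up as A
    return mags

# A 440 Hz tone at full strength mixed with a 220 Hz tone at half strength.
signal = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE)
          + 0.5 * math.sin(2 * math.pi * 220 * t / SAMPLE_RATE)
          for t in range(N)]

mags = dft_magnitudes(signal)
peaks = [k * BIN_HZ for k, m in enumerate(mags) if m > 0.1]
print(peaks)  # the frequencies that are present in the mixture
```

Only the bins at 220 Hz and 440 Hz stand out; every other bin stays at essentially zero.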

Hearing

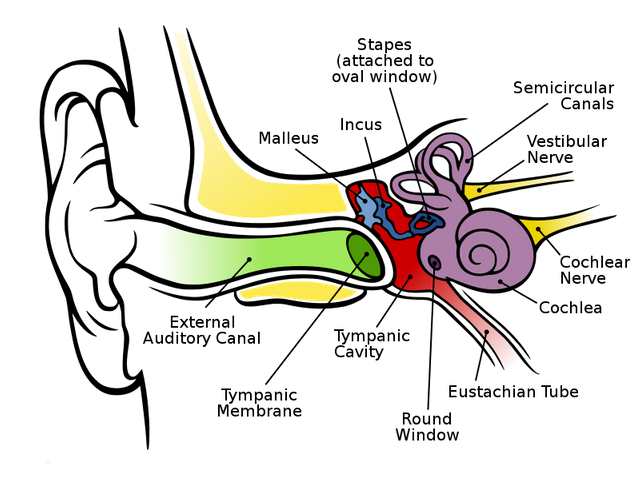

Now, hearing is your ability to sense these waves in the air and make sense of them. How? As already mentioned, the rest of your body doesn’t pick up these relatively small variations in air pressure. Your hearing does because there are signal-strengthening mechanisms, implemented by the tiniest bones in your body, which conduct the mechanical vibrations of the eardrum to the window of the cochlea. That part is mechanics, an old-school story. The new-school and more interesting part comes now, with the cochlea. The cochlea is like a snail shell, a coiled tunnel. Inside it there is a liquid and, in the middle, a membrane. Along the membrane sit small, very sensitive sensory cells, which connect to the endings of your auditory nerve; the nerve links the cochlea, in the inner ear, to your brainstem. The tiny bones “shake” the window, the entrance of the cochlea, the liquid takes up the vibration, and the sensory cells react to it, sending signals to your brain.

Figure 2 | Schematic representation of the human hearing system. Source: Perception Space—The Final Frontier, A PLoS Biology Vol. 3, No. 4, e137 doi:10.1371/journal.pbio.0030137

The vibration is picked up, cool, but how do we separate the different sounds at different moments? Here comes the important realisation of Joseph Fourier. Evolution “invented” the Fourier transform before Fourier did, and your hearing implements it. Your ear can decompose every sound in the 20 Hz to 20 kHz range into its constituent pure frequencies: it distinguishes the different pure tones that make up a given raw sound.

How?

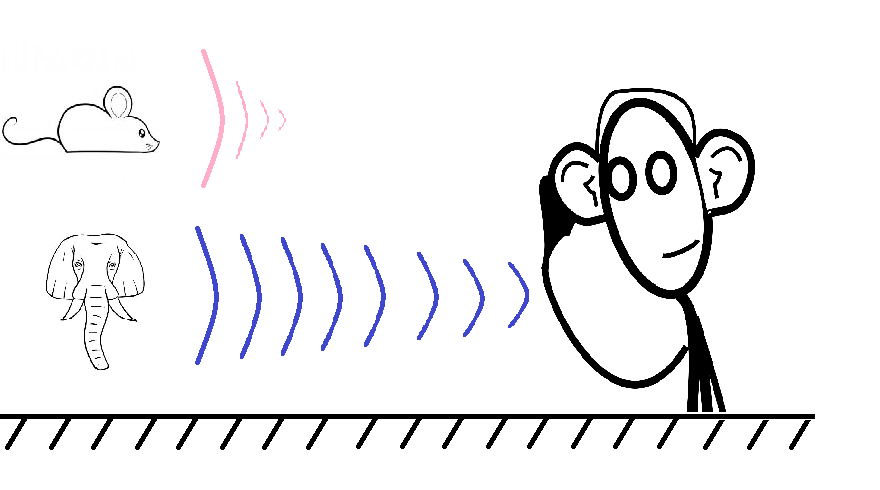

There is a simple but important physical law that we have not covered so far. I will just state it now and explain it briefly, and I plan to elaborate on it in another post. Fact: in any medium where there is a loss of power proportional to the energy of the system (in other words, friction), lower frequencies propagate further than higher frequencies. In simpler terms: lower frequencies, that is deeper sounds, travel further in the air than higher-frequency, that is higher-pitched, sounds.

Figure 3 | Illustration of frequency-dependent travel distance. Even if the sounds of the mouse and the elephant had the same amplitude (i.e. loudness), the sound of the elephant would travel further because it has a lower frequency and thus loses less energy as it travels through the air. Why this happens and what interesting implications it has, even in human fashion (in my opinion), will be covered in an upcoming post. Source: own quick illustration.
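The mouse-and-elephant picture can be turned into a toy calculation. The sketch below assumes an exponential decay whose rate grows with the square of the frequency, which is the classical textbook form for absorption in a fluid; the constant is hypothetical, not a measured value, and the ordinary spreading of sound with distance is ignored.

```python
import math

# Hypothetical constant (per metre per Hz^2), chosen only for illustration.
ABSORPTION_CONSTANT = 1e-10

def absorption_coefficient(freq_hz):
    """Assumed model: losses grow with the square of the frequency."""
    return ABSORPTION_CONSTANT * freq_hz ** 2

def amplitude_at(distance_m, freq_hz, start_amplitude=1.0):
    """Amplitude left after travelling distance_m, counting absorption only."""
    return start_amplitude * math.exp(-absorption_coefficient(freq_hz) * distance_m)

def reach(freq_hz, fraction=0.5):
    """Distance at which the amplitude has dropped to the given fraction."""
    return math.log(1 / fraction) / absorption_coefficient(freq_hz)

elephant_reach = reach(100)    # a deep rumble
mouse_reach = reach(10000)     # a high squeak
```

Under this assumed model, a tone with 100 times the frequency reaches only 1/10,000 of the distance before losing the same share of its amplitude, which is the qualitative point of Figure 3.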

This law is widely applicable and deserves its own post, but let’s just apply it now. In your cochlea there is a watery liquid, and in it there is obviously some friction among the molecules, so higher-frequency sounds travel a shorter distance: the higher the frequency, the shorter the distance travelled. (This is a simplified picture; the membrane itself also changes in stiffness along its length, which helps sort the frequencies by position.) So the auditory nerve fibers that wire the far end of the cochlea (furthest from the window where the vibration enters) sense the deepest sounds. Your brain knows this, and it knows that the signals from those nerves should be interpreted as “deep sounds”. I would say evolution is a genius engineer.
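The position-to-pitch mapping can be written as a small function. The sketch below uses an empirical fit usually attributed to Greenwood, with the constants commonly quoted for the human cochlea; treat both the formula and the constants as assumptions brought in for illustration, not as part of the explanation above.

```python
import math

# Commonly quoted human constants for Greenwood's place-frequency fit (assumed here).
A_HZ = 165.4
SLOPE = 2.1
K = 0.88

def place_to_frequency(x):
    """Frequency sensed at position x along the cochlea.

    x is a fraction of the cochlea's length: 0.0 is the far end (deep sounds),
    1.0 is the window where the vibration enters (high sounds).
    """
    return A_HZ * (10 ** (SLOPE * x) - K)

def frequency_to_place(freq_hz):
    """Inverse mapping: where along the cochlea a frequency is sensed."""
    return math.log10(freq_hz / A_HZ + K) / SLOPE

position_of_a4 = frequency_to_place(440.0)  # where A440 lands, as a fraction of the length
```

With these constants the two ends of the map come out near 20 Hz and 20 kHz, matching the range of human hearing mentioned earlier.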

Finally: cochlear implants

All this is great because it means that hearing is a 1-dimensional mapping of sounds onto a body part. If a deaf person has healthy, or at least partially functional, auditory nerves, then we can provide them with a kind of electrical hearing. We take a microphone to pick up the sounds in the environment. We process these sounds with a microchip that performs a Fourier transformation, that is, it calculates which sine and cosine frequencies build up the sound at a given moment, which pure tones constitute it. Then we insert an electrode into the person’s cochlea, which requires a surgical procedure. Finally, we stimulate that long electrode at the corresponding position, according to what the microphone heard: a high-pitched sound excites the beginning of the cochlea, and a deeper sound excites the end of the cochlea.

Figure 4 | Schematic representation of a cochlear implant. Source: https://en.wikipedia.org/wiki/Cochlear_implant
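The microphone-to-electrode chain described above can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the 8 contacts, the 200 to 4000 Hz range, the logarithmic band spacing, and the 20 ms frame. Real devices use their own, more elaborate processing.

```python
import cmath
import math

SAMPLE_RATE = 8000                 # samples per second (assumed)
FRAME_LEN = 160                    # one 20 ms frame, so each bin is 50 Hz wide
NUM_ELECTRODES = 8                 # hypothetical number of contacts
LOW_HZ, HIGH_HZ = 200.0, 4000.0    # hypothetical analysed range

def tone(freq_hz, n=FRAME_LEN):
    """Stand-in for what the microphone picks up: one frame of a pure tone."""
    return [math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE) for t in range(n)]

def band_edges():
    """Logarithmically spaced band edges, one band per electrode contact."""
    ratio = HIGH_HZ / LOW_HZ
    return [LOW_HZ * ratio ** (i / NUM_ELECTRODES) for i in range(NUM_ELECTRODES + 1)]

def spectrum(frame):
    """Naive Fourier transform of one frame: magnitude per frequency bin."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2)]

def electrode_levels(frame):
    """Energy per contact; index 0 is the deepest contact (lowest band)."""
    n = len(frame)
    edges = band_edges()
    levels = [0.0] * NUM_ELECTRODES
    for k, mag in enumerate(spectrum(frame)):
        freq = k * SAMPLE_RATE / n
        for e in range(NUM_ELECTRODES):
            if edges[e] <= freq < edges[e + 1]:
                levels[e] += mag ** 2
    return levels

def strongest_electrode(frame):
    """The contact that would be stimulated most strongly for this frame."""
    levels = electrode_levels(frame)
    return levels.index(max(levels))

deep = strongest_electrode(tone(250))    # lands on the deepest contact
high = strongest_electrode(tone(3000))   # lands on the contact nearest the window
```

A deep tone drives the contact at the far end of the cochlea and a high tone drives the one near the entrance, mirroring the natural layout of the ear.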

This technology has been around for 30-40 years and has amazing outcomes. Personally, I have talked with many cochlear implant users, and they can easily understand my accented English. To see what a positive emotional effect it has on people, you can check out compilation videos in which deaf people hear for the first time.

