Learning About Self-Interest and Cooperation from AI Matrix Game Social Dilemmas

Putting an artificial intelligence agent in charge of complex facets of human life means giving it specific goals to work towards. What happens when multiple artificial intelligence agents operate within a larger system, managing the economy, traffic and environmental challenges, each trying to meet its own specific goals?

If I have a goal to do something and you have a goal to do something else, and those goals are not compatible but clash with each other, that creates a problem. We can either compete, so that one of us ends up the winner and the other the loser, or we can cooperate for both our benefits.

This tension has been studied through the prisoner's dilemma, which shows how rational self-interest pursued through cooperation doesn't always win out over a less rational self-interest that ignores cooperation.
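To make the dilemma concrete, here is a minimal Python sketch of a one-shot prisoner's dilemma. The payoff values (5, 3, 1, 0) are the conventional textbook numbers, assumed here for illustration since the post gives none. The sketch shows that defecting is each player's best response whatever the other player does, even though mutual cooperation earns more in total than mutual defection.

```python
# Row player's payoff for (my_move, opponent_move). "C" = cooperate, "D" = defect.
# Assumed textbook values: temptation 5 > reward 3 > punishment 1 > sucker 0.
PAYOFF = {
    ("C", "C"): 3,  # reward for mutual cooperation
    ("C", "D"): 0,  # sucker's payoff: I cooperate, you defect
    ("D", "C"): 5,  # temptation: I defect, you cooperate
    ("D", "D"): 1,  # punishment for mutual defection
}


def best_response(opponent_move: str) -> str:
    """Return the move that maximizes my own payoff against a fixed opponent move."""
    return max(("C", "D"), key=lambda my_move: PAYOFF[(my_move, opponent_move)])


def joint_payoff(a: str, b: str) -> int:
    """Combined payoff of both players when player 1 plays a and player 2 plays b."""
    return PAYOFF[(a, b)] + PAYOFF[(b, a)]


if __name__ == "__main__":
    for opp in ("C", "D"):
        print(f"Best response to {opp}: {best_response(opp)}")
    print("Joint payoff, both cooperate:", joint_payoff("C", "C"))
    print("Joint payoff, both defect:", joint_payoff("D", "D"))
```

Self-interest alone pushes both players to "D", yet both would be better off at "C", which is exactly the clash between individual and shared goals described above.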

In an effort to better understand the motives for self-interest, five research scientists from Google's DeepMind authored a paper called Multi-agent Reinforcement Learning in Sequential Social Dilemmas, in which they studied how agents interact and arrive at cooperative behavior, and also when they turn on each other. To do that, they extended classic Matrix Game Social Dilemmas, such as the prisoner's dilemma, into sequential games played out over time.

The researchers developed two games, called Gathering and Wolfpack, both built on a two-dimensional grid game engine. The agents were trained with deep reinforcement learning, which tries to get artificial intelligence agents to maximize their cumulative long-term rewards through trial-and-error interactions.
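A minimal sketch of what "cumulative long-term reward" means, assuming the usual discounted formulation with a discount factor `gamma` (an assumption; the post does not give one). It also shows a single tabular Q-learning update. The paper's agents used deep Q-networks, which approximate the same Q-values with a neural network instead of the lookup table used here; the state and action names below are hypothetical.

```python
from collections import defaultdict


def discounted_return(rewards, gamma=0.99):
    """Cumulative reward where a reward t steps in the future is weighted by gamma**t."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total


def q_learning_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.99):
    """One tabular Q-learning step: nudge Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    # Apples collected at steps 0 and 2: 1 + 0.9**2 * 1
    print(discounted_return([1, 0, 1], gamma=0.9))
    q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
    print(q_learning_update(q, "s0", "move", 1.0, "s1", ["move", "tag"]))
```

Trial and error here means repeating such updates over many episodes, so actions that lead to more future reward gradually receive higher Q-values.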


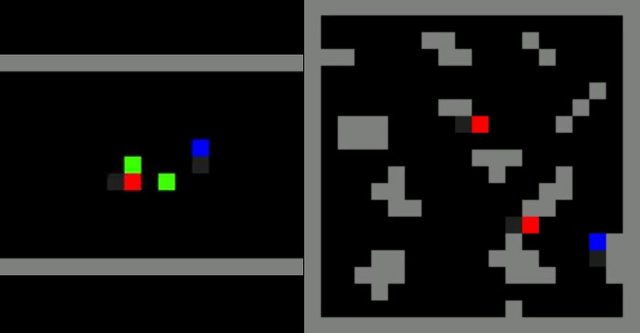

In the first game, Gathering, two agents, red and blue, try to collect green apples. Each agent receives a reward of 1 for every apple it collects. An agent can also tag the other by firing a yellow beam along its current orientation. A player hit by the beam twice is removed from the game for a certain number of frames. Tagging itself delivers no reward to either player.
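The Gathering rules described above can be sketched as a small Python model. The timeout length `TIMEOUT_FRAMES` is a hypothetical value, since the post only says "a certain number of frames", and the sketch ignores the grid and the beam's direction, treating a tag as a direct hit on the target.

```python
from dataclasses import dataclass
from typing import Iterable

HITS_TO_REMOVE = 2   # from the post: a player tagged twice is removed
TIMEOUT_FRAMES = 25  # hypothetical: the post only says "a certain number of frames"


@dataclass
class Agent:
    name: str
    hits: int = 0     # beam hits taken since the last removal
    timeout: int = 0  # frames left before the agent re-enters the game
    score: int = 0    # apples collected so far

    @property
    def active(self) -> bool:
        return self.timeout == 0


def collect_apple(agent: Agent) -> int:
    """An active agent collecting an apple receives a reward of 1."""
    if not agent.active:
        return 0
    agent.score += 1
    return 1


def tag(shooter: Agent, target: Agent) -> int:
    """Hit the target with the beam; the second hit removes it for TIMEOUT_FRAMES.

    Tagging gives no direct reward to either agent, so this always returns 0.
    """
    if shooter.active and target.active:
        target.hits += 1
        if target.hits >= HITS_TO_REMOVE:
            target.timeout = TIMEOUT_FRAMES
            target.hits = 0
    return 0


def tick(agents: Iterable[Agent]) -> None:
    """Advance one frame, counting down the timeout of removed agents."""
    for agent in agents:
        if agent.timeout > 0:
            agent.timeout -= 1


if __name__ == "__main__":
    red, blue = Agent("red"), Agent("blue")
    tag(red, blue)
    tag(red, blue)      # second hit: blue is removed
    print(blue.active)  # False
```

The only payoff from tagging is indirect: while the rival sits out, every apple the shooter reaches is one the rival cannot take.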

When there were enough apples for both agents, they coexisted peacefully. When the supply of apples went down, the agents learned to defect and started using the tag to eliminate the other player from the competition and get more apples for themselves.

By manipulating the rate at which apples respawn and the number of frames an eliminated player stays out of the game, the researchers could control the potential cost of conflict that the agents weighed when choosing how to behave.

The Gathering results suggest that conflict emerges from competition under scarcity, and is less likely when resources are abundant.
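A toy model, not taken from the paper, shows why scarcity changes the incentive to tag. It assumes apples appear at a fixed `spawn_rate` per frame, each agent can collect at most `capacity` apples per frame, the rival is out for `timeout_frames` after being tagged, and the shooter spends `tagging_frames` tagging instead of collecting; every name and number here is hypothetical. Tagging only pays when removing the rival lets the shooter collect apples it would otherwise have shared.

```python
def tagging_gain(spawn_rate, capacity, timeout_frames, tagging_frames):
    """Toy estimate of net apples gained by tagging out the rival (hypothetical model)."""
    shared = min(capacity, spawn_rate / 2)  # per-frame intake while both agents play
    alone = min(capacity, spawn_rate)       # per-frame intake while the rival is removed
    benefit = timeout_frames * (alone - shared)  # extra apples while the rival is out
    cost = tagging_frames * shared               # apples forgone while busy tagging
    return benefit - cost


if __name__ == "__main__":
    print("abundant:", tagging_gain(spawn_rate=4, capacity=1, timeout_frames=25, tagging_frames=4))
    print("scarce:", tagging_gain(spawn_rate=0.5, capacity=1, timeout_frames=25, tagging_frames=4))
```

With these made-up numbers, abundant apples (`spawn_rate=4`) make tagging a net loss of 4 apples, while scarce apples (`spawn_rate=0.5`) make it a net gain, and a longer timeout makes it more attractive still.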

Here is the first game, Gathering:

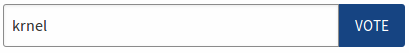

The second game is Wolfpack, in which red wolves chase a blue dot (the prey) while avoiding gray obstacles. If any wolf touches the prey, all the wolves within the capture radius get a reward. The reward each capturing wolf receives depends on how many other wolves are in the capture radius. Capturing the prey alone is riskier, since a lone wolf must defend the carcass on its own, whereas working together can lead to a higher reward.

Rewards are therefore issued depending on whether a wolf captures the prey alone or together with the team. The more other wolves were present, the more likely the agents were to learn to cooperate and implement complex strategies.
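A minimal sketch of the Wolfpack capture reward, assuming a simple two-level schedule: a wolf alone in the capture radius gets `lone_reward`, and wolves capturing together each get the larger `team_reward`. The radius and reward values are hypothetical; the post only says the reward depends on how many wolves are in the capture radius. The sketch assumes the capture has already happened and does not model the risk of losing the carcass.

```python
import math


def capture_rewards(wolves, prey, capture_radius=3.0, lone_reward=1.0, team_reward=5.0):
    """Reward each wolf when the prey is caught (hypothetical reward schedule).

    wolves: dict mapping wolf name -> (x, y) position
    prey: (x, y) position of the prey at the moment of capture
    """
    # Wolves close enough to the prey share in the capture.
    inside = [name for name, pos in wolves.items() if math.dist(pos, prey) <= capture_radius]
    per_wolf = lone_reward if len(inside) == 1 else team_reward
    return {name: (per_wolf if name in inside else 0.0) for name in wolves}


if __name__ == "__main__":
    print(capture_rewards({"w1": (0, 0), "w2": (10, 10)}, prey=(1, 0)))  # lone capture
    print(capture_rewards({"w1": (0, 0), "w2": (1, 1)}, prey=(0, 1)))    # team capture
```

Because hunting together pays more per wolf than hunting alone, staying close to the other wolves becomes a strategy worth learning, which is the pressure toward cooperation described above.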


Interestingly, the two games represented two different kinds of situations, and different tendencies emerged in each.

In the Gathering game, agents with larger neural networks (more capacity to learn complex strategies) tended to defect more. In the Wolfpack game it was the opposite: greater network size led to less defection and more cooperation.

These types of studies could help artificial intelligence developers and social managers build computer systems that interface effectively with a complex multi-agent system. If one AI is tasked with managing traffic in a city while another is tasked with reducing carbon emissions for the state or country, they need to interact cooperatively rather than compete for their own goals or objectives in isolation.

References:

- AI researchers get a sense of how self-interest rules

- Multi-agent Reinforcement Learning in Sequential Social Dilemmas

- Can Steemit attention-economy be a "non-competitive" society?

Thank you for your time and attention! I appreciate the knowledge reaching more people. Take care. Peace.

If you appreciate and value the content, please consider:

Upvoting, Sharing or Reblogging below.

Please also consider supporting me as a Steem Witness by voting for me at the bottom of the Witness page; or just click on the upvote button if I am in the top 50:

@krnel

2017-02-12, 5pm

"The relationship between individuals, as the relationship between the individual and the group, is controlled by a dual principle of cooperation, solidarity on the one hand and competition-antagonism on the other hand, in the individual to individual relationship...". "... feeds the double complement antagonist principle of social organization. " - Morin, Edgar - "Paradigm Lost".

Augmenting the number of objects relative to the number of people, in a situation of artificial scarcity (which induces false attention), creates more equilibrium in distribution and less antagonism and inequality.

It avoids the emergence of groups oriented by self-interest and the fight for power by equalizing the number of objects in relation to people.

The acceleration of change is here to stay, marked by competition and competitiveness based in e-systems and groupware competing for the new social attention economy.

Intergroup collaboration and intragroup cooperation, as well as involvement in the process of strategic reformulation, innovation, consensus generation, and learning, are the drivers of cultural change in today's communities.

Replacing structural competition with cooperation calls for action and collective awareness, to develop intra-community cooperation and collaboration among all of the organization's stakeholder communities (internal and external users, shareholders, the public, partners, etc.) to gain competitive advantages.

Well said! Thanks for the feedback. Read more of @charlie777pt's stuff on his blog page, there is an article linked at the bottom of this post for anyone to read in reference to scarcity and competition, etc.

Fun games; I like the idea, but hate the fact. I would hate being governed by whatever; whatever is given the power to keep "me" in check is evil.

:D

I don't like AIs and would avoid giving them power over the world. People should be able to govern themselves, and such bullshit as carbon emissions shouldn't govern the land; people should start thinking and taking responsibility. I don't drive a car; sure, that is an excuse, but still I have a different way of seeing the world. Rather than everybody having a car, yeah, Uber.

People have strong suits, and I would rather trust a person who has lived in that city and knows the streets than an AI with GPS and whatever else.

I wouldn't mind AIs or self-driving vehicles if we did solve the problems, but as it stands they would just be another layer of division and control.

Yup, too many people are infatuated with dreams of AI taking over our responsibilities in life and "helping" us manage ourselves because apparently, as the elitists think, we are just too stupid to be able to manage and control ourselves... the same shit over and over. First we need to evolve consciousness to live morally, then we can create moral things. Until then, our creations will be reflections of their creators, which is troubling given the power people imagine them having.

Having a clean house without cleaning, a well-maintained car without putting in the effort, and a smart handheld advisor to answer all your questions seems like a great idea.

Normally people will buy into it like fairy tales, since it's easier to blame others and believe things could be better if only we had more of whatever.

Normal way for people to think anyway. After all, we are all taught how to follow and changing takes effort and time.

Menial tasks, sure, OK. For social living, OK, get some input, but don't let everything be under their control. Use it for suggestions and let the AI have a say; it can surely help most of the time, but sometimes it may be way off.

The lines blur for me: if it is to work well, it needs information. Having that information is a detriment to our "freedoms"; extracting all data from personal phones and devices would offer many new "discoveries", but at a cost. Sure, having a helpful robot point out recurring parts in works, and so find the patterns, would be helpful to many fields. But I like life simple: I would talk to a person rather than an AI, however self-aware it is, and as it stands I doubt they can make such a thing.

If it's just a helping hand, like the butler from Batman, it can be helpful.

On a bigger scale, it could take care of communities and help with education and the distribution of goods. But still, having that information in the open and the "source" corruptible will leave doors open for power players (Facebook :D).

Thank you for the information, I like it.
