Variability in a Heteroskedastic Market

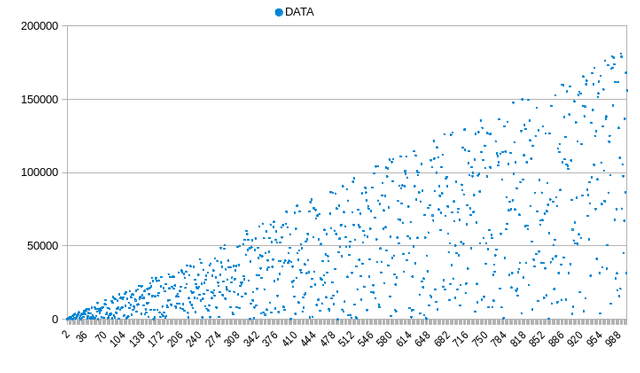

I have been thinking about market analysis, and whenever I come across a heteroskedastic distribution, which describes basically all financial markets, there is a real issue with the way the variance is perceived and calculated.

A heteroskedastic distribution is one whose variance is not constant. In practice it behaves like a group of defined, or at least detectable, probability distributions, or an overlapping mix of several of them in one giant mess. Its dispersion varies almost randomly, though not entirely, since there are ways to forecast the changes.

The problem is with the variance and the way it's calculated, not just in this scenario but in basically all distributions that span multiple orders of magnitude.

The problem is really one of scale. In a heteroskedastic distribution the numbers swing erratically from low to high orders of magnitude, and this creates a massive bias and error in the variance.

I am not questioning the fundamental way the variance is calculated, because many smart people did the work on it. However, we can't ignore the bias either. If there are any professional mathematicians out there, I welcome your comments on this.

Problem

I have already raised this issue in the past here:

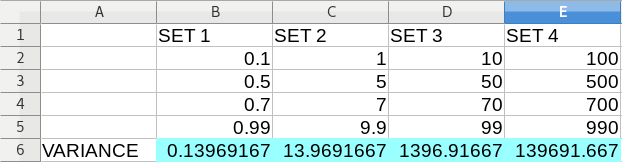

To illustrate the issue in a very simple way:

As you can see, I picked 4 numbers to form a sample and multiplied it by 10 for each subsequent set. The relationship between the numbers stays the same, but the variance is multiplied by 10^(LOG10(x) * 2) = x², where x is the multiplier. For example, if we multiply the first column by 1000, as in SET4, the exponent is LOG10(1000) * 2 = 6, so the factor is 10^6. The original variance then becomes 0.139691666666667 * 1,000,000 = 139691.666666667. That is the relationship between the numbers.
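To make the scaling reproducible, here is a minimal sketch using Python's `statistics.variance` (sample variance). The author's actual 4 numbers are only in the image, so the sample below is hypothetical. It builds SET1 to SET4 by multiplying that sample by 1, 10, 100 and 1000, and it prints each variance together with its ratio to the original.

```python
import statistics

# Hypothetical 4-number sample; the author's actual numbers are only in the image.
base = [1.2, 1.5, 1.9, 2.1]

# SET1..SET4: the same sample multiplied by 1, 10, 100 and 1000.
variances = {k: statistics.variance([k * x for x in base]) for k in (1, 10, 100, 1000)}

for k, v in variances.items():
    # The ratio to the original variance comes out as k**2: 1, 100, 10**4, 10**6.
    print(f"x{k}: variance = {v:.6f}, ratio = {v / variances[1]:.1f}")
```

Whatever sample you plug in, the ratio column comes out as 1, 100, 10000 and 1000000. This matches the 10^6 factor for SET4.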

So, as you can see, the variance is heavily biased. I don't know whether it's the structure of the decimal numeral system or simply the fact that we use multiplication, squaring and addition in the variance formula, but nonetheless it's heavily biased.
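For what it's worth, the factor follows directly from the squaring step in the definition of the sample variance. This is a standard identity and does not depend on the decimal system. Multiplying every datapoint x_i by a constant k also multiplies the mean by k, and the k then comes out of the square:

$$s^2(kx) = \frac{1}{n-1}\sum_{i=1}^{n}\left(kx_i - k\bar{x}\right)^2 = k^2 \cdot \frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2 = k^2\, s^2(x)$$

With k = 1000 this gives k² = 10^(2 · LOG10(1000)) = 10^6, which is the same factor as above.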

In my opinion the variance has to be the same in all columns, because we care about the relationship between the numbers and not about the number of decimals in the set. Certainly in finance we don't care about the additive relationship but rather the multiplicative one, since profits and losses scale multiplicatively with price moves.

Heteroskedastic Case

In the heteroskedastic case we need to calculate the variance of the variances. Since the variance changes continuously in all subsets of the distribution, we need to measure the dispersion of the variances themselves.

So we need to segment the data into groups. We can either select the inflection points manually or use tools that detect them, like periodicity/frequency analysis or spectral analysis. We can then check whether the groups really differ in variance with something like Bartlett's test.
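Bartlett's test checks whether several groups share the same variance. It therefore fits as a check on the bins rather than as a way to locate inflection points by itself. SciPy ships it as `scipy.stats.bartlett`. The version below is a minimal stdlib-only sketch of the test statistic, and the two groups in it are hypothetical. Compare the statistic against a chi-square distribution with k - 1 degrees of freedom. With 2 groups, the 5% cutoff is about 3.84.

```python
import math
import statistics


def bartlett_statistic(groups):
    """Bartlett's test statistic for equality of variances across groups.

    Each group needs at least 2 points and a non-zero sample variance.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    variances = [statistics.variance(g) for g in groups]  # per-group sample variances
    # Pooled variance, weighting each group by its degrees of freedom (n - 1).
    pooled = sum((n - 1) * v for n, v in zip(sizes, variances)) / (n_total - k)
    numerator = (n_total - k) * math.log(pooled) - sum(
        (n - 1) * math.log(v) for n, v in zip(sizes, variances)
    )
    # Small-sample correction factor from Bartlett's formula.
    correction = 1 + (sum(1 / (n - 1) for n in sizes) - 1 / (n_total - k)) / (3 * (k - 1))
    return numerator / correction


# Hypothetical price segments: a calm stretch and a volatile stretch.
calm = [100, 101, 99, 100, 102, 98]
volatile = [100, 110, 90, 105, 95, 120]
print(bartlett_statistic([calm, volatile]))  # large value: the variances differ
```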

Basically, we group the data into bins, which can also be used to estimate the probability distribution. We then measure the dispersion of the variance between the bins by calculating the variance of the variances.

Of course, this too is biased, since we can't use it to compare 2 different datasets. The BTC/USD market, which is 7 years old, will have more bins than, say, the STEEM/USD market. Also, if the sample size is not divisible by the bin size, the last bin will be incomplete and cause errors.
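Here is a minimal sketch of the binning procedure. It assumes fixed-size consecutive bins and sample variances (`statistics.variance`). `variance_of_variances` is a hypothetical helper, not an established function. It drops a trailing incomplete bin and reports how many points were left over. This makes both issues concrete: the bin size is an arbitrary parameter, and series of different lengths produce different numbers of bins.

```python
import statistics


def variance_of_variances(series, bin_size):
    """Split `series` into consecutive bins of `bin_size` points, take each
    bin's sample variance, and return the sample variance of those values.

    Returns (variance_of_variances, per_bin_variances, leftover_points).
    A trailing partial bin is dropped here; that is one arbitrary choice.
    """
    if bin_size < 2:
        # A sample variance needs at least 2 points per bin.
        raise ValueError("bin_size must be at least 2")
    n_full, leftover = divmod(len(series), bin_size)
    bins = [series[i * bin_size:(i + 1) * bin_size] for i in range(n_full)]
    if len(bins) < 2:
        raise ValueError("need at least 2 full bins")
    per_bin = [statistics.variance(b) for b in bins]
    return statistics.variance(per_bin), per_bin, leftover


# Hypothetical series of 13 points with bins of 4: 3 full bins, 1 point left over.
series = [1, 2, 3, 4, 10, 20, 30, 40, 5, 5, 6, 6, 7]
vv, per_bin, leftover = variance_of_variances(series, 4)
print(vv, per_bin, leftover)
```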

So I was thinking: why the hell would we group the data into bins of, say, 100 datapoints for a sample of 1000? It makes no sense, since it just creates compatibility issues when we want to compare it to other distributions of similar size.

After all, the variance of the variances measures the dispersion between the bins, but the bin size is arbitrary.

Why not just use 1 as the bin size? Basically the variance itself.

I mean, how do we know that the bin concept is accurate? What if the variance changes between every single datapoint? Wouldn't the sample variance then be more accurate than this binning process?

Yes, you could say that processing the data has some utility if there is a relationship between groups of data, but we can't know ahead of time when it will change. Even the periodicity factor has some randomness in it. The group itself isn't tight either: it overlaps with the previous and the next group, and each group is its own probability distribution.

So, both to remove the arbitrary factor and, as a rule of thumb, to make it more inclusive, it's better in my opinion to take every single datapoint as its own element.

Solution

So the solution is pretty simple, and it’s not a replacement for the variance, but an enhancement of it that is perfect for multivariate analysis.

Just use LOG-based differences. The natural log is already widely used in finance because it is free of this scale bias, so why not use it here as well?
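The reason log differences avoid the scale problem is a one-line identity. Multiplying every datapoint by the same constant k adds the same ln k to each logarithm, and that term cancels in every difference:

$$\ln(kx_i) - \ln(kx_{i-1}) = (\ln k + \ln x_i) - (\ln k + \ln x_{i-1}) = \ln x_i - \ln x_{i-1}$$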

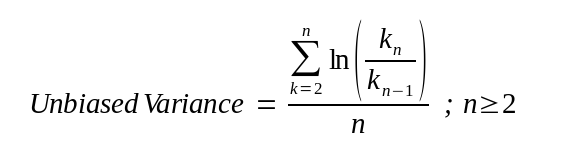

So I propose a bias-free variance formula:

It is very simple: we just take the LN() differences between consecutive datapoints (a minimum of 2 datapoints is needed) and divide them by the total number of datapoints. It's simple, and it is free of the scale bias.

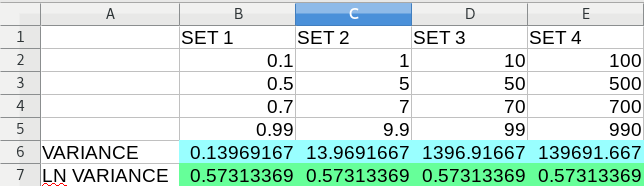

Using the example above, it yields the same output for each set:

As it should: a price jump from 700 to 900 is the same as one from 70 to 90, because the percentage profits and losses are the same. At least in finance, then, using this formula is advantageous.
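The exact formula is only in the image above, so this is a hedged sketch of one literal reading of the text. `mean_abs_log_diff` is a hypothetical name. It sums the absolute LN() differences between consecutive datapoints and divides by the total number of datapoints. The absolute value is an assumption: without it, the sum telescopes to ln(last / first). For comparison, `log_return_variance` computes the standard finance measure, the sample variance of log returns. Both are checked on the 700→900 versus 70→90 case and on a hypothetical sample scaled like SET1 to SET4.

```python
import math
import statistics


def mean_abs_log_diff(prices):
    """HYPOTHETICAL reading of the proposed formula: the sum of absolute
    LN() differences between consecutive datapoints, divided by the total
    number of datapoints (not by the number of differences)."""
    if len(prices) < 2:
        raise ValueError("need at least 2 datapoints")
    diffs = [abs(math.log(b) - math.log(a)) for a, b in zip(prices, prices[1:])]
    return sum(diffs) / len(prices)


def log_return_variance(prices):
    """Standard finance measure for comparison: sample variance of the
    log returns ln(p[i] / p[i-1]). Needs at least 3 prices."""
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    return statistics.variance(returns)


# A jump from 700 to 900 scores the same as a jump from 70 to 90.
print(mean_abs_log_diff([700, 900]), mean_abs_log_diff([70, 90]))

# Hypothetical 4-number sample scaled like SET1..SET4 (not the author's data).
base = [1.2, 1.5, 1.9, 2.1]
for k in (1, 10, 100, 1000):
    scaled = [k * x for x in base]
    # Both measures stay the same (up to float rounding) for every multiplier.
    print(k, mean_abs_log_diff(scaled), log_return_variance(scaled))
```

Dividing by the number of datapoints rather than the number of differences follows the wording above. Dividing by the number of differences would be the more common convention for an average.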

It also eliminates the heteroskedastic problem, since a heteroskedastic market jumps irrationally between price levels. Bitcoin went from 5 cents to $5000, a 100,000x growth factor. Obviously, if we used the “standard” variance formula on that, it would fall apart.

The unbiased formula I proposed above would not. In fact, a jump from 5000 to 9000 gives the same variance whenever the price is already in that zone, while a jump from 5 cents to 9000 would obviously be a variance change. We know, however, that the price does not suddenly take huge leaps like that. Within its zone, these kinds of jumps are therefore much better emphasized by a LOG-based formula.

If we apply the example case to Bitcoin, the situation is similar. Why the hell would we compare a variance of 1396.91666666667 to one of 139691.666666667 when the movement was always the same relative to its local levels? It was the same in all zones; it was just non-linear growth.
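A minimal sketch of that contrast on a hypothetical, perfectly smooth path from 5 cents to $5000. This is not real Bitcoin data: the price grows by the same factor, √10, at every step. The raw-price variance is in the millions, while the variance of the log returns is essentially zero, because every step is the same relative move.

```python
import math
import statistics

# Hypothetical path from $0.05 to $5000 (a 100,000x move), multiplying by
# sqrt(10) at every step. Not real Bitcoin data.
prices = [0.05 * 10 ** (i / 2) for i in range(11)]

raw_variance = statistics.variance(prices)
log_returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
log_variance = statistics.variance(log_returns)

print(f"variance of raw prices:  {raw_variance:,.0f}")
print(f"variance of log returns: {log_variance:.2e}")  # essentially zero
```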

Conclusion

So for non-linear distributions I believe this formula is much better. I am not sure whether we can use it to draw probability calculations, since the variance has some interesting characteristics. If any mathematician here wants to play with it, they are welcome; leave your opinions in the comments.

Other than that, it is well known among finance experts that the log formula, or even a log-scale price chart, is much more accurate, especially in a non-linear environment.

So why not extend this further and see whether the variance can be defined that way, at least for a non-linear environment?

You may wish to look at the arithmetic coefficient of variation of a log-normal distribution - interestingly, it is independent of the mean.

Or you can keep testing your own intuitive formula and see when it breaks down and when it appears useful.
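For reference, the property mentioned in the comment is a standard result. If X is log-normal with parameters μ and σ² (so that ln X is normal with mean μ and variance σ²), then μ cancels out of the coefficient of variation:

$$E[X] = e^{\mu + \sigma^2/2}, \qquad \operatorname{Var}(X) = \left(e^{\sigma^2} - 1\right)e^{2\mu + \sigma^2}, \qquad \mathrm{CV} = \frac{\sqrt{\operatorname{Var}(X)}}{E[X]} = \sqrt{e^{\sigma^2} - 1}$$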

Oh yes, I see, it's basically the so-called Sharpe ratio used in finance.

However, I have my problems with that and I don't use it personally. I have a better way of determining risk in a market:

I will. As I said, I am not a math expert, and I didn't say this is a replacement for the variance concept; it's more like an enhancement. It is certainly much better for measuring errors in forecasted data, since the profit/loss is always a ratio:
