MattockFS; Computer-Forensics File-System: Part Six

This post is the sixth of an eight-part series on the MattockFS Computer-Forensics File-System. This series of posts is based on the MattockFS workshop that I gave at the Digital Forensics Research Workshop in Überlingen, Germany, earlier this year.

This post is the first of two hands-on posts in this series. That is, I suggest you keep a VM at hand so you can play with MattockFS and get a feel for how the system works. I realize that the hands-on section of my workshop might not translate as well to a blog post, and that it will ask more of you as a reader. So let us start and see how it goes.

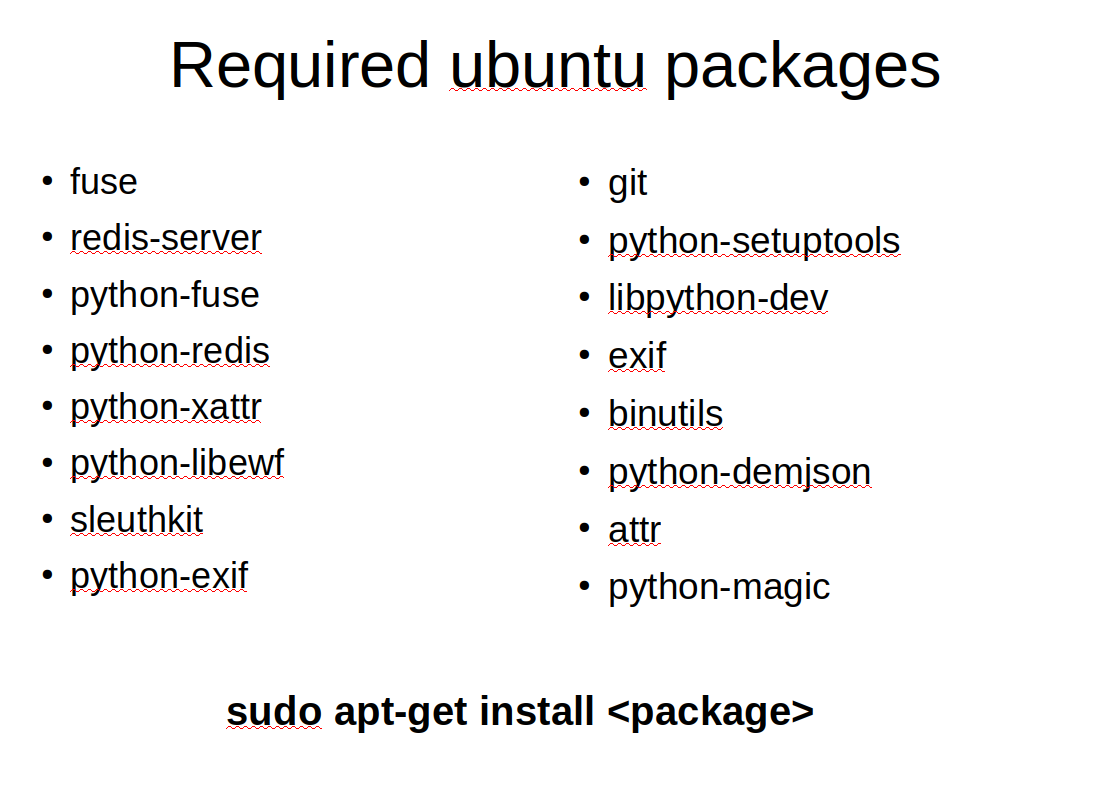

I'll start by discussing the installation of MattockFS on Ubuntu. Note that you may either follow the installation steps on your own fresh VM, or instead download the OVA file.

Most of the packages we need are available in the standard Ubuntu repository. Above is a list of all the standard packages we need to install from the Ubuntu repository using the apt-get command.

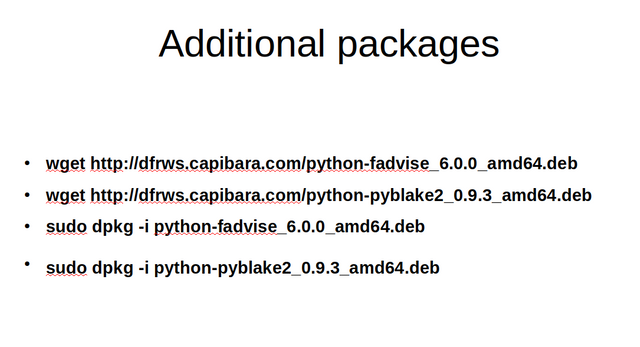

After installing the standard packages, two important dependencies that currently aren't in the Ubuntu repository are still missing: the Python modules for fadvise and for BLAKE2 hashing. You can download both packages from my website and install them using dpkg.

Now we fetch the MattockFS source code from GitHub. This is the point where the OVA file and the manual installation converge. Change directory to the MattockFS directory. If you are working with the OVA file, your copy of the MattockFS sources will be slightly outdated, and you will need to do a git pull to get on the same page as those building their VM from scratch.

To install the most recent version of MattockFS, we simply invoke quick_setup as root. After that we can return to our user's home directory and start playing with MattockFS.

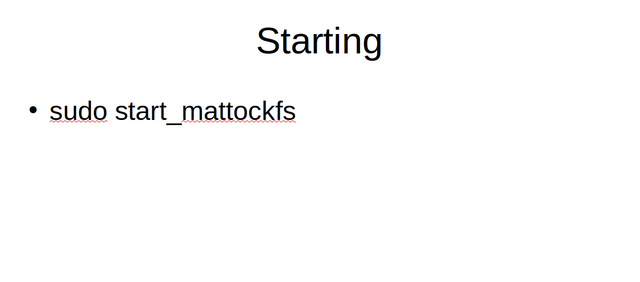

So let us start MattockFS. If you are working with a from-scratch VM, you should now fetch an E01 forensic disk image and put it in your home directory on the VM.

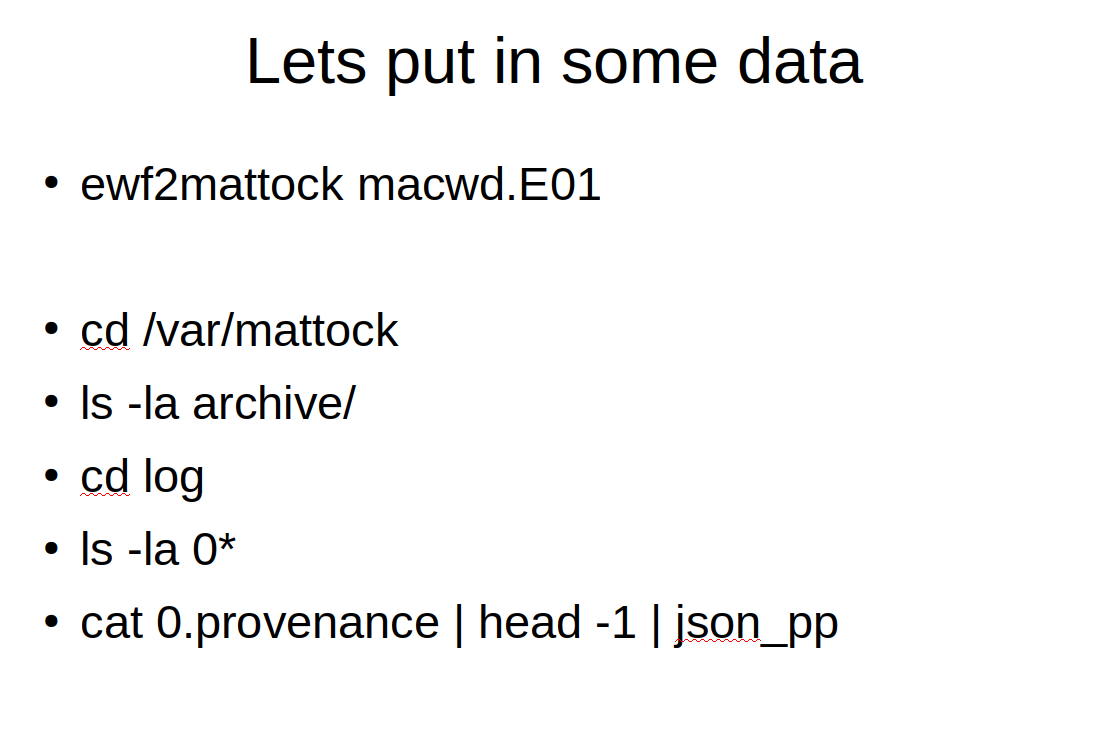

Before we can do anything useful, we need to place some data into the MattockFS archive. The ewf2mattock tool provided with MattockFS lets us copy the data into the repository and freeze it there.

Given that we want to get a feel for what is going on under the hood, let us have a look at the /var/mattock directory tree. The archive sub-directory contains a number of raw sparse image files, and the log sub-directory contains a number of interesting log files. So far we have only entered the data into the system, so there isn't much in these files yet, but please have a look for yourself to see what information they disclose.
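To see for yourself that the archive files are sparse, you can compare each file's apparent size with the space actually allocated on disk. Below is a minimal Python sketch using only the standard library. It assumes a Linux system, where `st_blocks` is counted in 512-byte units, and the `/var/mattock/archive` path in the usage lines is my assumption based on the directory layout described above.

```python
import os
import stat


def sparse_report(root):
    """Yield (path, apparent_bytes, allocated_bytes) for every regular file under root."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            st = os.lstat(path)
            if not stat.S_ISREG(st.st_mode):
                continue  # skip symlinks, sockets and other non-regular files
            # On Linux, st_blocks is counted in 512-byte units.
            yield path, st.st_size, st.st_blocks * 512


if __name__ == "__main__":
    # Assumed location of the archive sub-directory; adjust for your VM.
    for path, apparent, allocated in sparse_report("/var/mattock/archive"):
        print(f"{path}: {apparent} bytes apparent, {allocated} bytes allocated")
```

A file whose allocated size is far below its apparent size is sparse: the unwritten regions are holes that take no disk space.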

So let us explore what happened to the actual data we submitted to MattockFS. First, we look up the key of the data, identified by the first journal entry containing disk-img. Then we can trace what last happened to the data entity we entered by looking at the UPD (update) entry for the job with the key we identified.
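The two journal look-ups just described boil down to a pair of text searches. Here is a minimal Python sketch of them. It makes no assumption about the journal's field layout and only does substring matching; the journal file name in the usage comment is a placeholder, and you supply the key yourself after reading it from the first matching entry.

```python
def first_entry(lines, marker):
    """Return the first line containing marker, or None."""
    for line in lines:
        if marker in line:
            return line.rstrip("\n")
    return None


def last_update(lines, key, tag="UPD"):
    """Return the last line that mentions both key and the update tag, or None."""
    last = None
    for line in lines:
        if key in line and tag in line:
            last = line.rstrip("\n")
    return last


# Usage sketch (placeholder file name, not confirmed by the post):
# with open("/var/mattock/log/<journal file>") as f:
#     lines = f.readlines()
# print(first_entry(lines, "disk-img"))
# print(last_update(lines, "<key copied from that entry>"))
```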

Other interesting files to look at right now are the ohash and refcount files. Have a look at those and see what they tell you.

We saw in the journal that the data was forwarded to a module named mmls. Let us now go to the mnt directory and use the file-system as an API to gather some more info. Have a look at the anycast_status for the mmls actor.

Note that apart from the raw data, the ewf2mattock tool also added a meta-data entity to the archive.

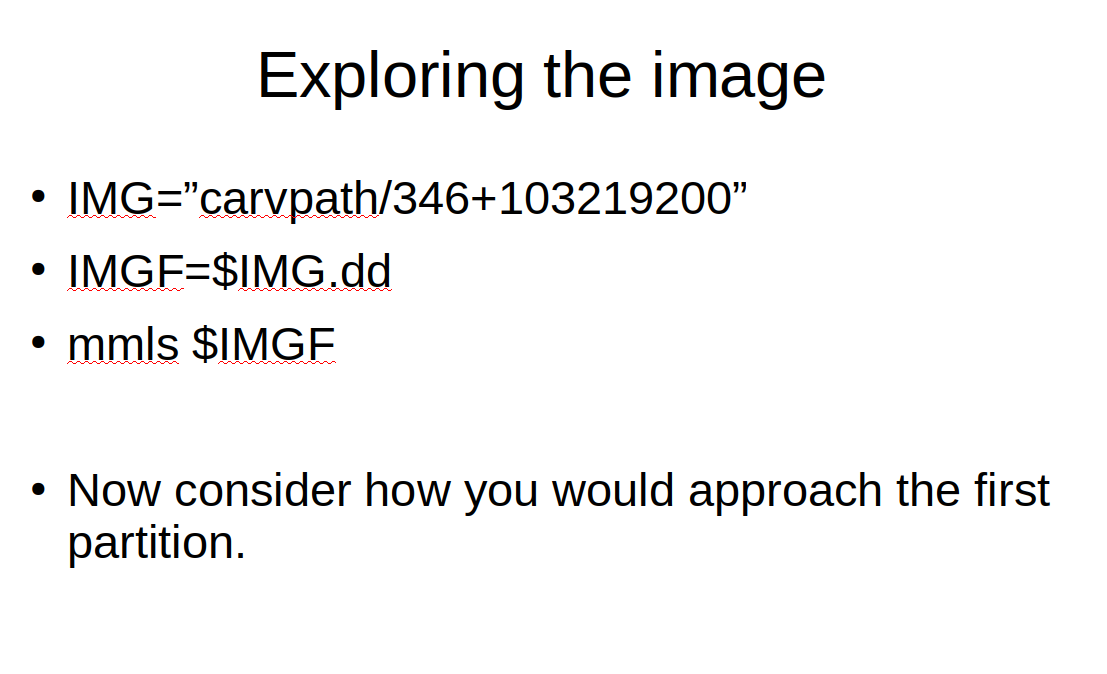

So now for the forensics part. Let us use the sleuthkit to explore the CarvPath part of the MattockFS file-system. Before reading the rest of this post, try solving a little challenge yourself. Look at the output of the mmls command and think about how you might use the shell to address the first partition as an entity within the /carvpath subsystem of MattockFS. Don't try to automate it from the output of mmls yet; just use the relevant parts of the result line representing the first partition and try to write an expression that returns a valid carvpath designation.

Above is a solution to our challenge. If you didn't come up with a similar solution yourself, try at least to follow the logic behind it.
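If you want to check the arithmetic, here is the same idea as a small Python sketch. It assumes the usual mmls column layout (index, slot, start, end, length, description) with units of 512-byte sectors, and it assumes the offset+size notation for a single CarvPath fragment. The sample line is illustrative, not taken from the workshop image.

```python
SECTOR_SIZE = 512  # mmls reports offsets and lengths in 512-byte sectors by default


def partition_carvpath(mmls_line, sector_size=SECTOR_SIZE):
    """Turn one mmls partition line into an 'offset+size' CarvPath fragment in bytes."""
    fields = mmls_line.split()
    start_sector = int(fields[2])    # Start column
    length_sectors = int(fields[4])  # Length column
    return f"{start_sector * sector_size}+{length_sectors * sector_size}"


line = "002:  000:000   0000002048   0000206847   0000204800   Linux (0x83)"
print(partition_carvpath(line))  # 1048576+104857600
# The fragment then goes after /carvpath/ under the MattockFS mount point;
# the exact mount point and any file extension are assumptions to check on your VM.
```

Multiplying by the sector size is the whole trick: CarvPaths address bytes, while mmls reports sectors.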

We'll continue our CarvPath-based exploration of MattockFS with the sleuthkit tools. Let us look a bit deeper, using the partition we just identified and a few more sleuthkit tools. Try out the commands above. Then, using the output of those commands, consider how we could designate the first JPG image in our file-system listing. Give it some real thought before looking at the solution below.

Hopefully, you managed to come up with a similar solution. Notice that we can now invoke the file command and the exif command to query some metadata about the data entity at hand.
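The reasoning behind designating a file inside a partition can be sketched in Python as follows. It assumes, for an ext file system, that the block addresses come from the "Direct Blocks" list of istat and the block size from fsstat, with addresses counted from the start of the partition. It also assumes my reading of the CarvPath notation: "_" joins fragments, and "/" nests a CarvPath relative to its parent entity. The numbers in the example are made up for illustration.

```python
def blocks_to_fragments(blocks, block_size, file_size):
    """Map file-system block numbers to merged 'offset+size' fragments (bytes)."""
    fragments = []         # list of [offset, size] pairs
    remaining = file_size
    for block in blocks:
        if remaining <= 0:
            break
        chunk = min(block_size, remaining)  # the last block is usually only partly used
        offset = block * block_size
        if fragments and fragments[-1][0] + fragments[-1][1] == offset:
            fragments[-1][1] += chunk       # contiguous with the previous fragment: merge
        else:
            fragments.append([offset, chunk])
        remaining -= chunk
    return "_".join(f"{off}+{size}" for off, size in fragments)


def nested_carvpath(partition_cp, blocks, block_size, file_size):
    """Designate a file inside a partition; offsets are relative to the partition entity."""
    return f"{partition_cp}/{blocks_to_fragments(blocks, block_size, file_size)}"


# Illustrative numbers only, not taken from the workshop image:
print(nested_carvpath("1048576+104857600", [100, 101, 102, 200], 4096, 15000))
# 1048576+104857600/409600+12288_819200+2712
```

Merging contiguous blocks keeps the designation short, and truncating the last fragment to the file size keeps slack space out of the entity.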

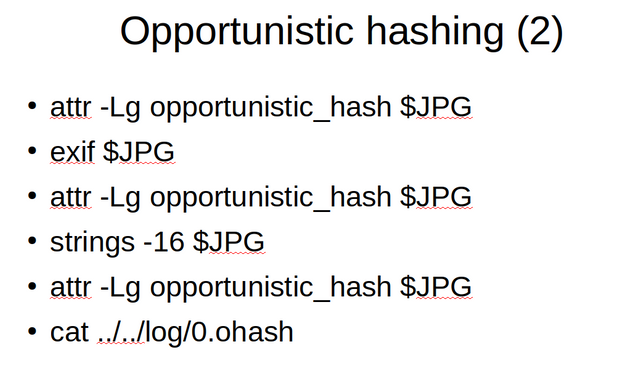

We continue using the result from our previous puzzle to explore opportunistic hashing in MattockFS. To do so, we open a carvpath file and keep it open: we start Python interactively, open the file, and put the Python shell into the background. We haven't read any data yet, so let's look at the opportunistic hashing status we start out with.

We can use different tools on our carvpath file and see how they influence the opportunistic hashing state of the file.

We see that opportunistic hashing progresses as different tools are used on the file. After running strings, the whole file has been read and hashing is complete. Have a look at the ohash log file for the primary archive file.
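To make the idea concrete, here is a toy model of opportunistic hashing in Python. It is not MattockFS's implementation; it only illustrates the principle observed above: the hasher piggybacks on reads that other tools perform anyway, it can only advance while those reads extend the already-hashed prefix, and the hash completes once every byte has passed through. The standard library's `hashlib.blake2b` stands in for the BLAKE2 module installed earlier.

```python
import hashlib


class OpportunisticHasher:
    """Toy model: hash an entity as a side effect of reads done by other tools."""

    def __init__(self, size):
        self.size = size
        self.hashed = 0              # length of the prefix hashed so far
        self._h = hashlib.blake2b()

    def on_read(self, offset, data):
        """Feed one read; only bytes that continue the hashed prefix are used."""
        end = offset + len(data)
        if offset <= self.hashed < end:
            self._h.update(data[self.hashed - offset:])
            self.hashed = end
        # Reads that start beyond the prefix, or lie wholly inside it, are ignored.

    @property
    def done(self):
        return self.hashed >= self.size

    def hexdigest(self):
        """Return the digest once the whole entity has been seen, else None."""
        return self._h.hexdigest() if self.done else None


data = bytes(range(256)) * 40      # a 10240-byte stand-in for a carvpath file
h = OpportunisticHasher(len(data))
h.on_read(4096, data[4096:8192])   # out of order: cannot be used yet
h.on_read(0, data[:4096])          # a read of the start of the file
h.on_read(2048, data[2048:])       # a later read overlapping the hashed prefix
print(h.done, h.hexdigest() == hashlib.blake2b(data).hexdigest())  # True True
```

The design choice here is that no extra disk reads are ever issued for hashing; the cost is that a tool reading only part of the file leaves the hash incomplete.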

Now let us pretend we are a worker process designed to store module data in some sort of database, a module called the Data Store Module, or dsm. First have a look at the extended attributes available on the inf and ctl files of the dsm. Now for the important part: registering as a worker of the dsm actor.
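Extended attributes can also be inspected from Python's standard library (Linux only). The minimal sketch below deliberately separates listing attribute names from reading values: since MattockFS uses the file-system as an API, it seems prudent to assume that reading some attributes of a control file may trigger an action rather than merely return state, so listing names first is the safer way to explore. The paths in the usage comment are hypothetical.

```python
import os


def list_xattrs(path):
    """Return the sorted names of all extended attributes on path ([] on error)."""
    try:
        return sorted(os.listxattr(path))
    except OSError:
        return []


def read_xattr(path, name):
    """Return one attribute value as text, or None if it cannot be read."""
    try:
        return os.getxattr(path, name).decode("utf-8", errors="replace")
    except OSError:
        return None


# Hypothetical paths; adjust to where MattockFS is mounted on your VM:
# for p in ("/var/mattock/mnt/0/actor/dsm.inf", "/var/mattock/mnt/0/actor/dsm.ctl"):
#     print(p, list_xattrs(p))
```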

Notice the sparse capability part of the worker file path. This is our private handle into the worker API.
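The idea behind a sparse capability is that the path itself is the authority: it contains a random token drawn from a space so large that it cannot feasibly be guessed, so only a process that was handed the path can use it. The following Python sketch illustrates that general idea with the standard `secrets` module. It is a conceptual model, not how MattockFS derives its tokens, and the `worker/<token>.ctl` naming is only illustrative.

```python
import secrets


class CapabilityTable:
    """Conceptual model of sparse capabilities: unguessable names map to objects."""

    def __init__(self):
        self._objects = {}

    def mint(self, obj, prefix="worker"):
        token = secrets.token_hex(32)  # 256 random bits: infeasible to guess
        self._objects[token] = obj
        return f"{prefix}/{token}.ctl"

    @staticmethod
    def _token(path):
        name = path.rsplit("/", 1)[-1]
        return name[:-len(".ctl")] if name.endswith(".ctl") else name

    def resolve(self, path):
        """Return the object the path designates, or None for unknown tokens."""
        return self._objects.get(self._token(path))

    def revoke(self, path):
        self._objects.pop(self._token(path), None)


table = CapabilityTable()
handle = table.mint("dsm worker #1")
print(table.resolve(handle))                         # dsm worker #1
print(table.resolve("worker/" + "0" * 64 + ".ctl"))  # None
```

No access-control list is consulted anywhere: knowing the path is both necessary and sufficient, which is what makes the worker path a private handle.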

Now, with our worker handle, we can accept our first job. As a worker we would normally do this in a loop, but for now we invoke it just twice. Using the acquired job handle, we can get the carvpath of the metadata entity and look at the metadata itself. As there are no other modules in the tool-chain for this piece of data, we close the tool-chain by setting empty routing info before asking for the next job.

Now we can have a last look at the provenance log to see what happened to the job we just processed.

Finally, if at some point our worker process is shut down, we unregister our worker with MattockFS.
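The worker life cycle walked through above (register, accept jobs in a loop, close each job's tool-chain with empty routing info, and unregister on shutdown) can be sketched as a small Python driver. The four callables are hypothetical stand-ins for the file-system operations described in the text; they are not MattockFS function names. The design point is the try/finally: the worker is unregistered even if processing a job raises an exception.

```python
def run_worker(register, accept_job, process, unregister, max_jobs=None):
    """Drive one worker: register, loop over jobs, always unregister at the end."""
    worker = register()
    processed = 0
    try:
        while max_jobs is None or processed < max_jobs:
            job = accept_job(worker)
            if job is None:           # no more work for now
                break
            process(job)              # e.g. store the metadata, then set empty routing info
            processed += 1
    finally:
        unregister(worker)            # runs on normal exit and on exceptions
    return processed


# In-memory fakes so the sketch runs without MattockFS:
pending = [{"id": 1}, {"id": 2}, {"id": 3}]
log = []
n = run_worker(
    register=lambda: "worker-1",
    accept_job=lambda w: pending.pop(0) if pending else None,
    process=lambda job: job.update(routing=""),  # empty routing ends the tool-chain
    unregister=log.append,
    max_jobs=2,
)
print(n, log, len(pending))  # 2 ['worker-1'] 1
```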

In today's installment, we looked at the low-level file-system as an API in what I hope has been a partially hands-on session. If you haven't been reading this blog post with a VM at hand, please consider going through it again using the OVA image on a VM.

In the next installment, I'll be discussing the Python language bindings for MattockFS that allow us to build modules or frameworks on top of the file-system as an API we looked at today.
