Will Blockchains Scale In Time?

Introduction

Scaling blockchains for mass adoption is a challenge that some of the greatest minds in the industry have been pondering for years. As blockchain awareness grows and blockchain-based applications begin to gain adoption, the big question is whether platforms are going to be able to scale in time to provide a reliable service.

What is the big deal?

Well, blockchains are inefficient. They must be 'trustless', meaning that participants do not need to trust each other in order to transact with each other. The mathematical protocols running on the blockchain must guarantee that trust. Nick Szabo, a forefather of blockchain, defines trustlessness as an inverse function of technical efficiency. So the less efficient the network, the more difficult it is to manipulate. And the more difficult it is to manipulate, the more you can trust it.

To this end, today’s blockchains are designed with highly inefficient processes built in, namely the requirement that ALL network participants across the globe process every transaction and reach consensus on a single history. This is known as database canonicalisation. When all nodes have agreed on the most recent ‘block’ of transactions, the ledger's ‘global state’ is updated.

A Trade-off

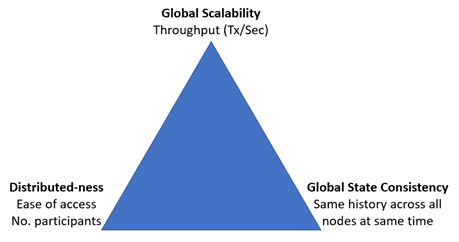

The ‘global scalability’ of a blockchain is its ability to reach the performance characteristics needed to serve enterprise-scale workloads, comparable to those of “big data” databases. This means a throughput of 100,000 transactions per second (tps) or more, capacity in the hundreds of terabytes or more, and latency of less than 1 second.

As with all things in life, global scalability is part of a trade-off and specifically competes with 2 other metrics:

- ‘Distributed-ness’ where we are aiming for a situation where no single entity controls the network and anyone can join the network as a validating node.

- ‘Global state consistency’ where the network keeps data in sync and aims for a situation where all nodes see the same data at the same time.

It becomes apparent that it is relatively simple to achieve any two of the three apexes of the triangle, but a significant challenge to achieve all three at the same time in the same blockchain.

Where Are We At?

It is largely because of the need to maintain global state consistency in a distributed environment that the current leading smart contract platform, Ethereum, can process only around 15 transactions per second.

Not only is the throughput low compared with any modern network-based application, but because of the proof of work (PoW) consensus algorithm that is inextricably linked to maintaining global state, each of those transactions uses enough electricity to power a home for anywhere between a week and a month, depending on network conditions. Miners are compensated for this electricity consumption predominantly through inflation of the Ether currency.

To illustrate this inefficiency, running a computation on Ethereum is approximately 100,000,000 times more expensive than running the same computation on Amazon Web Services (AWS).

If that sounds bad, Bitcoin is worse: its throughput and energy efficiency are roughly 2 to 4 times lower than Ethereum's.

Because of these scalability limitations, both Bitcoin and Ethereum have in the past 2 years periodically seen debilitating network congestion. And this is at a time when Bitcoin payment adoption is still at minuscule levels and the number of practical distributed applications (DApps) running on Ethereum is effectively zero.

It has long been clear that mass-adoption use cases such as blockchain-based electricity retail, which requires every customer to transact between 24 and 48 times per day, cannot currently be supported, and that the blockchain architecture needs to be improved.
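To put the electricity retail example in numbers, here is a back-of-the-envelope sketch. The customer count of one million is a hypothetical figure chosen only for illustration, and the calculation assumes transactions are spread evenly across the day, so real peak load would be higher.

```python
# Back-of-the-envelope load for blockchain-based electricity retail.
SECONDS_PER_DAY = 24 * 60 * 60
ETHEREUM_TPS = 15  # approximate figure quoted earlier in this article


def required_tps(customers: int, tx_per_customer_per_day: float) -> float:
    """Average transactions per second, assuming load is spread evenly over the day."""
    return customers * tx_per_customer_per_day / SECONDS_PER_DAY


CUSTOMERS = 1_000_000  # hypothetical retailer size, for illustration only

for tx_per_day in (24, 48):
    tps = required_tps(CUSTOMERS, tx_per_day)
    print(f"{tx_per_day} tx/day: {tps:.0f} tps, about {tps / ETHEREUM_TPS:.0f}x Ethereum's throughput")
```

Even a single retailer of that size would need roughly 280 to 560 tps on average, many times what Ethereum can process for the entire network.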

Vitalik Buterin recently said that Ethereum will scale to 1 million tps. So what are some of the pathways to this dramatic transformation in scale?

Potential Solutions

The current developments in blockchain scaling can be categorised into two areas:

- Layer 1: On the main-chain level where the enhanced process performed by the nodes is part of the core blockchain process

- Layer 2: On a layer above the main-chain where the enhanced process performed by the nodes is incremental to the core blockchain process

Layer 1 Scaling

1. Data Format Optimisation

Blockchains typically handle blocks of transactions at a set time interval and have some form of limitation on the size of the block. The latter is done (i) to limit full node hardware requirements to maximise accessibility and decentralisation and (ii) to limit the denial of service attack surface area.

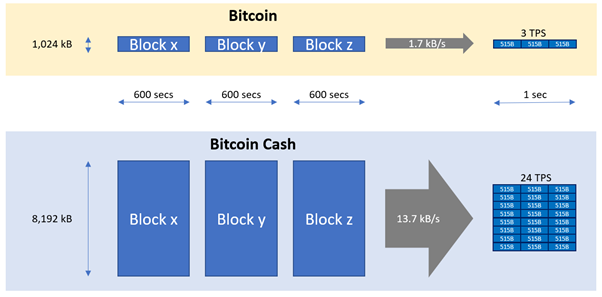

In Bitcoin, for example, the block size limit is 1 MB and the block interval is 10 minutes (600 seconds), so the network can process up to about 1.7 kB/s. Because the average Bitcoin transaction is around 515 bytes, the network can process up to roughly 3.2 transactions per second (note that this does not include the improvements from SegWit, discussed below).
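The throughput calculation above can be written as a small function. It assumes every block is completely full and takes 1 MB as 1,000,000 bytes, Bitcoin's pre-SegWit consensus limit; the function name is illustrative.

```python
def max_tps(block_size_bytes: int, block_interval_s: float, avg_tx_bytes: float) -> float:
    """Upper bound on throughput if every block is filled to the size limit."""
    bytes_per_second = block_size_bytes / block_interval_s
    return bytes_per_second / avg_tx_bytes


# Bitcoin (pre-SegWit): 1,000,000-byte blocks every 600 s, ~515-byte transactions
btc = max_tps(1_000_000, 600, 515)
print(f"Bitcoin: {1_000_000 / 600:.0f} B/s -> {btc:.2f} tps")  # Bitcoin: 1667 B/s -> 3.24 tps
```

Because throughput is linear in block size, passing `8_000_000` for an 8 MB block simply multiplies the result by eight, while smaller average transactions raise it further.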

One way of increasing network throughput is to increase the block size limit. Bitcoin Cash, a hard fork of the Bitcoin blockchain implemented on 1 August 2017, began life with a block size limit of 8 MB, eight times Bitcoin’s 1 MB limit. In theory this raised Bitcoin Cash throughput roughly eightfold, to around 26 tps; in practice, Bitcoin Cash transactions are currently much smaller on average than Bitcoin's, so up to 60 tps is achievable.

Another way of increasing network throughput is to reduce how much transaction data counts against the block limit. A development called Segregated Witness (SegWit) increased the number of transactions that fit into each Bitcoin block without raising the block size parameter. SegWit was introduced as a soft fork in 2017, meaning that node operators could choose whether to upgrade.

With SegWit, the block size limit is no longer measured in bytes. Instead, blocks and transactions are given a new metric called “weight”, which corresponds to the demand they place on node resources. Each byte of witness (signature) data has a weight of 1, every other byte in a block has a weight of 4, and the maximum allowed block weight is 4 million. This allows a block containing SegWit transactions to hold more data than the old 1 MB limit: the theoretical maximum rises to just under 4 MB, although with a typical mix of transactions the expected gain is around 70% more transactions per block. In reality, though, because SegWit was a soft fork, a significant share of transactions on the network still do not use it, so its full potential has yet to be realised (and may never be).
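The weight rule can be sketched as follows. This follows the BIP 141 definition (non-witness bytes count four weight units, witness bytes count one, limit 4,000,000); the helper names and the example block composition are hypothetical.

```python
MAX_BLOCK_WEIGHT = 4_000_000  # SegWit consensus limit (BIP 141)


def block_weight(non_witness_bytes: int, witness_bytes: int) -> int:
    """Non-witness bytes count 4 weight units each; witness bytes count 1."""
    return 4 * non_witness_bytes + witness_bytes


def fits(non_witness_bytes: int, witness_bytes: int) -> bool:
    return block_weight(non_witness_bytes, witness_bytes) <= MAX_BLOCK_WEIGHT


# A block with no witness data hits the limit at exactly 1,000,000 bytes, as before SegWit.
print(fits(1_000_000, 0))  # True
print(fits(1_000_001, 0))  # False

# Hypothetical mix: 600 kB of non-witness data plus 1.5 MB of witness data (a 2.1 MB block).
print(block_weight(600_000, 1_500_000), fits(600_000, 1_500_000))  # 3900000 True
```

Multiplying non-witness bytes by four keeps legacy blocks capped at 1 MB, which is what allowed SegWit to ship as a soft fork: old nodes never see a block they consider oversized.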

While data format optimisation can improve blockchain scalability incrementally in the short term, it will not provide the several orders of magnitude of performance improvement that will be required if blockchains are to become mainstream.

2. Proof of Stake (PoS)

While not in itself a scaling development, a consensus mechanism that does not rely on physical resources is a prerequisite for some of the more complex scaling solutions discussed later. The proof of work (PoW) mechanism currently used in Bitcoin and Ethereum relies on participants expending physical resources (namely electricity) for the network to securely and consistently agree on the transaction history.

Not only is this an inefficient use of resources, but it also opens up security issues when scaling solutions like sharding are implemented, because the network has no way to strip a bad actor of their power: their hash power remains as long as they pay their electricity bills.

PoS consensus mechanisms are the de facto alternative today. Here, instead of proving the use of computational power, network participants stake their wealth as a guarantee that their transaction records are accurate. In Ethereum, the PoS development known as ‘Casper’ is expected to be released in late 2018 and will be a significant upgrade to the Ethereum protocol. At a high level, Casper allows users who own ether to deposit it into a smart contract and thereby become permissioned to update the ledger.

If one or more of these bonded validators acts out of turn or attempts to update the ledger in a way that violates the state transition rules, those changes will be rejected by the broader set of stakers and the fraudulent validators will lose their entire ether deposit. Because a validator's power is its stake, this confiscation quickly eliminates bad actors. If instead validators act honestly, they earn an amount of ether commensurate with the fees in the transactions they have processed.
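The deposit, confiscation and reward cycle described above can be modelled as a toy registry. This is not Casper's actual contract or API: `ValidatorSet` and its methods are hypothetical, and stake-proportional proposer selection is an assumption added to show that stake is the source of a validator's power.

```python
import random


class ValidatorSet:
    """Toy bonded-validator registry: a deposit buys validation rights, fraud forfeits it."""

    def __init__(self) -> None:
        self.stakes: dict[str, int] = {}

    def deposit(self, validator: str, amount: int) -> None:
        # Locking ether with the (hypothetical) staking contract makes the depositor a validator.
        self.stakes[validator] = self.stakes.get(validator, 0) + amount

    def slash(self, validator: str) -> int:
        # Proven misbehaviour: confiscate the whole deposit and remove the validator.
        return self.stakes.pop(validator, 0)

    def reward(self, validator: str, fees: int) -> None:
        # Honest validators earn ether in line with the fees they processed.
        if validator in self.stakes:
            self.stakes[validator] += fees

    def pick_proposer(self, rng: random.Random) -> str:
        # Assumption: the chance of proposing the next block is proportional to stake.
        names = list(self.stakes)
        return rng.choices(names, weights=[self.stakes[n] for n in names], k=1)[0]


validators = ValidatorSet()
validators.deposit("alice", 32)
validators.deposit("bob", 64)
validators.deposit("mallory", 32)

confiscated = validators.slash("mallory")  # mallory signed an invalid state transition
validators.reward("alice", 1)
print(confiscated, validators.stakes, validators.pick_proposer(random.Random(0)))
```

Unlike PoW hash power, a slashed deposit is gone for good: once removed from `stakes`, the bad actor has no weight left in proposer selection.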

3. Sharding

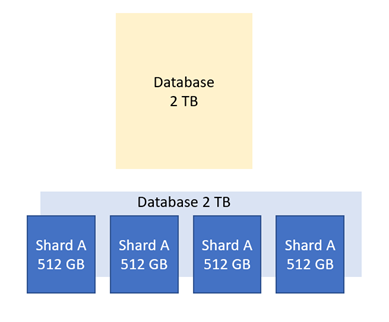

Sharding in blockchain is similar to traditional database sharding. In a traditional database, a shard is a horizontal partition of the data, with each shard stored on a separate database server instance. This spreads the load across different physical servers.

By allowing nodes and transactions to be divided into smaller groups, sharding enables more transactions to be validated at once, and nodes only need to store certain segments of the blockchain. This results in a blockchain network that no longer has a global state. Transactions are directed to different nodes depending on which shards they affect. Each shard only processes a small part of the state and does so in parallel.

The challenge is that a transaction executed on the blockchain can depend on any part of the previous state. Trustworthy communication between shards that does not open up opportunities for inter-shard attacks is difficult, but can be achieved through careful crypto-economic design (incentives). This is one of the reasons that, before sharding can be implemented on Ethereum, the protocol must be upgraded to Casper, which is based on proof-of-stake consensus.
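A minimal sketch of directing transactions to shards, assuming accounts are assigned by hashing their address modulo a hypothetical shard count of four (Ethereum's actual sharding design may assign state differently). It also flags the cross-shard case described above, where a transfer touches state held by two shards.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical shard count


def shard_of(account: str, num_shards: int = NUM_SHARDS) -> int:
    """Deterministically map an account to a shard by hashing its address."""
    digest = hashlib.sha256(account.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def route(sender: str, receiver: str) -> tuple[set[int], bool]:
    """Return the shards a transfer touches and whether it crosses shard boundaries."""
    shards = {shard_of(sender), shard_of(receiver)}
    return shards, len(shards) > 1


for tx in [("alice", "bob"), ("carol", "dave")]:
    shards, cross = route(*tx)
    print(tx, "-> shards", sorted(shards), "(cross-shard)" if cross else "(single shard)")
```

Single-shard transfers can be validated in parallel by each shard's nodes; cross-shard ones are exactly where the inter-shard communication problem arises.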

Layer 2 Scaling

4. State Channels

Unlike sharding, state-channel solutions scale the network off-chain. Two well-known state-channel solutions in development are ‘Raiden’ on Ethereum and ‘Lightning’ on Bitcoin.

Raiden will make microtransactions more feasible for Internet of Things and Machine-to-Machine applications.

The Lightning network promises to help Bitcoin become electronic cash for the masses – making it a feasible option for everyday transactions such as buying a sandwich or paying bills.

How does it work?

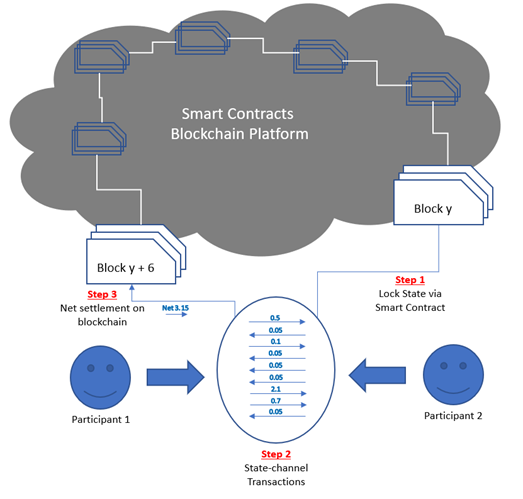

Off-chain transactions allow a collection of nodes to establish payment channels between each other to facilitate transactions, without directly transacting with the main-chain and clogging up precious capacity, be it Ethereum, Bitcoin or any other blockchain.

As an analogy, imagine you are at a bar and plan to stay there all night. If you open a new tab and close it out after paying for each round, you must sign multiple receipts and the bar incurs multiple transaction fees from their payment processor (this is why they always ask if you want to leave a tab open). If you instead keep your tab open across several rounds, you need only sign one receipt at the end and you save the bar some amount in transaction fees.

The concept is similar with state channels on a blockchain platform. Instead of creating 1,000 ledger updates, one can open a payment channel and pass 1,000 mathematical proofs, each showing that the sender can pay some number of tokens to the receiver. These proofs are verified “off-chain”, and only one of them (typically the last) is needed to close the channel. Thus, one thousand transactions can be reduced to two: one to open the channel and one to close it.
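The open, pay off-chain, close flow can be sketched as below. This is a simplified model, not Raiden's or Lightning's protocol: an HMAC over a shared key stands in for the sender's digital signature (real channels use public-key signatures so the receiver cannot forge updates), the dispute period real channels use to reject stale proofs is omitted, and `BalanceProof` and `PaymentChannel` are hypothetical names.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class BalanceProof:
    nonce: int       # increases with every off-chain payment
    paid_total: int  # cumulative amount owed to the receiver
    tag: bytes       # stand-in for the sender's signature


def _tag(key: bytes, nonce: int, paid_total: int) -> bytes:
    return hmac.new(key, f"{nonce}:{paid_total}".encode(), hashlib.sha256).digest()


class PaymentChannel:
    def __init__(self, sender_key: bytes, deposit: int) -> None:
        self.sender_key = sender_key
        self.deposit = deposit
        self.on_chain_txs = 1  # opening the channel locks the deposit on-chain
        self._nonce = 0
        self._paid = 0

    def pay(self, amount: int) -> BalanceProof:
        """Off-chain: the sender signs a new cumulative balance; nothing touches the chain."""
        if self._paid + amount > self.deposit:
            raise ValueError("payment exceeds channel deposit")
        self._nonce += 1
        self._paid += amount
        return BalanceProof(self._nonce, self._paid, _tag(self.sender_key, self._nonce, self._paid))

    def close(self, proof: BalanceProof) -> tuple[int, int]:
        """On-chain: settle with a single proof; returns (receiver payout, sender refund)."""
        if not hmac.compare_digest(_tag(self.sender_key, proof.nonce, proof.paid_total), proof.tag):
            raise ValueError("invalid balance proof")
        self.on_chain_txs += 1
        return proof.paid_total, self.deposit - proof.paid_total


channel = PaymentChannel(b"hypothetical-sender-key", deposit=1_000)
proofs = [channel.pay(1) for _ in range(1_000)]  # 1,000 off-chain payments
receiver_gets, sender_refund = channel.close(proofs[-1])
print(receiver_gets, sender_refund, channel.on_chain_txs)
```

Because each proof carries the cumulative total rather than an increment, the receiver only needs to keep the latest one, which is why a single proof suffices to close.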

Not only is transactional capacity increased with state channels, but they also provide two other very important benefits: increased speed and lower fees.

5. Relays

In distributed ledgers, the term ‘relay’ can be used to describe a wide range of scaling solutions, all of which have the common characteristic of connecting multiple blockchains together. The name for this category of solution comes from the idea of relaying data between chains.

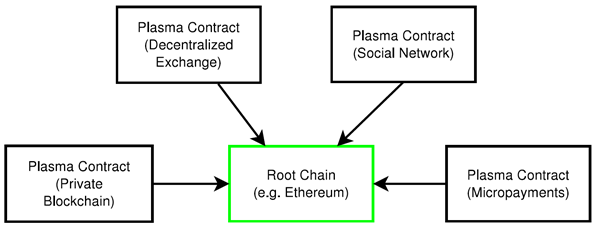

A typical architecture for blockchains connected by relays is a single main chain at the centre with multiple side chains at the periphery. Other solutions propose multiple layers branching outwards in a tree structure.

Relays allow asset transfers between chains because those assets are replicated in both environments and map 1:1. Any number of separate blockchains may be joined to the main chain to offload transactions and scale the ecosystem to a much higher degree.
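The 1:1 mapping can be illustrated with toy accounting: tokens are locked on the main chain before being minted on a side chain, and burned on the side chain before being unlocked again. This sketch assumes a single trusted object tracks both ledgers; a real relay has the main chain verify side-chain proofs, which is where the security lies. `PeggedBridge` and its methods are hypothetical.

```python
class PeggedBridge:
    """Toy two-chain ledger: tokens locked on the main chain are minted 1:1 on a side chain."""

    def __init__(self) -> None:
        self.main: dict[str, int] = {}  # main-chain balances
        self.side: dict[str, int] = {}  # side-chain balances
        self.locked = 0                 # main-chain tokens held by the bridge

    def deposit(self, user: str, amount: int) -> None:
        """Lock on the main chain, then mint the same amount on the side chain."""
        if self.main.get(user, 0) < amount:
            raise ValueError("insufficient main-chain balance")
        self.main[user] -= amount
        self.locked += amount
        self.side[user] = self.side.get(user, 0) + amount

    def side_transfer(self, sender: str, receiver: str, amount: int) -> None:
        """Happens entirely on the side chain; the main chain never sees it."""
        if self.side.get(sender, 0) < amount:
            raise ValueError("insufficient side-chain balance")
        self.side[sender] -= amount
        self.side[receiver] = self.side.get(receiver, 0) + amount

    def withdraw(self, user: str, amount: int) -> None:
        """Burn on the side chain, then unlock the same amount on the main chain."""
        if self.side.get(user, 0) < amount:
            raise ValueError("insufficient side-chain balance")
        self.side[user] -= amount
        self.locked -= amount
        self.main[user] = self.main.get(user, 0) + amount

    def is_fully_backed(self) -> bool:
        """Every side-chain token is backed by exactly one locked main-chain token."""
        return self.locked == sum(self.side.values())


bridge = PeggedBridge()
bridge.main["alice"] = 100
bridge.deposit("alice", 40)
for _ in range(10):
    bridge.side_transfer("alice", "bob", 1)  # ten transfers, zero main-chain transactions
bridge.withdraw("bob", 10)
print(bridge.main, bridge.side, bridge.is_fully_backed())
```

The ten side-chain transfers are the offloaded work: the main chain only records the deposit and the withdrawal.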

One of the main relay developments on Ethereum is known as Plasma. Plasma has been developed by Joseph Poon and Vitalik Buterin, with heavy involvement from OmiseGo, a decentralised exchange and payments platform project that relies on Plasma.

Image: Ethereum Plasma

A Plasma blockchain is a chain within a blockchain, enforced by ‘bonded fraud proofs’. The Plasma chain does not publish the contents of its blocks on the root chain; instead, only block header hashes are submitted there. If proof of fraud is submitted on the root chain, the block is rolled back and the block creator is penalised. This is very efficient, as many state updates are represented by a single hash (plus a small amount of associated data).
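How many state updates collapse into a single hash can be shown with a Merkle tree, the kind of commitment Plasma designs use. This sketch assumes a binary SHA-256 tree with Bitcoin-style duplication of the last node on odd levels and no leaf/node domain separation (a production tree would need it); the function names are illustrative. The inclusion proof is the building block a challenger or exiting user would present on the root chain; the fraud-proof game itself is not modelled.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    if len(level) % 2:  # duplicate the last node on odd-sized levels
        level = level + [level[-1]]
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list[bytes]) -> bytes:
    """Fold any number of state updates into one 32-byte commitment."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag says whether the sibling sits on the right."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    node = h(leaf)
    for sibling, sibling_is_right in proof:
        node = h(node + sibling) if sibling_is_right else h(sibling + node)
    return node == root


updates = [f"transfer #{i}".encode() for i in range(1_000)]
root = merkle_root(updates)  # the only block data posted to the root chain
proof = merkle_proof(updates, 42)
print(len(root), len(proof), verify(updates[42], proof, root))  # 32 10 True
```

A thousand updates cost the root chain 32 bytes, and any single update can later be proven against that root with just ten sibling hashes.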

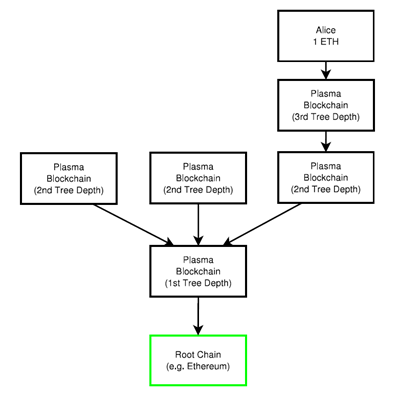

Image: Plasma Whitepaper

Plasma composes blockchains in a tree. Block commitments flow down and exits can be submitted to any parent chain, ultimately being committed to the root blockchain.

Image: Plasma Whitepaper

Plasma represents the most promising long-term scaling design for Ethereum because very large numbers of transactions can be committed on Plasma chains with minimal data hitting the root blockchain.

Cosmos is another development that proposes a multi-chain system, using Jae Kwon's Tendermint consensus algorithm.

Essentially, it describes multiple chains operating in zones, each using individual instances of Tendermint, together with a means for trust-free communication via a master hub chain. This interchain communication is limited to the transfer of digital assets (specifically tokens) rather than arbitrary information.

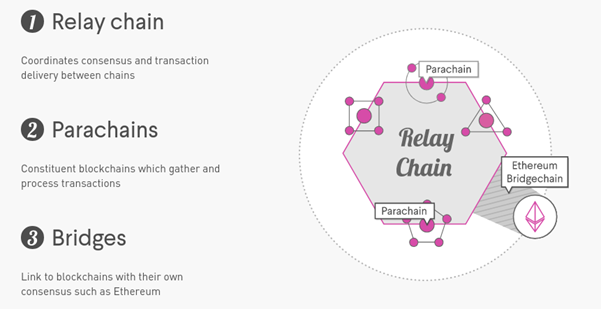

Polkadot is yet another blockchain development that will be designed on the principle of a heterogeneous multi-chain interconnected by relays.

Image: Polkadot

The Polkadot team sees the key limitation on blockchain scaling as lying in the underlying consensus architecture. The state transition mechanism (the means by which parties collate and execute transactions) has its logic fundamentally tied into the consensus/canonicalisation mechanism (the means by which parties agree on one of a number of possible, valid histories). By separating these two functions, Polkadot believes scaling limitations will be greatly alleviated.
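The separation Polkadot describes can be pictured as two independent, swappable functions. This is a hypothetical interface, not Polkadot's API: `transfer_stf` plays the role of a state transition function, and `longest_history` is a placeholder canonicalisation rule chosen only because it is simple, not because Polkadot uses it.

```python
from typing import Callable

State = dict[str, int]
Tx = tuple[str, str, int]  # (sender, receiver, amount)
History = list[Tx]


def transfer_stf(state: State, tx: Tx) -> State:
    """State transition: how a transaction is executed. Knows nothing about consensus."""
    sender, receiver, amount = tx
    if state.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    new_state = dict(state)
    new_state[sender] -= amount
    new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def longest_history(candidates: list[History]) -> History:
    """Canonicalisation: which candidate history wins. Knows nothing about transactions."""
    return max(candidates, key=len)


def run(genesis: State, candidates: list[History],
        stf: Callable[[State, Tx], State],
        choose: Callable[[list[History]], History]) -> State:
    """Compose the two pieces; either can be replaced without touching the other."""
    state = genesis
    for tx in choose(candidates):
        state = stf(state, tx)
    return state


genesis = {"alice": 10}
candidates = [
    [("alice", "bob", 3)],
    [("alice", "bob", 3), ("bob", "carol", 1)],
]
print(run(genesis, candidates, transfer_stf, longest_history))
```

Because `run` only depends on the two function signatures, many chains with different transaction logic could in principle share one canonicalisation mechanism, which is the intuition behind the claimed scaling benefit.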

Conclusion

While the various scaling solutions under development are promising, there is low visibility of time to implementation. Additionally, the complexity built into many of these solutions creates cause for concern from a security point of view.

While the task of securely scaling blockchains by 4 or 5 orders of magnitude is mammoth, the silver lining is that it is being tackled by some of the brightest minds in the world. But the jury is still out on whether this conundrum will be solved in time or whether it will be the bottleneck to blockchain mass adoption once usable distributed applications come online and regulatory issues are resolved.
