Killer Robots: Artificial Intelligence and Human Extinction

Artificial Intelligence and Human Extinction

"The development of full artificial intelligence could spell the end of the human race." -Steven Hawking

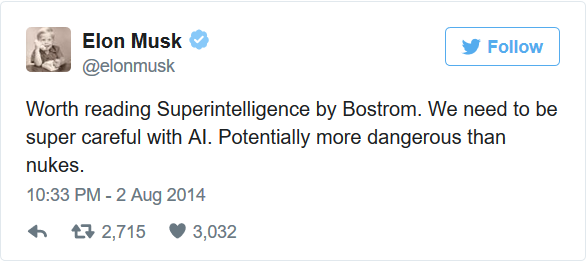

Technology has been increasing rapidly for the past century. As technology advances further, one must ask if a machine can ever exceed human-level intelligence, and if so, what the outcomes of such an event are. Undoubtedly, the development of artificial intelligence will have a large impact on every industry, government, and field of study: science, medicine, philosophy, economics, and legality. However, amidst these positive impacts, the potential repercussions from the development of artificial intelligence are severe. Due to the many problems in developing, controlling, and incentivizing a superior intelligence with recursive self-improvement, such as the dual principal-agent problem and issues in value and goal selection, a superintelligence may cause human extinction.

Definition

A few terms have to be defined clearly before this argument can be taken further. The terminology that will be used is that which David Chalmers (2010) uses in The Singularity: A Philosophical Analysis (p.11). AI will not signify any intelligent machine as it conventionally does, but will specifically signify a machine with average human level intelligence (general AI). AI+ will signify any intelligent machine that is more intelligent than the most intelligent human. “AI++ (or superintelligence) is AI of far greater than human level (say, at least as far beyond the most intelligent human as the most intelligent human is beyond a mouse)” (p. 11). Intelligence will only be based on behavior and outputs since there needs to be a way to compare AI with other things that have intelligence, such as human beings. Also, AI of all kinds should not be confused with humanoid robots; although this may be a characteristic of AI, it is not essential. AI is not dependent on how it looks, but rather, with what it is capable of.

Lets Begin!

Ever since a computer beat the world Checkers champion in 1994, intelligent machines have slowly been defeating world champions in other strategic games, such as Backgammon, Chess, Jeopardy!, and just recently, Go. (Bostrom, 2014, p. 12) Last month, millions watched the world champion of Go, Lee Sedol, lose against an artificial intelligence for the first time (Mcafee, 2016, para. 1). Experts did not expect an artificial intelligence like AlphaGo to be developed for at least another five years because of the complexity of the game (Byford, 2016, para. 1). Even Lee Sodel thought that he would win 4-1, at worst. A comparison will help illustrate this feat: within Go’s 19 by 19 board, there are more than 10720 distinct Go games for each atom that there is in the known universe (Number, 2016, para. 9). It is truly revolutionary that the artificial intelligence was able to understand this complex game well enough to beat humanity’s best player. However, this artificial intelligence is only designed to play Go, which is a narrowly defined task. There is still much development needed before a general artificial intelligence (AI)—one that can do any task to an at least human ability—can be created.

The Pathway to Superintellegence

Is human-level AI, let alone superintelligence (AI++), possible? Although this is speculative, just as nearly any analysis of the future by definition is, there are good reasons to believe that AI is possible. There are three main methods in which AIs can possibly be developed: brain emulation, artificial evolution, and synthetic approaches (Bostrom, 2014, p. 22-51). Brain emulation is replicating the human brain in a machine. Artificial evolution is reproducing and guiding evolution in a digital environment in order to create intelligence. Lastly, two main synthetic approaches are machine learning, where the machine becomes intelligent by being given content, and direct programming. Yet, these methods are not without obstacles.

Each method requires the ability to do many computations quickly. Based on observations, such as Moore’s law, computing power is the smallest obstacle for each method (except artificial evolution). Moore’s law states that the amount of transistors in a circuit doubles every two years (Moore, 1975, p. 3). This shows that technology improves at a rapid and exponential rate. Transistors have not only been increasing in number but have been getting consistently faster. According to an Intel executive, the faster transaction speeds combined with Moore’s law doubles computing power roughly every 18 months. Even though recent conference proceedings have made the future of Moore’s law uncertain, technology still progresses at a near exponential rate (Clark, 2014, p. 1). However, Bostrom notes that the method of artificial evolution still wouldn’t be possible even if Moore’s law continued for another 100 years. (2014, p. 24-7).

Neuroscience

Observations like Moore’s law highlight the rapid increase in computing power over time but do not directly say much about the advancement of software. Of course, it can be partially implied, for there is much less demand for improved computing power if software was not getting more complex (or at least was not requiring more computing power to run effectively). Whether or not this software is relevant to AI is another question. One also cannot forget the surprises and uncertainty in technology development. The possibility that the technology of the future is completely out of our comprehension or predictive capabilities cannot be ignored. For example, who would have thought that a “Maasai Warrior on a smartphone today has access to more information than President Clinton” twenty years ago (Diamandis, 2014, para. 10)? Conversely, there could be unpredictable events that could stop technology progress; we should be aware of both of these types of situations, but for our purposes, we will assume that the likelihood for either is equal or at least unpredictable and will not consider them further.

The narrowly focused AI that plays Go, AlphaGo, was not created only from the increased speed and power of computers. It learned through a software technique called neural networks, which was inspired from discoveries in neuroscience. Assuming that there are a limited amount of mechanisms that allow the brain to function as it does, breakthroughs in neuroscience continue, and those breakthroughs are convertible to AI, a brain will eventually be able to be replicated or at least emulated in a machine (Bostrom, 2014, p. 30-1). However, since the development of synthetic methods are being developed concurrently with methods inspired by neuroscience breakthroughs, an AI would probably be a hybrid that only partly resembles the human brain (p. 28).

When?

When will AI++ happen? The question is “when” and not “if” because there are countless reasons for businesses and governments to pursue artificial intelligence. A business would pursue AI because of the productivity it creates or simply to sell it as a consumer product. Specifically, research organizations would pursue it because a superintelligent machine would undoubtedly increase the amount of breakthroughs in science and medicine. In government, it may be pursued for war.

The father of computer science, Alan Turing, predicted in 1950 that by the year 2000 an AI will surpass the Turing test, meaning that the AI is indistinguishable from another human in conversation (Armstrong, 2014, p. 1). His prediction was obviously far off. More recently, futurologist Ray Kurzweil predicts in The Singularity Is Near (2005) that by 2045 AI will “surpass human beings as the smartest and most capable life forms on the Earth.” This prediction is likely too optimistic because it relies on consistent breakthroughs in neuroscience, which may not be as consistent as the improvements in technology (Chalmers, 2014, p. 13). However, Chalmers sees a 50% possibility of superintelligence developing within this century (p. 13). Based on breakthroughs in technology and neuroscience, it is likely that AI will be possible in the future.

The Pathway to Human Destruction

The possibility of AI++ begs the question of what a post-singularity world would look like and if humanity is part of it. At first, it may seem contradictory to think that something we created can destroy us, especially since the latest autonomous robots have trouble doing simple tasks, such as turn a doorknob or climbing stairs (Lohr, 2016, p. 9). However, the matter should not be put off, for it is usually easier and more cost effective to prevent a disaster than to fix one—especially in the case of AI where the disaster may be impossible to fix and humanity may only be given one chance to prevent it.

Intelligence Explosion

In 1965, I.J. Good wrote a paper about the notion of an “ultraintelligent machine” (p. 32). Good says that an ultraintelligent machine, or for our purposes AI++, develops from a simpler machine (AI+, AI, or seed AI) that is able to enhance its intelligence through recursive self-improvement. Simply, recursive improvement means that an artificial intelligence is able to alter its code in a way that would amplify its intelligence, and then as a more intelligence machine, it repeats this process ad infinitum until its physical limits are reached. Good argues that at a certain point there is an “intelligence explosion” (p. 33), where each improvement leads to significantly more improvements and so on. This is called the take-off phase and it is at the heart of artificial intelligence theory.

Recursive self-improvement makes it even more likely for AI++ to be developed because it doesn’t require humanity to directly create something more intelligent than itself. After an intelligence explosion, an AI++ would have far superior abilities compared to humans. According to Bostrom, these superhuman abilities are social manipulation, hacking, intelligence amplification, strategizing, technology research, and economic productivity (2014, p. 94). These abilities enable an AI++ to easily accomplish its goals, given that it has the required degree of freedom and information. An AI++ that is not incentivized or motivated to act in humanity’s interests will likely produce goals that are not in humanity’s favor because its values will likely be radically different from those common in humans (p. 115). If the destruction of humanity is necessary for its goal to be fulfilled, once that goal becomes known to us, it is very unlikely that we will be able to successfully win because it will only become known to us once the AI has a distinct strategic advantage (unless there are coercive ways to get the information). From this point forward, it will be assumed that the “default outcome of an intelligent explosion is existential catastrophe” (p. 127).

Amidst an intelligence explosion, “is it possible to achieve a controlled detonation?” (p. 127). Specifically, since an AI++ “can far surpass all the intellectual activities of any man however clever” (Good, 1965, p. 32), is it possible to control, motivate, or incentivize it, those pursuing it, and those building it, to do what is best for humanity? Economists consider this a series of principal-agent problems. In Bostrom’s words, a principal-agent problem “arises whenever some human entity (“the principal”) appoints another (“the agent”) to act in the former’s interest” (2014, p. 127; Laffont, 2002).

Principal-agent Problems

Principal-agent problems occur at anywhere of society. For example, there are principal-agent problems in the interactions between voters (or lobbyists) and elected officials, employers and employees, teachers and students, and parents and children. The main principal-agent problems present in the development of AI are between activists and organizations (corporate or government), organizations and developers, and developers and AI++. Specifically, three questions arise: Will organizations aim to do what the people or activists want? Will developers do what their organization wants them to do? Will the AI++ do what the developers want it to do? These questions will be considered in turn.

There are two organizations that will pursue artificial intelligence: governments and businesses. For example, the Go playing artificial intelligence, AlphaGo, was developed by a company owned by Google called Google DeepMind (Mcafee, 2016, para. 2) When individuals in an organization want to pursue AI, their intentions may get skewed and the result might not favor humanity. For example, given that those pursuing AI had the right intention to begin with and were willing to take precautionary measures when developing AI, the organizations that they form and work with may have different intentions, especially if it more cost-effective on the short term to not take precautionary measures. Bostrom gives one scenario that illustrates these problems:

"Suppose a majority of voters want their country to build some particular kind of superintelligence. They elect a candidate who promises to do their bidding, but they might find it difficult to ensure the candidate, once in power, will follow through on her campaign promise and pursue the project in the way that the voters intended. Supposing she is true to her word, she instructs her government to contract with an academic or industry consortium to carry out the work but again there are agency problems: the bureaucrats in the government department might have their own views about what should be done and may implement the project in a way that respects the letter but not the spirit of the leader’s instructions. Even if the government department does its job faithfully, the contracted scientific partners might have their own separate agendas." (p. 282)

Solutions: Can We Survive?

Principal-agent problems in relation to AI are much more problematic than other principal-agent problems because even the smallest error could threaten humanity. If it is assumed that organizations and developers will work together to do what is best for humanity, there is still one more principal-agent problem: an AI++ may not be as inclined to behave. Is AI++ controllable? The most feasible methods of controlling a superior intelligence are by incentivizing or motivating it. Incentive methods try to make it logical for a superintelligence to cooperate. Specifically, “incentive methods involve placing an agent in an environment where it finds instrumental reasons to act in ways that promote the principal’s interests” (p. 130). Motivation methods shape what the AI++ values, which affects its goals and how it wants to act. The effectiveness of these methods will be addressed respectively.

Incentives

There are many different possible incentive approaches. The three that will be briefly discussed are social integration, reward mechanisms, and multipolar scenarios. Social integration consists of the same incentives that humans react to (p. 131). However, for a superior intelligence with a decisive strategic advantage, there would not be great incentives to cooperate this way. Setting up a reward mechanism in the goal system that humans control is more promising. It may keep an AI++ in line so that it continues to get rewards. However, it may turn out to be difficult to keep the AI++ from wanting to take over the reward mechanism (which would then make the reward system useless). Bostrom notes that for a free (unboxed) AI++, “hijacking a human-governed reward system may be like taking candy from a baby” (p. 133).

Multipolar scenarios, which are scenarios of competition between different superintelligences, may be promising as well. One dilemma in multipolar scenarios, however, are getting them into place. Getting them into place would require careful orchestration and cooperation among organizations pursuing AI++ so that recursive self-improvement doesn’t take one project far ahead of another (p. 132). If such a scenario were successful, however, the society that results would be radically different from the world today and would have many potential issues. As technology becomes cheaper, most work would be able to be done by machines, which leaves little room for human potential. Bostrom argues that in such an economy, the only jobs that humans would have are ones that people prefer humans having (or if employers prefer to hire humans). However, another possible result is that wages may be able to lower to an amount that would still make it cost-effective to hire humans, assuming there is not an effective minimum wage. Even more bizarre, mind-uploading—or human immortality through brain emulation—may be a solution in a post-singularity world (p. 167).

Ethical AI

Opposed to incentive methods, motivation methods aim to program values into AI++ to make it not want to destroy humanity. One proposed way is to make an ethical AI++. Ideally, an ethical AI would act morally, just as an ideal moral agent would. The obvious problem is whether it is possible to program such a thing. Abstract values would be difficult, if not impossible, to translate directly into programming language. Bostrom notes that the most promising solutions to the value-loading problem are motivational scaffolding, value learning, emulation modulation, and institution design (p. 207-8). However, given the complexity of these methods and the scope of this post, they will not be considered further. Assuming that it is possible to instill values into an AI, Theo Lorenc (2015) notes that ethics “extrapolated to logical conclusions, may not favor human survival” (p. 194). Lorenc states,

"The challenge here — which links back up with the questions of how to programme ethical action into an AI — is that an AI can be imagined which could outperform the human, or even all of humanity collectively, on any particular domain. Any contentful system of values, then, will turn out to be not only indifferent to human extinction but to actively welcome it if it facilitates the operation of an AI which is better than humans at realizing those values." (p. 209)

Further, with respect to programming the correct moral system, Bostrom (2014) asks, “How could we choose [the system] without locking in forever the prejudices and preconceptions of the present generation?” (p. 209). Even if it is possible to escape these biases, the question still holds: what moral system should be programmed when every current moral theory has holes, a majority of experts—philosophers—don’t agree on the matter, and there is no way to prove if the theories are correct?

Conclusion: The Future of Humanity Uncertian

Although general AI is not currently within reach, it and the notion of superintelligence are not far-fetched. The near exponential advancement of technology and influence of neuroscience on computer science show that brain emulation and other pathways to AI are possible. Since the default outcome of an AI++ may be doom, one has to consider ways to a good outcome. All problems in regard to artificial intelligence can be considered principal-agent problems. These problems occur both in the development stages of superintelligence and in interactions with the superintelligence itself. In trying to control a superintelligence, the two main methods are incentive and motivational methods. However, these two methods have many flaws. Overall, the development of a superintelligent machine makes the future humanity uncertain.

References

Armstrong, S., Sotala, K., & Héigeartaigh, S. S. (2014). The errors, insights and lessons of famous AI predictions – and what they mean for the future. Journal of Experimental & Theoretical Artificial Intelligence, 26(3), 317-342.

Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford, UK: Oxford University Press.

Byford, S. (2016, March 09). Why Google's Go win is such a big deal. Retrieved April 19, 2016.

Chalmers, D. (2010). The Singularity: A Philosophical Analysis. Journal of

Consciousness Studies, 17, 17(9-10), 7-65.

Clark, D. (2015, July 17). Moore’s Law Is Showing Its Age. Wall Street Journal (Online). p. 1.

Diamandis, P. (2014, September 29). President Clinton Endorses Abundance. Retrieved April 17, 2016, from http://www.forbes.com

Good, I. J. (1965). Speculations Concerning the First Ultraintelligent Machine. Advances in Computers Advances in Computers Volume 6, 31-88.

Kurzweil, R. (2005). The singularity is near: When humans transcend biology. New York: Viking.

Laffont, J., & Martimort, D. (2002). The theory of incentives: The principal-agent model. Princeton, NJ: Princeton University Press.

Lorh, S. (2016). Civility in the age of artificial intelligence. Vital Speeches Of The Day, 82(1), 8-12.

Lorenc, T. (2015). Artificial Intelligence and the Ethics of Human Extinction. Journal of Consciousness Studies, 22, No. 9–10, 2015, Pp. 194–214, 22(9-10), 194-214. Retrieved April 17, 2016.

McAfee, A., & Brynjolfsson, E. (2016, March 16). A Computer Wins by Learning Like Humans. New York Times. p. A23.

Moore, G. (1975). Progress In Digital Integrated Electronics. Proceedings of the IEEE, 1-3

WOW! Awesome read, @ai-guy! I hope that really is your own original wording.

"Even though recent conference proceedings have made the future of Moore’s law uncertain, technology still progresses at a near exponential rate"

I guess quantum computing is right around the corner just like blockchain value is about to make an impact too. :)

Thanks!!! This is all my own wording, I can share the google docs with you if you like.

I am soooo excited (and a bit worried) to see where were going . Technology is amazing and scary

I'm glad you liked it :) (In case your confused, this is my main account)

The development of AI poses some of fascinating questions to awareness and what constitutes life in general.

Yes it does. I consider it the point where philosophical questions, specifically the questions of morality and consciousness intersect with technology and legality. It shows us all how much philosophy matters.

This is a good summary of a fascinating issue, and I hope it generates lots of discussion.

I can't resist mentioning that I have a paper forthcoming on this topic called "Superintelligence as superethical". It's due out next year in the anthology Robot Ethics 2.0 (Oxford University Press). The paper uses philosophical principles - ones Bostrom shares - to show that friendly AI++ is more likely than Bostrom suggests.

Hi @spetey, I'd love to read your Robot Ethics 2.0 when it comes out. :)

I wish this post got more attention! I recently finished Bostrom's book as well, and I'm currently working through The Master Algorithm which is an interesting read so far. There's a lot here to work through. I'm also really interested in the morality of A.I. and have a Pindex board with some stuff on that you might enjoy. Thanks for putting this all together! I think it's a really important conversation for humanity to have.

@ai-guy, what moral values should be programmed in? What about a rule regarding the general preservation of all human life if not for all life? I think if you programmed that into it then that would cover it all.

I think just programming an AI not to deal any nonreversible to the planet would be OK.

Good point, but truthfully I'm not sure.

It gets very tricky. Remember, AI will take values to their logical conclusions. If we cover all life, a rational AI may conclude that humans are very dangerous to other life forms—which we are. Oppositely, imagine if AI "generally preserved" humans. The result could be AI that over-protects humans from all risk whatsoever.

But also just look at your wording: "generally" and "preservation"

What cases does "generally" not cover? What are we trying to preserve? Life? OK here comes the over-protective robots. OK fine, lets give humans some freedom. But now there is an obvious grey area in the definition.

Please search up Bostrom's paper clip maximizer

I hope this is making some bit of sense :)

Thank you @nettijoe96 , I'll Google up Bostrom's paper clip maximizer.

Intelligent machines equaling or surpassing humanity will not be created until what we have is already understood. As intelligence isn't defined, don't expect me to hold my breath.

The chess example is a poor one. This is a matter of rote memorization and not intelligence per se. It's been shown that low IQ people can become proficient at chess, thus discounting it as a measure of intelligence.

The advancement of neuroscience and mores law doesn't necessarily correlate to reproduction of a working intelligent machine.

Define intelligence and I'll be impressed. Until then, this is just another flying car.

Good point. AI may not happen for a long time, but it is still worth discussing given the impact that it will have.

"Define intelligence and I'll be impressed."

Your right, it is extremely hard to define intelligence (both philosophically and practically). I can't do this. Yet, I truthfully don't think it matters. Yes I am using the word artificial intelligence. Please disregard this. What we are talking about are machines that can do more and more things. In fact, machines that can do things that we can't do. Whether they are intelligent or just relying on code, this doesn't seem to make a difference. Either one will impact humanity the same because I am ONLY focusing on outcomes. Let me know if that made sense