Steem Late Vote Explorer :: New Features

Steem Late Vote Explorer (SLVE) is a tool I designed to visually represent and track last-minute votes in the interests of education and abuse detection. It is built with customizability in mind and can currently stream the blockchain to show a live feed of last-minute votes, as well as save that data for visual representation at any time. For more information, please read the introductory post.

Github Repository

New Features

Added vote value accumulator for outgoing votes

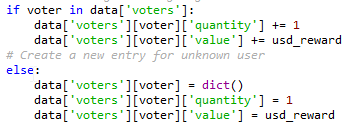

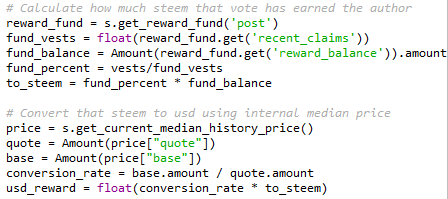

The program now keeps track of the value of outgoing votes. To implement this, I reset my old database and modified the code on lines 104 to 111 of abuse_detection_steemit.py.

That code used to just be:

    if voter in data['voters']:
        data['voters'][voter] += 1
    else:
        data['voters'][voter] = 1

Each entry in the database for an outgoing vote account now looks like this:

"profkimicor1987": {"quantity": 2, "value": 0.006941774435027}

Rather than this:

"profkimicor1987":2

The new database format also meant make_pie.py had to change, as it now gathers a different dataset.

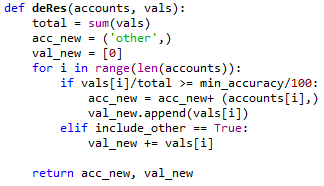

On lines 20 and 73 through to 91, you can see the addition of the value, outgoing_value and outgoing_names_value variables.

The deRes() function, which you can see being called in the code above, is also new, which brings me on to the next new feature.

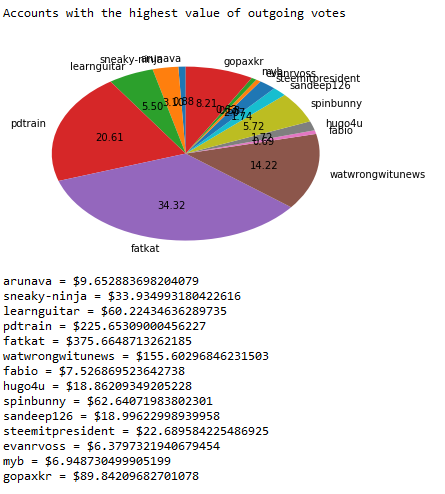

Automatic, user adjustable, exclusion of smaller abusers, anomalies and accidental LMVs

When representing the data, there were too many accounts to include all of them in a pie chart. My temporary solution was to set static minimum limits on dollar values and number of votes. However, as the dataset grew, these values had to be adjusted manually as more and more accounts passed the threshold.

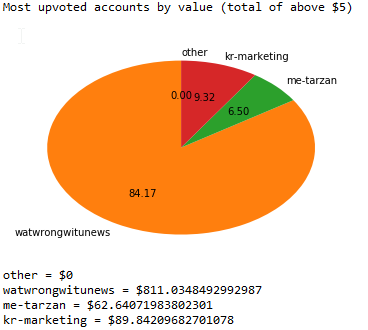

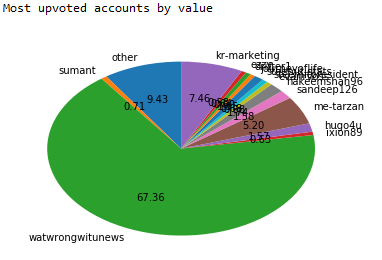

Found on lines 28 through 47 in 'make_pie.py', 'deRes()' acts as a filter for what I'm going to refer to as dust entries. It creates new tuple and list pairings for the inputted account names and their corresponding values. An item only makes it into the new list if its share of the total exceeds the cutoff implied by the user-defined 'min_accuracy', which I have set to 99.5%. Any items that don't make up more than 0.5% of the total (in this case) are added to the 'other' category if the user has 'include_other' set to true; otherwise they are disregarded. Below I have included an example of the same dataset, one with 99.5% accuracy (left), the other with 95% accuracy (right).

'include_other' is set to 'False' for both examples.

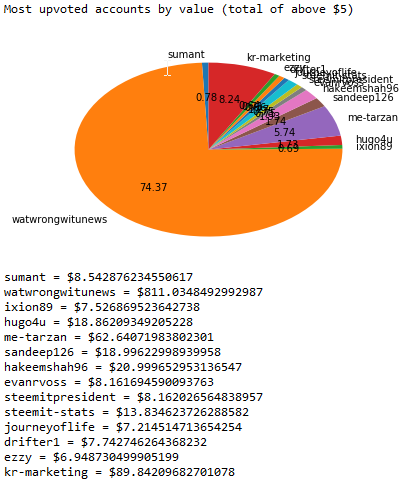
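The filtering described above can be sketched like this. It is a minimal sketch rather than the actual 'deRes()' from 'make_pie.py': the function name `filter_dust`, the return shape (two lists) and the sample accounts are assumptions, while 'min_accuracy' and 'include_other' follow the description in this post.

```python
def filter_dust(names, values, min_accuracy=99.5, include_other=False):
    """Keep entries whose share of the total exceeds (100 - min_accuracy) percent."""
    total = sum(values)
    # With min_accuracy = 99.5, an entry needs more than 0.5% of the total.
    cutoff = total * (100 - min_accuracy) / 100
    kept_names, kept_values = [], []
    other = 0.0
    for name, value in zip(names, values):
        if value > cutoff:
            kept_names.append(name)
            kept_values.append(value)
        else:
            other += value  # dust is pooled rather than charted individually
    if include_other and other > 0:
        kept_names.append('other')
        kept_values.append(other)
    return kept_names, kept_values


# Made-up sample data: four accounts and their outgoing vote values.
names = ['alice', 'bob', 'carol', 'dave']
values = [60.0, 36.0, 3.8, 0.2]
print(filter_dust(names, values))                      # drops only 'dave'
print(filter_dust(names, values, min_accuracy=95))     # drops 'carol' and 'dave'
print(filter_dust(names, values, include_other=True))  # pools 'dave' into 'other'
```

Because the cutoff is a share of the total rather than a fixed dollar value, it scales with the dataset and does not need manual adjustment as more votes are recorded.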

Exclusions list

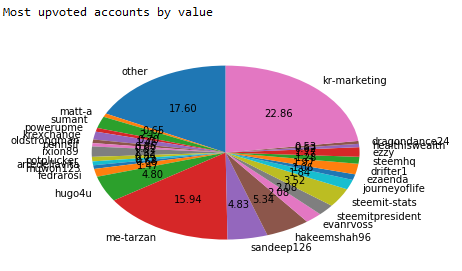

This is a small feature, but an important one nonetheless. When I first started on this project, I noticed that @busy.org was giving away lots of last-minute votes. These are awarded to people who use the #busy tag and the busy interface. These aren't really abusive votes so much as a platform rewarding its users, so I quickly built in an exclusion list for such cases. The list is declared on line 22 and is used on lines 64 and 79, as a simple if statement filter when reading the JSON file.

In this case, both pies have include_other = True set. The pie on the left has no exclusions whatsoever, and the pie on the right has 'watwrongwitunews' excluded; both have accuracy set to 99.5%.
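An exclusion filter of this kind can be sketched as below. This is an illustration only, assuming the database is a JSON object with a top-level 'voters' key holding the quantity/value records shown earlier; the function name `load_outgoing` and the busy.org figures are hypothetical.

```python
import json

# Accounts to leave out of the charts entirely.
exclusions = ['busy.org']


def load_outgoing(raw_json, exclusions):
    """Read voter records from JSON text, skipping any excluded accounts."""
    data = json.loads(raw_json)
    names, quantities, values = [], [], []
    for voter, entry in data['voters'].items():
        if voter in exclusions:
            continue  # the simple if statement filter
        names.append(voter)
        quantities.append(entry['quantity'])
        values.append(entry['value'])
    return names, quantities, values


# Made-up database contents for demonstration.
sample = (
    '{"voters": {'
    '"busy.org": {"quantity": 40, "value": 1.25}, '
    '"profkimicor1987": {"quantity": 2, "value": 0.006941774435027}'
    '}}'
)
names, quantities, values = load_outgoing(sample, exclusions)
print(names)  # ['profkimicor1987']
```

Filtering while reading means excluded accounts never reach the totals, so they cannot distort the share calculation used for the accuracy cutoff.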

New logo


After releasing the introductory post, @radudangratian reached out saying he'd be willing to make a logo for my project. We had a chat on Discord and he quickly produced some high-quality drafts. The finished product can be seen to the right and in his post here.

His work was professional and he is an excellent communicator. He managed to create exactly what I had in mind for the logo, so if you're ever in need of a project logo, send a message his way.

Bug Fixes

Incorrect vote values caused by use of the coinmarketcap API

I've since switched to the internal median price built into the Steem API, which seems to be working just fine.
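For illustration, a median price record can be turned into a single number like this. It is a sketch under assumptions: that the record has the shape `{'base': '<amount> SBD', 'quote': '<amount> STEEM'}`, and that the sample figures (which are made up) stand in for a live response. How SLVE fetches the record and applies it to vote values is not shown in this post, and `median_price` is a hypothetical helper name.

```python
def median_price(price):
    """Convert a median price record into SBD per STEEM."""
    base_amount, base_symbol = price['base'].split()
    quote_amount, quote_symbol = price['quote'].split()
    # Guard against a record in an unexpected shape or currency pair.
    if base_symbol != 'SBD' or quote_symbol != 'STEEM':
        raise ValueError('unexpected price record: %r' % (price,))
    return float(base_amount) / float(quote_amount)


# Made-up sample record, not a live API response.
sample_price = {'base': '3.250 SBD', 'quote': '1.000 STEEM'}
print(median_price(sample_price))  # 3.25
```

Relying on the chain's own median price avoids depending on a third-party price feed.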

New Roadmap

After implementing these changes, and since my initial post, my roadmap has adapted slightly. On my initial post, @stoodkev suggested that I integrate SLVE with the Steem Sincerity project. I like that idea a lot, so my next point of focus will be using the Sincerity API, at first just to pull in information, and possibly to contribute to the database at a later point in time.

I'm also looking at making a GUI so the data can be explored more manually and at a finer level of detail, as it's becoming apparent that pie charts aren't the answer to everything. In the meantime, I might add bar chart options as well, so the data can be used in as many ways as possible.

Finally, I'm hoping to allow users to pick what starting/ending blocks the data is drawn from instead of having to stream the data overnight like I did.

Discord: sisygoboom#6775


Nice additions to your project!

Good catch on the exclusion list. For Busy you probably caught our bot catching up on missed votes.


Thanks man! Yeah I was thinking it must've been a fluke of sorts since I reset my data and there was no sign of Busy at all.

Also, thanks for the feedback, I'm going to work on my git commit message etiquette :)

