Loading data into a Druid database (Part 2)

This is part two of a series of tutorials about Druid, a high-performance, scalable, distributed time-series datastore. In it we will learn how to configure a data ingestion task to load data into Druid in batch mode.

This tutorial, like the previous part, assumes the reader has basic knowledge of system administration and some experience working on the command line. If you don't yet have a local Druid instance running, please refer to the previous tutorial: Druid Database: Local Setup.

As always, questions and suggestions are welcome in the comment section. The examples are tested on macOS, but should work without changes on Linux as well. Windows users are advised to run a Linux environment in a virtual machine, since Druid does not support Windows.

Input data

For the purposes of this tutorial we will use the Wikipedia edits dataset from September 12, 2015. This example dataset ships with every Druid distribution, is located at quickstart/wikiticker-2015-09-12-sampled.json and uses a simple, human-readable JSON format, so you can inspect it with any editor or directly on the command line. Here is an example record:

{
  "time": "2015-09-12T00:46:58.771Z",
  "channel": "#en.wikipedia",
  "cityName": null,
  "comment": "added project",
  "countryIsoCode": null,
  "countryName": null,
  "isAnonymous": false,
  "isMinor": false,
  "isNew": false,
  "isRobot": false,
  "isUnpatrolled": false,
  "metroCode": null,
  "namespace": "Talk",
  "page": "Talk:Oswald Tilghman",
  "regionIsoCode": null,
  "regionName": null,
  "user": "GELongstreet",
  "delta": 36,
  "added": 36,
  "deleted": 0
}
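The sample file stores one JSON object per line (newline-delimited JSON), which is the layout Druid's JSON parser reads. If you want a quick overview of the file before loading it, here is a minimal sketch using only the Python standard library: it counts the records and how many of them contain each field name. The function name summarize_records is hypothetical and not part of Druid.

```python
import json
from collections import Counter
from typing import Iterable


def summarize_records(lines: Iterable[str]) -> tuple[int, Counter]:
    """Count records and how many records contain each field name.

    Assumes newline-delimited JSON (one complete object per line);
    blank lines are skipped.
    """
    total = 0
    field_counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        total += 1
        # Keys with null values (e.g. "cityName": null) are still counted.
        field_counts.update(record.keys())
    return total, field_counts
```

To use it, run Python from the Druid directory and pass it the open file, e.g. `with open("quickstart/wikiticker-2015-09-12-sampled.json", encoding="utf-8") as f: total, fields = summarize_records(f)`. A field whose count equals `total` is present in every record.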

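The dataset includes 16 dimensions (columns used for filtering and grouping) and five metrics (columns used for aggregation). Note that count and user_unique are not fields of the raw record: Druid computes them at ingestion time, count from the number of input rows and user_unique from the user column.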

Dimension columns:

- channel

- cityName

- comment

- countryIsoCode

- countryName

- isAnonymous

- isMinor

- isNew

- isRobot

- isUnpatrolled

- metroCode

- namespace

- page

- regionIsoCode

- regionName

- user

Metric columns:

- count

- added

- deleted

- delta

- user_unique
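Metric values come from aggregators declared in the task's metricsSpec. The Python sketch below is a toy model of what such aggregators compute for one group of input rows. The aggregator type names (count, longSum, hyperUnique) exist in Druid, but the METRICS_SPEC entries are an assumption modelled on the quickstart task, so compare them with quickstart/wikiticker-index.json. In Druid, hyperUnique keeps an approximate HyperLogLog sketch; this model swaps in an exact set of values instead.

```python
from typing import Any

# Assumed metricsSpec entries modelled on the quickstart task; the aggregator
# types are real Druid names, but verify the entries in wikiticker-index.json.
METRICS_SPEC: list[dict[str, str]] = [
    {"name": "count", "type": "count"},
    {"name": "added", "type": "longSum", "fieldName": "added"},
    {"name": "deleted", "type": "longSum", "fieldName": "deleted"},
    {"name": "delta", "type": "longSum", "fieldName": "delta"},
    {"name": "user_unique", "type": "hyperUnique", "fieldName": "user"},
]


def aggregate(rows: list[dict[str, Any]], spec: list[dict[str, str]]) -> dict[str, int]:
    """Toy model of applying metricsSpec aggregators to one group of rows."""
    result: dict[str, int] = {}
    for agg in spec:
        name, kind = agg["name"], agg["type"]
        if kind == "count":
            # Number of input rows folded into this output row.
            result[name] = len(rows)
        elif kind == "longSum":
            # Integer sum of one input field; missing or null values add 0 here.
            result[name] = sum(int(row.get(agg["fieldName"]) or 0) for row in rows)
        elif kind == "hyperUnique":
            # Druid keeps an approximate HyperLogLog sketch; an exact set of
            # non-null values stands in for it in this model.
            values = {row.get(agg["fieldName"]) for row in rows}
            values.discard(None)
            result[name] = len(values)
        else:
            raise ValueError(f"aggregator type not modelled here: {kind}")
    return result
```

For example, two edits by the same user with added values 36 and 10 aggregate to count 2, added 46 and user_unique 1.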

Loading data

To load batch data into Druid, we need to send a POST request to the Druid indexer with the task configuration, in JSON format, in the request body. Among other things, this configuration tells Druid where to find the input data. The ingestion task configuration for our sample data is defined in the ./quickstart/wikiticker-index.json file, and we can send it as the body of our request to the Druid indexer REST API.
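The options discussed below are nested objects inside the task file, addressed with dotted paths. As a rough map, here is a partial skeleton of the task written as a Python dict, filled in only with values this tutorial states, plus a small helper that resolves a dotted path. The skeleton is an illustration, not the contents of wikiticker-index.json: required sections such as ioConfig and tuningConfig, the parser type and the rollup value are left out, and get_path is a hypothetical helper.

```python
from typing import Any

# Partial skeleton limited to fields discussed in this tutorial; required
# sections such as ioConfig and tuningConfig are deliberately left out.
TASK_SKELETON: dict[str, Any] = {
    "type": "index_hadoop",  # matches the prefix of the task ID Druid returns
    "spec": {
        "dataSchema": {
            "dataSource": "wikiticker",
            "parser": {
                "parseSpec": {
                    "format": "json",
                    "timestampSpec": {"column": "time", "format": "auto"},
                    "dimensionsSpec": {
                        "dimensions": [
                            "channel", "cityName", "comment", "countryIsoCode",
                            "countryName", "isAnonymous", "isMinor", "isNew",
                            "isRobot", "isUnpatrolled", "metroCode", "namespace",
                            "page", "regionIsoCode", "regionName", "user",
                        ]
                    },
                }
            },
            "metricsSpec": [{"name": "count", "type": "count"}],  # abbreviated
            "granularitySpec": {
                "segmentGranularity": "DAY",
                "queryGranularity": "NONE",
                # rollup also lives here; its value is not given in this tutorial
            },
        }
    },
}


def get_path(task: dict[str, Any], dotted: str) -> Any:
    """Follow a dotted path such as 'spec.dataSchema.dataSource' (hypothetical helper)."""
    node: Any = task
    for key in dotted.split("."):
        node = node[key]
    return node
```

For example, `get_path(TASK_SKELETON, "spec.dataSchema.granularitySpec.segmentGranularity")` returns "DAY".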

The task configuration is very flexible and contains a multitude of options, which are explained in depth in the official documentation, so we will only explore the most important and/or tricky ones here:

- spec.dataSchema.dataSource is the name of a separate dataset, somewhat similar to the table concept in relational databases. This value is wikiticker in our example.

- spec.dataSchema.parser.parseSpec.format describes the format of the input dataset. Common values for this field are json, tsv and csv.

- spec.dataSchema.parser.parseSpec.timestampSpec defines which column of the input should be treated as the timestamp column and how to parse it. The timestamp format can be iso, millis, posix, a Joda time format, or auto (in which case Druid will try to detect the format by itself). In the example task the timestamp column is named time and its format is set to auto.

- spec.dataSchema.parser.parseSpec.dimensionsSpec.dimensions lists the names of the dimension columns in your datasource.

- spec.dataSchema.metricsSpec lists the names of the metric columns and the aggregation function to apply to each of them at ingestion time, according to the granularity specification.

- spec.dataSchema.granularitySpec.segmentGranularity configures the length of time covered by each segment (shard). In the example task it is set to DAY, which is often the best choice for this setting (refer to part 1 of this tutorial for more information about segments).

- spec.dataSchema.granularitySpec.queryGranularity specifies the finest granularity available to queries: e.g. if queryGranularity is MINUTE, you will not be able to aggregate query results into one-second buckets. In our case this setting is NONE, which means the input granularity is preserved.

- spec.dataSchema.granularitySpec.rollup: if true, Druid will aggregate data into queryGranularity intervals at ingestion time, merging rows that share the truncated timestamp and all dimension values. This saves storage at the cost of limiting query granularity. Since queryGranularity is NONE in our task, this setting has little effect: only rows with identical timestamps and identical dimension values would be merged.
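To make queryGranularity and rollup more concrete, here is a toy Python model of rollup: each timestamp is truncated to the query granularity, and rows that share the truncated timestamp and all dimension values are merged, counting rows and summing added. It only models the NONE and MINUTE granularities, and truncate and roll_up are hypothetical names; Druid's real implementation works on its own segment structures.

```python
from collections import defaultdict
from datetime import datetime
from typing import Any


def truncate(ts: str, granularity: str) -> str:
    """Truncate an ISO-8601 UTC timestamp ending in 'Z' (only NONE and MINUTE modelled)."""
    if granularity == "NONE":
        return ts  # full precision is kept
    if granularity == "MINUTE":
        dt = datetime.fromisoformat(ts.replace("Z", "+00:00"))
        return dt.replace(second=0, microsecond=0).isoformat().replace("+00:00", "Z")
    raise ValueError(f"granularity not modelled here: {granularity}")


def roll_up(
    rows: list[dict[str, Any]], dimensions: list[str], granularity: str
) -> dict[tuple, dict[str, int]]:
    """Merge rows sharing a truncated timestamp and all dimension values."""
    groups: dict[tuple, dict[str, int]] = defaultdict(lambda: {"count": 0, "added": 0})
    for row in rows:
        key = (truncate(row["time"], granularity),) + tuple(row.get(d) for d in dimensions)
        groups[key]["count"] += 1             # like a "count" aggregator
        groups[key]["added"] += row["added"]  # like a "longSum" on added
    return dict(groups)
```

With rows at 00:46:58.771, 00:46:12 and 00:47:01 on the same channel, MINUTE granularity folds the first two into one output row with count 2, while NONE keeps all three separate because no two share an exact timestamp.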

With a clear understanding of what we are doing, we can now send a request to create a new datasource in Druid. Keep in mind that you must run this command from the Druid directory, because the path to the task file is relative:

curl -X 'POST' -H 'Content-Type:application/json' -d @quickstart/wikiticker-index.json localhost:8090/druid/indexer/v1/task
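If you prefer to submit the task from a script rather than with curl, the same POST request can be built with Python's standard urllib. This is a sketch assuming the indexer listens on localhost:8090, as in the command above; build_submit_request and submit_task are hypothetical names. Building the request is kept separate from sending it, so the request can be inspected without a running Druid instance.

```python
import json
import urllib.request
from pathlib import Path

# Same endpoint as the curl command above; adjust host/port for your setup.
INDEXER_URL = "http://localhost:8090/druid/indexer/v1/task"


def build_submit_request(body: bytes, url: str = INDEXER_URL) -> urllib.request.Request:
    """Build, without sending, a POST carrying the task JSON in its body."""
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_task(spec_path: str, url: str = INDEXER_URL) -> str:
    """Send the task file and return the ID from Druid's {"task": ...} reply."""
    request = build_submit_request(Path(spec_path).read_bytes(), url)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["task"]
```

Run from the Druid directory, `submit_task("quickstart/wikiticker-index.json")` should return a task ID of the same form as the curl response.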

This will create an asynchronous task on the Druid server. The response from Druid will look like this:

{"task":"index_hadoop_wikiticker_2018-02-14T16:15:59.739Z"}

Getting task status

To inspect the task, send a GET request with its ID to the indexer API:

curl -X 'GET' localhost:8090/druid/indexer/v1/task/index_hadoop_wikiticker_2018-02-14T16:15:59.739Z
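Because the task runs asynchronously, a script usually needs to wait for it to finish. The indexer also exposes GET /druid/indexer/v1/task/<taskId>/status, whose JSON response reports the task state (RUNNING, SUCCESS or FAILED). Below is a minimal polling sketch in Python. The response layout has varied between Druid versions, so the status.status lookup is an assumption to verify against your installation; wait_for_task and http_fetch_status are hypothetical names, and the fetcher is passed in as a parameter so the loop can be exercised without a server.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Callable

TASK_API = "http://localhost:8090/druid/indexer/v1/task"
FINISHED = {"SUCCESS", "FAILED"}


def http_fetch_status(task_id: str) -> dict:
    """GET .../task/<taskId>/status and decode the JSON body."""
    # Keep ':' unescaped, as in the curl example above.
    url = f"{TASK_API}/{urllib.parse.quote(task_id, safe=':')}/status"
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def wait_for_task(
    task_id: str,
    fetch_status: Callable[[str], dict] = http_fetch_status,
    poll_seconds: float = 5.0,
    max_polls: int = 720,  # 720 polls * 5 s = one hour
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the task reports SUCCESS or FAILED; return that state."""
    for _ in range(max_polls):
        # Assumed layout: {"task": ..., "status": {"status": "RUNNING", ...}}
        state = fetch_status(task_id)["status"]["status"]
        if state in FINISHED:
            return state
        sleep(poll_seconds)
    raise TimeoutError(f"task {task_id} not finished after {max_polls} polls")
```

For the task created above, `wait_for_task("index_hadoop_wikiticker_2018-02-14T16:15:59.739Z")` blocks until the task succeeds or fails.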

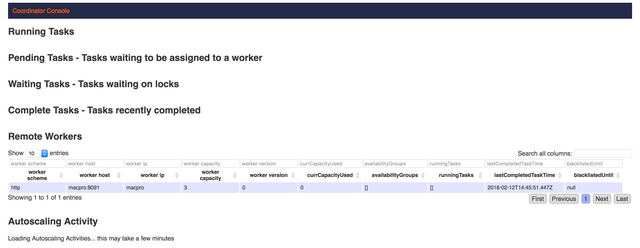

The response will contain the complete ingestion task configuration. The Druid indexing service also provides a UI that you can use to check the status of indexing tasks; by default it is exposed at http://localhost:8090/. To check the status of datasources, use the Druid console instead: http://localhost:8081/.

Summary

In this part of the tutorial we learned how to configure a data ingestion task for loading batches of data. In upcoming tutorials we will cover a number of topics:

- part three: we will learn how to query the Druid database

- part four: we will configure real-time ingestion of Avro-encoded events from Kafka into our Druid cluster using the Tranquility utility

- part five: we will visualize data in Druid with Swiv (formerly Pivot) and Superset

Posted on Utopian.io - Rewarding Open Source Contributors
