Druid Database: Local Setup

Druid is a high-performance, scalable, distributed time-series datastore. It is a niche product that can nonetheless be invaluable in many circumstances, especially when dealing with aggregations over high-frequency timestamped data. In this tutorial we will learn the common use cases for this database and the basic principles of its architecture, and describe how data is organised inside it. We will also set up a Druid database locally.

This tutorial expects the reader to have basic knowledge of system administration and some experience working on the command line. Your questions and suggestions are welcome in the comment section. The examples were tested on macOS, but should work without changes on Linux as well. Windows users are advised to use a virtual machine to emulate a Linux environment, since Druid does not support Windows.

When to use Druid

Druid is the right tool whenever you need to perform analytics on a large time-based dataset that is populated in real time. Typical cases that fit these requirements are:

- system monitoring data stream

- online advertisement data stream

- IoT events data stream

- user interaction data stream

The data is distributed across the cluster, so Druid is capable of working in a Big Data environment. In fact, the Druid developers claim that one production Druid cluster is currently handling more than 100 petabytes of data and contains more than 50 trillion events.

Druid architecture

Most classical distributed application clusters consist of identical nodes that execute exactly the same code on multiple machines. Druid is different in this respect, because it divides responsibilities between different node types. Each type of node has its own responsibilities and limited knowledge of the cluster overall. This allows for an elegant architecture with minimal dependencies between internal components. You will see the types of nodes in a typical Druid cluster later in this guide.

Druid uses segment files to store its index. You can configure the size of the time interval that corresponds to one segment file with the granularitySpec JSON object in the ingestion configuration. It is recommended to configure these parameters so that each segment file is between 300 MB and 700 MB in size. To achieve this for large time intervals you can also use the partitionsSpec JSON object.

Data in Druid is structured in columns. Here is an example data set of online advertisement data, provided by the Druid documentation:
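As a rough illustration of the two objects mentioned above, the shell sketch below prints a JSON fragment containing a granularitySpec and a partitionsSpec. The helper name print_segment_tuning and the values DAY and 5000000 are assumptions made for this example, not recommendations. In a real ingestion spec the two objects typically live in different places (granularitySpec under dataSchema, partitionsSpec under tuningConfig), and the fields available depend on the ingestion type and the Druid version.

```shell
# Hypothetical helper: prints the two segment-related fragments side by side.
# $1 = segmentGranularity (for example HOUR or DAY)
# $2 = targetPartitionSize, in rows per partition
print_segment_tuning() {
  cat <<EOF
{
  "granularitySpec": {
    "type": "uniform",
    "segmentGranularity": "$1",
    "queryGranularity": "NONE"
  },
  "partitionsSpec": {
    "type": "hashed",
    "targetPartitionSize": $2
  }
}
EOF
}

# Illustrative values only; tune them until segments land in the 300-700 MB range.
print_segment_tuning DAY 5000000
```

A coarser segmentGranularity puts more rows into each time chunk, and the partitionsSpec then splits a chunk that would otherwise grow too large.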

| timestamp | publisher | advertiser | gender | country | click | price |
|---|---|---|---|---|---|---|
| 2011-01-01T01:01:35Z | bieberfever.com | google.com | Male | USA | 0 | 0.65 |
| 2011-01-01T01:03:63Z | bieberfever.com | google.com | Male | USA | 0 | 0.62 |
| 2011-01-01T01:04:51Z | bieberfever.com | google.com | Male | USA | 1 | 0.45 |
| 2011-01-01T01:00:00Z | ultratrimfast.com | google.com | Female | UK | 0 | 0.87 |
| 2011-01-01T02:00:00Z | ultratrimfast.com | google.com | Female | UK | 0 | 0.99 |
| 2011-01-01T02:00:00Z | ultratrimfast.com | google.com | Female | UK | 1 | 1.53 |

There are three types of columns:

- Timestamp: the timestamp column is always present (although it might have a different name), as it is always the primary target for Druid queries.

- Dimensions: attributes of an event that describe a record in some manner and can be useful for filtering in queries. In the example above these are publisher, advertiser, gender and country.

- Metrics: numbers that represent some kind of numerical aggregation of the records, for example a count or an average. In the example above there are two metrics: click and price.
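To make the difference between dimensions and metrics more concrete, here is a small shell sketch that groups the sample rows by one dimension (publisher) and sums the two metrics. It only illustrates the idea with awk and says nothing about how Druid computes aggregations internally; the function names sample_events and rollup_by_publisher are made up for this example.

```shell
# The sample rows from the table above, one event per line.
sample_events() {
  cat <<'EOF'
2011-01-01T01:01:35Z bieberfever.com google.com Male USA 0 0.65
2011-01-01T01:03:63Z bieberfever.com google.com Male USA 0 0.62
2011-01-01T01:04:51Z bieberfever.com google.com Male USA 1 0.45
2011-01-01T01:00:00Z ultratrimfast.com google.com Female UK 0 0.87
2011-01-01T02:00:00Z ultratrimfast.com google.com Female UK 0 0.99
2011-01-01T02:00:00Z ultratrimfast.com google.com Female UK 1 1.53
EOF
}

# Group by the publisher dimension (field 2) and sum the click (field 6)
# and price (field 7) metrics; sort so the row order is stable.
rollup_by_publisher() {
  awk '{ clicks[$2] += $6; price[$2] += $7 }
       END { for (p in clicks) printf "%s %d %.2f\n", p, clicks[p], price[p] }' | sort
}

sample_events | rollup_by_publisher
# bieberfever.com 1 1.72
# ultratrimfast.com 1 3.39
```

The dimension decides which rows belong together, while the metrics are the numbers that get combined within each group.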

Setting up Druid locally

Zookeeper

Druid uses Apache ZooKeeper for cluster discovery and coordination, so before starting the database itself we need to start a ZooKeeper instance on our machine. A simple one-node setup is sufficient for learning purposes, and it is very easy to set up using a couple of shell commands:

curl http://www.gtlib.gatech.edu/pub/apache/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz -o zookeeper-3.4.10.tar.gz

tar -xzf zookeeper-3.4.10.tar.gz

cd zookeeper-3.4.10

cp conf/zoo_sample.cfg conf/zoo.cfg

./bin/zkServer.sh start

You can verify that the ZooKeeper node is working using the ./bin/zkCli.sh command. The output should contain a number of diagnostic messages ending with

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0]
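The port 2181 in the prompt comes from the configuration file we copied earlier. As a small sketch, the helper below reads a single setting from a ZooKeeper-style key=value file; the function name zk_conf_value is an assumption made for this example and is not part of ZooKeeper.

```shell
# Hypothetical helper: print the value of one key from a key=value config file.
# $1 = path to the config file, $2 = key name (for example clientPort)
zk_conf_value() {
  sed -n "s/^$2=//p" "$1"
}
```

Run from the zookeeper-3.4.10 directory, zk_conf_value conf/zoo.cfg clientPort should print the port the server listens on, which is 2181 in the sample configuration.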

Now you can leave the ZooKeeper console with Ctrl + D, get back to the parent directory with the cd .. command and start setting up Druid.

Druid

Download and unarchive Druid using these commands:

curl -O http://static.druid.io/artifacts/releases/druid-0.11.0-bin.tar.gz

tar -xzf druid-0.11.0-bin.tar.gz

cd druid-0.11.0

To initialise the environment, run the ./bin/init command. It will create the ./log directory and its subdirectories inside the druid-0.11.0 directory. Now we need to open five separate terminal windows and navigate to the druid-0.11.0 directory in each of them, so that we can run a different type of Druid node in each. You might see errors while starting some of the nodes. Do not worry, this is normal: they should disappear once all five nodes are up and running.

Main nodes

- Historical node

java `cat conf-quickstart/druid/historical/jvm.config | xargs` -cp "conf-quickstart/druid/_common:conf-quickstart/druid/historical:lib/*" io.druid.cli.Main server historical

This node type is at the core of a Druid cluster. It takes care of serving queries on historical segments. The Historical node is self-sufficient by design: it uses ZooKeeper to discover new segments and does not connect directly to other Druid nodes in the cluster.

- Broker Node

java `cat conf-quickstart/druid/broker/jvm.config | xargs` -cp "conf-quickstart/druid/_common:conf-quickstart/druid/broker:lib/*" io.druid.cli.Main server broker

This node is the main point of communication between a client and the Druid cluster. In a nutshell, it performs a map-reduce type of operation: its main responsibility is to divide incoming client queries into appropriate sub-queries and send those to Historical nodes. After receiving the responses it merges them together and sends the final result back to the client. Broker nodes have information about the distribution of segments across the cluster.

- Coordinator Node

java `cat conf-quickstart/druid/coordinator/jvm.config | xargs` -cp "conf-quickstart/druid/_common:conf-quickstart/druid/coordinator:lib/*" io.druid.cli.Main server coordinator

The Coordinator node performs segment maintenance: it directs Historical nodes to move segments, fetch new ones and remove old ones.
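The Broker's merge step described above can be sketched in a few lines of shell. This is only a conceptual illustration, not Druid's implementation: the two partial_* functions stand in for the answers of two hypothetical Historical nodes, each holding different segments, and merge_partials plays the role of the Broker combining them.

```shell
# Partial per-publisher click counts, as two hypothetical Historical nodes
# might return them for the segments they serve.
partial_a() { printf '%s\n' 'bieberfever.com 1' 'ultratrimfast.com 0'; }
partial_b() { printf '%s\n' 'ultratrimfast.com 1'; }

# "Broker" step: add up the partial counts that share the same key.
merge_partials() {
  awk '{ total[$1] += $2 } END { for (k in total) print k, total[k] }' | sort
}

{ partial_a; partial_b; } | merge_partials
# bieberfever.com 1
# ultratrimfast.com 1
```

Because sums can be combined in any order, each node only needs to look at its own segments, which is what makes this kind of query easy to distribute.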

Indexing service

Creating an index is a very important task for a distributed database, which is why Druid separates it into an independent service. Doing indexing in a self-sufficient service allows us to scale it separately from the main cluster, which can be much more effective in high-load environments. Communication between indexing nodes is performed through ZooKeeper, the same as in the main cluster. The indexing service has three architectural components: Overlord, Middle Manager and Peons. Since Peons run on Middle Manager nodes, we only need to start two more nodes in our local setup.

- Overlord Node

java `cat conf-quickstart/druid/overlord/jvm.config | xargs` -cp "conf-quickstart/druid/_common:conf-quickstart/druid/overlord:lib/*" io.druid.cli.Main server overlord

The Overlord node coordinates the indexing service: it distributes tasks between Middle Manager nodes, manages locks and forwards status information.

- Middle Manager Node

java `cat conf-quickstart/druid/middleManager/jvm.config | xargs` -cp "conf-quickstart/druid/_common:conf-quickstart/druid/middleManager:lib/*" io.druid.cli.Main server middleManager

The Middle Manager node runs multiple Peon processes and assigns them tasks to execute. Each Peon process runs in a separate JVM instance and can only execute one task at a time.
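The five start commands above differ only in the node type, so they can be generated from one template. The sketch below prints the commands rather than running them, which makes it easy to check them before use; the function name druid_node_cmd is an assumption made for this example, and $(...) is used as an equivalent of the backticks in the original commands.

```shell
# Hypothetical helper: print (do not run) the start command for one node type.
# $1 = node type: historical, broker, coordinator, overlord or middleManager
druid_node_cmd() {
  node="$1"
  conf="conf-quickstart/druid"
  printf 'java $(cat %s/%s/jvm.config | xargs) -cp "%s/_common:%s/%s:lib/*" io.druid.cli.Main server %s\n' \
    "$conf" "$node" "$conf" "$conf" "$node" "$node"
}

# Print the command for every node type used in this tutorial.
for n in historical broker coordinator overlord middleManager; do
  druid_node_cmd "$n"
done
```

To start a node from the druid-0.11.0 directory you could then run, for example, eval "$(druid_node_cmd historical)" in its own terminal window.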

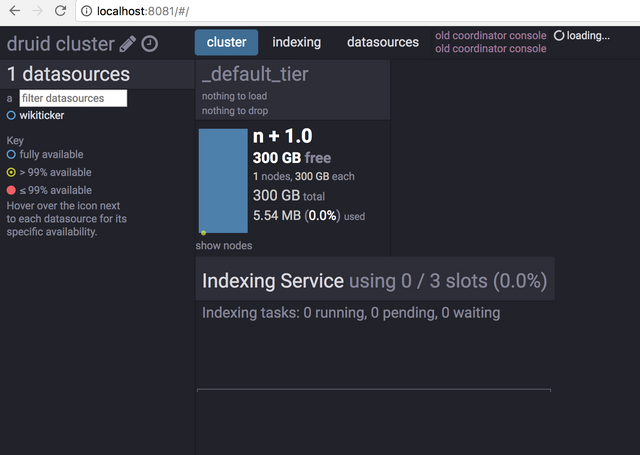

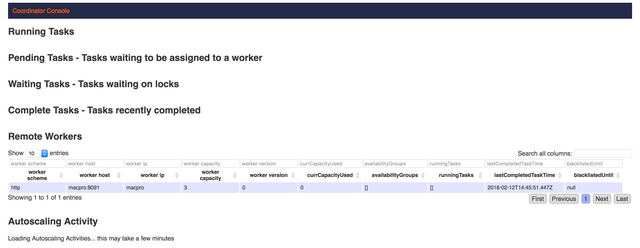

At this point our local setup of the Druid database is complete. To verify that the cluster is working, visit the Overlord (indexing service) console, which is served at http://localhost:8090, and the Coordinator console, which shows the state of the cluster, at http://localhost:8081/.
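Besides the web consoles, each node can also be checked from the command line through its HTTP status endpoint. The sketch below only builds the URLs; the port numbers are assumptions based on the quickstart configuration, so check the runtime.properties files under conf-quickstart if your setup differs. The function name druid_status_url is made up for this example.

```shell
# Hypothetical helper: map a node type to its status URL.
# Ports are assumed from the quickstart configuration.
druid_status_url() {
  case "$1" in
    coordinator)   port=8081 ;;
    broker)        port=8082 ;;
    historical)    port=8083 ;;
    overlord)      port=8090 ;;
    middleManager) port=8091 ;;
    *) echo "unknown node type: $1" >&2; return 1 ;;
  esac
  echo "http://localhost:$port/status"
}

# Example (requires the cluster to be running):
#   curl -s "$(druid_status_url broker)"
```

A node that is up should answer the curl request with a short JSON document describing itself, while a connection error means the node has not started yet.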

Summary

We have learned about the nature of the Druid database and what kind of problems it can help us solve. We also deployed a minimal Druid cluster locally, and it is ready for use.

This is the first part of the Druid tutorial. We will cover a number of topics in upcoming tutorials:

- in the second tutorial we will ingest a batch of sample data into this cluster and perform different types of queries on it

- in the third tutorial we will configure real-time ingestion of Avro-encoded events from Kafka into our Druid cluster using the Tranquility utility

- in part four we will visualise data in Druid with Swiv (formerly Pivot) and Superset

Posted on Utopian.io - Rewarding Open Source Contributors
