Search for Dark Matter - Diphoton/Ditau Channels Analysis Implementation

Repository

Introduction

This post describes my efforts to implement, using the MadAnalysis 5 framework, the dark matter search analysis described in the CERN-EP-2018-129 paper.

Supported by @steemstem and @utopian-io, the task was prepared by @lemouth as part of his experiment to involve software developers in particle physics and produce open-source applications usable for scientific research.

The paper at the center of this post describes a search for dark matter produced in association with a Higgs boson in collisions at CERN's Large Hadron Collider, the Higgs boson being identified through its decay products.

There are two decay channels considered in the paper:

- Higgs boson -> two photons (diphoton: γγ), and

- Higgs boson -> two oppositely charged tau leptons (ditau: τ+τ-).

The tau is a negatively charged lepton (its antiparticle, the τ+, is positively charged), and as such it belongs to the same class of particles as the electron and the muon: the charged leptons. Taus are heavy, and as a result they decay very quickly into lighter particles.

This decay can take several forms: leptonic decay to an electron (plus neutrinos), leptonic decay to a muon (plus neutrinos), or hadronic decay.

Of all the charged leptons, only electrons exist in vast quantities in the universe. Muons and taus can be produced in high-energy collisions, such as those created in particle accelerators, but they are unstable: the muon lives about 2.2 microseconds and the tau only about 2.9×10⁻¹³ seconds.

To put things into perspective, light can only travel around 87 micrometres during the lifetime of a tau.
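The distance follows from d = c × τ. As a quick check, here is a minimal Python calculation using the 2.9×10⁻¹³ s tau lifetime quoted above and c ≈ 2.998×10⁸ m/s:

```python
SPEED_OF_LIGHT = 2.998e8  # metres per second
TAU_LIFETIME = 2.9e-13    # seconds (tau lifetime quoted above)


def distance_in_lifetime(lifetime_s: float, speed_m_per_s: float = SPEED_OF_LIGHT) -> float:
    """Distance in metres covered at speed_m_per_s during lifetime_s."""
    return speed_m_per_s * lifetime_s


distance_um = distance_in_lifetime(TAU_LIFETIME) * 1e6  # convert metres to micrometres
print(f"{distance_um:.0f} micrometres")  # prints "87 micrometres"
```

This is the distance corresponding to the tau's proper lifetime. A tau produced at LHC energies moves close to the speed of light, and relativistic time dilation makes it travel correspondingly further in the detector before it decays.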

The paper goes on to describe how dark matter production may be inferred indirectly from a large amount of missing transverse energy measured by CMS, described below.

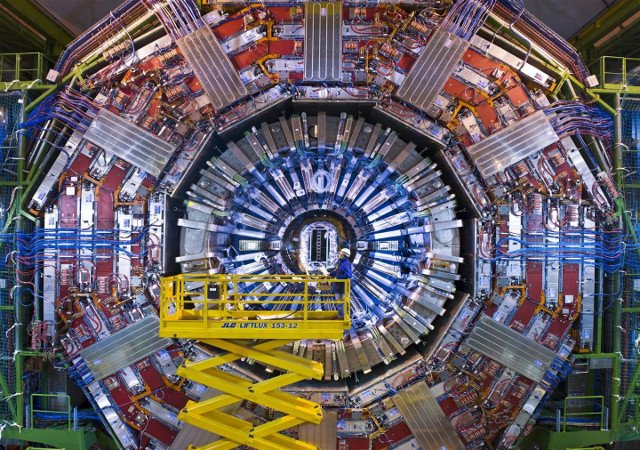

Measuring 21.6 m long and 15 m high, CMS (the Compact Muon Solenoid) is one of several detectors at CERN. It contains an enormous electromagnet capable of producing a magnetic field of 4 tesla, around 100,000 times stronger than the Earth's magnetic field.

Credits: CERN

This huge magnetic field deflects the high-energy charged particles produced in collisions in a measurable way.

Knowing the charge of a particle and the strength of the surrounding magnetic field, it is possible to derive the momentum carried by the particle from the curvature of its track: the higher the momentum, the less the track bends.
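For a track in a uniform solenoid field along the beam axis, the standard relation is p_T [GeV] ≈ 0.3 × |q| × B [T] × R [m], where R is the radius of curvature and q is the charge in units of the elementary charge. A minimal sketch, taking the 4 T field mentioned above as the default (the function name is purely illustrative):

```python
def transverse_momentum_gev(radius_m: float, field_tesla: float = 4.0, charge_e: int = 1) -> float:
    """Transverse momentum (GeV) of a charged particle whose track has
    radius of curvature radius_m in a uniform magnetic field of field_tesla.

    charge_e is the particle's charge in units of the elementary charge.
    """
    # p = q * B * R; the factor 0.2998 converts (e * T * m) into GeV/c
    return 0.2998 * abs(charge_e) * field_tesla * radius_m


# A track curving with a 1 m radius in a 4 T field carries about 1.2 GeV
print(round(transverse_momentum_gev(1.0), 2))
```

Only the momentum component perpendicular to the field bends the track, which is why a solenoid aligned with the beam measures transverse momentum directly.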

Momentum and energy are intimately related. Using conservation laws, such as the conservation of energy and momentum, scientists can compare the energy and momentum of the particles produced in a collision with those of the colliding particles just before impact.

If all the particles produced in the collision are accounted for, the detected energy should match the energy involved before the collision.

However, dark matter particles would escape detection by the instruments, which would translate into a deficit of detected energy.

At a hadron collider, the momentum carried along the beam axis by the colliding quarks and gluons is unknown, so only the momentum balance in the plane transverse to the beam can be exploited. The imbalance in that plane is the missing transverse momentum, often called missing transverse energy, pTmiss.
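In practice, pTmiss is the magnitude of minus the vector sum of the transverse momenta of all visible reconstructed objects. A simplified Python sketch, assuming each visible object is described only by its transverse momentum and azimuthal angle φ (a hypothetical input format, not the MadAnalysis 5 data model):

```python
import math
from typing import Iterable, Tuple


def missing_transverse_momentum(visible: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Return (pTmiss, phi) from the visible objects of an event.

    visible: (pt, phi) pairs, pt in GeV and phi in radians.
    pTmiss is the magnitude of minus the vector sum of the visible
    transverse momenta; phi is its azimuthal direction.
    """
    objects = list(visible)  # allow generators to be iterated twice
    px = -sum(pt * math.cos(phi) for pt, phi in objects)
    py = -sum(pt * math.sin(phi) for pt, phi in objects)
    return math.hypot(px, py), math.atan2(py, px)


# Two 50 GeV objects back to back: the event is balanced, pTmiss is ~0
print(missing_transverse_momentum([(50.0, 0.0), (50.0, math.pi)]))
# A single visible 100 GeV object: 100 GeV missing in the opposite direction
print(missing_transverse_momentum([(100.0, 0.0)]))
```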

Physicists use theoretical models to predict how much dark matter certain particle interactions would produce, and hence how much missing energy the detectors should record.

If the measured missing energy matches the prediction, this provides some evidence that the theory is correct.

Post Body

Armed with the experience acquired while working on @lemouth's previous exercises, I initially thought that implementing the analysis described in the paper would be a reasonably straightforward exercise.

I was wrong.

An initial read through the relevant sections of the paper quickly made me realize that I was going to have a hard time making sense of the information that I needed to program anything.

The γγ Decay Channel

I set out to work on the γγ (diphoton) decay channel first.

Some issues arose quickly, such as how to calculate the invariant mass of the diphoton.
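For two massless particles such as photons, the invariant mass can be written in terms of the collider variables used throughout the paper: M² = 2 pT1 pT2 [cosh(η1 − η2) − cos(φ1 − φ2)]. The sketch below is a standalone Python illustration of that formula, independent of MadAnalysis 5 (the function name is hypothetical):

```python
import math


def diphoton_invariant_mass(pt1: float, eta1: float, phi1: float,
                            pt2: float, eta2: float, phi2: float) -> float:
    """Invariant mass (GeV) of two massless particles given pT (GeV), eta and phi."""
    m_squared = 2.0 * pt1 * pt2 * (math.cosh(eta1 - eta2) - math.cos(phi1 - phi2))
    # Guard against tiny negative values caused by floating-point rounding
    return math.sqrt(max(m_squared, 0.0))


# Two 50 GeV photons at eta = 0, back to back in phi
print(diphoton_invariant_mass(50.0, 0.0, 0.0, 50.0, 0.0, math.pi))  # 100.0
```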

I was initially stuck, but then decided to recursively search the MadAnalysis 5 folder for strings such as "invariant mass".

I got lucky: the search revealed other analysis implementations in the folder that looked similar to the task at hand.

Fortunately, the C++ files of those implementations appeared to be named after the corresponding CERN research papers.

I was able to find some of these papers online and compared them to the paper I was working on.

From this I isolated some of the code that I had to replicate for my exercise.

Hopefully that won't be considered cheating! :-)

I reached the point where I was reasonably satisfied that my implementation of the diphoton channel wasn't completely out of whack, and I felt fairly confident that the τ+τ- channel would be of the same degree of difficulty (once again, I was wrong).

In summary, here are the steps that I implemented for the diphoton channel:

- Prepare a signal region named "diphoton"

- Add a histogram for low pTmiss criteria

- Add a histogram for high pTmiss criteria

- Define some cuts

- Extract signal photons from the event based on the criteria defined in the paper: pseudorapidity, transverse momentum and isolation. Signal photons are kept in a vector sorted by decreasing transverse momentum (pT), so as to check that the resulting leading and subleading photons carry enough momentum

- Apply cuts to the resulting signal photons: there must be at least 2 signal photons with enough momentum, the invariant mass must be below 65 GeV, and the azimuthal separation must be greater than 2.1

- Check the azimuthal separation with energetic jets.

- Verify two kinematic requirements on the photons that pass the cuts: the low-pTmiss and high-pTmiss requirements.

- Finally, fill the low_pTmiss and high_pTmiss histograms.
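The diphoton steps above can be sketched in standalone Python. This is a simplified illustration, not my MadAnalysis 5 code: the Photon class and function names are hypothetical, isolation and the azimuthal-separation cuts are left out, the |η| regions and the 30/20 GeV leading/subleading thresholds come from the example syntax in the conclusions, the 65 GeV mass cut comes from the list above, and the 20 GeV signal-photon threshold is an assumption.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Photon:
    pt: float   # transverse momentum in GeV
    eta: float  # pseudorapidity
    phi: float  # azimuthal angle in radians


# |eta| acceptance regions (barrel and endcap), gap in between excluded
ETA_REGIONS = [(0.0, 1.44), (1.57, 2.5)]


def in_acceptance(eta: float) -> bool:
    return any(lo <= abs(eta) <= hi for lo, hi in ETA_REGIONS)


def select_signal_photons(photons: List[Photon], min_pt: float = 20.0) -> List[Photon]:
    """Keep photons inside the eta acceptance and above min_pt (assumed),
    sorted by decreasing pT. Isolation is not modelled here."""
    selected = [p for p in photons if p.pt > min_pt and in_acceptance(p.eta)]
    return sorted(selected, key=lambda p: p.pt, reverse=True)


def diphoton_mass(a: Photon, b: Photon) -> float:
    """Invariant mass of two massless photons."""
    m2 = 2.0 * a.pt * b.pt * (math.cosh(a.eta - b.eta) - math.cos(a.phi - b.phi))
    return math.sqrt(max(m2, 0.0))


def passes_diphoton_cuts(signal: List[Photon], lead_min: float = 30.0,
                         sublead_min: float = 20.0, max_mass: float = 65.0) -> bool:
    """Leading/subleading pT thresholds and diphoton mass cut on pT-sorted photons."""
    if len(signal) < 2:
        return False
    lead, sublead = signal[0], signal[1]
    if lead.pt < lead_min or sublead.pt < sublead_min:
        return False
    return diphoton_mass(lead, sublead) < max_mass
```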

The τ+τ- Decay Channel

Whereas the diphoton channel was reasonably difficult, I found the ditau channel really obscure.

I read the relevant section of the paper many times and tried as best I could to make sense of it.

After reading the same sections over and over again, I decided to proceed with an implementation based on my best understanding of the analysis.

Here is the analysis implemented:

- Create a region selection for the ditau channel

- Add histograms for the three final states involving a hadronically decaying tau (τh): eτh, μτh and τhτh.

- Extract signal electrons meeting requirements such as minimum transverse momentum, maximum pseudorapidity and isolation criteria.

- Repeat the same for signal muons (I wrote a single function to be executed for both electrons and muons).

- Make sure that the total pTmiss is greater than 105 GeV.

- Check each signal electron / tau system for taus with enough separation, enough transverse momentum and meeting the pseudorapidity criteria, and fill the eτh histogram with the total pTmiss.

- Repeat the same for the μτh channel.

- Iterate over all taus to pair those separated by less than 0.5 radians and meeting the transverse momentum and pseudorapidity criteria. Note that I selected the best pairing based on the transverse momentum of the paired tau (highest pT). This is just a guess, as I wasn't clear about the exact process to follow.

- For each pair of taus, check that the total transverse momentum is greater than 65 GeV.

- Ensure that the invariant mass of the ditau system is below 125 GeV.

- Fill the ditau channel histogram.
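The τhτh pairing and the last three steps above can be sketched the same way. Again this is a standalone illustration under assumptions: the Tau class and function names are hypothetical, the taus are assumed to have already passed their individual pT and pseudorapidity requirements, the 0.5 separation is interpreted as ΔR = √(Δη² + Δφ²), "total transverse momentum" is taken to be the pT of the vector sum of the pair, and each tau is given the tau lepton mass of 1.777 GeV (a reconstructed hadronic tau only carries its visible decay products, so its measured mass would differ).

```python
import math
from dataclasses import dataclass
from itertools import combinations
from typing import List, Optional, Tuple


@dataclass
class Tau:
    pt: float            # transverse momentum in GeV
    eta: float           # pseudorapidity
    phi: float           # azimuthal angle in radians
    mass: float = 1.777  # GeV; assumed tau lepton mass


def delta_r(a: Tau, b: Tau) -> float:
    """Angular distance in the (eta, phi) plane, delta-phi wrapped into [-pi, pi]."""
    dphi = math.remainder(a.phi - b.phi, 2.0 * math.pi)
    return math.hypot(a.eta - b.eta, dphi)


def four_momentum(t: Tau) -> Tuple[float, float, float, float]:
    """(E, px, py, pz) in GeV built from pT, eta, phi and mass."""
    px = t.pt * math.cos(t.phi)
    py = t.pt * math.sin(t.phi)
    pz = t.pt * math.sinh(t.eta)
    energy = math.sqrt(px * px + py * py + pz * pz + t.mass * t.mass)
    return energy, px, py, pz


def pair_pt_and_mass(a: Tau, b: Tau) -> Tuple[float, float]:
    """pT of the vector sum of the pair and its invariant mass, both in GeV."""
    e, px, py, pz = (x + y for x, y in zip(four_momentum(a), four_momentum(b)))
    mass_squared = e * e - px * px - py * py - pz * pz
    return math.hypot(px, py), math.sqrt(max(mass_squared, 0.0))


def best_ditau_pair(taus: List[Tau], max_dr: float = 0.5) -> Optional[Tuple[Tau, Tau]]:
    """Pick the highest-pT pair of taus whose separation is below max_dr."""
    ordered = sorted(taus, key=lambda t: t.pt, reverse=True)
    # combinations() keeps the pT ordering, so the first pair found has the
    # highest-pT leading tau and, for it, the highest-pT partner
    for a, b in combinations(ordered, 2):
        if delta_r(a, b) < max_dr:
            return a, b
    return None


def passes_ditau_selection(taus: List[Tau], min_pair_pt: float = 65.0,
                           max_mass: float = 125.0) -> bool:
    pair = best_ditau_pair(taus)
    if pair is None:
        return False
    pair_pt, pair_mass = pair_pt_and_mass(*pair)
    return pair_pt > min_pair_pt and pair_mass < max_mass
```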

Conclusions

This analysis has made me think.

The main obstacle standing in the way of getting software developers to implement this type of analysis is not so much the programming difficulty (the coding is straightforward), but bridging the gap between two different worlds.

Particle physicists are used to speaking a language that is very obscure to anybody else. Their research papers are hardly intelligible to most people outside the scientific community.

Nevertheless, it seems to me that programmers in charge of implementing these analyses would understand the papers better if they were converted into a more accessible form, using wording and structure closer to everyday English.

With this in mind, it might benefit the scientific community to produce derived documents in simplified language, which developers in charge of translating the analyses into code could understand more easily.

Another aspect of the past exercises that struck me is that a small number of distinct logical building blocks are repeated across many analyses.

This type of analysis would therefore lend itself very well to being expressed in a higher-level descriptive syntax, which could then be translated into code by an interpreter written in a high-level language such as Python.

The advantage of the above is that the analysis could be defined precisely using a language that can be easily understood by scientists and software developers alike.

Something along the following lines:

BEGIN PHOTONS

SIGNAL =>

PT_SORTED: True

ABSETA: REGION1[0, 1.44], REGION2[1.57, 2.5]

PT: > 20

REGION1: ((Igam - PT) < (0.7 + (0.005 * PT)) && In < (1.0 + (0.04 * PT)) && iPi < 1.5)

REGION2: ((Igam - PT) < (1.0 + (0.005 * PT)) && In < (1.5 + (0.04 * PT)) && iPi < 1.2)

CUT(leading_photons_pT) => PT[0] >= 30 && PT[1] >= 20

CUT(diphoton_M) => M(0, 1) < 95

etc...

END PHOTONS

BEGIN ELECTRONS

SIGNAL =>

etc...

With a little bit of work, an interpreter could be implemented to convert the above syntax into C++ or Python code.
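As a rough illustration of how small such an interpreter could start out, here is a Python sketch that handles only the CUT statements of the example syntax. The names compile_cut and evaluate_cut are hypothetical, only the && and || operators are translated, and instead of generating C++ or Python source the sketch evaluates each cut directly against event variables (such as PT, or a function standing in for M) supplied by the caller.

```python
import re
from typing import Dict, Tuple

# Matches statements such as "CUT(leading_photons_pT) => PT[0] >= 30 && PT[1] >= 20"
CUT_STATEMENT = re.compile(r"^CUT\((\w+)\)\s*=>\s*(.+)$")


def compile_cut(line: str) -> Tuple[str, str]:
    """Split a CUT statement into its name and an equivalent Python expression."""
    match = CUT_STATEMENT.match(line.strip())
    if match is None:
        raise ValueError(f"not a CUT statement: {line!r}")
    name, expression = match.groups()
    # Map the C-style logical operators onto Python keywords
    expression = expression.replace("&&", " and ").replace("||", " or ")
    return name, expression


def evaluate_cut(expression: str, variables: Dict[str, object]) -> bool:
    """Evaluate a compiled cut against the event variables it refers to."""
    # eval() without builtins is acceptable for a sketch; a real interpreter
    # should parse the expression itself rather than rely on eval()
    return bool(eval(expression, {"__builtins__": {}}, variables))


name, expr = compile_cut("CUT(leading_photons_pT) => PT[0] >= 30 && PT[1] >= 20")
print(name, evaluate_cut(expr, {"PT": [45.0, 22.0]}))  # leading_photons_pT True
```

A production version would tokenize and parse the syntax into an abstract syntax tree, which would also make it possible to emit C++ for MadAnalysis 5 rather than only evaluating cuts in Python.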

Resources

- CERN-EP-2018-129 paper: Search for dark matter produced in association with a Higgs boson decaying to γγ or τ+τ− at √s = 13 TeV

- Particle physics on Steem - let’s start coding on the MadAnalysis 5 platform, by @lemouth.

- Can the potential of Utopian advance cutting-edge science?, by @lemouth.

- MadAnalysis 5

- tau particle on Wikipedia

MadAnalysis 5 credits:

E. Conte, B. Fuks and G. Serret,

Comput. Phys. Commun. 184 (2013) 222

http://arxiv.org/abs/1206.1599

E. Conte, B. Dumont, B. Fuks and C. Wymant,

Eur. Phys. J. C 74 (2014) 10, 3103

http://arxiv.org/abs/1405.3982

B. Dumont, B. Fuks, S. Kraml et al.,

Eur. Phys. J. C 75 (2015) 2, 56

http://arxiv.org/abs/1407.3278

This was a very impressive post, detailing some very impressive work. As a fan of physics, I was absolutely fascinated. It's pretty awesome to see this cross discipline work being facilitated by open source software. I also found your analysis of the issues preventing more of this work from being done to be cogent and convincing.

The post had some minor issues of grammar and proofreading, which detracted a bit. I could supply examples in a follow-up comment if you're interested.

Your contribution has been evaluated according to Utopian policies and guidelines, as well as a predefined set of questions pertaining to the category.


[utopian-moderator]

Hi @didic, thank you very much for your nice review.

Absolutely, I would be pleased if you could point me at the grammatical issues in my post.

Cheers.

As usual, these are examples. This is a super long text, so I'm not going to be able to provide any kind of comprehensive list.

Is two words.

Both of these sentences should have commas before "and."

Comma after "perspective."

The paper goes on.

Thanks!
