The self-driving enigma

Okay, imagine you’re driving along a three-lane highway. You start to merge into the middle lane, but you don’t notice that a car in the far lane is merging too, and you hit each other.

So here’s a question for you: Who’s at fault? Is it you, is it the other driver, or is it a case of equal blame?

You both pull over, and it turns out that the other car was a self-driving car. There’s no one inside.

When you were both merging, the car’s algorithm predicted you would see it and allow it to merge before you. So, who’s at fault now? Did you fail the algorithm, or did the algorithm fail to allow for you? Or is it still a situation of equal blame?

This isn’t a purely hypothetical situation: earlier this year, a Google self-driving car hit a bus when it failed to adjust for the bus’s speed as it entered traffic. The vehicle’s automation system was partly to blame, but in that case there was a person sitting in the self-driving car who did not override the system, because they trusted it would correct itself.

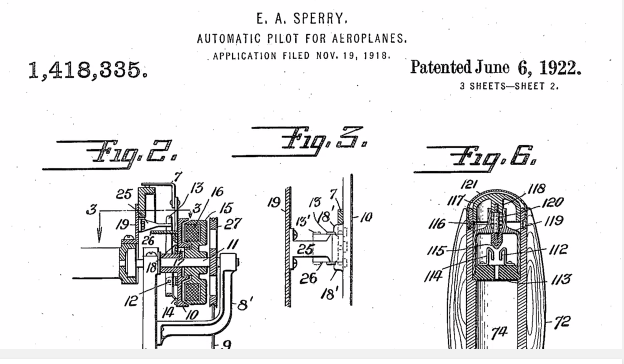

And, really, we have a lot of reasons to trust systems: automation is nothing new. In 1933, the first solo flight around the world was made possible thanks to “Mechanical Mike,” the plane’s automatic Sperry gyroscope.

Since then, flight has become more and more automated, much of it to make air travel safer. A 1991 NASA memo stated, “Human error is the dominant cause of aircraft accidents...The most important purpose automation can serve is to make the aviation system more error resistant.”

And it DOES make air travel safer. For example, the fly-by-wire system helps monitor the plane to prevent it from stalling in mid-air. But this reliance has also had unintended consequences when automated systems fail.

Take the crash of Air France Flight 447 in June 2009.

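At some point during the flight, ice blocked the plane’s airspeed sensors, the autopilot disconnected, and the fly-by-wire system fell back to a mode without its normal stall protection. The plane stalled. The crew did not recognize the stall warning, and the result was a tragic loss of life.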

Some argue that pilots have become too dependent on automated systems and are unable to safely fly a plane when that automation fails. Air France Flight 447 embodies the automation paradox.

The automation paradox says that as systems become more automated, humans lose some of their skill with the system, which results in more automation. And the paradox applies to any automated system, including cars.

We started by replacing the crank start with an electric starter in 1896. Fully automatic transmissions were available by 1940.

The ‘60s saw the adoption of power steering, and since then ABS brakes, automatic headlights, reverse cameras, parking assist and a lot more automation have been added to vehicles.

Recently, Tesla released Autopilot, a semi-autonomous system that lets cars stay in a lane, adjust their speed, change lanes and park themselves.

But in the last few months, at least two accidents and one death have been attributed to Tesla’s Autopilot feature. This has led many people, and much of the media, to question the idea of driverless cars.

Let’s look at the stats: For Tesla’s Autopilot, it’s the first fatality in 130 million miles driven. In the US, there’s one fatality every 94 million miles driven and globally, one every 60 million miles.

In New York State, driver-operated vehicles have an average of 2.4 accidents per one million miles driven, and for Google’s self-driving cars it’s 0.7 accidents per one million miles.
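The figures above use different denominators, so it can help to put them on a common scale. Here is a minimal sketch that simply rescales the numbers quoted in this post (it assumes they are accurate as stated) to fatalities per 100 million miles and compares the two New York accident rates. Keep in mind that the Autopilot figure rests on a single fatality, so the comparison is rough at best. The function name is just an illustration.

```python
# Figures as quoted in the post: miles driven per fatality.
MILES_PER_FATALITY = {
    "Tesla Autopilot": 130e6,
    "United States": 94e6,
    "Global": 60e6,
}


def fatalities_per_100m_miles(miles_per_fatality: float) -> float:
    """Convert 'one fatality every N miles' into fatalities per 100 million miles."""
    return 100e6 / miles_per_fatality


for label, miles in MILES_PER_FATALITY.items():
    rate = fatalities_per_100m_miles(miles)
    print(f"{label}: {rate:.2f} fatalities per 100M miles")

# New York State accident rates per one million miles, as quoted in the post.
human_rate = 2.4
google_rate = 0.7
ratio = human_rate / google_rate
print(f"Human drivers: {ratio:.1f}x the accident rate of Google's cars")
```

Higher numbers mean more fatalities for the same distance, so on these figures Autopilot comes out lowest, the US in the middle and the global average highest.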

The numbers suggest that self-driving cars are likely safe. But it’s questionable how close we are to a utopian vision of driverless cars as far as the eye can see. Think back to your crash at the beginning of this post.

When you were both merging, the self-driving car’s algorithm predicted you would see it and allow the car to merge before you. But you didn’t.

It raises a question where technology meets psychology: What happens when people don’t interact with technology in the way that developers expect?

Last month, Australian psychologist Narelle Haworth, an expert in road safety and psychology, said, “Technology isn’t the obstacle, psychology is, and the challenge is to understand if humans can trust autonomous machines. Will we be willing to entrust our children to self-driving machines? And will improvements in technology improve road safety in developing countries or just magnify the current inequities?”

The automation paradox is pretty logical: as systems become more automated, humans lose skill with that system, resulting in more automation.

But when you think about it, the prospect of automated cars is really nuanced: cars are our personal possessions, so the trust and ethical considerations surrounding fully automated systems become personal, too.

Will we ever get to the point where, in the developed world, self-driving cars are the norm? And will we allow ourselves to lose skills, our driving skills, to that system?

Perhaps the real obstacle lies in our own psychology.

Many skills have become useless over the centuries. Chances are future generations will no longer be able to drive, but that's not a problem. My granddad had skills which I no longer have, and having them wouldn't improve my life one iota, even though they were important in his time.

I do not see the problem with automated cars, driving people around who can no longer drive themselves.

Also, we have very little trouble trusting our lives to bus drivers, pilots, train drivers, ship captains... so why not trust our lives to programmers? Especially when we can already show that they are safer.

The problem of the interaction between technology and psychology will also become less important as more and more automated cars come into use. These automated cars will be able to communicate among themselves and will avoid crashing into each other in the scenario your post started with.

I believe Elon Musk has already stated that he expects human-driven cars to become illegal in the far future, since they will be seen as much more dangerous than the self-driving variant.

Will they ever be the norm? Yes, way sooner than anyone suspects. They will be so good that adoption will rapidly, rapidly accelerate as policymakers start to see their advantages. In more civilized, less fear- and testosterone-driven countries, they will be adopted rapidly, and eventually we'll have to follow suit.

Far far faster than anyone is ready to admit now.

Except once there are enough cars out there, they will all be quietly talking to each other, so they will know exactly what the others are going to do, and none of the cars will have the obnoxious egos that push people to show off, get aggressive, get pushy and drive dangerously.

In the end, no doubt, self-driving cars will be at fault in some collisions, but broadly speaking deaths from automobiles will drop precipitously, traffic will become more efficient and if adopted widely enough, the problem of parking will evaporate, opening up vast amounts of space for humans to live again.

:)

This was good. I have been waiting for someone to tackle this one the way you did. Nice job.

Thanks!