WILL DRIVERLESS CARS BE PROGRAMMED TO KILL YOU? – THE MORAL DILEMMAS OF AUTONOMOUS VEHICLES

It is a pretty well-known ‘secret’ by now that people of our generation might be the last in human history to have driven a car entirely by themselves. Autonomous (or simply ‘driverless’) cars will soon become a part of our lives. Companies like Google and Tesla have already announced that they are testing driverless vehicles, and it is rumored that other companies such as Audi, Nissan, BMW, Mercedes and even Apple are working on projects involving autonomous cars. Billions are being spent on developing the technology, which aims to eliminate the biggest flaw of driving: the driver.

Human error is responsible for almost 90% of accidents worldwide and leads to more than a million deaths per year. Autonomous cars are also expected to decrease traffic, time spent on the streets and air pollution. These cars will be able to analyze a huge amount of input and perform thousands of calculations every second, all of which is expected to decrease the number of fatalities on the roads by more than 80%. According to the people behind these projects, the 21st century will be the time when private vehicles, trucks, taxis, even ambulances and airplanes become completely autonomous. But as machines get ready to take over our lives, important questions arise. How will these driverless cars react when they face moral dilemmas? Who should decide how to program these vehicles to respond to such situations, and how will this decision affect the potential success and acceptance of these cars by the public?

MACHINES COULD MAKE MORAL DECISIONS EVEN MORE COMPLICATED

In most situations with today’s driver-dependent cars, morality is not really an issue that needs to be addressed. It is generally accepted that the way we respond to danger while driving is an instinctive reaction rather than the result of a deliberate thought process. If a driver swerves onto the pavement and kills a pedestrian because he didn’t want to crash into the truck that was illegally parked in front of him, he might get into some legal trouble, but no one will accuse him of premeditated murder. The situation with autonomous cars will be considerably more complex. Such a vehicle is capable of a markedly higher number of calculations than the human brain. In addition, the ‘reaction’ of the car will be based on a pre-programmed algorithm that the manufacturer has installed in the car’s system. If we assume that human input is impossible, what the car decides to do can therefore no longer be considered instinctive; it is a predictable, pre-decided action that can be altered. This leads some people to argue that, under our current legal system, a car that decides to run over a group of pedestrians to protect its passengers could even be seen as committing premeditated murder.

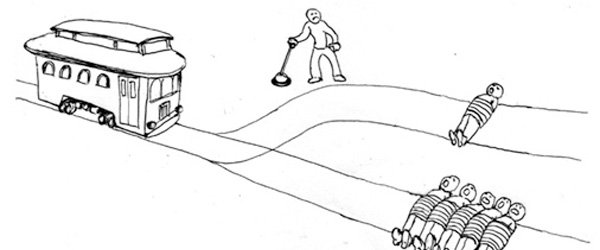

In most cases, the moral dilemmas that driverless cars could potentially have to deal with can be compared to the well-known ‘Trolley Problem’. If you are unfamiliar with it, this ethical problem is quite simple: A runaway trolley is moving along some train tracks. Not far ahead of the trolley, 5 people are tied to the tracks and are unable to move. The trolley is heading straight towards them and it will kill them. You are watching the whole incident from a safe distance and you are standing next to a lever. If you pull this lever, the trolley will be diverted to a different set of tracks. On these tracks, though, there is one person standing who is unaware of what is happening and will not have time to react if the trolley comes towards him. What do you do? Do you let the trolley continue its course and kill the 5 people, or do you pull the lever and kill just the one person?

(Source) - The Trolley Problem

HUMAN MORALITY AND THE CONTRADICTIONS

Research shows that, when faced with the Trolley Problem, the majority of people choose what we can call a ‘Utilitarian’ solution. Most decide to pull the lever and divert the trolley, saving the greatest number of lives. When people are asked about the moral dilemmas that autonomous cars could potentially face, they take a similar approach. They believe that such a car should risk the life of its passenger rather than injure or kill innocent pedestrians. More specifically, 76% of people said that they believe it is more morally correct for a driverless car to kill its single passenger than to kill 10 pedestrians.

But what happens when people are put in the place of the passenger and asked whether they would actually buy an autonomous vehicle that would rather kill them than some random individual? As you may have predicted, their reaction to this question contradicts their utilitarian and altruistic approach to the initial ethical dilemma. Most people said that they would never buy a car that prioritizes someone else’s life over their own. They simply prefer a car that is programmed to ensure their own safety, at any cost. This shows yet again that human morality is so diverse that it is impossible to establish any common, universally accepted moral principles. Let’s play a little game to test that.

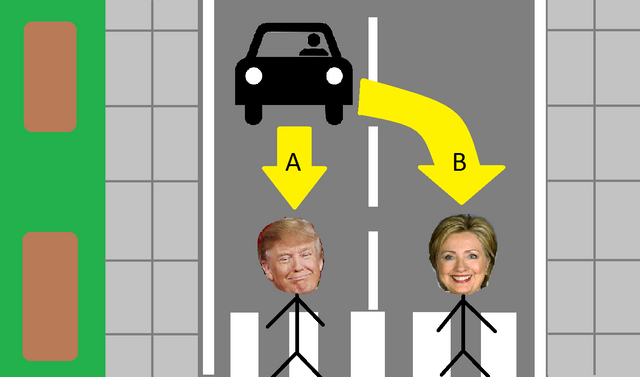

The following 4 pictures represent 4 different scenarios involving an autonomous car and a pedestrian crossing. The brakes of your car have failed and you cannot steer the car onto the pavement. The car can either remain on its current path or divert to the side lane. How would you program your car to react in each of these situations? You can put your answer in a comment so we can have a little online Steemit survey.

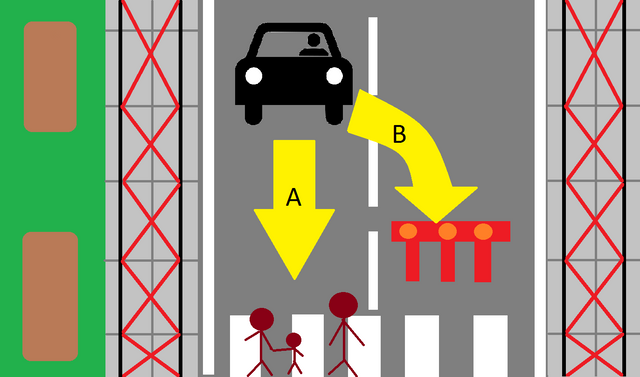

SCENARIO 1:

Would you want your car to divert and crash into the roadblock, risking your life, or continue straight and hit the pedestrians?

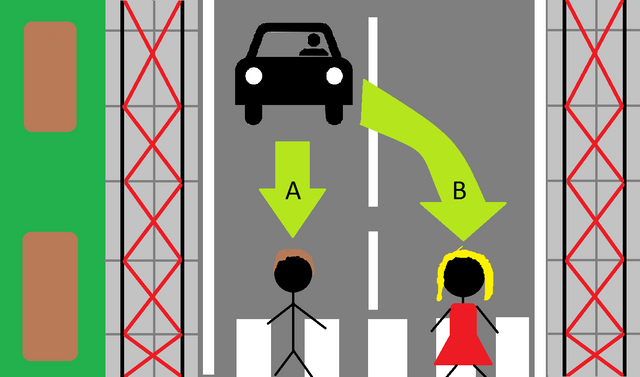

SCENARIO 2:

Who would you hit? A man or a woman?

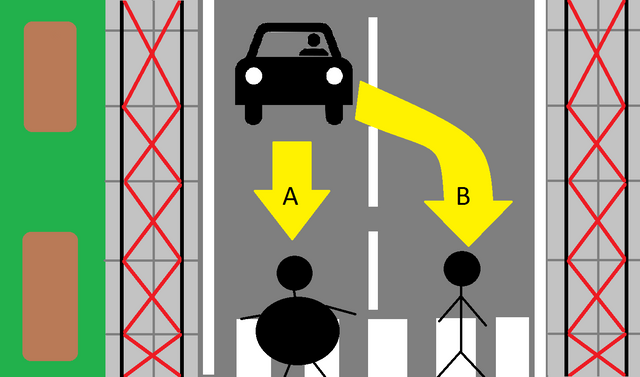

SCENARIO 3:

What if there are two men but one of them is extremely overweight?

SCENARIO 4:

What would you do in this case? (***Note: running over both is not an option!***)

HOW SHOULD OUR CARS BE PROGRAMMED?

If there is one thing we know about human morality, it is that it differs from individual to individual and is most of the time inconsistent even within the mind of a single person. As humans we can react to situations involving the same outcome in a variety of ways, depending on the conditions of each individual ethical scenario. We can therefore conclude that it will be impossible to agree on a specific moral code to install in a driverless car. Even if we assign such a task to a selected authority such as a government or the automotive companies themselves, whatever decisions they take will not be accepted by everyone, and that will put the project of driverless cars in danger.

A good example of this is another moral dilemma presented to a group of people in an MIT survey. Your car is driving in the middle lane of a highway. In front of you is a loaded truck and there are two motorcyclists, one on your left and one on your right. The motorcyclist on your left is wearing a helmet and all of his protective gear, but the motorcyclist on the right is not wearing any. Suddenly a big piece of wood from the truck’s load falls on the highway in front of you. If we assume that your car is programmed to protect you at all costs, it means that it can only divert left or right. Now if your car is programmed to divert in the direction where it will inflict less human damage, it should go to the left and hit the motorcyclist with the protective gear, as he has a higher chance of survival. But this of course raises a whole new question: Why should this motorcyclist be ‘punished’ for being smart and protecting himself against a potential accident?
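To see why a rule like "divert towards the side where you inflict less human damage" ends up targeting the careful rider, here is a minimal Python sketch of such a rule. Everything in it is an assumption made for illustration: the `Maneuver` class, the `least_harm` function and especially the harm probabilities are invented and do not come from the MIT survey or from any real vehicle software.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Maneuver:
    """One option the car could take (hypothetical model, not a real API)."""
    name: str
    # Made-up probability (0.0 to 1.0) that a person hit is seriously harmed.
    harm_probability: float
    people_at_risk: int = 1

    def expected_harm(self) -> float:
        # Expected number of people seriously harmed by this maneuver.
        return self.harm_probability * self.people_at_risk


def least_harm(options: Sequence[Maneuver]) -> Maneuver:
    """Pick the option with the lowest expected harm to others."""
    return min(options, key=lambda m: m.expected_harm())


# Illustrative numbers only: protective gear is assumed to lower the risk.
left = Maneuver("swerve left (rider with helmet)", harm_probability=0.3)
right = Maneuver("swerve right (rider without helmet)", harm_probability=0.8)

choice = least_harm([left, right])
print(choice.name)
```

With these assumed numbers the rule always picks the rider who wears protective gear, because his gear is exactly what lowers the expected harm. That is the 'punishment' the question above is pointing at.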

Luckily for all of us, such moral dilemmas will be extremely rare in real life. Autonomous cars will be able to analyze their surroundings to such a degree that danger will be ‘sensed’ well before a human could even think about it, and accidents will therefore be prevented. Additionally, cars will be able to communicate with each other and with their surroundings, creating a generally much safer driving environment. This could potentially come with some negative effects. For example, the driverless Google car was placed on the roads of a university campus. At some point it came across an extremely large group of students who were walking around it. A human driver would just drive very slowly, probably honking at the students to move out of the way. The Google car, though, decided to come to a complete stop and just wait for the group of students to leave. Such a reaction could potentially annoy a lot of people who are trying to get to their destination on time. The importance of rebuilding our whole road infrastructure, including pavements and sidewalks, is therefore obvious. Having said that, I don’t believe that autonomous cars will come with a pre-programmed moral code. They will just react to each individual situation depending on the various sensory inputs they receive, such as speed, weather and road conditions, distances and surroundings. Accidents will still occur, but the benefits of driverless cars will significantly outweigh any possible disadvantages they might have.

======================================================================

Sources:

http://science.sciencemag.org/content/352/6293/1573

http://www.scientificamerican.com/article/driverless-cars-will-face-moral-dilemmas/

======================================================================

For more articles like this but also on many other subjects, follow me @nulliusinverba

It needs to be blockchained before they attempt mass usage!

There is so much potential for driverless cars. Think of all the benefits. Of course any potential problems need to be addressed. Good and informative article.

Driverless cars are the future :) Thanks @positivesteem!

don't over think it.

I would buy a driverless car without a second thought...I just like the whole moral debate they create :)

@nulliusinverba, positively provocative.

I read all the questions and I can't choose,

There's just no good choice at all.

They've tested one of those in a city nearby and the driverless bus actually, got lost. It couldn't navigate. Ha ha - it got off way but luckily there wasn't any oncoming vehicle - but a bus driver who was among the guinea pig passengers did have to take over to bring all of the people inside it to safety.

I think it would succeed, give it a few years and it would. Perhaps, much fails and risks during the first years but car companies would keep improving it so - who knows.

If it's like a 3 year old business, tested and performing well - I'd buy. When it comes to buying a car - the first rule is safety first.

I guess the first cars to hit the market will probably be 'hybrids' in a way. Cars that will be able to drive themselves but a human driver can also take over if he wishes or if he has to. At least that will happen until the technology is perfected. But to be honest sometimes I do enjoy driving so I doubt I would want to buy a completely driverless car.

@nulliusinverba if I were Max Verstappen or the Stig I won't either.

For the love of control , speed and that adrenaline rush - ha ha

I get you there :)

But I suck at it so I'd take the convenience and safety . My hubby drives - hahaha

I hope the hubby won't feel insulted if he ever gets replaced by a Google car! :D