Pipelining and Parallel Processing

When we visit a standard factory, we notice that products are not finished in one process or at one table. A product may move from a person to a machine and back, or from a machine to a person, before it comes out finished. We call this division of labor, but what do we call the same process when it is carried out by one machine or one person? A computer processes a great deal of data before it can give us information, and the most intelligent part of the computer is the processor.

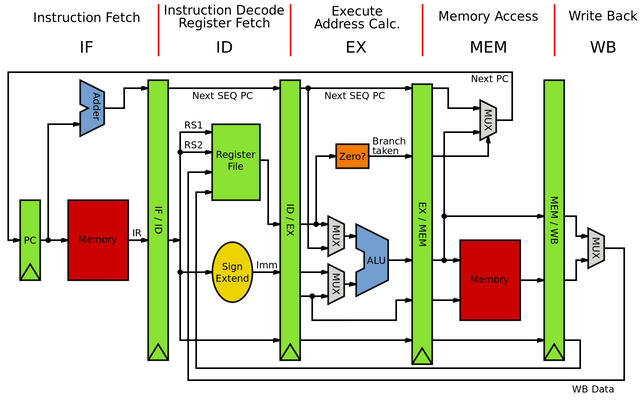

Leaving efficient computer hardware unused or underused has a real economic cost, so computer development aims not only at increasing hardware capacity but also at using that capacity efficiently. From the computer's perspective, pipelining largely answers the question in the first paragraph. At the processor level, carrying out an instruction can be divided into four stages: instruction fetch, instruction decode, instruction execute, and write back.

[arithmetic pipeline instruction cycle execution. credit: Wikimedia]

The stages listed above make up the fetch-execute cycle (also called the instruction cycle), which every computer processor performs. At a very basic level, the processor retrieves a program instruction from memory (usually main memory), works out what actions the instruction encodes, and then carries them out. In early computers these stages were executed sequentially: each instruction completed its whole cycle before the next instruction cycle began. This is not true of most modern computers, where the cycles of successive instructions are overlapped (pipelined), and work is often also shared among multiple processors or cores, which is known as parallel processing.

Instruction Fetch

As stated above, instructions are stored in computer memory. Memory is organized into locations, each identified by an address, and these locations can be accessed randomly. When the processor reads data from a location or writes data to it, the address of that location is first placed in a very small, fast register called the memory address register (MAR). The address of the next instruction is held in the program counter. The program counter (also called the instruction pointer) is incremented as instructions are fetched, so its value tracks the processor's progress through the program. To perform the fetch cycle, the processor first reads the program counter to obtain the memory address of the next instruction.

Once the address of the instruction is obtained, a trip is made to the main memory to retrieve this instruction. When this instruction is successfully retrieved, the program counter then prepares for the next fetch cycle by pointing to the next instruction.
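The fetch step can be sketched as a toy Python model. This is a minimal illustration, not how any real processor is built: memory is a plain list with one instruction per address, the program counter simply increases by one per fetch, and the class name `ToyCPU` and the instruction register `ir` are assumptions added for the example.

```python
class ToyCPU:
    """Hypothetical toy processor used only to illustrate the fetch step."""

    def __init__(self, memory: list[str]):
        self.memory = memory  # main memory: address -> instruction
        self.pc = 0           # program counter: address of the next instruction
        self.mar = None       # memory address register: address being accessed
        self.ir = None        # instruction register (assumed): the fetched instruction

    def fetch(self) -> str:
        self.mar = self.pc               # copy the program counter into the MAR
        self.ir = self.memory[self.mar]  # the "trip" to main memory
        self.pc += 1                     # point at the next instruction
        return self.ir


cpu = ToyCPU(["LOAD", "ADD", "STORE"])
print(cpu.fetch(), cpu.pc)  # LOAD 1
print(cpu.fetch(), cpu.pc)  # ADD 2
```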

Instruction Decode

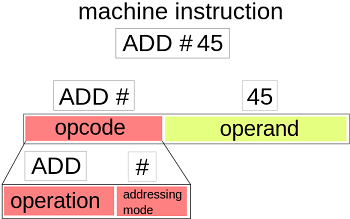


[An opcode credit: wikimedia]

Decoding an instruction, and at this stage specifically its opcode, is the job of the instruction decoder (ID). The instruction decoder can arguably be regarded as the most important part of the processor: although the computer is known for carrying out many calculations, these are performed by just one section of the processor, the arithmetic logic unit (ALU), while the main function of the processor as a whole is to carry out instructions.

No matter how brilliant a student is, he or she cannot pass an examination without reading and understanding the instructions that are always given before the actual questions. The instruction decoder is a combinational circuit made of logic gates (implemented using transistors) with many inputs. These gates take the opcode, in binary form, as input and perform logic operations that activate the correct procedure for the instruction. Opcode is short for operation code; it is the part of the instruction that specifies which operation is to be carried out.
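To make the idea of a decoder built from logic gates more concrete, here is a hedged Python sketch of a 2-to-4 line decoder, a combinational circuit in which exactly one output line becomes active for each opcode. The 8-bit instruction format (two opcode bits, six operand bits) and the mapping from output lines to operations are hypothetical, chosen only for illustration.

```python
# Basic gates, working on single bits (0 or 1).
def NOT(a: int) -> int:
    return 1 - a


def AND(a: int, b: int) -> int:
    return a & b


def decode_opcode(b1: int, b0: int) -> list[int]:
    """2-to-4 decoder: exactly one output line is 1 for each 2-bit opcode."""
    return [
        AND(NOT(b1), NOT(b0)),  # line 0 <- opcode 00
        AND(NOT(b1), b0),       # line 1 <- opcode 01
        AND(b1, NOT(b0)),       # line 2 <- opcode 10
        AND(b1, b0),            # line 3 <- opcode 11
    ]


# Hypothetical mapping of output lines to the operations they enable.
OPERATIONS = ["LOAD", "ADD", "SUB", "STORE"]


def decode(instruction: int) -> tuple[str, int]:
    """Decode an 8-bit word: bits 7-6 are the opcode, bits 5-0 the operand."""
    b1 = (instruction >> 7) & 1
    b0 = (instruction >> 6) & 1
    lines = decode_opcode(b1, b0)
    operation = OPERATIONS[lines.index(1)]  # the single active line
    operand = instruction & 0b111111
    return operation, operand


print(decode_opcode(1, 0))   # [0, 0, 1, 0]
print(decode(0b01_000101))   # ('ADD', 5)
```

A real decoder drives control lines directly rather than looking up a name, but the one-hot output is the same idea.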

Instruction Execute

Once the instruction is decoded, the control unit in the central processing unit sends control signals to the parts of the CPU that must act, and those parts carry out the operation the instruction specifies. If the decoded instruction requires an arithmetic or logic operation, the control unit sends control signals to the arithmetic logic unit (ALU), which performs it.
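The hand-off from the control unit to the ALU might be sketched like this. It assumes a hypothetical machine with 8-bit registers named `R0`, `R1`, and so on; the functions `alu` and `execute` are illustrative names, and the "control signal" is simply the operation's name rather than a real set of control lines.

```python
# Hypothetical ALU: each control signal selects one operation.
ALU_OPS = {
    "ADD": lambda a, b: a + b,
    "SUB": lambda a, b: a - b,
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
}


def alu(control_signal: str, a: int, b: int) -> int:
    """Perform the selected operation and keep the low 8 bits, like an 8-bit register."""
    return ALU_OPS[control_signal](a, b) & 0xFF


def execute(operation: str, registers: dict[str, int], dst: str, src: str) -> int:
    """Control unit (simplified): route arithmetic/logic operations to the ALU."""
    if operation not in ALU_OPS:
        raise NotImplementedError(f"{operation} is not an ALU operation in this sketch")
    return alu(operation, registers[dst], registers[src])


regs = {"R0": 200, "R1": 100}
print(execute("ADD", regs, "R0", "R1"))  # 44  (300 does not fit in 8 bits: 300 - 256)
print(execute("SUB", regs, "R0", "R1"))  # 100
```

The result is only returned here; storing it is left to the write-back stage.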

From the user's point of view, the execute cycle is the only one that does visible work; all the other cycles exist to make this one, the execute cycle, possible.

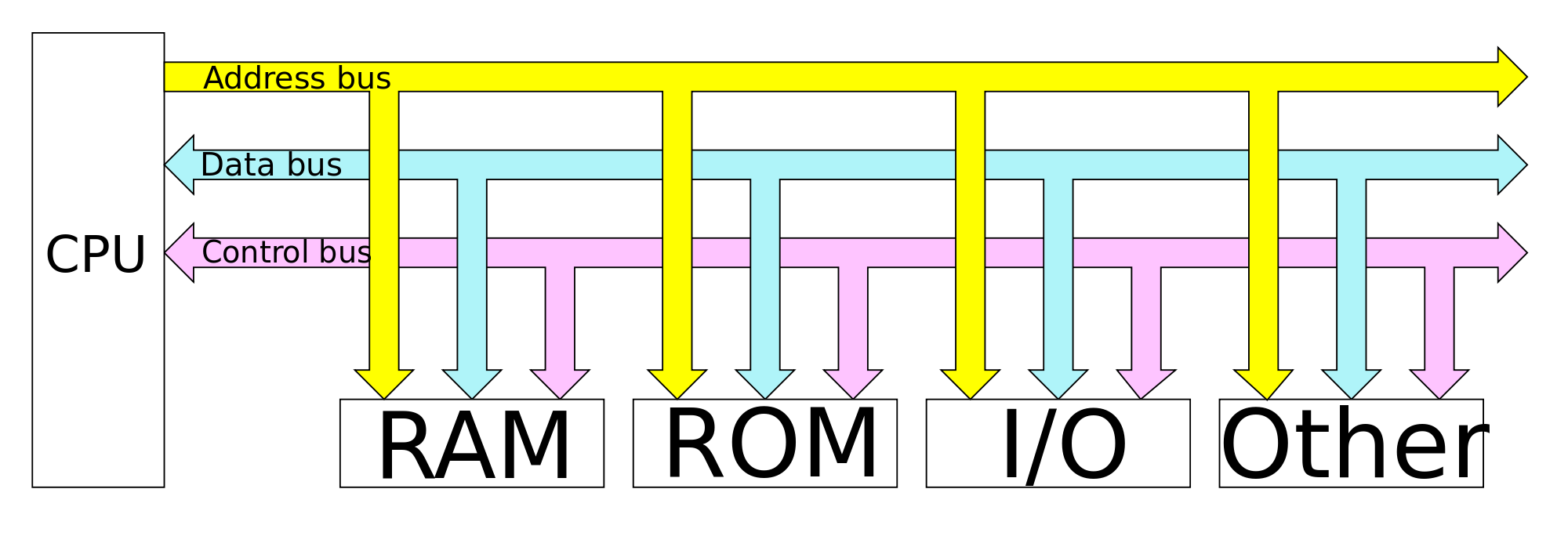

Write Back

During or at the end of the execute cycle, the result is written back to wherever the instruction requires: a processor register, main memory, or another form of storage. Data travels in and out of the processor over a bus, and the CPU uses three basic types of bus when moving information to and from it: the address bus, the data bus, and the control bus.

[credit: wikimedia]

The bus also largely determines how fast information can be transferred. When we copy files from a PC to two different external hard drives, we notice that the transfer speed to the first drive drops once we start copying to the second. This is because the bus used for the transfers has a limited capacity, which is shared among the different operations.

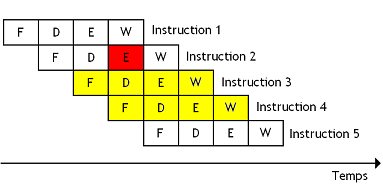
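The write-back step itself can be sketched in a few lines. This is a minimal illustration that assumes a hypothetical destination encoding, `("reg", n)` for register n and `("mem", address)` for a memory location; real instruction sets encode destinations differently.

```python
def write_back(result: int, destination: tuple[str, int],
               registers: list[int], memory: list[int]) -> None:
    """Store an executed result where the (hypothetical) destination says it goes."""
    kind, index = destination
    if kind == "reg":
        registers[index] = result   # e.g. ADD R0, R1: result goes to R0
    elif kind == "mem":
        memory[index] = result      # e.g. a store: result goes to memory
    else:
        raise ValueError(f"unknown destination {kind!r}")


registers = [0] * 4
memory = [0] * 8
write_back(44, ("reg", 0), registers, memory)
write_back(7, ("mem", 3), registers, memory)
print(registers, memory)  # [44, 0, 0, 0] [0, 0, 0, 7, 0, 0, 0, 0]
```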

Overlapping Operations

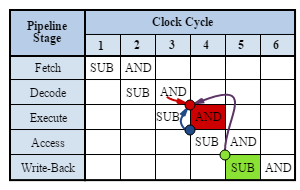

Early computers performed the above cycles sequentially: an instruction was fetched from memory, decoded, executed, and its result written back, and only then did the cycle repeat for the next instruction. Meanwhile, other instructions waited in temporary storage called buffers. In other words, an instruction being processed had to complete before the cycle for another instruction could start.
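The cost of sequential execution can be counted with a short Python sketch. It assumes, for simplicity, that each of the four stages takes exactly one clock cycle, so n instructions need n × 4 cycles when no overlap is allowed.

```python
STAGES = ["IF", "ID", "EX", "WB"]  # fetch, decode, execute, write back


def sequential_schedule(n_instructions: int) -> list[tuple[int, int, str]]:
    """Return (cycle, instruction, stage) triples when instructions run one at a time."""
    schedule = []
    cycle = 1
    for instr in range(1, n_instructions + 1):
        for stage in STAGES:
            schedule.append((cycle, instr, stage))
            cycle += 1  # nothing else can start until this stage finishes
    return schedule


sched = sequential_schedule(3)
print(len(sched))  # 12 cycles = 3 instructions x 4 stages
print(sched[4])    # (5, 2, 'IF'): instruction 2 is fetched only after 1 finishes
```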

The problem with sequential execution is that each of these distinct operations needs its own hardware, and because the units take turns, most of that hardware sits idle for most of the cycle.
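The idle-hardware problem can be quantified with a similarly simplified sketch, again assuming one clock cycle per stage: under sequential execution, each stage's hardware is busy for only one cycle out of every four.

```python
from collections import Counter

STAGES = ["IF", "ID", "EX", "WB"]


def stage_utilization(n_instructions: int) -> dict[str, float]:
    """Fraction of clock cycles each stage's hardware is busy under sequential execution."""
    total_cycles = n_instructions * len(STAGES)  # one cycle per stage, no overlap
    busy = Counter()
    for _ in range(n_instructions):
        for stage in STAGES:
            busy[stage] += 1  # this unit works for one cycle, then waits
    return {stage: busy[stage] / total_cycles for stage in STAGES}


print(stage_utilization(10))  # {'IF': 0.25, 'ID': 0.25, 'EX': 0.25, 'WB': 0.25}
```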


[Original image available at wikimedia]
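Since the overlapping itself is shown only in the figure, here is a hedged sketch that prints an idealized pipeline space-time diagram. It assumes one clock cycle per stage and no stalls; under those assumptions, n instructions finish in k + n - 1 cycles on a k-stage pipeline instead of n × k.

```python
STAGES = ["IF", "ID", "EX", "WB"]


def pipeline_diagram(n_instructions: int) -> list[str]:
    """Rows of an ideal pipeline space-time diagram (one cycle per stage, no stalls)."""
    k = len(STAGES)
    total_cycles = k + n_instructions - 1
    rows = []
    for i in range(n_instructions):
        cells = ["  "] * total_cycles
        for s, stage in enumerate(STAGES):
            cells[i + s] = stage  # instruction i occupies stage s during cycle i + s
        rows.append(f"I{i + 1}: " + " ".join(cells))
    return rows


for row in pipeline_diagram(3):
    print(row)
# I1: IF ID EX WB
# I2:    IF ID EX WB
# I3:       IF ID EX WB

n, k = 100, len(STAGES)
print(n * k, k + n - 1)  # 400 103  (sequential vs. pipelined cycle counts)
```

Once the pipeline is full, one instruction completes every cycle, so for long instruction streams the ideal speedup approaches the number of stages.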

Problems associated with pipelining

As effective as pipelining may sound, three main problems are encountered in the course of designing a good pipeline for a processor: the fetch problem, the bottleneck problem, and the issuing problem.

Starting with the fetch problem, supplying instructions to the processor quickly enough is somewhat costly, because the extra hardware it requires increases the chip area.


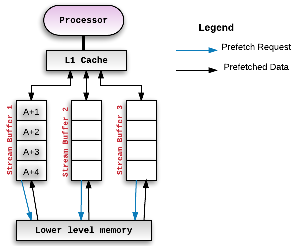

[prefetching instructions to processor buffer credit: wikipedia creative commons]

At some points in its operation the pipeline is drained of instructions and must be refilled, for instance after a pause in the instruction cycle, usually caused by an interrupt. The time spent refilling the pipeline can be reduced by loading instructions into a buffer ahead of their execution. An effective prefetch algorithm that loads the needed instructions beforehand largely solves this fetch problem.
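A prefetch buffer can be sketched as a first-in, first-out queue that is topped up ahead of execution and refilled immediately after a flush. The class `PrefetchUnit`, its `depth` parameter, and the simple sequential refill policy are hypothetical; real prefetch algorithms (for example, ones that follow predicted branches) are considerably more involved.

```python
from collections import deque


class PrefetchUnit:
    """Hypothetical instruction prefetch buffer (a FIFO queue)."""

    def __init__(self, program: list[str], depth: int = 4):
        self.program = program
        self.depth = depth     # how many instructions the buffer can hold
        self.next_addr = 0     # next address to prefetch
        self.buffer = deque()

    def refill(self) -> None:
        """Load instructions ahead of execution until the buffer is full."""
        while len(self.buffer) < self.depth and self.next_addr < len(self.program):
            self.buffer.append(self.program[self.next_addr])
            self.next_addr += 1

    def next_instruction(self) -> str:
        if not self.buffer:
            self.refill()      # empty buffer: the fetch stage has to wait
        instr = self.buffer.popleft()  # raises IndexError if the program is exhausted
        self.refill()          # top the buffer up again
        return instr

    def flush(self, new_addr: int) -> None:
        """Drain the buffer (e.g. on an interrupt) and restart fetching elsewhere."""
        self.buffer.clear()
        self.next_addr = new_addr
        self.refill()          # refill at once so the pipeline is not starved


pf = PrefetchUnit(["I0", "I1", "I2", "I3", "I4", "I5"], depth=2)
pf.refill()
print(pf.next_instruction())  # I0
print(list(pf.buffer))        # ['I1', 'I2']
pf.flush(4)
print(list(pf.buffer))        # ['I4', 'I5']
```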

As the name implies, the bottleneck problem arises when too much work is assigned to one stage of the cycle relative to the others, increasing the time that stage needs to complete. Because the stages advance together, the other stages must wait for the delayed stage, creating a bottleneck in the execution cycle.

One solution is to divide the stage in question further. Another is to create copies of the stage in the pipeline.
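The effect of a bottleneck stage, and of the two fixes just described, can be estimated with simple arithmetic. This sketch assumes hypothetical stage delays in nanoseconds, a clock period set by the slowest stage, and no overhead for pipeline registers or for steering instructions between copies of a stage.

```python
def clock_period(stage_delays_ns: list[float]) -> float:
    """A synchronous pipeline can only advance as fast as its slowest stage."""
    return max(stage_delays_ns)


# Hypothetical stage delays in nanoseconds; EX is the bottleneck.
original = {"IF": 2, "ID": 2, "EX": 6, "WB": 2}
print(clock_period(list(original.values())))  # 6

# Fix 1: split EX into three 2 ns sub-stages.
split = {"IF": 2, "ID": 2, "EX1": 2, "EX2": 2, "EX3": 2, "WB": 2}
print(clock_period(list(split.values())))  # 2

# Fix 2: two copies of EX used alternately. Each copy still needs 6 ns per
# instruction, but together they accept a new instruction every 3 ns.
copies = 2
effective_ex = original["EX"] / copies
print(clock_period([2, 2, effective_ex, 2]))  # 3.0
```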

The issuing problem occurs when an instruction is available but cannot be issued for execution; we then say that the instruction has a hazard. Hazards prevent instructions from being issued and can be classified into three types: structural hazards, data hazards, and control hazards.
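Of the three hazard types, data hazards are the easiest to illustrate. The sketch below flags read-after-write dependencies, where an instruction reads a register that a recent instruction has not yet written back. The `Instr` tuple and the `window` of two instructions are assumptions standing in for a real pipeline's timing, and structural and control hazards are not modeled.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    op: str
    dest: str              # register written
    srcs: tuple[str, ...]  # registers read


def raw_hazards(program: list[Instr], window: int = 2) -> list[tuple[int, int, str]]:
    """Find read-after-write (data) hazards.

    Instruction j depends on an earlier instruction i if it reads a register
    that i writes. If j follows i within `window` positions (an assumed figure),
    i's result is not yet written back when j needs it, so j would have to stall.
    """
    hazards = []
    for j, later in enumerate(program):
        for i in range(max(0, j - window), j):
            if program[i].dest in later.srcs:
                hazards.append((i, j, program[i].dest))
    return hazards


prog = [
    Instr("ADD", "R1", ("R2", "R3")),  # R1 = R2 + R3
    Instr("SUB", "R4", ("R1", "R5")),  # needs R1 from the previous instruction
    Instr("AND", "R6", ("R7", "R8")),  # independent
]
print(raw_hazards(prog))  # [(0, 1, 'R1')]
```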


Conclusion

Pipelining is used by most modern computers to reduce the total time needed to process a stream of instructions. In reality, implementing a pipeline is more complex than what I have described here, but the principle remains the same. Today's computers also use superpipelining, which works like a traditional pipeline but has more stages.

Pipelining increases the throughput of a system, though its implementation requires additional hardware in the processor, which adds to the cost of the processor. Furthermore, many problems are encountered in the course of implementing an effective pipeline, and these show up as added instruction latency.
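The trade-off between throughput and latency can be sketched numerically. The model below is idealized and its numbers are hypothetical: a fixed amount of logic delay is split evenly across k stages, and every stage adds a fixed pipeline-register overhead. Throughput rises as stages are added, but the latency of a single instruction grows, which is also why deeper (superpipelined) designs see diminishing returns.

```python
def pipeline_metrics(total_logic_ns: float, stages: int,
                     register_overhead_ns: float) -> tuple[float, float]:
    """Latency and throughput of an evenly split pipeline (idealized model)."""
    period = total_logic_ns / stages + register_overhead_ns  # one clock cycle
    latency = stages * period   # time for one instruction to pass through
    throughput = 1 / period     # instructions completed per ns once full
    return latency, throughput


# Hypothetical numbers: 8 ns of logic, 0.5 ns added by each pipeline register.
for k in (1, 4, 8):
    latency, throughput = pipeline_metrics(8.0, k, 0.5)
    print(f"k={k}: latency {latency:.1f} ns, throughput {throughput:.3f} instr/ns")
# k=1: latency 8.5 ns, throughput 0.118 instr/ns
# k=4: latency 10.0 ns, throughput 0.400 instr/ns
# k=8: latency 12.0 ns, throughput 0.667 instr/ns
```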


If you write STEM (Science, Technology, Engineering, and Mathematics) related posts, consider joining #steemSTEM on steemit chat or discord here. If you are from Nigeria, you may want to include the #stemng tag in your post. You can visit this blog by @stemng for more details. You can also check this blog post by @steemstem here and these guidelines here for help on how to become a member of @steemstem.

![Memory Address Register (MAR)](https://steemitimages.com/DQmZPsmZCbUfn4wUw83qv7NvmBQDDQVxycDCVtcEgYHjrJU/Memory_Address_Register_(MAR).jpg)

I enjoyed your post. Very instructive.

Of course pipelining is at the core of the recent security issue found in the vast majority of modern CPUs.

It will be interesting to see how CPU manufacturers address the problem.

@henrychidiebere, when I first saw "pipelining", I was already thinking about fluid transport until I began to read the article. 😀😀😀

I like that you helped me understand the steps required to process an instruction. It made the major points clearer. But I would like to ask a question in this respect:

If the operations are distinct and there is more hardware, why should there be any wait?

Thanks.

Okay, imagine a scenario where 10 PCs need to be booted up and two programs (say MS Office and Adobe Reader) installed on all 10 PCs. Let's also say that 3 people were employed to carry out these jobs because they were envisioned as three separate tasks: boot the system, install the first program, and then install the second. If the work were done sequentially, the person installing MS Office and the person installing Adobe Reader would sit idle, waiting for the person booting the systems to finish his job. If the jobs were overlapped instead, even one person could finish faster: first press the power button on all 10 PCs, then come back to the first one (which might have finished booting), start the MS Office install, and do the same for the remaining 9 PCs, after which he comes back and does the same for Adobe Reader. The picture I painted may not be very accurate, but I hope it shows you how idle the processor hardware would be if instructions were executed sequentially.

Oh... Now I get it.

Thanks for the reply.

Interesting. I was actually expecting fluid flow in those pipelines. Let's just forget that.

I have a couple of friends who worked on pipeline protection, and by protection I mean corrosion protection. It's difficult to leave a pipeline underground for long without protection; it gets damaged.

no bro, it ain't that type of pipeline

Yeah... From what I read, it was a different one. Thanks for the knowledge. I gained something today.

Bro, that was a detailed piece on pipelining. I just sat here remembering when we were introduced to pipelining way back in university. I think it was CIT309 (Computer Architecture)... Can't remember the title again.

Nice piece man

thanks man. Was it computer engineering you studied? or elect elect?

Communication Technology, bro

nice project sir



I once attended a microprocessor class with Electrical/Electronics engineering students in my university, and this reminds me of many things I learnt in that class. I appreciate the simplified way you presented such complex information.