Cache: Computer memory redefined

When I was in my teens, my home tutor hilariously defined a computer as an "idiot machine" solely because the computer does not have a "mind of its own". She was right to an extent, because without instructions telling the processor what to do, usually supplied through a program, the computer will literally just sit there doing nothing. Computer programs are series of instructions directing the processor on what to do and what not to do. While a program runs, its instructions are held in a high-speed memory called Random Access Memory (RAM), but data stored in RAM is lost when power is removed, hence we say that RAM is volatile in nature.

Computer memory hierarchy


[credit: @henrychidiebere]

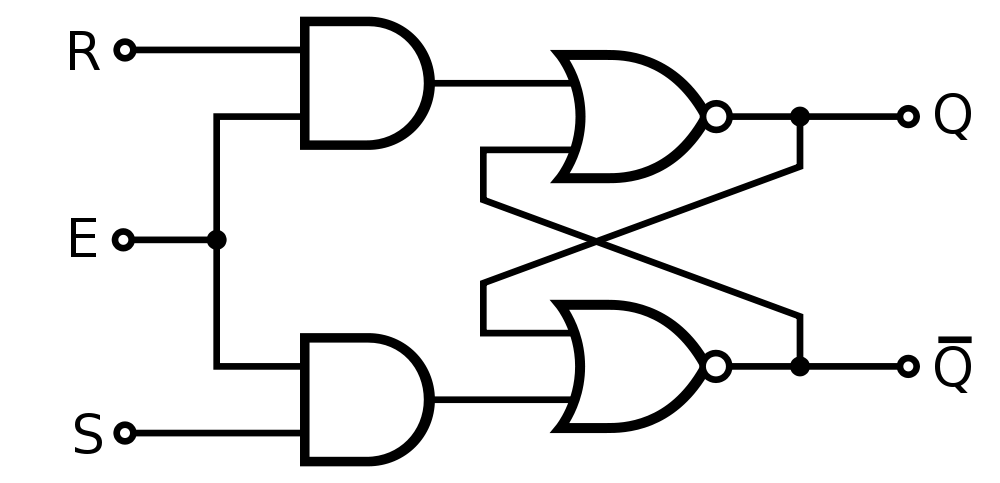

The Register

The register is a very high-speed memory built from flip-flops or latches arranged in parallel or in series. The register is the smallest computer memory; in fact, some computer engineers consider registers part of the instruction set itself, since many instructions perform arithmetic by adding or subtracting the contents of registers and then placing the result in another register. The register is located inside the central processing unit.

[an SR flip-flop equivalent to 1-bit of memory. Credit: wikimedia]

Apart from being the smallest memory and the closest to the processor, the register is also the fastest and the costliest memory of the computer. Register size is one of the major determinants of a computer's architecture, since the choice of instruction set depends on it. That is why a 64-bit processor can do the job of both a 32-bit and a 64-bit processor while the reverse is not the case: the word length of a 32-bit processor (which is determined by its registers) is not large enough to process 64-bit instructions.
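To make the word length point concrete, here is a toy sketch in Python. The helper name `wrap_to_word` is my own invention for illustration, and the masking only imitates what a fixed-width register does when handed a value that is too wide. A real 32-bit processor can still work on bigger numbers by splitting them across several registers, so treat this as a picture of the limit, not of the hardware.

```python
def wrap_to_word(value: int, bits: int) -> int:
    """Keep only the low `bits` bits of value, as a register of that width would."""
    return value & ((1 << bits) - 1)


big = 2**32 + 5  # this value needs 33 bits

print(wrap_to_word(big, 32))  # 5: the top bit does not fit in a 32-bit word
print(wrap_to_word(big, 64))  # 4294967301: fits comfortably in a 64-bit word
```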

The cache

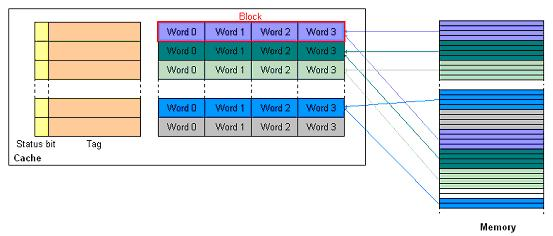

Cache memory is the focus of this write-up and is the closest memory to the central processing unit after the register. Caches are high-speed random access memories (RAM). They are called random access memories because any byte they contain can be accessed directly, without first accessing the bytes that come before it. Random access memories are broadly divided into two: Dynamic Random Access Memory (DRAM) and Static Random Access Memory (SRAM).

[credit: wikimedia]

DRAM must be refreshed constantly, and failure to do so results in loss of the data it contains. This is not the case for SRAM: it holds its data for as long as it is powered and need not be refreshed at all. The cache is made using SRAM, though both DRAM and SRAM are volatile memories.

The cache keeps a very close relationship with the main memory (discussed below), in the sense that data contained in the cache is also contained in the main memory; copies are kept in the cache, chosen by some algorithm, to reduce memory latency.

The main memory

My friends always present their new smartphones to me, probably to know my thoughts about their recently purchased phones. The first question I usually ask them is, "how many gigs of RAM does your phone have?". Many people are aware of this memory these days and it is still gaining popularity. When we say a phone contains 4GB of RAM, we are talking about the main memory, and this is nothing but the place where the processor keeps the data it is actively using. Main memory is slower, larger and cheaper than the cache and consists of the dynamic random access memory described above. In the computer engineering world, most of us are familiar with double data rate 2, 3 and even 4 RAM (DDR2, DDR3 and DDR4); these present-day RAMs are synchronous dynamic random access memories (SDRAM).

Most computer memories are volatile; only storage is not. Hence a computer running processes with unsaved work, like an unsaved Word document, loses that data after a reboot. A computer without RAM will automatically fail its power-on self-test (POST) and give you a blank screen, because the computer copies the information it needs for its operations into the main memory at startup and as the need arises.

The auxiliary memory

The auxiliary memory is the largest and the slowest among the computer memories. In fact, auxiliary memories are referred to as storage systems. They are also the cheapest in the computer memory hierarchy. Auxiliary memory includes your cloud storage, your hard drive, USB sticks and many other storage devices that are peripheral to the computer.

Auxiliary memories are not volatile, hence information contained in your hard drive remains there even when power has been removed from the system. Auxiliary memory can be further classified into magnetic disk memories like hard drives, flash memories like USB sticks, the main disk memory and finally the cache disk memory (I will just stop at listing them for simplicity).

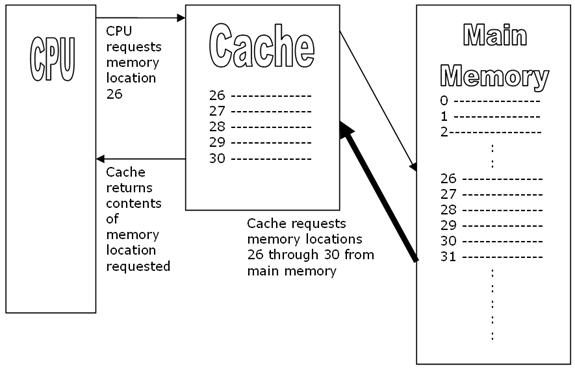

Operation of the cache

The idea behind the cache is simple: a small, high-speed memory. As stated above, the information contained in the cache is already contained in the main memory, but if the computer "always" fetched its information from the main memory, its response time would be very long; in other words, the processing time of the computer would be really annoying. When the processor accesses a piece of information in the main memory, this information is fetched and a copy is made. This copy is kept in a faster memory, the cache.

Information is kept in the cache based on the principle of locality of reference. Over time, it has been observed that most computer programs spend as much as 90 percent of their execution time in a small portion of their instructions! This huge amount of time can be greatly reduced, since it has also been observed that a program tends to refer again to memory locations it has just finished accessing. This happens because programs are executed mostly in loops. Hence we can predict with great accuracy the memory location, data or even instruction a program will use in the near future based on its previous memory accesses.

Locality of reference is divided into two: spatial and temporal locality. In clear terms, temporal locality simply means that data accessed recently in memory has a very high probability of being accessed again soon.

Spatial locality, also in clear terms, simply means that data whose addresses are close to each other are likely to be referenced together, at about the same time. For instance, once reference is made to the planet Mars, I would bet my wrist watch that Earth or Jupiter would be mentioned as well.
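Both kinds of locality can be felt with a small sketch. The model below is a deliberate oversimplification and entirely my own assumption: the "cache" remembers only the single most recently used block, a block is 8 consecutive addresses, and the function name `block_fetches` is made up for this example. It counts how many times a new block would have to be brought in for three different access patterns.

```python
def block_fetches(addresses, block_size=8):
    """Count block fetches for a cache that holds only the last-used block."""
    fetches = 0
    current_block = None
    for addr in addresses:
        block = addr // block_size  # neighbouring addresses share a block
        if block != current_block:
            fetches += 1
            current_block = block
    return fetches


sequential = list(range(64))  # neighbours visited in order: good spatial locality
strided = [col * 8 + row for row in range(8) for col in range(8)]  # jumps 8 addresses each time
repeated = [5] * 64  # same address again and again: good temporal locality

print(block_fetches(sequential))  # 8
print(block_fetches(strided))     # 64
print(block_fetches(repeated))    # 1
```

The same 64 addresses are touched in the first two patterns, yet the order alone changes the number of fetches from 8 to 64.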

The central processing unit of the computer is designed to query the cache first for the data it is looking for. When this query returns with the required data, it is called a hit, while when the query returns without the requested data, you guessed it right, it is called a miss. The rate at which the central processing unit gets a positive response (a hit) from the cache is called the hit rate of the cache, while the rate at which it gets a negative response (a miss) is called the miss rate of the cache.
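Hit rate and miss rate are easy to compute once you have a list of accesses. The sketch below assumes, purely for simplicity, a cache that never runs out of space, so the only misses are first-time accesses. The function name `hit_and_miss_rate` and the sample trace are hypothetical.

```python
def hit_and_miss_rate(trace):
    """Return (hit rate, miss rate) for a trace, assuming an unbounded cache."""
    cache = set()
    hits = misses = 0
    for addr in trace:
        if addr in cache:
            hits += 1          # the query returned the data: a hit
        else:
            misses += 1        # not there: a miss, fetch and keep a copy
            cache.add(addr)
    total = hits + misses
    if total == 0:
        return 0.0, 0.0
    return hits / total, misses / total


print(hit_and_miss_rate([1, 2, 1, 3, 2, 1, 1, 2]))  # (0.625, 0.375)
```

Notice that the two rates always add up to 1, since every query is either a hit or a miss.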

The overall cache principle can be summarized in the flow chart below.

[credit: creative commons image by wikipedia]

Making the cache larger will generally increase its hit rate, but that translates to a costlier product, as caches are very costly memories. Just as improving the power management of our smartphones is sometimes more important than increasing the capacity of the battery, optimizing the replacement algorithm of the cache can be an answer to increasing its hit rate. When the processor records a miss, the information is fetched from the main memory, with a delay, and a copy is placed in the cache, flushing out an older entry (for example the oldest one) to make room.
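Here is a minimal sketch of the "flush out the oldest" idea, usually called first-in, first-out (FIFO) replacement. It is only one possible policy; real processors often use variants of least-recently-used instead. The function name `fifo_hits` and the trace are assumptions made for this example.

```python
from collections import deque


def fifo_hits(trace, capacity):
    """Count hits for a cache of `capacity` entries with FIFO replacement."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    cache = deque()  # oldest entry sits on the left
    hits = 0
    for addr in trace:
        if addr in cache:
            hits += 1
        else:
            if len(cache) == capacity:
                cache.popleft()  # flush out the oldest entry
            cache.append(addr)   # keep a copy of what was just fetched
    return hits


trace = [1, 2, 3, 1, 2, 3, 1, 2, 3]
print(fifo_hits(trace, 2))  # 0: the loop over three items never fits
print(fifo_hits(trace, 3))  # 6: one extra entry and every repeat is a hit
```

In this trace a single extra entry takes the cache from no hits at all to a hit on every repeated access, which is why size and replacement policy are usually tuned together.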

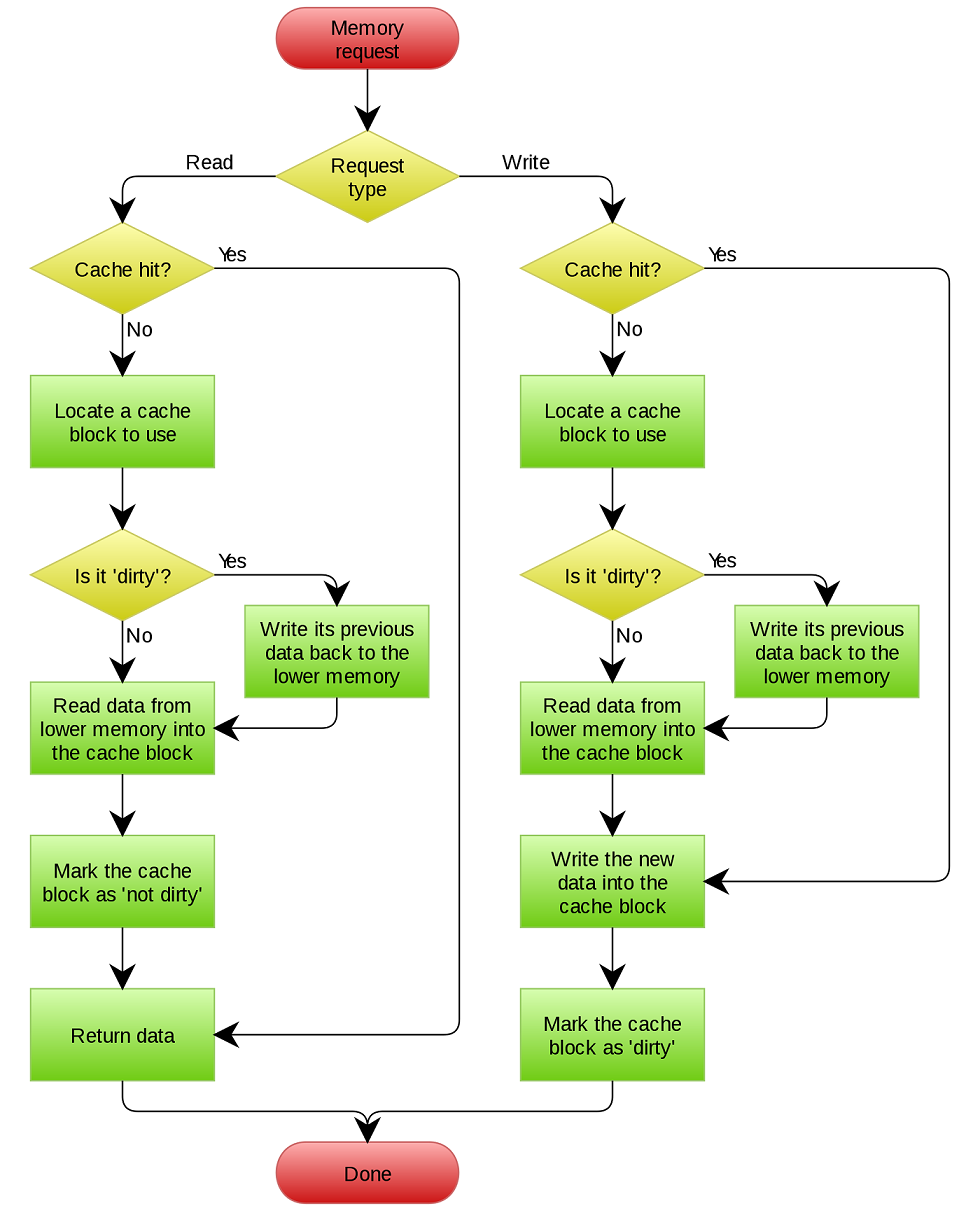

Cache mapping functions

As stated above, the principle of caching is based on moving data that is frequently accessed, or likely to be accessed, by the processor into a faster memory called the cache. Caches are always very small (a 3GHz processor might contain a 3MB cache), hence data has to be moved into the cache on demand while the least referenced data is flushed out. These data are moved in blocks, and the manner in which memory blocks are assigned to places in the cache is called the mapping function. There are basically three cache mapping functions, viz:

Direct Mapping

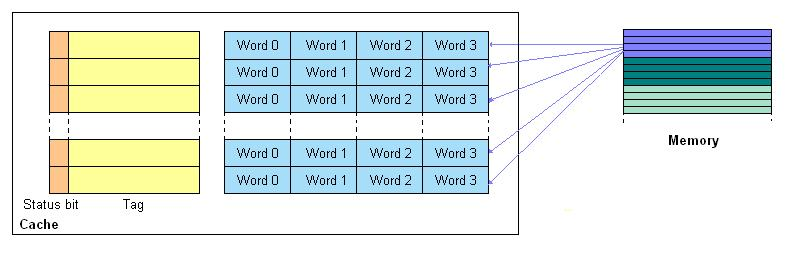

Basically, caches are organized in lines. Each line has just enough space to store one block of data from the main memory. The cache also contains tags, which are used as pointers to the block's location in the main memory.

[credit: wikimedia]

In direct cache mapping, each memory block is assigned exactly one line in the cache. Since the main memory has far more blocks than the cache has lines, many blocks share the same line. When data needs to be mapped to the cache and its line is occupied by old data, the data in that line is simply discarded. The operation of direct cache mapping can be summarized in the image above.

Direct mapping has the advantage of being very simple to implement, but a problem arises when a program repeatedly accesses two memory blocks that are mapped to the same line. The cache then discards and reloads the same line over and over, giving it a high miss rate, and this is not a good property of a cache.
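The repeated discard-and-reload problem shows up clearly in a small simulation. This sketch assumes the common convention that the line is picked by taking the block number modulo the number of lines, with the quotient serving as the tag. The function name `direct_mapped_misses` and the 8-line cache are my own choices for illustration.

```python
def direct_mapped_misses(block_numbers, num_lines=8):
    """Count misses in a direct-mapped cache indexed by block number."""
    lines = [None] * num_lines  # each line remembers the tag of the block it holds
    misses = 0
    for block in block_numbers:
        index = block % num_lines   # the one line this block may occupy
        tag = block // num_lines    # tells apart blocks that share the line
        if lines[index] != tag:
            misses += 1
            lines[index] = tag      # discard the old block, load the new one
    return misses


print(direct_mapped_misses([0, 1] * 4))  # 2: different lines, only first-time misses
print(direct_mapped_misses([0, 8] * 4))  # 8: both map to line 0 and keep evicting each other
```

Blocks 0 and 8 fight over line 0 while the other seven lines sit empty, which is exactly the weakness described above.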

Associative Mapping

[credit: wikimedia]

In associative mapping, also called fully associative mapping, a memory block from the main memory can be mapped into any line in the cache. To make this work, a separate tag memory is kept in the cache for identifying the main memory address of each mapped block. Though this increases the utilization of the cache by the processor, it does so at the expense of access time, since every tag has to be checked on a lookup. A fully associative mapping is summarized in the image above.
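A fully associative cache can be sketched the same way. Because any block can go anywhere, the cache needs some rule for choosing a victim when it is full; I assume least-recently-used (LRU) here, which is a common choice but not something the mapping itself dictates. The function name `fully_associative_misses` is hypothetical, and the dictionary lookup hides the fact that hardware has to compare all tags.

```python
from collections import OrderedDict


def fully_associative_misses(block_numbers, num_lines=8):
    """Count misses in a fully associative cache with LRU replacement (num_lines >= 1)."""
    lines = OrderedDict()  # block number acts as the tag; least recently used first
    misses = 0
    for block in block_numbers:
        if block in lines:
            lines.move_to_end(block)       # mark as most recently used
        else:
            misses += 1
            if len(lines) == num_lines:
                lines.popitem(last=False)  # evict the least recently used block
            lines[block] = None
    return misses


# Blocks 0 and 8 would share a line in an 8-line direct-mapped cache,
# but here they simply occupy two different lines.
print(fully_associative_misses([0, 8] * 4))  # 2
```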

Set-associative Mapping

The problem of repeatedly discarding lines in a direct-mapped cache is taken care of in set-associative mapping by grouping cache lines into sets. Each memory block is assigned to one specific set but can be placed in any line within that set. This is to say that set-associative mapping combines the simple mapping process offered by direct mapping with the flexible placement offered by associative mapping.
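The sketch below treats set-associative mapping as the general case: with one line per set it behaves like direct mapping, and with all lines in a single set it behaves like fully associative mapping. The set is chosen by block number modulo the number of sets, and LRU replacement inside each set is my assumption. The name `set_associative_misses` and its parameters are made up for this example.

```python
from collections import OrderedDict


def set_associative_misses(block_numbers, num_lines=8, ways=2):
    """Count misses in a set-associative cache with LRU replacement per set."""
    num_sets = num_lines // ways  # assumes ways divides num_lines evenly
    sets = [OrderedDict() for _ in range(num_sets)]
    misses = 0
    for block in block_numbers:
        index = block % num_sets   # the one set this block belongs to
        tag = block // num_sets
        lines = sets[index]
        if tag in lines:
            lines.move_to_end(tag)         # mark as most recently used
        else:
            misses += 1
            if len(lines) == ways:
                lines.popitem(last=False)  # evict within this set only
            lines[tag] = None
    return misses


trace = [0, 8] * 4
print(set_associative_misses(trace, ways=1))  # 8: one line per set, same as direct mapping
print(set_associative_misses(trace, ways=2))  # 2: both blocks fit in the same set
print(set_associative_misses(trace, ways=8))  # 2: a single set, same as fully associative
```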

Conclusion

The picture I painted in this write-up presents caches mostly from the hardware (CPU) perspective, but the operation described above applies to caches in general. Of course we also have web caches, page caches, memoization, etc. We are able to continue a post in the steemit post tab even after shutting down and powering up our systems again because page caching was leveraged in the design of steemit.com, which saves us a lot of resources and greatly combats loss of data.
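Memoization is the easiest of these software caches to try at home. Python's standard library ships `functools.lru_cache`, which keeps the results of previous calls and answers repeated calls from that cache. The Fibonacci function is just my stock example; the hit and miss counters it reports are the same idea as the hardware hit and miss discussed earlier.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # unbounded cache of earlier results
def fib(n: int) -> int:
    """Return the n-th Fibonacci number, reusing cached results."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


print(fib(30))           # 832040
print(fib.cache_info())  # reports the hits, misses and current size of the cache
```

Without the decorator, the same call would recompute the small Fibonacci numbers over and over.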


