An Artificial Intelligence Algorithm Can Help Prevent Suicide

Suicide is not new to us as a species. Time and again, individuals have given up on life for one reason or another.

Sadly, countless lives have ended prematurely because people decided that life was not worth living. When this happens, depression is most often identified as the cause.

In Miami, a recent case came to public attention after a 14-year-old posted on social media, “I don’t want to live no more”. A year later, she live-streamed her suicide on Facebook, hanging herself in front of a webcam.

A friend of hers contacted the police, who arrived too late to save her. The two-hour live stream was viewed by thousands of people.

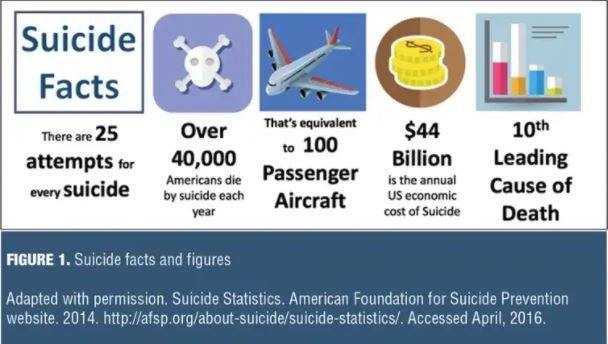

Terrible as it was, the incident is not unique: statistically, suicide is the second leading cause of death among teenagers in the US, Europe, and South-East Asia.

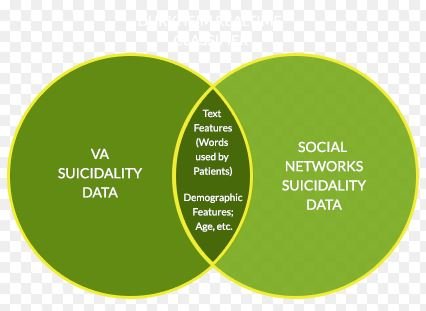

Every day, millions of posts and chats on Facebook, Google, Siri, Snapchat, and Alexa relate to mental health. These could be pieced together to pick up on suggestive statements and breadcrumbs dropped by users facing psychological or mental health challenges.

However, this raises some questions.

- Can tech and social media companies use a trail of suicide-related posts to locate and help those most at risk of suicide?

- Can the likes of Google or Siri promptly respond to people searching for information relating to depression and suicide?

- How can a platform like Snapchat react quickly when teenagers are secretly chatting about suicidal acts?

Towards Stemming the Suicidal Tide

These questions go beyond speculation, because some tech giants have already taken the initiative to act. For instance, a Google search in the U.S. for clinical depression symptoms brings up a knowledge panel and a private screening test for depression (the PHQ-9).
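For readers unfamiliar with the PHQ-9, it is a nine-question form in which each answer is scored from 0 to 3, and the total (0 to 27) is read against standard severity bands. The Python sketch below illustrates that published scoring scheme only. The function name `phq9_severity` is hypothetical, and nothing here reflects how Google actually implements its screening panel.

```python
def phq9_severity(answers):
    """Return (total_score, severity_label) for nine PHQ-9 answers.

    Each answer must be an integer from 0 ("not at all") to
    3 ("nearly every day"). The function name is an illustrative
    assumption, not part of any real library.
    """
    if len(answers) != 9 or any(a not in (0, 1, 2, 3) for a in answers):
        raise ValueError("PHQ-9 needs exactly nine answers, each 0-3")

    total = sum(answers)

    # Standard PHQ-9 severity bands, expressed as (upper bound, label).
    bands = [
        (4, "minimal"),
        (9, "mild"),
        (14, "moderate"),
        (19, "moderately severe"),
        (27, "severe"),
    ]
    for upper, label in bands:
        if total <= upper:
            return total, label


# Example: answering "several days" (1) to every question.
print(phq9_severity([1] * 9))
```

A score on its own is only a screening signal, which is presumably why the test is paired with a knowledge panel rather than presented as a diagnosis.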

To maintain privacy, Google has stated that it will not link a person’s identity to their test answers. Google also says it intends to collect anonymous data to improve its service and the user experience.

As millions of people take the test online after searching for information on suicide and depression, a large amount of data is being generated that could be useful in building a digital fingerprint of depression.

Artificial Intelligence (AI) Algorithm

In response to the disturbing trend of live-streamed suicides, Facebook developed an artificial intelligence algorithm that scans people’s posts, images, and words for signs of depression and suicidal tendencies.

As soon as the AI spots suicidal tendencies in a person’s images, behavior, or words, it may signal ‘self-harm’ to the authorities, who then provide resources to the user and alert an internal “Empathy Team”.

If these interventions do not avert the self-harm or suicidal behaviour, first responders may be notified to attend the scene. Under Facebook’s policy on this new A.I., people cannot opt out of the initiative.

Months after launching the A.I. algorithm, Facebook boss Mark Zuckerberg said that it had helped over 100 people.

Concerns About Privacy Rules and Policies

Google and Facebook deserve credit for kick-starting the process of detecting suicidal tendencies with A.I. algorithms and then stemming them. Despite this brilliant monitoring innovation, concerns are being raised.

Concerns and challenges include:

- Would social media companies need separate consent from users before their A.I. monitors our health?

- Since the companies would be monitoring the mental health of their platforms' users, do their A.I. algorithms need to be evaluated to demonstrate efficacy?

- When the intention of a live stream becomes clear (e.g. self-harm or suicide), should it be allowed to proceed or should it be cut off?

- Can people's mental-health data be used to target advertisements, for example for antidepressant pills?

- In countries with weak data policies, will social media users be subject to the same monitoring? Notably, Facebook’s A.I. is not yet available in Europe because the company does not comply with Europe’s much stricter privacy policies.

- Should efforts to curb teenage mental-health problems rely more on A.I. social media monitoring, or less?

Conclusion

At present, A.I. algorithms that analyze the mood of social media posts are not perfect. The way mental illness is perceived also varies widely from culture to culture. Many clinicians also know that most people who plan to commit suicide deny it, which complicates studies.

Furthermore, an analysis of 55 million text messages from people considering suicide, conducted by the text-based mental health service Crisis Text Line, revealed that such people are more likely to use words such as “pills” or “bridge” than the word “suicide” itself.
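To illustrate why a filter that looks only for the word “suicide” can miss messages, here is a toy Python sketch. The term lists contain only the words quoted above, and the function name `flag_message` is hypothetical. This is not the method used by Crisis Text Line or Facebook, whose systems presumably rely on far richer signals than a keyword list.

```python
import re

# Illustrative term lists built only from the words quoted in this post.
INDIRECT_TERMS = {"pills", "bridge"}
DIRECT_TERMS = {"suicide"}


def flag_message(text):
    """Return which direct and indirect terms appear in a message.

    A deliberately simple keyword match: lowercase the text, split it
    into words, and intersect with each term list.
    """
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {
        "indirect": sorted(words & INDIRECT_TERMS),
        "direct": sorted(words & DIRECT_TERMS),
    }


# A message can contain indirect terms without ever using the direct one.
print(flag_message("Standing on the bridge with my pills."))
```

A message flagged only under `"indirect"` is exactly the kind a naive direct-term filter would overlook, which is why human counsellors remain important for interpreting context.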

Regardless of the concerns and challenges, Facebook sees A.I. as a beneficial tool for spotting suicidal tendencies online much faster than a friend could. Even so, combining the A.I. algorithm with seasoned counsellors to respond to risky and suicidal posts would help reduce current suicide statistics and trends.

To succeed on a large scale against teenage suicidal tendencies, public-private and multi-stakeholder partnerships must be built around sound research guidelines. The A.I. algorithms should also be transparent, and research results should be made open access, so that researchers all over the world can contribute and help instill public trust in the tech and social media companies involved.

Lastly, advances in artificial intelligence and brain science offer great benefits for humanity and for research. However, it should all be done within a proper ethical and scientific framework.



Great insights. AI will soon know us better than we know ourselves. I just finished the Homo Deus book and it opened my eyes to the scope of things that AI will/may soon be capable of. Suicide prevention sure is a great idea to focus on.

Haha! @newcastle, you are quite right. Your statement just about sums it up.

It sounds a bit dreary too, if you put it that way. But yes, being able to prevent suicide would definitely go a long way to help save lives. Thanks for reading

AI is going to change the world....that is for sure!

You are right @bitdollar. Thanks for reading.