Natural Language Processing (Winston | Watson)

How many of you have read Dan Brown's latest novel, Origin? Those of you who have will know that its ending is a bit controversial. I'm not going into that debate, though. What interests me is Winston.

Yes, the legendary and intelligent museum curator, Winston.

There may be spoilers ahead, but I will try not to reveal too much.

I'm sure that, like me, none of you guessed who Winston really was. His conversation was so human-like that I never suspected, even for a second, that he was not human, until the author revealed it himself. He is not shown physically until near the end of the novel. For me, he passed the Turing Test with straight A's. :D

Yes, Winston was a very advanced computer that behaved just like a human.

- It held meaningful, human-like conversations.

- It could search for anything in seconds.

- It could contact anyone.

- It devised plans and strategies according to the given situation.

- It was continuously learning by itself and becoming more intelligent.

- It was like a secretary to its inventor: it booked flights, arranged meetings and gave its master opinions on certain matters. In short, it was not human-like, it was human (at least it seemed that way).

That was fiction, but we are rapidly advancing towards that kind of technology. There is plenty of software and there are plenty of apps that already help us in our daily practical life.

- Google Maps - I don't remember the last time I traveled without its assistance

- Speech Recognition Apps

I can't think of many examples that can perform tasks the way Winston did. There is still a lot to do. There is one example, though; I have saved it for the end.

So the question is: why was Winston so advanced?

The answer is simple, but implementing such technology is hard.

The three domains covered by such technology are:

- Machine Learning

- Artificial Intelligence

- Natural Language Processing

Artificial Intelligence is a fairly familiar subject by now, so I don't think it needs explaining.

Machine Learning, in simple words, means computers or software that are not pre-programmed for everything; some things, like human behavior, they learn by themselves.

Natural Language Processing: of the three domains, this is the one I am discussing today.

What is Natural Language Processing?

It is basically an interaction between computer and human, in which the computer tries to analyze and manipulate human language and to derive meaning from it.

Natural Language Processing is the intersection of Computer Science, Artificial Intelligence and Computational Linguistics.

Unlike ordinary programs, which treat an input string as nothing more than a bunch of symbols, NLP helps a computer gain some perception of a sentence and derive meaning from it.

For example, it tries to find the names in a sentence or phrase and the relations between them.

Magic behind NLP - How does it work?

NLP is considered a hard problem in Computer Science. It is hard in the sense that human language is very ambiguous: sentences are rarely precise and sometimes have double meanings. That makes it very hard to write rules that let a machine understand them.

Hard-coding would be very tedious, if not almost impossible, in this regard.

In my final academic year, I had to work on an NLP-related project. I had no knowledge of the field then. I researched day and night how I should program it. What program could I write that would recognize any name in the world, or any place? It seemed impossible to hard-code every single name or place.

It was then that I got my hands on some magic, i.e. the Stanford libraries.

Yes, there are many NLP libraries. The Stanford libraries include NER, a POS tagger, a parser and much more.

This is where Machine Learning comes into the picture. We give the computer a set of examples to learn from. The more examples it learns from, the more accurately it answers our queries. A corpus is prepared for this purpose.
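
To make the idea of learning from examples concrete, here is a minimal sketch in Python. It is only a toy, not how the Stanford libraries work internally: the three-sentence corpus, the feature names and the simple vote counting are all my own assumptions for illustration. The point is that the program never stores a list of names. It learns from context (capital letters, the neighbouring words), so it can label names it has never seen.

```python
from collections import Counter, defaultdict

# Tiny hand-made annotated corpus (hypothetical): every token carries a label.
TRAIN = [
    [("John", "PERSON"), ("lives", "O"), ("in", "O"), ("Canada", "LOCATION")],
    [("Mary", "PERSON"), ("works", "O"), ("in", "O"), ("London", "LOCATION")],
    [("Ali", "PERSON"), ("lives", "O"), ("in", "O"), ("Lahore", "LOCATION")],
]

def features(tokens, i):
    """Describe token i by its context rather than by its exact spelling."""
    word = tokens[i]
    prev_word = tokens[i - 1].lower() if i > 0 else "<start>"
    next_word = tokens[i + 1].lower() if i + 1 < len(tokens) else "<end>"
    return [
        f"capitalized={word[0].isupper()}",
        f"prev={prev_word}",
        f"next={next_word}",
    ]

def train(corpus):
    """Count how often each feature was seen together with each label."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = [word for word, _ in sentence]
        for i, (_, label) in enumerate(sentence):
            for feat in features(tokens, i):
                counts[feat][label] += 1
    return counts

def predict(counts, tokens):
    """Label each token with the label that its features vote for most."""
    labels = []
    for i in range(len(tokens)):
        votes = Counter()
        for feat in features(tokens, i):
            votes.update(counts.get(feat, {}))
        labels.append(votes.most_common(1)[0][0] if votes else "O")
    return list(zip(tokens, labels))

model = train(TRAIN)
# Neither "Sara" nor "Tokyo" appears anywhere in the training examples.
result = predict(model, ["Sara", "lives", "in", "Tokyo"])
print(result)
```

With this toy corpus, "Sara" comes out as a person and "Tokyo" as a location even though neither word was in the examples. A real system learns from a far larger corpus with a much richer statistical model, but the principle is the same.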

Natural Language Processing is a very advanced field. Here I will discuss only the concepts I learned while doing the project. These are some basics that will get you started, if you are interested.

So what was my project?

Criminal Investigation System

The idea behind it was to build a search engine in which users type queries related to crimes and get results accordingly. The key idea was not to use keyword-based searching; instead, we planned to introduce semantic searching. For example, if a user asks about a criminal's link to a certain crime, the search engine displays the information about his link to that crime. It does not display every piece of irrelevant information that happens to include those keywords, as typical search engines do.

So that project included the semantic web too, but I'm not going into that topic today.

So, NLP !!!

We will start with an example.

Now, how will a computer know what that means?

The aforementioned Stanford libraries include NER, i.e. the Named Entity Recognizer.

It is trained on an annotated corpus containing a great many names, and more are being added to it.

So it will tag John as a person and Canada as a location.

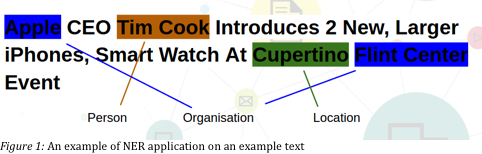

In this example, persons, organisations and locations are tagged. These three things can easily be found in a sentence through NER.
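
Once a recognizer has tagged each word, the tags still have to be turned into whole entities such as "John Smith". The Python sketch below shows that step only. The tagged sentence is hand-written sample data, not real Stanford NER output, and the helper name `group_entities` is my own.

```python
from itertools import groupby

# Hypothetical tagger output: one (token, label) pair per word.
# "O" means the word is not part of any named entity.
tagged = [
    ("John", "PERSON"), ("Smith", "PERSON"), ("moved", "O"), ("from", "O"),
    ("Canada", "LOCATION"), ("to", "O"), ("join", "O"),
    ("Stanford", "ORGANIZATION"), ("University", "ORGANIZATION"),
]

def group_entities(tagged_tokens):
    """Merge runs of neighbouring tokens that share a label into one entity."""
    entities = []
    for label, run in groupby(tagged_tokens, key=lambda pair: pair[1]):
        if label != "O":
            entities.append((" ".join(token for token, _ in run), label))
    return entities

entities = group_entities(tagged)
for text, label in entities:
    print(f"{label:13} {text}")
```

Note one limitation of this simple grouping: two different people mentioned directly next to each other would be merged into a single entity.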

Now see this example.

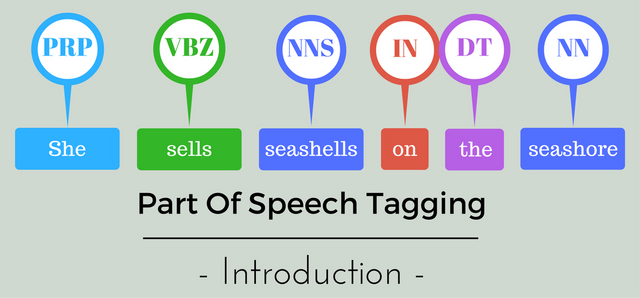

Here the POS (part-of-speech) tagger is used. It tells you the part of speech of every word, from nouns to verbs to prepositions.
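
The tags themselves are short codes from the Penn Treebank tag set (NNP, VBZ, IN and so on). Here is a small Python sketch for reading tagged text. I am assuming the tagged text arrives in a `word_TAG` form with an underscore as separator; the sample string is written by hand, and `TAG_NAMES` covers only the few tags it uses.

```python
# Plain-English meaning of the few Penn Treebank tags used in the sample.
TAG_NAMES = {
    "NNP": "proper noun, singular",
    "VBZ": "verb, 3rd person singular present",
    "IN": "preposition or subordinating conjunction",
}

# Hand-written sample in an assumed word_TAG format (not real tagger output).
tagged_text = "John_NNP lives_VBZ in_IN Canada_NNP"

def parse_tagged(text):
    """Split 'word_TAG' items into (word, tag) pairs."""
    pairs = []
    for item in text.split():
        # Split on the LAST underscore, so words containing "_" stay intact.
        word, tag = item.rsplit("_", 1)
        pairs.append((word, tag))
    return pairs

pairs = parse_tagged(tagged_text)
for word, tag in pairs:
    print(f"{word:8} {tag:4} {TAG_NAMES.get(tag, 'unknown tag')}")

# Proper nouns are good candidates for names of people and places.
proper_nouns = [word for word, tag in pairs if tag == "NNP"]
print(proper_nouns)
```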

Now what's left in a sentence? See another example.

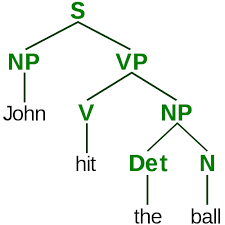

The remaining piece is the grammatical structure of sentences, and Stanford's Parser helps in this regard. You can see there are tags that are not easy to understand at first glance. To go into further detail, we can generate dependencies through the parser, which help us recognize subjects, objects, etc. in a sentence. You can check the code in this link.

So these are the early steps into NLP programming. As we go deeper, things get complicated, and scientists are still working on them.

Ushering in Web 3.0
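
To give a feel for what dependencies are good for, here is a minimal Python sketch that pulls "who did what to whom" out of a list of dependency relations. The relations are written by hand for the sentence "John robbed the bank" and the sentence itself is my own example. The relation names `nsubj` and `dobj` follow the Stanford Dependencies naming as far as I know (newer schemes call the object relation `obj`), and the function name is hypothetical.

```python
# Hand-written dependencies for "John robbed the bank":
# each entry is (relation, head word, dependent word).
deps = [
    ("nsubj", "robbed", "John"),  # John is the subject of "robbed"
    ("dobj", "robbed", "bank"),   # bank is the direct object of "robbed"
    ("det", "bank", "the"),       # "the" is the determiner of "bank"
]

def subject_verb_object(dependencies):
    """Collect (subject, verb, object) triples from dependency relations."""
    subjects, objects = {}, {}
    for relation, head, dependent in dependencies:
        if relation == "nsubj":
            subjects[head] = dependent
        elif relation in ("dobj", "obj"):
            objects[head] = dependent
    # Keep only the verbs for which both a subject and an object were found.
    return [(subjects[verb], verb, objects[verb])
            for verb in subjects if verb in objects]

triples = subject_verb_object(deps)
print(triples)
```

A triple like this is exactly the kind of fact a semantic search needs: it links a person to an action and its target instead of just matching keywords.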

Web 1.0 : A web of static information, where people could only read data over the internet

Web 2.0 : The social, interactive web that we use nowadays

Web 3.0 : We are just at the beginning of Web 3.0. There will be virtual assistants. Today they are available as third-party apps but are not yet built into systems by default. These assistants process natural (human) language to interpret commands and carry them out, such as Send Email, Send Text Message, Set Alarm or Reminder. You must be thinking of Siri. It's a good app but still not advanced enough. It works on some pre-programmed algorithms. There is not much machine learning or NLP.
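
To show what a pre-programmed approach to commands might look like, here is a small Python sketch. This is purely my own illustration and not how Siri or any real assistant is built: the intent names, the patterns and the `interpret` function are all hypothetical.

```python
import re

# Hand-written rules (hypothetical): one pattern per supported command.
RULES = [
    ("set_alarm", re.compile(r"\bset (?:an )?alarm for (?P<time>.+)", re.IGNORECASE)),
    ("send_email", re.compile(r"\bsend (?:an )?email to (?P<contact>\w+)", re.IGNORECASE)),
    ("send_text", re.compile(r"\bsend (?:a )?text to (?P<contact>\w+)", re.IGNORECASE)),
]

def interpret(command):
    """Return (intent, details) for the first rule that matches the command."""
    for intent, pattern in RULES:
        match = pattern.search(command)
        if match:
            return intent, match.groupdict()
    return "unknown", {}

print(interpret("Set an alarm for 7 am"))
print(interpret("Please send an email to Sara"))
print(interpret("Wake me up at seven"))
```

The first two commands are understood, but the third one falls through as unknown even though it means the same thing as setting an alarm. Every new way of phrasing a request needs another hand-written rule, which is why learning from examples matters so much here.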

Recent Breakthrough

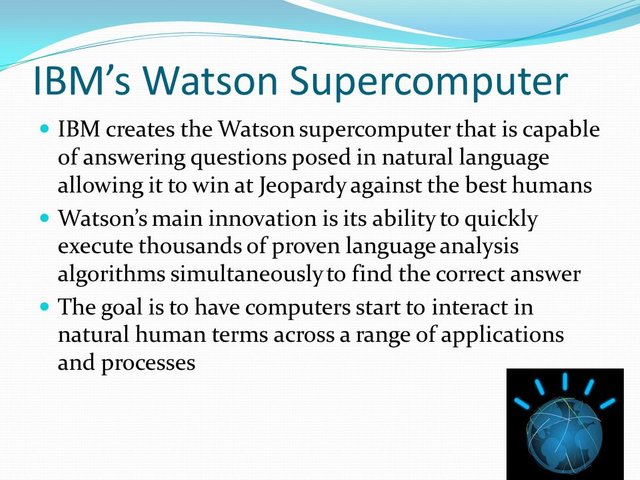

Now we have a real-life Winston, whose name is Watson.

Let's see how much further we can go in terms of artificial intelligence. And I hope Watson turns out better than Winston. Those who know Winston will know that what he did was quite astonishing and sad. Sorry, I keep forgetting that Winston is not a he, it's an it.

References

https://nlp.stanford.edu/software/CRF-NER.shtml

https://nlp.stanford.edu/software/tagger.shtml

https://www.lifewire.com/what-is-web-3-0-3486623
