[Steem] 30 Days of Transfers Among the Top 100 Most Valuable Accounts, With Graphs

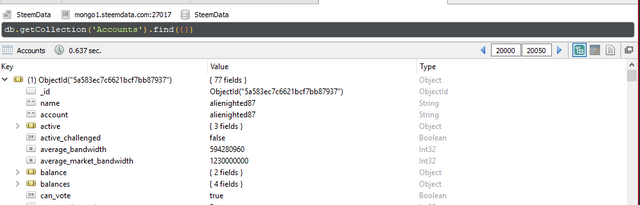

Who's your daddy?

( You can view a clearer version of this document on GitHub: https://github.com/SquidLord/SteemJupyterNotebooks/blob/master/Who's%20Related%20to%20Whom%20on%20the%20Steem%20Blockchain.ipynb )

What I really wanted to do today was to start stepping into the dungeon with Two Hour Dungeon Crawl.

What I'm going to do today is to see if we can build some directed acyclic graphs built off of information which is available from SteamData once again. I should have enough of the underlying graphics-generating systems installed to make this happen, so maybe we actually turn up something interesting.

I'm definitely going to focus on the first 78 accounts, which we have determined control far more than 50% of the total SP in the system. I think those might be a nexus of connections that we are all interested in.

The First 78

What were the top 78 accounts according to the analysis that I ran a couple days ago? Let's regenerate that data and store it somewhere convenient.

from steemdata import SteemData

import datetime

from datetime import datetime as dt

from pprint import pprint

db = SteemData()

breakTime = dt(2000, 1, 1)

query = db.Accounts.find({'last_account_update':

{'$gte': breakTime}},

projection={'name': 1,

'vesting_shares.amount': 1,

'_id': 0}

)

def vestingAmount(queryEntry):

return queryEntry['vesting_shares']['amount']

def sortQuery(queryList):

return sorted(queryList, key=vestingAmount, reverse=1)

# We'll just go ahead and pull the list and sort it sumultaneously.

queryList = sortQuery(list(query))

print('Accounts: {}\n'.format(len(queryList)))

pprint(queryList[:78])

Accounts: 291496

[{'name': 'steemit', 'vesting_shares': {'amount': 90039851836.6897}},

{'name': 'misterdelegation', 'vesting_shares': {'amount': 33854469950.665653}},

{'name': 'steem', 'vesting_shares': {'amount': 21249773925.079193}},

{'name': 'freedom', 'vesting_shares': {'amount': 15607962233.428}},

{'name': 'blocktrades', 'vesting_shares': {'amount': 9496005213.584002}},

{'name': 'ned', 'vesting_shares': {'amount': 7344140982.676874}},

{'name': 'databass', 'vesting_shares': {'amount': 3500010180.297931}},

{'name': 'hendrikdegrote', 'vesting_shares': {'amount': 3298001762.871842}},

{'name': 'jamesc', 'vesting_shares': {'amount': 3199868835.022211}},

{'name': 'michael-b', 'vesting_shares': {'amount': 3084198458.874888}},

{'name': 'val-b', 'vesting_shares': {'amount': 3058661749.06894}},

{'name': 'proskynneo', 'vesting_shares': {'amount': 3021109391.032705}},

{'name': 'val-a', 'vesting_shares': {'amount': 2895251778.87689}},

{'name': 'thejohalfiles', 'vesting_shares': {'amount': 2633775823.387832}},

{'name': 'minority-report', 'vesting_shares': {'amount': 2169258956.047093}},

{'name': 'xeldal', 'vesting_shares': {'amount': 2046642953.741535}},

{'name': 'roadscape', 'vesting_shares': {'amount': 1959600356.285733}},

{'name': 'jamesc1', 'vesting_shares': {'amount': 1644781210.539062}},

{'name': 'arhag', 'vesting_shares': {'amount': 1605237829.023531}},

{'name': 'fyrstikken', 'vesting_shares': {'amount': 1548949904.44616}},

{'name': 'adm', 'vesting_shares': {'amount': 1524486923.142378}},

{'name': 'safari', 'vesting_shares': {'amount': 1500015009.426551}},

{'name': 'riverhead', 'vesting_shares': {'amount': 1494178696.187778}},

{'name': 'adsactly', 'vesting_shares': {'amount': 1334951123.54692}},

{'name': 'trafalgar', 'vesting_shares': {'amount': 1330038590.82164}},

{'name': 'created', 'vesting_shares': {'amount': 1247305568.029396}},

{'name': 'tombstone', 'vesting_shares': {'amount': 1210362781.831355}},

{'name': 'wackou', 'vesting_shares': {'amount': 1114084454.364379}},

{'name': 'glitterfart', 'vesting_shares': {'amount': 1062363158.72496}},

{'name': 'steemed', 'vesting_shares': {'amount': 1051657094.72923}},

{'name': 'jaewoocho', 'vesting_shares': {'amount': 999807983.157001}},

{'name': 'engagement', 'vesting_shares': {'amount': 936760723.508883}},

{'name': 'livingfree', 'vesting_shares': {'amount': 892682798.947933}},

{'name': 'recursive', 'vesting_shares': {'amount': 881978388.39816}},

{'name': 'smooth', 'vesting_shares': {'amount': 872944505.994046}},

{'name': 'bitcube', 'vesting_shares': {'amount': 857132923.66697}},

{'name': 'goku1', 'vesting_shares': {'amount': 855564782.349634}},

{'name': 'slowwalker', 'vesting_shares': {'amount': 814870862.346184}},

{'name': 'ben', 'vesting_shares': {'amount': 785673736.674163}},

{'name': 'pharesim', 'vesting_shares': {'amount': 772191908.833058}},

{'name': 'fulltimegeek', 'vesting_shares': {'amount': 751540385.975086}},

{'name': 'witness.svk', 'vesting_shares': {'amount': 742537008.031217}},

{'name': 'donkeypong', 'vesting_shares': {'amount': 725952873.24769}},

{'name': 'gavvet', 'vesting_shares': {'amount': 722929613.896795}},

{'name': 'xaero1', 'vesting_shares': {'amount': 684089439.968211}},

{'name': 'grumpycat', 'vesting_shares': {'amount': 671119464.205808}},

{'name': 'eeqj', 'vesting_shares': {'amount': 649020354.62877}},

{'name': 'transisto', 'vesting_shares': {'amount': 637944561.30265}},

{'name': 'michael-a', 'vesting_shares': {'amount': 626817548.506146}},

{'name': 'fuzzyvest', 'vesting_shares': {'amount': 626266681.537907}},

{'name': 'eeks', 'vesting_shares': {'amount': 616185095.729982}},

{'name': 'systema', 'vesting_shares': {'amount': 615917285.895589}},

{'name': 'honeybeee', 'vesting_shares': {'amount': 610435961.566472}},

{'name': 'bramd', 'vesting_shares': {'amount': 594999767.618339}},

{'name': 'dan', 'vesting_shares': {'amount': 570531111.463385}},

{'name': 'lafona-miner', 'vesting_shares': {'amount': 529070879.841906}},

{'name': 'arsahk', 'vesting_shares': {'amount': 527728440.803427}},

{'name': 'newsflash', 'vesting_shares': {'amount': 502243245.82727}},

{'name': 'fminerten', 'vesting_shares': {'amount': 501308314.127823}},

{'name': 'neoxian', 'vesting_shares': {'amount': 500858160.187364}},

{'name': 'bhuz', 'vesting_shares': {'amount': 496874738.499231}},

{'name': 'thecyclist', 'vesting_shares': {'amount': 495196258.229233}},

{'name': 'trevonjb', 'vesting_shares': {'amount': 490004354.77295}},

{'name': 'creator', 'vesting_shares': {'amount': 476774626.926905}},

{'name': 'czechglobalhosts', 'vesting_shares': {'amount': 453542876.700221}},

{'name': 'ramta', 'vesting_shares': {'amount': 451840961.095189}},

{'name': 'imadev', 'vesting_shares': {'amount': 445256469.401562}},

{'name': 'roelandp', 'vesting_shares': {'amount': 433678303.134573}},

{'name': 'damarth', 'vesting_shares': {'amount': 432226951.036988}},

{'name': 'hr1', 'vesting_shares': {'amount': 422355147.85433}},

{'name': 'davidding', 'vesting_shares': {'amount': 414003605.758246}},

{'name': 'virus707', 'vesting_shares': {'amount': 412335662.700278}},

{'name': 'newhope', 'vesting_shares': {'amount': 412160978.663641}},

{'name': 'gtg', 'vesting_shares': {'amount': 411489506.732878}},

{'name': 'skan', 'vesting_shares': {'amount': 388810507.997702}},

{'name': 'abdullar', 'vesting_shares': {'amount': 386384818.506917}},

{'name': 'kevinwong', 'vesting_shares': {'amount': 382619111.48287}},

{'name': 'snowflake', 'vesting_shares': {'amount': 372422187.161081}}]

There, that's convenient. All the accounts we expected to find, right there ...

Of course, I had to screw it up a few times, first. You can't do a sort on the data until you have all the data, not just the first 78 accounts to come off the pile. Kind of a big deal that. All pretty now, so far as it goes.

Dare we go balls out and see if anything looks different if we don't filter for our date key?

db = SteemData()

queryBig = db.Accounts.find({},

projection={'name': 1,

'vesting_shares.amount': 1,

'_id': 0}

)

def vestingAmount(queryEntry):

return queryEntry['vesting_shares']['amount']

def sortQuery(queryList):

return sorted(queryList, key=vestingAmount, reverse=1)

# We'll just go ahead and pull the list and sort it sumultaneously.

queryBigList = sortQuery(list(queryBig))

print('Accounts: {}\n'.format(len(queryBigList)))

pprint(queryBigList[:78])

Accounts: 765383

[{'name': 'steemit', 'vesting_shares': {'amount': 90039851836.6897}},

{'name': 'misterdelegation', 'vesting_shares': {'amount': 33854469950.665653}},

{'name': 'steem', 'vesting_shares': {'amount': 21249773925.079193}},

{'name': 'freedom', 'vesting_shares': {'amount': 15607962233.428}},

{'name': 'blocktrades', 'vesting_shares': {'amount': 9496005213.584002}},

{'name': 'ned', 'vesting_shares': {'amount': 7344140982.676874}},

{'name': 'mottler', 'vesting_shares': {'amount': 4617011297.0}},

{'name': 'databass', 'vesting_shares': {'amount': 3500010180.297931}},

{'name': 'hendrikdegrote', 'vesting_shares': {'amount': 3298001762.871842}},

{'name': 'jamesc', 'vesting_shares': {'amount': 3199868835.022211}},

{'name': 'michael-b', 'vesting_shares': {'amount': 3084198458.874888}},

{'name': 'val-b', 'vesting_shares': {'amount': 3058661749.06894}},

{'name': 'proskynneo', 'vesting_shares': {'amount': 3021109391.032705}},

{'name': 'val-a', 'vesting_shares': {'amount': 2895251778.87689}},

{'name': 'ranchorelaxo', 'vesting_shares': {'amount': 2673733493.513266}},

{'name': 'thejohalfiles', 'vesting_shares': {'amount': 2633775823.387832}},

{'name': 'minority-report', 'vesting_shares': {'amount': 2169258956.047093}},

{'name': 'xeldal', 'vesting_shares': {'amount': 2046644131.328833}},

{'name': 'roadscape', 'vesting_shares': {'amount': 1959600356.285733}},

{'name': 'jamesc1', 'vesting_shares': {'amount': 1644781210.539062}},

{'name': 'arhag', 'vesting_shares': {'amount': 1605237829.023531}},

{'name': 'fyrstikken', 'vesting_shares': {'amount': 1548949904.44616}},

{'name': 'adm', 'vesting_shares': {'amount': 1524486923.142378}},

{'name': 'safari', 'vesting_shares': {'amount': 1500015009.426551}},

{'name': 'riverhead', 'vesting_shares': {'amount': 1494178696.187778}},

{'name': 'adsactly', 'vesting_shares': {'amount': 1334951123.54692}},

{'name': 'trafalgar', 'vesting_shares': {'amount': 1330038590.82164}},

{'name': 'batel', 'vesting_shares': {'amount': 1310388186.0}},

{'name': 'created', 'vesting_shares': {'amount': 1247305568.029396}},

{'name': 'tombstone', 'vesting_shares': {'amount': 1210362781.831355}},

{'name': 'smooth-a', 'vesting_shares': {'amount': 1205013219.592872}},

{'name': 'wackou', 'vesting_shares': {'amount': 1114086419.056089}},

{'name': 'bob', 'vesting_shares': {'amount': 1077657897.0}},

{'name': 'glitterfart', 'vesting_shares': {'amount': 1062363158.72496}},

{'name': 'steemed', 'vesting_shares': {'amount': 1051657094.72923}},

{'name': 'jaewoocho', 'vesting_shares': {'amount': 999807983.157001}},

{'name': 'alice', 'vesting_shares': {'amount': 954075907.197174}},

{'name': 'engagement', 'vesting_shares': {'amount': 936760723.508883}},

{'name': 'livingfree', 'vesting_shares': {'amount': 892682798.947933}},

{'name': 'recursive', 'vesting_shares': {'amount': 881978388.39816}},

{'name': 'smooth', 'vesting_shares': {'amount': 872944505.994046}},

{'name': 'bitcube', 'vesting_shares': {'amount': 857132923.66697}},

{'name': 'goku1', 'vesting_shares': {'amount': 855564782.349634}},

{'name': 'yoo1900', 'vesting_shares': {'amount': 820024777.397777}},

{'name': 'slowwalker', 'vesting_shares': {'amount': 814870862.346184}},

{'name': 'ben', 'vesting_shares': {'amount': 785673736.674163}},

{'name': 'pharesim', 'vesting_shares': {'amount': 772191908.833058}},

{'name': 'fulltimegeek', 'vesting_shares': {'amount': 751540385.975086}},

{'name': 'witness.svk', 'vesting_shares': {'amount': 742537008.031217}},

{'name': 'donkeypong', 'vesting_shares': {'amount': 725952873.24769}},

{'name': 'gavvet', 'vesting_shares': {'amount': 722929613.896795}},

{'name': 'alvaro', 'vesting_shares': {'amount': 696470304.0}},

{'name': 'xaero1', 'vesting_shares': {'amount': 684089439.968211}},

{'name': 'grumpycat', 'vesting_shares': {'amount': 671119464.205808}},

{'name': 'eeqj', 'vesting_shares': {'amount': 649020354.62877}},

{'name': 'transisto', 'vesting_shares': {'amount': 637944561.30265}},

{'name': 'michael-a', 'vesting_shares': {'amount': 626817548.506146}},

{'name': 'fuzzyvest', 'vesting_shares': {'amount': 626266681.537907}},

{'name': 'eeks', 'vesting_shares': {'amount': 616185095.729982}},

{'name': 'systema', 'vesting_shares': {'amount': 615917285.895589}},

{'name': 'honeybeee', 'vesting_shares': {'amount': 610435961.566472}},

{'name': 'bramd', 'vesting_shares': {'amount': 594999767.618339}},

{'name': 'dan', 'vesting_shares': {'amount': 570531111.463385}},

{'name': 'lafona-miner', 'vesting_shares': {'amount': 529070879.841906}},

{'name': 'arsahk', 'vesting_shares': {'amount': 527728440.803427}},

{'name': 'newsflash', 'vesting_shares': {'amount': 502243245.82727}},

{'name': 'fminerten', 'vesting_shares': {'amount': 501308314.127823}},

{'name': 'neoxian', 'vesting_shares': {'amount': 500858160.187364}},

{'name': 'bhuz', 'vesting_shares': {'amount': 496874738.499231}},

{'name': 'thecyclist', 'vesting_shares': {'amount': 495196258.229233}},

{'name': 'trevonjb', 'vesting_shares': {'amount': 490004354.77295}},

{'name': 'creator', 'vesting_shares': {'amount': 476774626.926905}},

{'name': 'czechglobalhosts', 'vesting_shares': {'amount': 453542876.700221}},

{'name': 'ramta', 'vesting_shares': {'amount': 451840961.095189}},

{'name': 'imadev', 'vesting_shares': {'amount': 445256469.401562}},

{'name': 'roelandp', 'vesting_shares': {'amount': 433678303.134573}},

{'name': 'damarth', 'vesting_shares': {'amount': 432226951.036988}},

{'name': 'hr1', 'vesting_shares': {'amount': 422355147.85433}}]

But are they different?

# Let's explicitly _only_ compare on the names in either list. The

# actual numbers might change between subsequent runs.

def makeNameList(qList):

initList=[]

for e in qList:

initList.append(e['name'])

return initList

queryNames = makeNameList(queryList)

queryBigNames = makeNameList(queryBigList)

def Diff(li1, li2):

li_dif = [i for i in li1 + li2 if i not in li1 or i not in li2]

return li_dif

Diff(queryNames[:78], queryBigNames[:78])

['davidding',

'virus707',

'newhope',

'gtg',

'skan',

'abdullar',

'kevinwong',

'snowflake',

'mottler',

'ranchorelaxo',

'batel',

'smooth-a',

'bob',

'alice',

'yoo1900',

'alvaro']

Apparently they are!

It's kind of interesting that @ranchorelaxo is in the differentiated list. That makes me wonder if my original analysis was simply too optimistic.

Easy enough to rerun that setup, though. Let's grab some additional code and see what happens.

First, we'll just grab all the helper functions.

def query2tup(idx, queryEntry):

return (idx, queryEntry['name'], vestingAmount(queryEntry))

def queryList2queryTupList(queryList):

idx = 0

outList = []

for e in queryList:

outList.append(query2tup(idx, e))

idx += 1

return outList

def query2csl(queryEntry):

return "{}, {}".format(queryEntry['name'],

queryEntry['vesting_shares']['amount'])

def outputCSL(qryList):

cnt = 0

with open('accountRanked.CSV', 'w') as outFile:

outFile.write("Index, Name, Vests\n")

for lne in qryList:

outFile.write("{}, {}\n".format(cnt,

query2csl(lne)))

cnt += 1

print('Total lines written: {}\n'.format(cnt))

def computeMidbreak(tupQueryList):

totVests = sum([e[2] for e in tupQueryList])

halfVests = totVests / 2

print('Total vests: {}, half vests: {}\n'.format(totVests,

halfVests))

accumVests = 0

for e in tupQueryList:

accumVests += e[2]

if accumVests > halfVests:

print('Rank {} - {} reaches {} of {}!\n'.format(e[0],

e[1],

accumVests,

halfVests))

break

else:

if (e[0] % 5000) == 0:

print('Accum rank {} - {}, {} of {}\n'.format(e[0],

e[1],

accumVests,

halfVests))

Then we just reconstruct the tuple map to speed up things on the big map and write it out to disk.

qBigTup = queryList2queryTupList(queryBigList)

pprint(qBigTup[:30])

[(0, 'steemit', 90039851836.6897),

(1, 'misterdelegation', 33854469950.665653),

(2, 'steem', 21249773925.079193),

(3, 'freedom', 15607962233.428),

(4, 'blocktrades', 9496005213.584002),

(5, 'ned', 7344140982.676874),

(6, 'mottler', 4617011297.0),

(7, 'databass', 3500010180.297931),

(8, 'hendrikdegrote', 3298001762.871842),

(9, 'jamesc', 3199868835.022211),

(10, 'michael-b', 3084198458.874888),

(11, 'val-b', 3058661749.06894),

(12, 'proskynneo', 3021109391.032705),

(13, 'val-a', 2895251778.87689),

(14, 'ranchorelaxo', 2673733493.513266),

(15, 'thejohalfiles', 2633775823.387832),

(16, 'minority-report', 2169258956.047093),

(17, 'xeldal', 2046644131.328833),

(18, 'roadscape', 1959600356.285733),

(19, 'jamesc1', 1644781210.539062),

(20, 'arhag', 1605237829.023531),

(21, 'fyrstikken', 1548949904.44616),

(22, 'adm', 1524486923.142378),

(23, 'safari', 1500015009.426551),

(24, 'riverhead', 1494178696.187778),

(25, 'adsactly', 1334951123.54692),

(26, 'trafalgar', 1330038590.82164),

(27, 'batel', 1310388186.0),

(28, 'created', 1247305568.029396),

(29, 'tombstone', 1210362781.831355)]

Looks good! Out to disk.

outputCSL(queryBigList)

Total lines written: 765383

Now, we could just run our naked analysis to double-check that things are as we suspected.

computeMidbreak(qBigTup)

Total vests: 379032157821.6713, half vests: 189516078910.83566

Accum rank 0 - steemit, 90039851836.6897 of 189516078910.83566

Rank 9 - jamesc reaches 192207096217.31546 of 189516078910.83566!

That is actually significantly different than expected! Completely untrimmed, with the big corporate accounts still sitting in the pile, without any kind of filtering for account activity, the number of accounts at the top with equal amounts of SP for those at the bottom moves down to rank nine.

Let's see what happens when we do a basic decapitation.

computeMidbreak(qBigTup[4:])

Total vests: 218280099875.7508, half vests: 109140049937.8754

Rank 89 - freeyourmind reaches 109146400926.60837 of 109140049937.8754!

Without filtering looking for accounts which have been relatively recently active and just haven't been SP pools from the early days which haven't been touched, the 50% mark moves down to rank 90 from rank 73.

That's not really a huge improvement, truth be told. Instead, what we have is a pretty massive amount of SP which is just sitting around, not doing anything, in accounts which haven't been active for a very, very long time.

Remember my reference to @ranchorelaxo? You do remember what fun he's been involved in most recently, right?

At the time, the site the image was screenshotted had a bug that showed @haejin receiving 6.7% of the reward pool. It was later found this was a bug, and the owner of the site patched it. As you can see it reports over 2,700% of the reward pool which is not even possible.

I provided an updated screenshot and some charts showing one user @ranchorelaxo voting for the majority of the post value.

That wasn't the opening salvo of the Whale Wars, but it was one of the flareups and the ongoing skirmishes. Fallout continues to be widespread.

Note that this is one of the accounts which is different between my filter versions.

Time to rerun the graphs?

import plotly

import plotly.plotly as py

import plotly.graph_objs as go

trace0 = go.Scattergl(

name = 'Raw',

x = [e[0] for e in qBigTup],

y = [e[2] for e in qBigTup],

hoverinfo = "x+y+text",

text = [e[1] for e in qBigTup] )

layout = go.Layout(

showlegend=True,

legend = {'x': 'Rank', 'y': 'Vests'},

title = "Steemit Power Distribution (full, linear)",

xaxis = {'showgrid': True,

'title': "Rank"},

yaxis = {'showgrid': True,

'title': "Vests"})

layoutLog = go.Layout(

showlegend=True,

legend = {'x': 'Rank', 'y': 'Vests'},

title = "Steemit Power Distribution (full, log)",

xaxis = {'showgrid': True,

'title': "Rank"},

yaxis = {'showgrid': True,

'title': "Vests",

'type': 'log'})

layout

{'legend': {'x': 'Rank', 'y': 'Vests'},

'showlegend': True,

'title': 'Steemit Power Distribution (full, linear)',

'xaxis': {'showgrid': True, 'title': 'Rank'},

'yaxis': {'showgrid': True, 'title': 'Vests'}}

layoutLog

{'legend': {'x': 'Rank', 'y': 'Vests'},

'showlegend': True,

'title': 'Steemit Power Distribution (full, log)',

'xaxis': {'showgrid': True, 'title': 'Rank'},

'yaxis': {'showgrid': True, 'title': 'Vests', 'type': 'log'}}

data = go.Data([trace0])

py.plot({'data': data,

'layout': layout},

filename = 'Extended Steemit Power Curve (linear)')

https://plot.ly/~AlexanderWilliams/4/steemit-power-distribution-full-linear/

py.plot({'data': data,

'layout': layoutLog},

filename = 'Extended Steemit Power Curve (log)')

https://plot.ly/~AlexanderWilliams/6/steemit-power-distribution-full-log/

That doesn't look different at all. (Except that with this version you can actually go and drill down into the system by dragging a box – and all you will see is that the knee is very small.)

At least until we come to the logarithmically plotted version. In the unfiltered graph, you can see a strange little plateau happening between rank 65,000 and 165,000 where the rate of decline is slightly less.

Figuring out what those accounts have in common that others do not might be particularly interesting.

You can tell from mousing over the curve in the generated plots that there is a fair amount of differentiation between parts of the slope. A closer examination shows subtle but visible breakpoints at round numbers. For instance, a small plateau is visible between 10,000 vests and 15,000 vests, with another visible between 18,000 vests and roughly 19,000 vests.

These are extremely small bands, and represent incredibly small portions of the curve at this point.

If we look down at the other end, you can see stairstep effects down at roughly 1000 vests down to 500 (which is about the one steem level), another plateau at 200, another plateau at 100, and another plateau at 20. These are pretty obviously breakpoints related to various fractions of a single steem in SP.

Cool is that is, that's not what we came for! Now that we have the data, we should probably work out relationships between high-ranking accounts, if we can.

(You really don't want to know how many hours I just got distracted down the rabbit hole of rerunning these numbers and getting good interactive charts. Or maybe you do! Four. Four hours.)

Time to change toolchains!

Let's start generating a list of interesting accounts. Let's say -- the top 100 accounts? That sound good?

acctsInterest = [n[1] for n in qBigTup[:100]]

acctsInterest.remove('blocktrades')

acctsInterest[:10]

['steemit',

'misterdelegation',

'steem',

'freedom',

'ned',

'mottler',

'databass',

'hendrikdegrote',

'jamesc',

'michael-b']

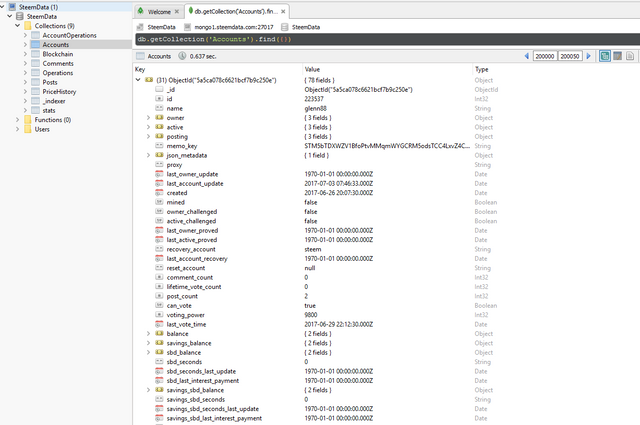

We are initialized, we have a list of interesting targets, now let's look at the data we can get about them and consider what kind of database query we want to put together.

The truth is – not bloody much. It looks like we're going to have to actually query operations on the blockchain to see what kind of interactions we can figure out. Messy, but doable.

Let's take a look at what those things look like. How about specifically transfers which have occurred in the last 30 days between any of the accounts in this list?

constraints = {

'type': 'transfer',

'timestamp': {'$gte': dt.now() - datetime.timedelta(days=30)},

}

constraints

{'timestamp': {'$gte': datetime.datetime(2018, 1, 17, 0, 12, 6, 275328)},

'type': 'transfer'}

transDB = db.Operations.find(constraints,

projection = {'from': 1, 'to': 1})

transList = list(transDB)

len(transList)

1650408

transList[:10]

[{'_id': 'abb8c8a8c58b81a261b9005748b456da30cc57e2',

'from': 'sajumiah',

'to': 'alimuddin'},

{'_id': '1c4fc345665baf57b8517aa3adf0edd3ac8f0f4c',

'from': 'ksolymosi',

'to': 'rocky1'},

{'_id': 'e48dfe538de184bbe2464d0384b3be09d22bbff1',

'from': 'rball8970',

'to': 'mitsuko'},

{'_id': '05a584b548004c52f624da1dfd516fd3950c7e25',

'from': 'minnowbooster',

'to': 'hanitasteemer'},

{'_id': '663c9b11f9359c81be5b34ba7cbf5593d9bacd59',

'from': 'juwel',

'to': 'blocktrades'},

{'_id': 'c2f001a58f8b551b0bc95782eaad4769483e6044',

'from': 'alirajput',

'to': 'resteembot'},

{'_id': '498c3739ced0990b4778d26220a9ad2a939d4bf3',

'from': 'krguidedog',

'to': 'hyunlee1999'},

{'_id': '1f515588b8bc003d969c426ffd43e18b7cc1ccf0',

'from': 'jwbonnie',

'to': 'rusia'},

{'_id': 'e5972ec87747529cabea6901d6d82b1bd9829402',

'from': 'kennybll',

'to': 'blocktrades'},

{'_id': '23a0c85e2cf796d1fcd4a80d13dfa4b965c0e5a5',

'from': 'akumar',

'to': 'freedom'}]

1.6 million transactions is nothing to sneeze at. Though we can definitely filter these down with a little judicious data management.

def filtTransList(TL):

outTL = []

for e in TL:

if e['from'] in acctsInterest or e['to'] in acctsInterest:

outTL.append(e)

return outTL

filtTL = filtTransList(transList)

len(filtTL)

6413

We've cut it down by 1/3! That still ends up with 63 thousand edges, which is going to be uncomfortably large for dealing with any kind of graph.

Maybe the approach here is to only take unique pairings.

(PS: After removing blocktrades, it's dropped to 6400. Kee-rist.)

filtTL = [ (e['from'], e['to']) for e in filtTL]

filtTL[:10]

[('akumar', 'freedom'),

('akumar', 'freedom'),

('nayya24', 'ramta'),

('nayya24', 'ramta'),

('ghiyats', 'ramta'),

('minnowbooster', 'smooth-b'),

('julybrave', 'hr1'),

('miftahuddin', 'ramta'),

('eeqj', 'blocktrades'),

('boomerang', 'spmarkets')]

from more_itertools import unique_everseen

uFiltTL = list(unique_everseen(filtTL))

len(uFiltTL)

2200

That certainly cut down the number of elements that were dealing with. Only 20,000 edges.

This might be acceptable. Or it might not. It's really hard to tell. If it turns out that we need to cut down the amount a bit, at this point it just might be utterly arbitrary.

So we have a list of all the transactions that involve the accounts we're interested in. Necessarily we also have a list of all the accounts who have interacted with the ones we're interested in.

Now we just need to create a list of nodes that aren't in our interest list, make nodes for all of the accounts we're interested in, and start drawing some edges.

(PS: And another order of magnitude drop after removing @blocktrades. Sensible, since it's an exchange, but really ... These PS will only make sense on your second read-through. It's like time-folded narrative!)

assocAccts = set()

for e in uFiltTL:

a1, a2 = e[0], e[1]

if not a1 in acctsInterest:

assocAccts.add(a1)

if not a2 in acctsInterest:

assocAccts.add(a2)

len(assocAccts)

1218

Not bad. We have 100 accounts of interest and 15k associated accounts. For operations which have involved transfers for the last 30 days.

That's probably well more than enough to work with.

(PS: Another order of magnitude cut. I might be able to increase the timeframe back to 90 days at this rate.)

Let's load up the graphviz module and then tinker.

from graphviz import Digraph

dot = Digraph(comment="Steem Relations",

format="jpg",

engine="sfdp")

dot.attr('graph', ratio='auto')

dot.attr('graph', scale='100000,100000')

dot.attr('graph', nodesep='50')

dot.attr('graph', sep='+250,250')

dot.attr('node', shape='square', style='filled', color='green', fillcolor='green')

for a in acctsInterest:

dot.node(a, a)

dot.attr('node', shape='oval', color='black', fillcolor='white')

for a in assocAccts:

dot.node(a, a)

for e in uFiltTL:

dot.edge(e[0], e[1])

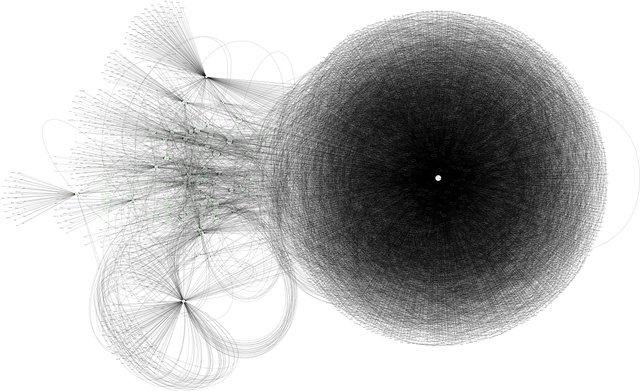

That may just be a little bit extreme. Considering that it is only localizing the top 100 accounts and the activity of the last 30 days, it's quite nuts.

Of course, the problem is @blocktrades. Of course it's at the center of a crazy nest of activity.

Let me go back and remove it, specifically, from our list of interesting targets.

dot.render('steemTransferRelationships')

'steemTransferRelationships.jpg'

You're probably going to want to download that image to fully take in what you're seeing.

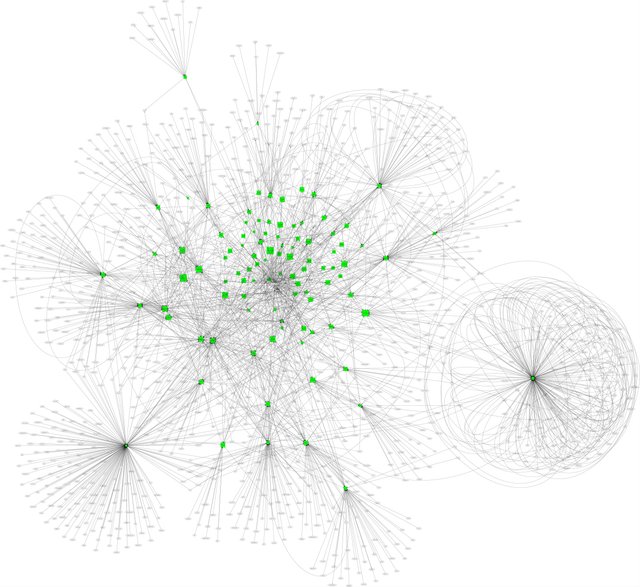

Once blocktrades was removed, and if you color touchups were done, things became a little bit clearer. It's actually interesting to note where there are isolated nexi of transfers largely isolated from the rest of the network that was involved with the top 99.

There is a very tight cluster involved with @hr1 in the southwest which is extremely dense and which has relatively little crossover, surprisingly. Where they do it appears to involve @arama and @newhope on the south side.

@ramta is a big deal in the southeast, with a lot of accounts involved with transferring funds within and between each other. I'm not sure what's going on there, but it's interesting. There's a lot of give-and-take.

Up in the far north, @bramd has his own little thing going on. @gtg likewise. Unlike some of the outer clusters elsewhere, they just shoot off to the side and those they interact with by trading appear to take their further exchanges somewhere farther from the top.

The core areas are pretty dense with intra-group trade, which tells us pretty clearly that – at least for the last month – a lot of the activity at the top is largely incestuous. Transfers are going through a fairly tight network of accounts around and around.

This is pretty interesting stuff.

Hopefully someone with more talent for financial analysis will find this sort of relationship analysis and visualization interesting enough to pursue. It's not really my forte, sad to say. Thus I'm making my code and research available for anyone who'd like to come along and take their turn.

Juicy incest!

There are no words. Your knowledge is surprising.

If it's not a secret, how much did you spend your time creating this article?

Well, my knowledge is spotty and a little inconsistent at best. It's been many years since I did any kind of programming at a significant sitting. The piles of reference material that I had around my virtual seat were not to be trifled with.

So – if you count the amount of time that I put in on the problem yesterday, which led to me refining that work into a more precise and better presented set of graphs regarding who is at the top of the blockchain, plus the work today in getting plotly connected up without a problem and starting to work on the graphviz integration with my development environment…

Maybe 12 hours, total? Most of them today, most of them flailing around at graphviz to get it to output content that looked compelling and actually was explorable. Not to mention working out a few more things about how to control database queries to SteamData because I didn't want to keep banging on them too heavily.

data posts take ages, love what you have done here. Keep it up

The funny thing is that at this point, the part that takes the most time is actually processing the data to build the graphs and charts. Figuring out how to get the data, pulling the data, getting the data into usable chunks – that's at most an hour. Doing the actual writing and analysis? A couple of hours at worst, probably because I'm not very good at it.

Waiting for massive amounts of data to get chunked into various presentation formats? Even with the amount of CPU I have to throw at the problem – well, there's always plenty of time when tinkering with these things to get a snack. Or a few rounds of Atlas Reactor.

So now I just have to figure out more things to draw lines around.

I really don't know what any of this means, but have an upvote for putting so much detail and work into this. I'm going to follow, just so I can learn more about this world.

Cheers!

Just so we're clear, this isn't my usual type of content. But sometimes you have a moment of inspiration and get curious about things, which leads to all sorts of shenanigans.

We're clear, but along with your other posts, I want to be able to come back to this when I understand it better. Curiosity is what keeps me going.

If nothing else, my next set of graphs will be terribly pretty.

They will also outline actual proof that most of the transactions involving the top 200 accounts on the steem blockchain are incoming rather than outgoing. That is, at least by quantity if not quality, most of the funding is flowing into those accounts, not out of them.

Those numbers might change once I figure out a good way to put the actual quantities on the graph, but that's a preliminary finding.