CLASSICAL ARGUMENTS OF EMPTINESS AND AETHER (Pt. 5 of 5: Laplace, Faraday, Maxwell, Planck, and Einstein)

Fittingly, the resurgence of the aether theory was immediately followed by the discovery of a direct relationship between electricity and magnetism. As the story goes, Hans Christian Orsted was giving a lecture on electricity and magnetism when he became convinced that the two phenomena were connected. He decided to discharge a Leyden jar with a compass resting nearby on the same table, confident that the discharge would affect the compass needle and dazzle the crowd. When he discharged the jar, the needle jumped for a moment and then wobbled back into place, but no one in the audience could see well enough to tell what had happened. Orsted left the lecture in relative disgrace, but he had proved to himself that electricity and magnetism were connected. Andre-Marie Ampere later assisted Orsted in publishing the discovery, extended the research, and demonstrated that parallel current-carrying wires attract or repel one another depending on the direction of their currents.

Ampere and Orsted had shown the world that electricity and magnetism were in some way connected, but it took several years before anyone understood this connection in depth. Michael Faraday was responsible for much of this progress. Faraday spent his teenage years as a bookbinder’s apprentice, learning chemistry and electrical theory by reading the books he was supposed to be binding. He was particularly taken with Humphry Davy’s lectures on electrolysis (the driving of chemical reactions by electric current) and on the relationship between work and heat. Faraday sent Davy his notes and comments on the lectures, and Davy was so impressed that in 1813 he hired the 22-year-old Faraday as secretary and personal assistant for his continental tour.

Faraday’s career blossomed quickly from there. In 1815 he became a resident at the Royal Institution of Great Britain; in 1821 he published his discovery of electromagnetic rotation; in 1823 he published a paper on the liquefaction and solidification of gases; and in 1825 he discovered benzene and was made Director of the Laboratory at the Royal Institution. In 1831, two years after the death of his mentor Humphry Davy, Faraday made his most important discoveries. He discovered electromagnetic induction when he ran an electric current through a loop of wire and, in the process, generated a current in a nearby loop of wire. Shortly thereafter, he attempted to generate an electric current by placing a magnet near one of his wire loops. The stationary magnet did nothing, but as he moved the magnet closer to the wire a small current was created, prompting the conclusion that a changing magnetic field produces an electric field.
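Faraday’s conclusion is what is now written as Faraday’s law of induction, EMF = -N dΦ/dt, where Φ is the magnetic flux through a loop of N turns. The Python sketch below is a hypothetical numerical illustration, not anything Faraday computed: it assumes the magnet can be treated as a point dipole on the axis of a small loop (reasonable only when the magnet is far away compared with the loop’s size) and estimates the rate of change of flux by a finite difference. A magnet held still induces nothing; the same magnet moving toward the loop does.

```python
import math

MU0 = 4 * math.pi * 1e-7  # vacuum permeability, T*m/A (classical defined value)


def flux_through_loop(z, magnet_moment=1.0, loop_radius=0.01):
    """Approximate flux (Wb) through a small loop a distance z (m) from a bar
    magnet treated as a point dipole on the loop's axis (assumption).

    Assumes z >> loop_radius so the field is nearly uniform over the loop:
    B = mu0 * m / (2 * pi * z**3).
    """
    b_on_axis = MU0 * magnet_moment / (2 * math.pi * z ** 3)
    return b_on_axis * math.pi * loop_radius ** 2


def induced_emf(position, t, dt=1e-6, turns=1):
    """Faraday's law, EMF = -N dPhi/dt, estimated with a central difference.

    `position(t)` gives the magnet's distance from the loop at time t.
    """
    phi_before = flux_through_loop(position(t - dt))
    phi_after = flux_through_loop(position(t + dt))
    return turns * (phi_before - phi_after) / (2 * dt)  # equals -N * dPhi/dt


def stationary(t):
    return 0.10  # magnet held still, 10 cm from the loop


def approaching(t):
    return 0.10 - 0.5 * t  # magnet moving toward the loop at 0.5 m/s


print(induced_emf(stationary, t=0.05))   # 0.0: a steady field induces nothing
print(induced_emf(approaching, t=0.05))  # nonzero while the magnet is moving
```

Only the change in flux matters: the stationary magnet may produce a strong field at the loop, but because that field never changes, the computed EMF is exactly zero.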

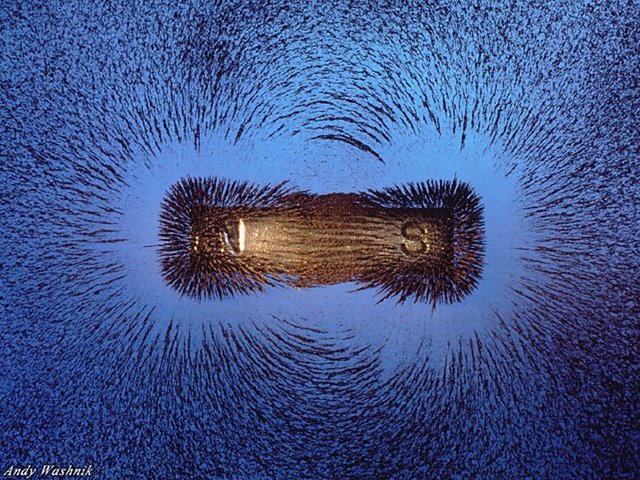

In the same landmark year, Faraday discovered the physical reality of the magnetic field. He placed iron filings near magnets and found that the filings organized themselves into symmetrical lines emanating from and surrounding the magnet. He concluded that electromagnetic induction “never occurred except through the influence of intervening matter” and that “the whole action is one of contiguous particles…” Faraday takes care to specify that by “contiguous particles” he means “those which are next to each other, not that there is no space between them.” He endorses a subtle medium, but rather than subscribe to the elastic, ubiquitous medium supposed by Fresnel, Cauchy, Young, and others, Faraday explains electromagnetic phenomena through the collisions of his subtle particles. In this way, his electromagnetic theory strongly resembles the kinetic theory of heat, which also relies upon collisions between neighboring particles, only on a larger scale. This similarity would ultimately aid the popularity of Faraday’s theory, as the kinetic theory of heat would soon defeat the caloric theory, owing to the work of Julius von Mayer and James Prescott Joule in the early 1840s.

While Faraday did not specifically address the philosophical issues of the vacuum or action at a distance, his work provided a logical, experimentally validated counterpoint to the arbitrarily defined force laws of Laplace and other predecessors. Laplace was content to invent a new mathematical “law” for every physical process, and Coulomb and his contemporaries relied upon three different fluids to explain electromagnetic phenomena, but Faraday validated the idea of seeking common causes behind disparate phenomena. In 1845, upon discovering a relationship between electromagnetism and polarized light, Faraday expressed his scientific philosophy as follows: “I have long held an opinion, almost amounting to a conviction, in common, I believe, with many other lovers of natural knowledge, that the various forms under which the forces of matter are made manifest have one common origin; or, in other words, are so directly related and mutually dependent that they are convertible, as it were, one into another, and possess equivalents of power in their action.” Faraday never succeeded in reducing his physics to a common origin, but his experimental discoveries and his “lines of force” were significant steps in the right direction.

Faraday’s spiritual heir was undoubtedly James Clerk Maxwell, whose first major breakthrough in electromagnetism was showing that a changing electric field produces a magnetic field, a complement to Faraday’s earlier discovery that a changing magnetic field produces an electric field. Taken together, these findings implied that a change in either field would set off a self-sustaining chain reaction, each change in one field producing a change in the other, and that these linked disturbances would propagate outward in the form of an electromagnetic wave.

Maxwell had long been obsessed with understanding the physical and mechanical nature of Faraday’s lines of force. Previous discussions of the magnetic force lines had focused on their geometric structure, but now Maxwell sought to understand them as consequences of the motion of the aether, which he believed to be the medium of the electric and magnetic fields. Maxwell proposed the aether as an incompressible fluid moving through the “tubes” of the lines of force, but he was frustrated by the arbitrary nature of this description and its lack of explanatory power. He disparagingly called it “not even a hypothetical fluid” but instead “a collection of imaginary properties.” Despite this lack of progress, he remained determined to understand the nature of electromagnetism.

Maxwell had another major breakthrough in 1861 when he found that the speed of electromagnetic waves was related in a simple way to two constants appearing in Ampere’s circuital law: the electric permittivity and the magnetic permeability. He discovered that these two constants, combined as one over the square root of their product, yielded not only the hypothetical speed of electromagnetic waves but also a close approximation to the measured speed of light, implying that light was in fact an electromagnetic phenomenon. He quickly wrote to Michael Faraday and William Thomson to declare that “the magnetic and luminiferous media are identical.”
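In modern notation the relationship is v = 1/√(μ₀ε₀). The short Python check below plugs in present-day SI values for the two constants (an assumption for illustration; Maxwell himself worked from 19th-century electrical measurements, not these numbers) and recovers the speed of light.

```python
import math

# Modern SI values, assumed here for illustration.
MU0 = 4 * math.pi * 1e-7   # magnetic permeability of free space, H/m
EPS0 = 8.8541878128e-12    # electric permittivity of free space, F/m


def wave_speed(mu, eps):
    """Speed of electromagnetic waves in a medium: v = 1 / sqrt(mu * eps)."""
    return 1.0 / math.sqrt(mu * eps)


c = wave_speed(MU0, EPS0)
print(f"{c:.4e} m/s")  # prints 2.9979e+08 m/s, matching the measured speed of light
```

Nothing optical enters the calculation: both inputs come from electrical and magnetic measurements, which is exactly why the agreement was so striking.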

If Maxwell was right that light was a form of electromagnetic wave, then it would stand to reason that there would be other forms of light: electromagnetic waves of different wavelengths which would not necessarily be visible to the human eye. The German physicist Heinrich Hertz set out to find them, and he succeeded. He produced radio waves in his laboratory, adding further experimental credibility to Maxwell’s discovery. Hermann von Helmholtz declared to the world, “There can no longer be any doubt that light waves consist of electric vibrations in the all-pervading ether, and that the latter possesses the properties of an insulator and a magnetic medium.”

Maxwell’s discovery was hailed as a triumph, and his vision of the aether began to change to accommodate his new understanding. While his theory of the lines of force had treated the aether as an incompressible fluid, he now imagined it as an elastic one, able to carry undulations which manifest themselves as electricity, magnetism, and light. This new conception allowed him to see the gravitational potential mathematics of Laplace, Lagrange, Green, and Poisson from a different perspective. If space itself were responsible for the transmission of light and electromagnetism, it would be intuitive to believe that gravity also arises from a physical property of space and not merely from action at a distance, and thus Maxwell suggested to his peers that they direct their attention to the potential function “as if it were a real property of the space in which it exists.”

Not until Einstein’s theory of General Relativity would this feat be accomplished in a practically useful manner; however, Maxwell’s peer William Thomson had made impressive attempts at that goal even before Maxwell’s discovery of the electromagnetic nature of light. Thomson had established that Faraday’s theory of electromagnetic induction via contiguous particles could be reconciled with the traditional, Laplacian mathematics of action at a distance and potential theory, and perhaps it was partly Thomson’s own interest that led Maxwell down a similar path.

Thomson is best remembered for his 1848 invention of the Kelvin temperature scale, whose zero point is absolute zero: the hypothetical complete absence of heat and kinetic energy. Like Faraday, he was convinced that all phenomena arose from a single source. He spent 1856 and 1857 studying Faraday’s magneto-optic effect, and on publishing his studies he claimed that “the explanation of all phenomena of electro-magnetic attraction and repulsion, and of electro-magnetic induction, is to be looked for simply in the inertia and pressure of the matter of which the motions constitute heat.” Thomson believed that heat and electromagnetism arose from the motion of matter, and in this belief it might appear that he held an atomistic view of the universe. On the contrary, he was already searching for an alternative to the concept of fundamental, indivisible atoms. He and his friends George Stokes and P.G. Tait all believed in the existence of the aether. Unlike other proponents of the aether, however, they were unsatisfied with the atomistic, “subtle particle” explanation of their elastic medium.

Thomson found tremendous inspiration in the work of his peer Hermann von Helmholtz. Helmholtz had already influenced Thomson with his 1847 paper on the conservation of energy, which explained the role of energy conservation in musculature, nerve physiology, and electrical discharge. As a student of Helmholtz’s work, Thomson was thoroughly intrigued when Helmholtz later analyzed an incompressible, frictionless fluid. In this ideal fluid, Helmholtz showed that portions of the fluid that are not rotating will never begin to rotate, while portions of the fluid that are in rotational motion will keep their rotation. This was an expected result: since the fluid did not allow for friction, there was nothing available to change the fluid’s rotational motion. As a result, a vortex, which is a portion of fluid in rotational motion, would always maintain the same proportions and would be immune to dissipation, existing forever. Helmholtz also showed that the ‘strength’ of a vortex, defined as the product of the local circumference and the rotational velocity at its edge, would remain constant over the entire length of the filament-like “vortex tube.” He proposed that vortex tubes, if frictionless, could become entangled without destroying the vortex.
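For a vortex whose core rotates like a solid body, this ‘strength’ is what is now called the circulation: the line integral of the fluid velocity around the core, which works out to circumference times edge speed. The Python sketch below is a hypothetical illustration using an idealized, rigidly rotating core (not Helmholtz’s own analysis). It checks the circulation numerically and then shows one consequence of a strength that is constant along the tube: where the tube narrows to half its radius, it must spin four times as fast.

```python
import math


def solid_body_velocity(x, y, omega):
    """Velocity (vx, vy) of fluid rotating rigidly about the z-axis at angular speed omega."""
    return -omega * y, omega * x


def circulation(velocity, radius, omega, n=10_000):
    """Numerically integrate v . dl around a circle of the given radius."""
    total = 0.0
    dtheta = 2 * math.pi / n
    for i in range(n):
        theta = i * dtheta
        x, y = radius * math.cos(theta), radius * math.sin(theta)
        vx, vy = velocity(x, y, omega)
        # Tangent element along the circle: dl = radius * dtheta * (-sin, cos)
        dlx = -math.sin(theta) * radius * dtheta
        dly = math.cos(theta) * radius * dtheta
        total += vx * dlx + vy * dly
    return total


def strength(radius, omega):
    """Vortex strength as circumference times edge speed."""
    return (2 * math.pi * radius) * (omega * radius)


wide_radius, wide_omega = 0.02, 10.0  # assumed: 2 cm core spinning at 10 rad/s
gamma = circulation(solid_body_velocity, wide_radius, wide_omega)
print(gamma, strength(wide_radius, wide_omega))  # the two values agree

# Same strength further along the tube, where it has narrowed to half the radius:
narrow_radius = wide_radius / 2
narrow_omega = gamma / (2 * math.pi * narrow_radius ** 2)
print(narrow_omega)  # about 40 rad/s, four times the wide section's spin
```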

Thomson and Tait were both enamored with the idea, not because they believed the aether to be frictionless, but because they saw the utility of explaining atoms and other particulate phenomena as fluid vortices instead of blocks of matter; moreover, after Maxwell’s discovery of the electromagnetic nature of light, it seemed inevitable that all phenomena would eventually be understood as motions in the aether. P.G. Tait had in his laboratory a mechanism for shooting smoke rings, so the pair indulged their curiosity by firing smoke rings into each other and observing how they rebounded. Both saw the surprising integrity of the smoke vortices as support for the vortex theory of atoms. Thomson wrote: “Two smoke-rings were frequently seen to bound obliquely from one another, shaking violently from the effects of the shock. The result was very similar to that observable in two large india-rubber rings striking one another in the air. The elasticity of each smoke-ring seemed no further from perfection than might be expected in a solid india-rubber ring of the same shape, from what we know of the viscosity of india-rubber. Of course this kinetic elasticity of form is perfect elasticity for vortex rings in a perfect liquid. It is at least as good a beginning as the ‘clash of atoms’ account for the elasticity of gases.”

But the smoke-ring experiment had only proved that vortices could collide, rebound, and persist; it did nothing to explain the diversity of atomic forms. To remedy this shortcoming, the pair turned to Helmholtz’s claim that frictionless vortex tubes could be entangled without disrupting the flow. Tait compiled an exhaustive list of knotted loops, drawing an analogy between each knot and a different element of the periodic table. The infinite variety of the knots was more than sufficient to account for the varieties of known matter. The knot explanation may seem preposterous to the modern reader, but it impressed Thomson and Tait’s contemporaries and a panel of the Royal Society. Between 1870 and 1890, the vortex theory of the atom became immensely popular; about sixty papers on the theory were written by twenty different scientists. George FitzGerald acknowledged the flexibility of the theory and concluded that “with the innumerable possibilities of fluid motion it seems impossible but that an explanation of the properties of the universe will be found in this conception.”

In 1893 Thomson wrote, “During the fifty-six years which have passed since Faraday first offended physical mathematicians with his curved lines of force, many workers and many thinkers have helped to build up the nineteenth-century school of plenum, one ether for light, heat, electricity, magnetism; and the German and English volumes containing Hertz’s electrical papers, given to the world in the last decade of the century, will be a permanent monument of the splendid consummation now realized.” In the preceding years, Heinrich Hertz had furthered Maxwell’s research by transmitting and detecting electromagnetic waves in his laboratory. Nikola Tesla developed the polyphase alternating-current system and, inspired by the work done by Hertz, pioneered wireless electrical technology and long-distance radio. Within a few years, Marconi would use Tesla’s radio patents to build the first commercially available radios and to send a message across the Atlantic Ocean, work for which he shared the 1909 Nobel Prize in Physics. All of these pioneers, from Maxwell to Marconi, took the existence of the aether for granted. The aether was the medium through which electromagnetic waves propagated (they were commonly referred to as aetherial waves), and thus the aether was rightly given credit for much of the scientific and technological progress pouring out at the turn of the century. Thomson believed these accomplishments would be a “permanent monument” to the school of plenum, but he was sadly mistaken. Already the foundation of the monument was beginning to crack.

The Equipartition Theorem, derived by Ludwig Boltzmann in 1876, states that in a volume of gas or liquid in which the particles exchange energy through mutual collisions, the total energy is, on average, distributed equally among all of the particles (strictly, among their independent degrees of freedom, each of which receives on average ½kT at temperature T, where k is Boltzmann’s constant). Writing E for the total energy in a container and N for the total number of particles, the average energy per particle is E/N. If the particles are all identical, then they will on average have the same speeds and kinetic energies. If we have a mixture of gases or liquids, then the heavier particles will have lower speeds and the lighter particles higher speeds, such that the average kinetic energy remains equally distributed among all of them. Toward the end of the nineteenth century, Lord Rayleigh and Sir James Jeans attempted to extend this thermodynamic law to the realm of electromagnetic radiation. Suppose we have a container whose inner walls are perfectly reflective mirrors, reflecting one hundred percent of the light that strikes them without changing its frequency. While the number of gas molecules in a given container is large but finite, the number of possible vibration frequencies of light is infinite. Rayleigh and Jeans believed that the energy of red light injected into a perfectly reflective container should gradually shift into blue, violet, ultraviolet, X-rays, gamma rays, and so on without any limit. Thus a scientist shining a flashlight into such a container and closing it ought to be wary when opening it again, for they might expect a flash of harmful radiation as the shifted light escaped. Even worse, since each of the infinitely many possible frequencies was entitled to an equal share of energy, the container would have an infinite capacity for energy, in flagrant conflict with the conservation of energy. The drastic shift was of course never detected, though many believed it ought to have been, and this disconnect was dubbed the “Ultraviolet Catastrophe.”
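Both halves of the argument can be made concrete. The Python sketch below uses assumed, illustrative values (300 K, with helium and argon standing in for the light and heavy gases). It first shows equipartition giving both gases the same average kinetic energy, ³⁄₂kT, at very different speeds. It then applies the same “kT per mode” rule to radiation, using the Rayleigh-Jeans energy density in its standard textbook form, 8πν²kT/c³ per unit frequency: the total energy up to a cutoff frequency grows as the cube of the cutoff, so it has no finite limit.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # atomic mass unit, kg
C = 299_792_458.0         # speed of light, m/s


def mean_kinetic_energy(temperature):
    """Equipartition: 3 translational degrees of freedom, (1/2) k T each."""
    return 1.5 * K_B * temperature


def rms_speed(mass_kg, temperature):
    """Root-mean-square speed from (1/2) m <v^2> = (3/2) k T."""
    return math.sqrt(3 * K_B * temperature / mass_kg)


T = 300.0  # K, assumed room temperature
for name, mass_amu in [("helium", 4.0), ("argon", 40.0)]:
    m = mass_amu * AMU
    v = rms_speed(m, T)
    print(f"{name}: {v:.0f} m/s, mean KE {0.5 * m * v ** 2:.3e} J")  # same KE, different speeds


def rayleigh_jeans_energy(temperature, nu_max):
    """Radiant energy per unit volume (J/m^3) up to a cutoff frequency if every
    mode gets k T: the integral of 8 pi nu^2 k T / c^3 from 0 to nu_max."""
    return 8 * math.pi * K_B * temperature * nu_max ** 3 / (3 * C ** 3)


for nu_max in (1e14, 1e15, 1e16):  # each tenfold cutoff multiplies the energy by 1000
    print(f"cutoff {nu_max:.0e} Hz -> {rayleigh_jeans_energy(T, nu_max):.3e} J/m^3")
```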

No one could solve this conundrum until December 14, 1900, when Max Planck presented the hypothesis that light could only be absorbed or emitted in discrete bits he called “quanta,” each with an energy directly proportional to the corresponding frequency of light (E = hν, where h is now called Planck’s constant). The higher the frequency, the fewer the possible energy values below any given limit, meaning that the energy taken up by high-frequency vibrations remains finite in spite of the infinite number of possible frequencies. Planck openly considered his idea “a purely formal assumption” and used it for practical ends without genuinely believing it to be a true description of light.
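Planck’s step can be illustrated numerically. In the Python sketch below (temperature and frequencies are assumed for illustration), a single vibration mode is allowed only the energies 0, hν, 2hν, and so on, each weighted by the Boltzmann factor e^(-E/kT). Averaging gives hν/(e^(hν/kT) - 1) instead of the classical kT. At low frequencies the two nearly agree, but at high frequencies the quantized average collapses toward zero, which is what keeps the total energy finite.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def classical_mode_energy(temperature):
    """Equipartition: each vibration mode gets k*T, whatever its frequency."""
    return K_B * temperature


def planck_mode_energy(nu, temperature, n_levels=5000):
    """Average energy of a mode restricted to the levels 0, h*nu, 2*h*nu, ...,
    each weighted by the Boltzmann factor exp(-E / (k*T)).

    The sum is truncated at n_levels, which is ample for the frequencies used here.
    """
    x = H * nu / (K_B * temperature)  # level spacing in units of k*T
    weights = [math.exp(-n * x) for n in range(n_levels)]
    mean_n = sum(n * w for n, w in enumerate(weights)) / sum(weights)
    return mean_n * H * nu


T = 300.0  # K, assumed temperature
for nu in (1e11, 1e13, 1e15):  # roughly microwave, infrared, ultraviolet
    print(f"{nu:.0e} Hz: classical {classical_mode_energy(T):.3e} J, "
          f"quantized {planck_mode_energy(nu, T):.3e} J")
```

The explicit sum over levels is used instead of the closed form so that the role of the discrete energy steps stays visible; the two agree to rounding error.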

His intuition was justified, for we can certainly explain away the Ultraviolet Catastrophe without recourse to quanta. The “paradox” presented by Rayleigh and Jeans was based on the flawed premise that the number of molecules in the Equipartition Law could be equated with the number of possible light frequencies in its electromagnetic counterpart. The proper analogy would be between light frequency and molecular velocity, and between the number of molecules and the number of light waves. With this substitution, the supposed paradox disappears entirely. The maximum wave frequency is limited by the total energy allowed into the container. In such a perfect container, frequency can shift only through wave collision and combination, and thus the frequency limit must be that of a single light wave possessing all of the initial input energy. Of course, it is absurd to think that by chance alone all of the light waves would combine into one high-frequency wave, but this immensely improbable scenario provides a finite limit on the wave energy and resolves the Ultraviolet Catastrophe. Regardless, Planck had already opened Pandora’s box, and alternative explanations for Rayleigh and Jeans’s paradox would be hard pressed to do away with the notion of quanta, which would soon be further validated by the work of Albert Einstein.

In 1896, at the age of 17, Albert Einstein enrolled in the Zurich Polytechnic and trained for four years to become a professor of physics and mathematics. Upon graduating in 1900, he was unable to land a job as a professor, so he worked as a tutor for two years before reluctantly accepting a job at the Swiss patent office, a humble post which would add to the legend of his eventual success. In the patent office, his work focused largely on the transmission of electrical signals and the electromechanical synchronization of time, topics which would later appear in thought experiments in his papers on Special and General Relativity. In 1905, at the age of 26, Einstein published paradigm-shifting papers on Brownian motion, the photoelectric effect, mass-energy equivalence, and Special Relativity.

Einstein seized upon Planck’s idea of light quanta for his paper on the photoelectric effect, which assumes light to be particulate in nature. The photoelectric effect is the experimentally observed phenomenon in which light of sufficiently high frequency falling upon a metal plate causes electrons to be emitted from the plate. The study of this phenomenon had previously resulted in two simple and well-established laws:

I. For light of a given frequency but varying intensity the energy of emitted photoelectrons remains constant while their number increases in direct proportion to the intensity of light.

II. For varying frequency of light no photoelectrons are emitted until that frequency exceeds a certain limit which is different for different metals. Beyond that threshold frequency the energy of emitted photoelectrons increases linearly, being proportional to the difference between the frequency of the incident light and the critical frequency of the specific metal.
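Law II is what Einstein’s photoelectric equation expresses: K_max = hν - φ = h(ν - ν₀), where φ is the metal’s work function and ν₀ = φ/h is its critical frequency. The Python sketch below evaluates this relation for an assumed work function of 2.3 eV (an illustrative value, roughly that of sodium). Law I, the dependence of the number of electrons on intensity, is not modeled here.

```python
H_EV = 4.135667696e-15  # Planck constant in eV*s


def max_photoelectron_energy(frequency_hz, work_function_ev):
    """Einstein's relation K_max = h*nu - phi, in eV; None at or below the threshold."""
    energy = H_EV * frequency_hz - work_function_ev
    return energy if energy > 0 else None


def threshold_frequency(work_function_ev):
    """Critical frequency nu_0 = phi / h below which no electrons are emitted."""
    return work_function_ev / H_EV


PHI = 2.3  # assumed work function in eV (illustrative, roughly sodium)
print(f"threshold: {threshold_frequency(PHI):.3e} Hz")
for nu in (4.0e14, 6.0e14, 8.0e14, 1.0e15):
    print(f"{nu:.1e} Hz -> {max_photoelectron_energy(nu, PHI)}")  # None below threshold
```

Above the threshold the energy rises in a straight line whose slope is h, independent of the metal; only the intercept (the work function) changes from one metal to another.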

Einstein argued that these laws were incompatible with the prevailing wave theory of light but were explained neatly by a corpuscular, quantum theory. Under the wave theory, since electrons were ejected from the plate by the electric force of the light, it seemed intuitive that their energy should increase with the intensity of light, which was never observed. By presuming light to be composed of particulate “quanta,” the intensity could be considered simply a measure of the total number of light quanta, meaning that higher intensity leads to more collisions and more electron ejections but no additional ejection energy. Planck, however, still harbored serious doubts regarding the validity of his own theory, which he continued to regard as a strictly formal assumption. While Einstein’s explanation is insightful, it does not necessarily demand that light come in particulate form, for we can just as easily suppose that intensity translates to a higher number of individual waves rather than individual quanta, and we arrive at an equally suitable explanation. When Planck finally met Einstein, he tried to talk the latter out of his belief in quanta, telling him that the theory’s acceptance would set back humanity’s understanding of light “by centuries, into the age when Christiaan Huygens dared to fight against the mighty emission theory of Isaac Newton.” Nevertheless, Einstein eventually convinced Planck to accept the validity of quanta, and Planck’s support lent credibility and recognition to Einstein’s work.

A global philosophical shift was now underway, the magnitude of which cannot be overstated. Before Einstein’s rise to fame, the stage was set for the aether to become permanently accepted as the fabric of reality and purveyor of the fundamental forces. His paper on the photoelectric effect succeeded in killing the aether-based wave theory of light.

THANK YOU FOR READING!

Cover Photo: Image Source

Hi I am working on the same subjects, but have not written down much yet.

You may like http://www.thresholdmodel.com/

He shows with experiments that Einstein's idea of light-particles was wrong. That is because sometimes you can have 2 detections while there is only one "particle" transmitted. This works better with ultraviolet and can even be done with nuclear particles.

It would be nice to have some more verifications. But in mainstream papers there are indeed double detections. It is done away with as noise. According to the experimenter the noise increases with the frequency of the light or particles.

The threshold model assumes that there are no particles at all. The energy is spread over the area. The detectors start with a random initial level.

If a certain amount of energy is reached, the detector reaches the threshold. This means it gives off a signal that we can measure.

Let's say the initial state is:

0 1 2 3 4 5 6 7 8 9

State after receiving energy:

1 2 3 4 5 6 7 8 9 10

Detection of "particle" at X:

1 2 3 4 5 6 7 8 9 X

So there is a hidden state at the detector. This works with all experiments of quantum mechanics that I have seen. The experiments exclude a hidden variable in the particles, but not hidden variables in the detectors.

But this also means that Einstein's and Planck's assumption of particles was wrong to begin with. From the website I read that Planck had first thought of this threshold solution, but had omitted it, because he thought that all energy states started at zero.

I don't know if this theory always works; we still have to test that better. But it is the simplest solution for quantum mechanics, and it also gives a very simple way to calculate results. With Occam's Razor it wipes all other options off the table. With simulations it makes everything suddenly very simple.

Anyway, thanks for writing out the history.

Thank you for reading and for your insightful comment! It's always nice to find more evidence that supports my opinion while at the same time deepening my understanding of the subject. I'll check that link out now.

In high school I learned about Faraday's laws, but not enough to understand their full significance. Thanks for the valuable knowledge in your article.

Always surprising us and teaching us with your posts. I love reading you; thank you for teaching us and for entertaining us with your publications.

Amazing brother!!!

What figures of our contemporary history, @youdontsay
