Fuzzy, Evolutionary and Neurocomputing - Neural Networks (part 2)

Neurocomputing began to develop in the mid-20th century, when a series of discoveries in biology was combined with models of those discoveries suitable for computer processing.

As early as 1943, Warren McCulloch and Walter Pitts, in the paper "A Logical Calculus of the Ideas Immanent in Nervous Activity", defined a model of an artificial neuron (the so-called TLU perceptron) and showed that it can be used to calculate the logic functions AND, OR and NOT. Since any Boolean function can be built from these three functions, it follows that an arbitrary Boolean function can be obtained by building (that is, appropriately constructing) an artificial neural network from TLU neurons. It is worth emphasizing, however, that this solves the problem by construction, not by learning.
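To make the "by construction" point concrete, here is a minimal sketch of a threshold unit with hand-picked weights. The function names and the particular weights and thresholds are my own illustrative choices, not values from the original paper; many other choices give the same gates.

```python
def tlu(inputs, weights, threshold):
    """Threshold logic unit: outputs 1 when the weighted input sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

# Weights and thresholds below are chosen by hand (constructed, not learned).
def AND(a, b):
    return tlu([a, b], [1, 1], 2)

def OR(a, b):
    return tlu([a, b], [1, 1], 1)

def NOT(a):
    return tlu([a], [-1], 0)

# Other Boolean functions are then wired together from these gates, e.g. XOR.
def XOR(a, b):
    return AND(OR(a, b), NOT(AND(a, b)))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b), "XOR:", XOR(a, b))
```

Note that XOR needs a small network of units here; nothing in this sketch is learned from data.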

A few years later, the Canadian psychologist Donald Hebb published his findings about the workings and interaction of biological neurons in his 1949 book "The Organization of Behavior". Concretely, he observed that if two neurons often fire together, metabolic changes occur through which the efficiency of one neuron exciting the other increases over time.

With that knowledge, all the preconditions for defining a learning algorithm for the TLU perceptron were in place. And that is exactly what Frank Rosenblatt did in his 1957 report "The Perceptron: A Perceiving and Recognizing Automaton" and his 1962 book "Principles of Neurodynamics". He defined the learning algorithm of the TLU perceptron using Hebb's idea that learning means changing the bond strength between neurons.

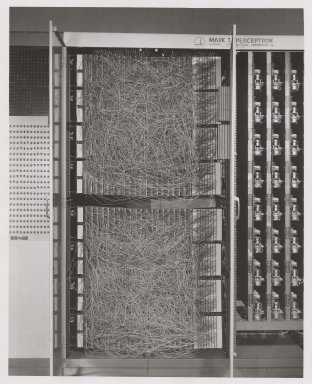

The Mark I Perceptron machine was the first implementation of the perceptron algorithm

A diagram showing a perceptron updating its linear boundary as more training examples are added
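The idea of learning by adjusting connection strengths can be sketched with the commonly taught form of the perceptron rule, in which each weight is nudged by learning rate x error x input. This is a simplified illustration, not a reproduction of Rosenblatt's original formulation; the learning rate `eta`, the epoch limit and the OR training set are assumptions chosen for the example.

```python
def predict(weights, x):
    # x[0] is a constant 1, so weights[0] plays the role of the threshold term.
    net = sum(w * xi for w, xi in zip(weights, x))
    return 1 if net >= 0 else 0

def train_perceptron(samples, eta=0.1, max_epochs=100):
    """Adjust the weights only when the unit misclassifies a sample."""
    weights = [0.0] * len(samples[0][0])
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, x)
            if delta != 0:
                errors += 1
                weights = [w + eta * delta * xi for w, xi in zip(weights, x)]
        if errors == 0:  # every sample classified correctly, stop early
            break
    return weights

# Training data for logical OR; the leading 1 in each input is the constant input.
samples = [([1, 0, 0], 0), ([1, 0, 1], 1), ([1, 1, 0], 1), ([1, 1, 1], 1)]
weights = train_perceptron(samples)
print([predict(weights, x) for x, _ in samples])
```

Unlike the constructed gates of McCulloch and Pitts, here the weights start at zero and are found from examples. This works for OR because it is linearly separable.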

Motivation

The development of artificial neural networks started as an attempt to explain intelligent behaviour in people and animals. One attempt to explain and achieve intelligent behaviour in machines is known as the symbolic approach.

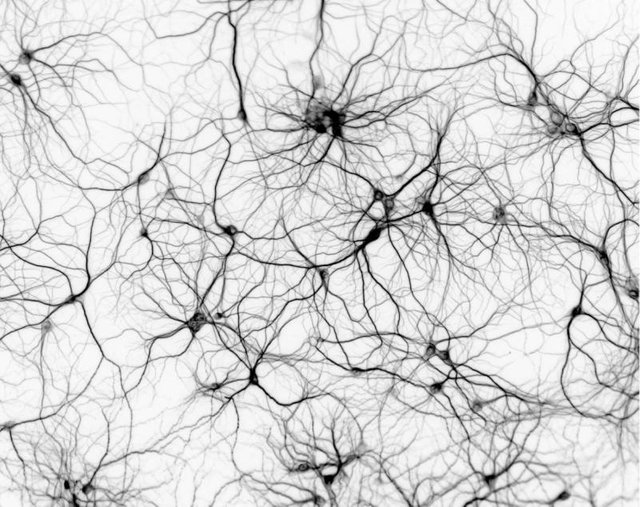

However, what we understand about how biological organisms function has not yet shown that symbolic processing of data and symbolic inference are what happens inside the brain, that is, the nervous system. Instead, it seems that intelligent behaviour emerges from a large number of simple processing elements (neurons) which process data in a massively parallel way and with great redundancy - which is the foundation of the approach known today as connectionism.

Research has shown that the human brain contains about 10^11 neurons joined by around 10^15 connections. This means that each neuron has, on average, about 10^4 connections (10^15 / 10^11), from which it can be concluded that data processing in the human brain is a massively parallel activity. A further argument for the parallel-processing thesis comes from how slowly neurons work: a neuron takes about 1 ms to fire. If we take into account that a human can carry out extremely complex data analysis in a very short time (for example, we can recognize our mother within about 0.1 s of seeing her), it is obvious that the processing cannot be sequential, because only about 100 sequential steps (0.1 s / 1 ms) fit into such a short interval.

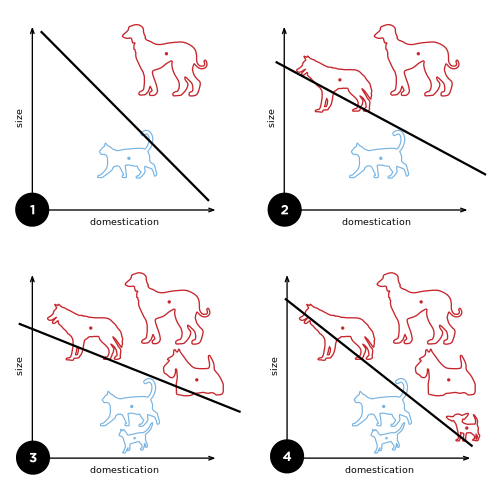

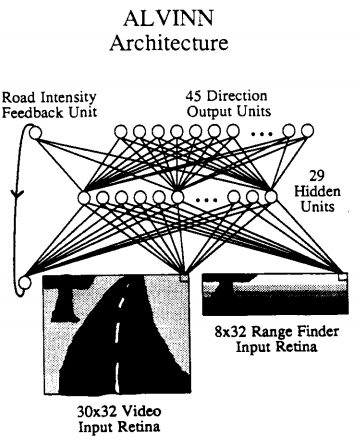

Since the field of artificial neural networks has been developing for the last 70 years, there is by now a series of successful applications. Neural networks have proved to be a successful model for function approximation, data classification, trend prediction and similar tasks. One worth mentioning is ALVINN, an experimental neural network developed at Carnegie Mellon University. It is an artificial neural network that learns how to drive a car by watching how a human does it. In the experiments carried out, the network successfully steered a vehicle along a 100 km section of road at a top speed of 110 km/h.

Given a range of good properties of neural networks, such as robustness to errors in data, resistance to noise, massively parallel data processing and the ability to learn, this paradigm remains a focus of research and application today.

Basic neuron model

We will start the introduction to artificial neural networks by looking at a single neuron.

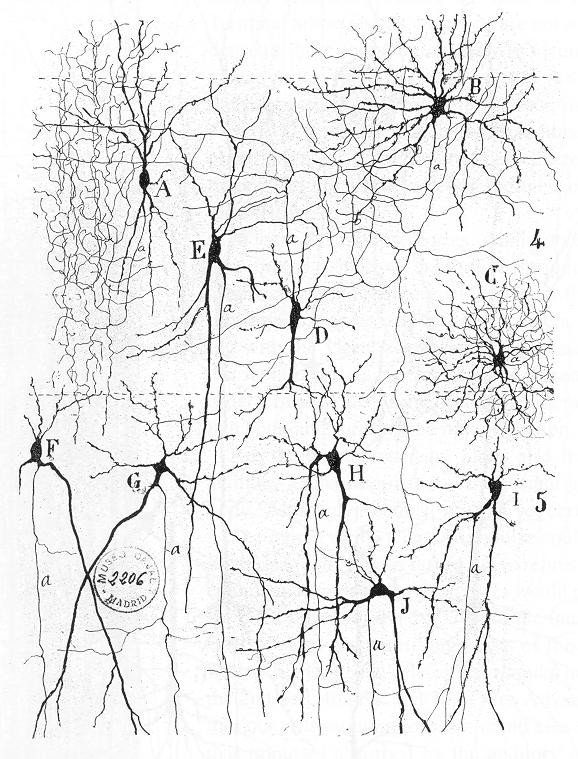

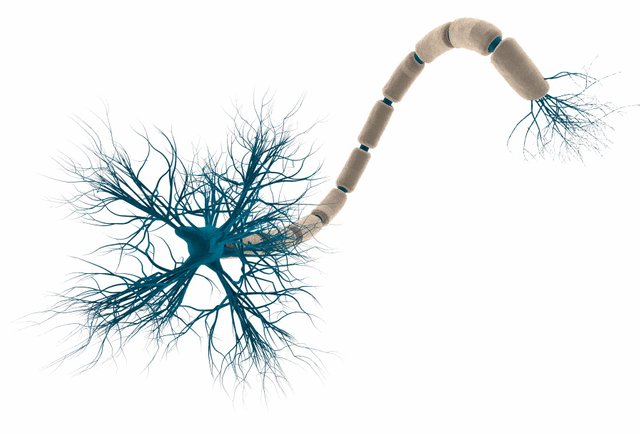

In simplified terms, a biological neuron consists of dendrites, a cell body and an axon. On average, every neuron is connected to 10^4 other neurons via its dendrites, through which it gathers electric impulses that then accumulate in its body. Once a certain amount of charge has accumulated (roughly defined by a threshold), the neuron fires - the accumulated charge is sent through its axon to other neurons, and the neuron thus discharges. In that sense, dendrites represent the inputs through which the neuron gathers information, the cell body processes it and generates the result, and the result is carried away through the axon - the neuron's output.

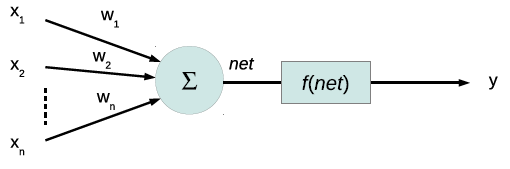

Based on this simplified description, a basic neuron model is defined. The model consists of inputs x1 to xn (dendrites), weights w1 to wn, which determine to what extent each input excites the neuron, a neuron body which calculates the total excitation (often denoted net), and a transfer function f(net) (axon) which processes the excitation and forwards the result to the neuron output.

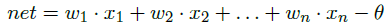

The total excitation net is calculated as:

$$net = w_1 x_1 + w_2 x_2 + \dots + w_n x_n - \theta$$

where θ represents the threshold for neuron firing. Furthermore, if we introduce an imaginary input x0 which is always 1, and a weight w0 = -θ which takes the place of the threshold, the expression can be rewritten as:

$$net = \sum_{i=0}^{n} w_i x_i = \mathbf{w}^T \mathbf{x}$$

where x is the (n+1)-dimensional vector of neuron inputs and w is the (n+1)-dimensional weight vector.
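As a quick check that the two forms agree, here is a small sketch. It assumes the usual convention that the threshold is subtracted from the weighted sum, so that the extra weight is w0 = -θ; the function names and the numbers are made up for illustration.

```python
def net_with_threshold(x, w, theta):
    """Weighted sum of the n real inputs minus the firing threshold."""
    return sum(wi * xi for wi, xi in zip(w, x)) - theta

def net_augmented(x, w, theta):
    """Same value, computed as one dot product over (n+1)-dimensional vectors."""
    x_aug = [1.0] + list(x)       # x0 = 1, the imaginary constant input
    w_aug = [-theta] + list(w)    # w0 = -theta (assumed sign convention)
    return sum(wi * xi for wi, xi in zip(w_aug, x_aug))

x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, 0.1]
theta = 0.2

print(net_with_threshold(x, w, theta))
print(net_augmented(x, w, theta))
```

The practical benefit of the second form is that the threshold becomes just another weight, so a learning algorithm can adjust it in the same way as all the others.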

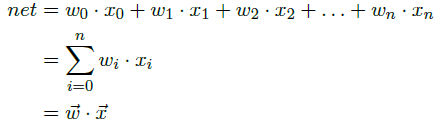

The transfer function models the behaviour of the axon. In practice, several typical transfer functions are used.

Transfer function a) is a step function. It was used by Warren McCulloch and Walter Pitts in 1943 when defining the TLU (Threshold Logic Unit) perceptron model. Based on that neuron model they showed how it can be used to calculate the basic Boolean functions AND, OR and NOT.

Transfer function b) is a linear function. Neurons with this kind of transfer function are often used in the output layers of neural networks. However, it is important to emphasize that complex neural networks cannot be built using only this type of neuron, because a composition of linear elements is itself just a linear function, so stacking them adds no expressive power.
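The limitation of purely linear neurons can be seen in a few lines. In this sketch two layers of linear neurons (without thresholds, to keep it short) are replaced by a single layer whose weight matrix is the product of the two; the matrices `W1` and `W2` and the input are arbitrary example values.

```python
def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a by matrix b (both as lists of rows)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # 2 inputs -> 3 linear hidden neurons
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.0]]    # 3 hidden -> 2 linear output neurons
x = [2.0, -1.0]

two_layers = matvec(W2, matvec(W1, x))   # pass through both layers in turn
one_layer = matvec(matmul(W2, W1), x)    # a single equivalent linear layer

print(two_layers, one_layer)
```

Both computations give the same output, so the hidden layer adds nothing that a single linear layer could not already do.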

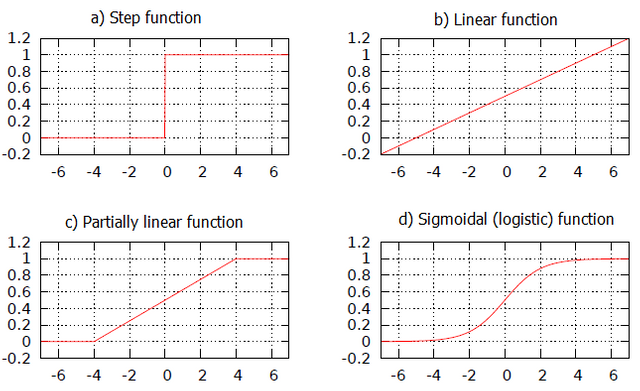

To solve this problem, a piecewise linear function can be used instead of a linear transfer function.

It is defined as follows:

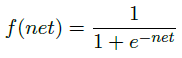

However, for all the transfer functions mentioned so far it still wasn't known how to define a learning algorithm for the more complex neural networks built from those elements. A solution, in the form of the Backpropagation algorithm, emerged only with the introduction of differentiable transfer functions, whose best-known representative is the sigmoid (or logistic) transfer function, defined by the following equation:

$$f(net) = \frac{1}{1 + e^{-net}}$$
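The transfer functions discussed in this section can be sketched in code as follows. The step and sigmoid functions are standard; the breakpoints `a` and `b` of the piecewise linear function and the convention that the step function fires at net >= 0 are my assumptions for the example and may differ from the figures.

```python
import math

def step(net):
    """Step function of the TLU: fires once the excitation is non-negative."""
    return 1.0 if net >= 0 else 0.0

def linear(net):
    """Linear (identity) transfer function."""
    return net

def piecewise_linear(net, a=-1.0, b=1.0):
    """Assumed form: 0 below a, 1 above b, a straight line in between."""
    if net <= a:
        return 0.0
    if net >= b:
        return 1.0
    return (net - a) / (b - a)

def sigmoid(net):
    """Sigmoid (logistic) function: smooth and differentiable everywhere."""
    return 1.0 / (1.0 + math.exp(-net))

def sigmoid_derivative(net):
    """The derivative can be written using the output itself: f'(net) = f(net) * (1 - f(net))."""
    y = sigmoid(net)
    return y * (1.0 - y)

for net in (-2.0, 0.0, 2.0):
    print(net, step(net), linear(net), piecewise_linear(net), sigmoid(net))
```

The step function has a zero derivative everywhere except at the jump, which gives a gradient-based method nothing to work with. The sigmoid has a simple, non-zero derivative, which is what Backpropagation relies on.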

Basic neural network model

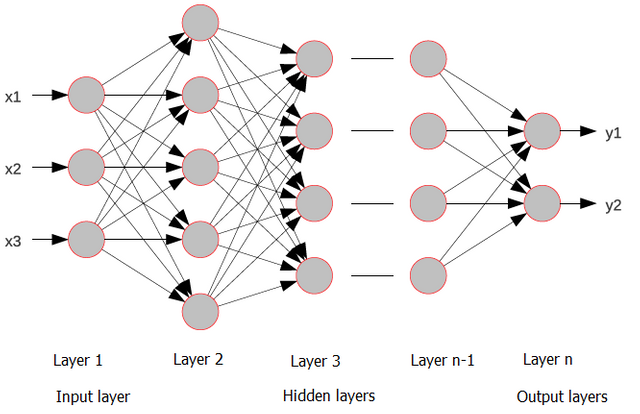

An isolated neuron, as we have seen, can only perform simple data processing. Therefore multiple neurons are connected into various structures known under the general name artificial neural networks.

On the left side of the network there are the input neurons. These are the neurons to which the information that needs to be processed is brought. Input neurons don't perform any kind of processing; they only forward the input to other neurons in the network. The interior of the network consists of neurons which can't be seen from outside and have no contact with either the input or the output of the network. Finally, on the far right there is the output layer of neurons, which delivers the processing result to the environment.
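The flow of data from left to right can be sketched as a simple forward pass. This is only an illustration: the network size (2 inputs, 3 hidden neurons, 1 output), the weight values and the use of the sigmoid in every neuron are assumptions of mine, not taken from the figure.

```python
import math

def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))

def layer_forward(inputs, weights):
    """One layer: each row holds one neuron's weights, row[0] being the weight of the constant input 1."""
    x = [1.0] + list(inputs)
    return [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in weights]

def network_forward(inputs, layers):
    signal = list(inputs)  # input neurons only forward their values
    for weights in layers:
        signal = layer_forward(signal, weights)
    return signal

# Example weights (arbitrary values): 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden = [[0.1, 0.5, -0.4], [-0.3, 0.8, 0.2], [0.0, -0.6, 0.9]]
output = [[0.2, 1.0, -1.0, 0.5]]

print(network_forward([1.0, 0.0], [hidden, output]))
```

The hidden layer values never leave `network_forward`, which mirrors the idea that the interior neurons are not visible from outside.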

The network presented in this example is a layered network. In general, however, a network doesn't have to look that way. In the general case any neuron may be connected to any other neuron, which opens the possibility of defining a very large number of different architectures of artificial neural networks. It should be kept in mind that the behaviour of a neural network, as well as how easily it can be trained, depends largely on its architecture.

For example, a layered neural network given a constant input will generate a stable output and in that sense corresponds to a functional mapping. But if cyclically connected neurons are allowed inside the network, the output of that network will be time-varying even with a fixed input, because the interior of the network is able to store a state. Learning algorithms for such networks are considerably more complex (and less efficient).
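The difference can be illustrated with a single neuron. In this minimal sketch the recurrent neuron feeds its own previous output back as an extra input; the weights `w_in` and `w_back`, the initial state of 0 and the sigmoid transfer function are all assumptions chosen to make the effect visible.

```python
import math

def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))

def run_feedforward(x, steps, w_in=1.0):
    """No cycles: the same input gives the same output at every time step."""
    return [sigmoid(w_in * x) for _ in range(steps)]

def run_recurrent(x, steps, w_in=1.0, w_back=-4.0):
    """One neuron with a feedback connection from its own previous output."""
    state = 0.0  # assumed initial state
    outputs = []
    for _ in range(steps):
        state = sigmoid(w_in * x + w_back * state)
        outputs.append(state)
    return outputs

print(run_feedforward(1.0, 5))
print(run_recurrent(1.0, 5))
```

With the input held fixed at 1.0, the feedforward neuron repeats one value while the recurrent neuron's output changes from step to step, because each output depends on the stored state.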

Types and classification of neural networks

Depending on their structure and purpose, a whole variety of different types of artificial neural networks has been developed to date, of which I'll mention only some.

Among networks that learn under supervision there are:

- Feedforward networks - Perceptron: Rosenblatt (1958), Minsky and Papert (1969/1988), Adaline: Widrow and Hoff (1960), Backpropagation: Rumelhart, Hinton and Williams (1986), RBF networks (Radial Basis Function)

- Recurrent networks - Boltzmann machine, Hopfield network

- Competitive networks - Counterpropagation network

- and many more

As far as unsupervised neural networks are concerned, I'll mention the Vector Quantisation network and self-organising networks such as the Kohonen self-organising network.

Summary

In machine learning and cognitive science, an artificial neural network (ANN) is a network inspired by biological neural networks which is used to estimate or approximate functions that can depend on a large number of inputs and are generally unknown. Artificial neural networks are typically specified using three things:

- architecture

- activity rule

- learning rule

Like other machine learning methods, neural networks have been used to solve a wide variety of tasks, such as computer vision and speech recognition, that are hard to solve using ordinary rule-based programming.

If you are interested in further explanations or discussion about this topic, feel free to leave a comment.

Thank you for reading.

Thanks a lot for these pieces of information. Do you have by any chance any good reference where I could find more information? Thanks in advance!

There is an important omission: the ReLU function, which has been used to overwhelming effect since the introduction of deep learning for image recognition.

ReLU (rectified linear unit) has the following shape _____/

Thank you, and everyone feel free to discuss. I'm implementing a neural network in an Android application right now.
