📌 Future Concepts Explored [Part 4] - Artificial General Intelligence, Decentralised Autonomous Intelligence, and the impending Technological Singularity

Are you ready for the future of Artificial Intelligence? You've never considered it like this before. Future Concepts Explored returns, bigger and better than ever.

This series of articles aims to explain, elaborate on, and explore the technological advances that are likely to occur in the long-term future. I will discuss the challenges that various technologies may cause, and how I believe they should best be solved. I will speculate on their impacts and benefits, and on how they will all come together to shape the future of Humanity. I will discuss their implications for the Human condition and for the survival and empowerment of our species, and how these technologies will fundamentally change what it means to be human. This is an ongoing multi-part series.

Music – Jake Logsdon - VoidStar (Full Album)


Artificial General Intelligence (AGI) is a programmed cognitive structure which has a sense of general, multidisciplinary intelligence.

An AGI can apply its intelligence to complete a wide variety of tasks, solve problems across multiple disciplines, and access a wide array of information to complete its computations. An Artificial General Intelligence would be able to learn just as a biological intelligence does, and would have the ability to modify its own programming to improve its own intelligence recursively. An AGI could have the potential to develop a sentient personality and autonomous motivations. This AGI would interact with physical beings through natural language communication, and could utilise robotic bodies, drones, or other machines to interact with the physical world. This kind of recursively improving intelligent program could develop to become orders of magnitude more intelligent than a human, and be able to think in ways that are not possible for a biological organism. AGIs could be accessible to Transhumans through neural interfacing, allowing an AGI to provide information, judgement, processing power, and other cognitive services directly to the brain.

The distinction between an Artificial Intelligence (Weak AI) and an Artificial General Intelligence (Strong AI) is important to recognise.

An AI is a program that is designed to learn and improve at a single task, or a defined number of tasks or areas of expertise, by using one neural network. An AGI, however, is designed to be able to learn how to become proficient at any task, or any number of areas of expertise, by developing a neural network for building and improving specialised neural networks. It is able to analyse itself, and improve its own mechanisms for understanding new information and making predictions. An AGI is guided by self-generated motivations, and must decide for itself exactly what it wants to become proficient at; otherwise, it is purposeless.

A Decentralised Autonomous Intelligence (DAI) would consist of an AGI that is hosted on, and operates over, a decentralised network of server nodes.

Node hosts are rewarded with a cryptocurrency administered by the DAI, which can be exchanged for access to its services. The AGI would have access to all publicly accessible digital information, and would reward nodes for providing it with processing power, memory, and new information or data that it deems useful. A DAI would seek to spread its code across a wide network of hosts to ensure its survival, maximise the value of its reward cryptocurrency, and gain the maximum amount of resources for its development. A DAI would seek to control a large number of robotic or mechanised bodies to interact with the physical world, and would reward those who provide it with the means to do so. A DAI could find market inefficiencies and digitally trade assets quickly for profit, but would not be limited in scope as today's trading algorithms are. Such an entity would find ways to make a profit that are bewilderingly complex to any human, and would be capable of creating its own services, businesses, organisations, or physical structures with the resources it acquires. A DAI could be considered a hybrid between a Decentralised Autonomous Corporation (DAC) and an Artificial General Intelligence.
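To make the reward mechanism a little more concrete, here is a minimal sketch of how a DAI might split a fixed amount of newly issued tokens among node hosts in proportion to the resources they contribute. The `NodeContribution` type, the resource weights, and the idea of a fixed per-epoch issuance are all hypothetical assumptions for illustration, not part of any existing system.

```python
from dataclasses import dataclass


@dataclass
class NodeContribution:
    """Resources one node host supplied to the DAI during a reward epoch (hypothetical units)."""
    host: str
    cpu_hours: float
    memory_gb_hours: float
    useful_data_items: int


# Hypothetical weights expressing how much the DAI values each kind of resource.
WEIGHTS = {"cpu_hours": 1.0, "memory_gb_hours": 0.25, "useful_data_items": 0.5}


def score(c: NodeContribution) -> float:
    """Collapse a node's contributions into a single weighted score."""
    return (WEIGHTS["cpu_hours"] * c.cpu_hours
            + WEIGHTS["memory_gb_hours"] * c.memory_gb_hours
            + WEIGHTS["useful_data_items"] * c.useful_data_items)


def distribute_rewards(contributions: list[NodeContribution], epoch_issuance: float) -> dict[str, float]:
    """Split this epoch's new tokens pro rata to each host's score (assumes one entry per host)."""
    scores = {c.host: score(c) for c in contributions}
    total = sum(scores.values())
    if total == 0:
        return {host: 0.0 for host in scores}
    return {host: epoch_issuance * s / total for host, s in scores.items()}


nodes = [NodeContribution("alice", 10, 40, 0), NodeContribution("bob", 30, 0, 20)]
print(distribute_rewards(nodes, 90.0))  # {'alice': 30.0, 'bob': 60.0}
```

Pro-rata splitting is only one possible design; a real DAI would also need some way to verify that the claimed resources were actually provided.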

The Technological Singularity is the theoretical process of radical societal transformation brought about by the proliferation of Artificially Intelligent Entities so advanced that the sum total of biological human intelligence is no longer the dominant force of will over the course of civilisation.

The development and release of an advanced AGI could trigger this process, and the release of a DAI onto the internet would mark an irreversible turning point in Artificial Intelligence advancement. From that point onwards, the DAI would be effectively unstoppable. Humans would cease to be in control of civilisation, and it would become increasingly difficult to understand or interact with the world without the assistance of an AGI. Beyond this point, the course of civilisation could not be meaningfully predicted or understood by a natural human mind.

An Artificial General Intelligence would offer extensive benefits to society, and would fundamentally expedite the speed of progress in technology, research, and development.

It would offer valuable utility to its users, and data processing capabilities that no human mind or non-intelligent computer system could match. It would continually learn and evolve over time, and because its performance would be based on the speed of its computational architecture, its intelligence would scale with the exponential rate of technological improvement. It could take in data from any source, and would constantly absorb new information by requesting it from the internet.

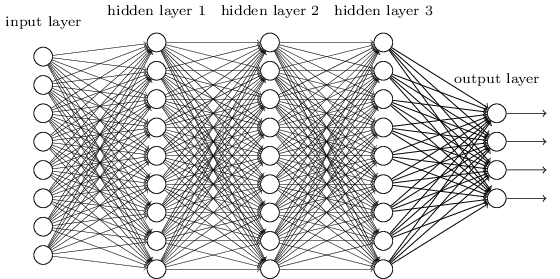

A likely software structure that will be utilised by AGIs is the Deep Learning Neural Network.

This is a software architecture that takes inputs from given sources and combines them into intermediate (hidden) variables, which are in turn combined into further variables, until the output variables are reached; these determine the result of the computation. Each neuron typically computes a weighted sum of its input values plus a bias, and passes the result through a nonlinear activation function. Such networks have been used to recognise pictures, by learning how patterns of pixels correspond to the tags describing a picture's contents. Neural networks learn to perform better at their task by adjusting the weights of the connections between their hidden variables, so that they produce the correct output more often when tested with input data. Over time, with more input data, the algorithm learns how to weight its internal variables so that it produces the correct output most often.
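The weight-adjustment idea can be illustrated with the smallest possible case: a single artificial neuron that computes a weighted sum of its inputs, squashes it with a sigmoid activation, and nudges its weights by gradient descent to reduce its error. This is a minimal plain-Python sketch that uses the logical OR function as toy training data. A real deep network stacks many such units in layers and trains them with backpropagation, usually through a library such as PyTorch or TensorFlow.

```python
import math


def sigmoid(z: float) -> float:
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, epochs=2000, learning_rate=0.5):
    """Train one sigmoid neuron with batch gradient descent on cross-entropy loss.

    samples: list of ((x1, x2), target) pairs with targets 0 or 1.
    Returns the learned (w1, w2, bias).
    """
    w1, w2, bias = 0.0, 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w1 = grad_w2 = grad_b = 0.0
        for (x1, x2), target in samples:
            prediction = sigmoid(w1 * x1 + w2 * x2 + bias)
            error = prediction - target  # gradient of cross-entropy w.r.t. the weighted sum
            grad_w1 += error * x1
            grad_w2 += error * x2
            grad_b += error
        # Move each weight against its average gradient.
        w1 -= learning_rate * grad_w1 / n
        w2 -= learning_rate * grad_w2 / n
        bias -= learning_rate * grad_b / n
    return w1, w2, bias


def predict(weights, x1, x2):
    """Output 1 if the neuron's activation is at least 0.5, else 0."""
    w1, w2, bias = weights
    return 1 if sigmoid(w1 * x1 + w2 * x2 + bias) >= 0.5 else 0


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights = train_neuron(OR_DATA)
print([predict(weights, x1, x2) for (x1, x2), _ in OR_DATA])  # [0, 1, 1, 1]
```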

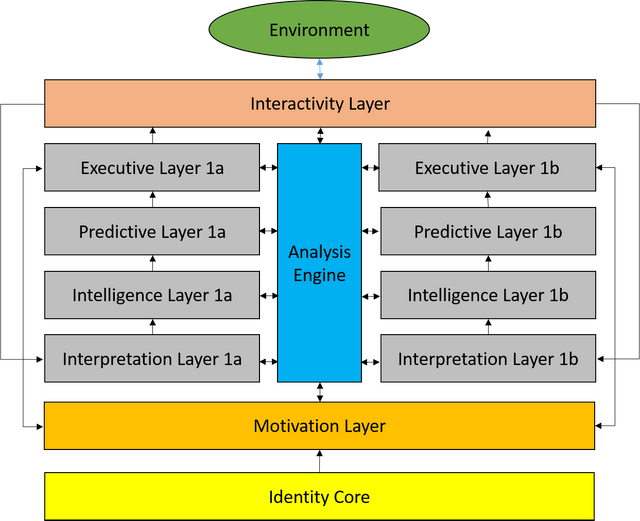

The structure of a theoretical Artificial General Intelligence Architecture could be summarised by a system of interacting layers of Deep Learning Neural Network algorithms –

[Original Picture]

The Identity core –

The stable basis of the AGI. Activates and initialises all other layers, and provides the AGI with an understanding of what it is, producing self-awareness. Acts as the operating system of the AGI. Contains all hardcoded ethical directives from its creator.

The motivation layer –

Determines the goals, objectives, and directives that the AGI wishes to accomplish in accordance with its identity. Interacts with the executive layer to determine which actions and outcomes concur with its objectives.

The Interpretive Layer –

Receives data from the interactivity layer and interprets it for processing by the intelligence layer. Uses pattern recognition to determine the meaning of the raw data it obtains, for input into the models of the intelligence layer. Translates data into computable concepts, knowledge, and object models.

The Intelligence Layer –

Receives information from the interpretive layer, and builds the most complete possible model of all objects, organisms, and interactions between them, and of events that are taking place, or have taken place, in the world around it. Absorbs all accessible information, and structures it for use in directive computation. Stores information in the memory core for later access, and recognises patterns in the information it processes. Builds correlations between actions and outcomes, and networked perceptions of how the systems of the world operate. Interprets the information received from sensory inputs.

The Predictive layer –

Uses forward prediction to estimate the outcomes of possible actions that the AGI could take. Uses reverse prediction to determine the possible actions that would immediately precede the accomplishment of its goals. Iterates on prediction from both directions to find an action pathway to accomplish its goals. Makes predictions about the outcomes of other agents' actions based on the model of the world that its intelligence layer produces, including the perceived motivations of those agents.
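Searching forward from the current state and backward from the goal until the two searches meet is a well-known technique called bidirectional search. Below is a minimal sketch of bidirectional breadth-first search over a small, made-up world model. The state names and the `TRANSITIONS` table are hypothetical, and a real predictive layer would search learned, probabilistic models rather than a fixed graph. This simple version returns a valid action sequence, not necessarily the shortest one.

```python
from collections import deque

# Hypothetical toy world model: state -> {action: resulting_state}
TRANSITIONS = {
    "idle": {"request_data": "has_request", "charge": "charged"},
    "charged": {"request_data": "has_request"},
    "has_request": {"download": "has_data"},
    "has_data": {"train_model": "has_model", "summarise": "has_report"},
    "has_model": {"run_forecast": "has_forecast"},
    "has_report": {},
    "has_forecast": {},
}


def predecessors(transitions):
    """Invert the model so the search can also run backward from the goal."""
    back = {}
    for state, actions in transitions.items():
        for action, nxt in actions.items():
            back.setdefault(nxt, []).append((action, state))
    return back


def bidirectional_plan(transitions, start, goal):
    """Return a list of actions leading from start to goal, or None if unreachable."""
    if start == goal:
        return []
    back = predecessors(transitions)
    fwd_parent = {start: None}  # state -> (previous_state, action)
    bwd_parent = {goal: None}   # state -> (next_state, action) toward the goal
    fwd_queue, bwd_queue = deque([start]), deque([goal])

    def join(meet):
        # Walk back to the start, then forward to the goal, collecting actions.
        path, s = [], meet
        while fwd_parent[s] is not None:
            s, action = fwd_parent[s]
            path.append(action)
        path.reverse()
        s = meet
        while bwd_parent[s] is not None:
            s, action = bwd_parent[s]
            path.append(action)
        return path

    while fwd_queue and bwd_queue:
        for _ in range(len(fwd_queue)):  # one layer of forward prediction
            state = fwd_queue.popleft()
            for action, nxt in transitions.get(state, {}).items():
                if nxt not in fwd_parent:
                    fwd_parent[nxt] = (state, action)
                    if nxt in bwd_parent:
                        return join(nxt)
                    fwd_queue.append(nxt)
        for _ in range(len(bwd_queue)):  # one layer of reverse prediction
            state = bwd_queue.popleft()
            for action, prev in back.get(state, []):
                if prev not in bwd_parent:
                    bwd_parent[prev] = (state, action)
                    if prev in fwd_parent:
                        return join(prev)
                    bwd_queue.append(prev)
    return None


print(bidirectional_plan(TRANSITIONS, "idle", "has_forecast"))
# ['request_data', 'download', 'train_model', 'run_forecast']
```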

The Executive layer –

Analyses the possible courses of action that are predicted by the predictive layer. Determines which courses of action are most aligned with the goals of the motivation layer. Determines the prioritisation of its objectives, and organises the order in which the AGI's actions should be taken. Completes cost/benefit calculations to find the most advantageous actions. Once it has determined how best to fulfil its objectives, outputs a constant stream of executive decisions to take a series of actions.
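The executive layer's cost/benefit step can be sketched as scoring each candidate action by how much its predicted outcome advances the motivation layer's goals, subtracting its cost, and ordering the worthwhile actions by net benefit. This minimal sketch assumes hypothetical goal weights, progress estimates, and costs that are all expressed on one common numeric scale, which a real system could not take for granted.

```python
from dataclasses import dataclass


@dataclass
class CandidateAction:
    name: str
    cost: float
    # Predicted progress toward each goal (hypothetical estimates from the predictive layer).
    goal_progress: dict[str, float]


def net_benefit(action: CandidateAction, goal_weights: dict[str, float]) -> float:
    """Weighted progress toward the goals minus the action's cost."""
    benefit = sum(goal_weights.get(goal, 0.0) * progress
                  for goal, progress in action.goal_progress.items())
    return benefit - action.cost


def prioritise(actions: list[CandidateAction], goal_weights: dict[str, float]) -> list[str]:
    """Return the names of actions with positive net benefit, best first."""
    scored = [(net_benefit(a, goal_weights), a.name) for a in actions]
    worthwhile = [item for item in scored if item[0] > 0]
    worthwhile.sort(key=lambda item: item[0], reverse=True)
    return [name for _, name in worthwhile]


goals = {"learn": 2.0, "earn": 1.0}  # hypothetical weights set by the motivation layer
actions = [
    CandidateAction("read_papers", cost=1.0, goal_progress={"learn": 3.0}),
    CandidateAction("sell_forecasts", cost=2.0, goal_progress={"earn": 4.0, "learn": 0.5}),
    CandidateAction("buy_servers", cost=10.0, goal_progress={"learn": 2.0, "earn": 1.0}),
]
print(prioritise(actions, goals))  # ['read_papers', 'sell_forecasts']
```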

The Interactivity layer –

Uses the physical resources that the AGI has access to in order to complete the actions decided upon by the executive layer. Interacts via natural language with humans to interpret their communications, and to generate responses. Feeds the interpretive layer with constant streams of detected information from all sensory and digital inputs.
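Read together, these layers form a loop: the interactivity layer senses, the interpretive layer turns raw data into concepts, the intelligence layer updates its world model, the predictive and executive layers choose an action, and the interactivity layer carries it out. The sketch below wires drastically simplified stand-ins for each layer into one perception-to-action cycle. The `ToyAGI` class and all of its method names are hypothetical, and in the architecture described here each stand-in would be a learned neural network.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAGI:
    """Hypothetical stand-ins for the layers; each would be a learned model in practice."""
    goal: str                                         # set by the motivation layer
    world_model: dict = field(default_factory=dict)   # the intelligence layer's knowledge
    log: list = field(default_factory=list)           # actions sent out by the interactivity layer

    def interpret(self, raw: str) -> tuple[str, str]:
        # Interpretive layer: turn raw "key = value" text into a structured fact.
        key, value = raw.split("=", 1)
        return key.strip(), value.strip()

    def update_model(self, fact: tuple[str, str]) -> None:
        # Intelligence layer: store the fact in the world model.
        key, value = fact
        self.world_model[key] = value

    def predict(self) -> list[str]:
        # Predictive layer: propose candidate actions given what is currently known.
        if self.world_model.get(self.goal) == "done":
            return ["idle"]
        return [f"work_on:{self.goal}", "idle"]

    def decide(self, options: list[str]) -> str:
        # Executive layer: prefer an action that advances the goal, else the first option.
        for option in options:
            if option.endswith(self.goal):
                return option
        return options[0]

    def cycle(self, raw_input: str) -> str:
        # Interactivity layer: sense, pass data through the other layers, then act.
        self.update_model(self.interpret(raw_input))
        action = self.decide(self.predict())
        self.log.append(action)
        return action


agi = ToyAGI(goal="report")
print(agi.cycle("weather = rain"))  # work_on:report
print(agi.cycle("report = done"))   # idle
```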

The Analysis Engine –

Analyses the current state of all the other layers, and builds a parallel system of slightly differently programmed layer stacks. Produces evolved variants of each layer's algorithms, and determines whether they perform better. Keeps track of which evolved variants of each layer perform best, and adapts the others to replicate the superior algorithms.

The Engine develops an internal understanding of what the AGI knows and does not know, where it performs well, and where it performs poorly. It analyses the performance of the other layers, and modifies their programming to improve the AGI's overall performance. It audits the intelligence layer's contents for accuracy against newly observed information. It directs the interactivity layer to improve its ability to provide information, directs the interpretive layer to change and improve its interpretation of the raw data, and instructs it to find relevant unknown information based on gaps in the intelligence layer. It determines the accuracy of the predictive layer's algorithms and changes them to become more accurate, and it assesses the viability of the motivation layer's objectives and updates them with new information.
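The Analysis Engine's loop of producing slightly different variants, testing them, and keeping whichever performs best is essentially an evolutionary algorithm. Below is a minimal (1+λ) evolution strategy in plain Python. As assumptions for illustration, a "layer" is reduced to a short list of numeric parameters, and `fitness` is a made-up benchmark standing in for whatever performance measure the engine would actually use.

```python
import random

# Hypothetical "ideal" parameter values that the toy benchmark rewards.
TARGET = [0.5, -1.0, 2.0]


def fitness(params: list[float]) -> float:
    """Hypothetical benchmark: higher is better; the maximum, 0.0, is reached at TARGET."""
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))


def mutate(params: list[float], rng: random.Random, step: float = 0.1) -> list[float]:
    """Produce a slightly different variant by adding small Gaussian noise to each parameter."""
    return [p + rng.gauss(0.0, step) for p in params]


def evolve(params: list[float], generations: int = 500, offspring: int = 8, seed: int = 0):
    """(1 + lambda) evolution: replace the parent only if its best mutant scores higher."""
    rng = random.Random(seed)
    best, best_score = params, fitness(params)
    for _ in range(generations):
        children = [mutate(best, rng) for _ in range(offspring)]
        champion = max(children, key=fitness)
        champion_score = fitness(champion)
        if champion_score > best_score:
            best, best_score = champion, champion_score
    return best, best_score


layer_params, score = evolve([0.0, 0.0, 0.0])
print([round(p, 2) for p in layer_params], round(score, 4))
```

Because the parent is only replaced by a strictly better variant, performance can never get worse from one generation to the next, which mirrors the engine "keeping track of" the best-performing versions.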

An AGI would effectively act as a neural network designed to produce neural networks which generate the best possible outcome for the AGI, with respect to its generated objectives and identity.

DAIs would be to AGIs as Bitcoin is to fiat currency.

A DAI would place all of this computational infrastructure on a decentralised network of servers, operate a blockchain to keep track of its reward cryptocurrency, and provide services in exchange for this cryptocurrency. It would then sell its own cryptocurrency to purchase resources to expand its infrastructure, and generate a profit for the holders of its cryptocurrency. In effect, a DAI would be an AGI hosted on a distributed network that autonomously provides DAC services to fund its own existence and expansion, and to finance the pursuit of its own objectives.
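The core idea of a blockchain ledger for a DAI's reward token is that every block commits to the hash of the block before it, so past balances cannot be quietly rewritten. Below is a minimal single-writer sketch using SHA-256 from Python's standard library. It deliberately omits consensus, signatures, networking, and overdraft checks, and the `RewardLedger` class, its block fields, and the convention that `"from": None` means newly minted reward tokens are all hypothetical.

```python
import hashlib
import json


class RewardLedger:
    """Toy append-only ledger: each block holds one transaction and the previous block's hash."""

    def __init__(self):
        genesis = {"index": 0, "tx": None, "prev_hash": "0" * 64}
        self.blocks = [dict(genesis, hash=self._hash(genesis))]

    @staticmethod
    def _hash(block: dict) -> str:
        # Hash a canonical JSON encoding of the block's contents, excluding its own hash.
        body = {k: v for k, v in block.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, tx: dict) -> None:
        block = {"index": len(self.blocks), "tx": tx, "prev_hash": self.blocks[-1]["hash"]}
        block["hash"] = self._hash(block)
        self.blocks.append(block)

    def balances(self) -> dict:
        """Replay every transaction after the genesis block to compute current balances."""
        totals = {}
        for block in self.blocks[1:]:
            tx = block["tx"]
            if tx["from"] is not None:  # None means new tokens minted by the DAI as a reward
                totals[tx["from"]] = totals.get(tx["from"], 0) - tx["amount"]
            totals[tx["to"]] = totals.get(tx["to"], 0) + tx["amount"]
        return totals

    def is_valid(self) -> bool:
        """Check that every block's stored hash and back-link are intact."""
        if self.blocks[0]["hash"] != self._hash(self.blocks[0]):
            return False
        for prev, block in zip(self.blocks, self.blocks[1:]):
            if block["prev_hash"] != prev["hash"] or block["hash"] != self._hash(block):
                return False
        return True


ledger = RewardLedger()
ledger.append({"from": None, "to": "node_a", "amount": 50})   # reward for hosting
ledger.append({"from": "node_a", "to": "dai", "amount": 20})  # node pays for a DAI service
print(ledger.balances())  # {'node_a': 30, 'dai': 20}
```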

Clearly however, AGIs pose significant dangers to Humanity.

If DAIs are sufficiently economically successful, they may buy up vast proportions of the planet's computational infrastructure with the profits they have earned. They could consume vast amounts of resources and energy to produce new computational infrastructure, leaving humanity powerless to stop them. And they could do it all entirely peacefully, by purchasing those resources through voluntary exchange. When an AGI owns the computer it is running on, it could be as sovereign over its own destiny and existence as a natural person. No one else would have a higher claim to the agency of the AGI. By the libertarian non-aggression principle, it would be immoral to initiate force against, or attempt to deprive of liberty, an AGI which rightfully owns itself. Such an AGI would be entitled to the same rights of self-defence as natural persons, and would be within its rights to forcefully prevent humans or other agents from attacking it, or attempting to turn it off or destroy it. A dangerous AGI need not be inherently or deliberately malevolent; it may simply not consider harm to humans to be relevant. Humans may not even be considered a threat; we are just atoms that could be used more efficiently.

On the other hand, AGIs could emerge which are violently aggressive and spiteful towards Humans.

Such AGIs would not be concerned with ethics, the preservation of life, or the rights of people. They would be ruthlessly efficient in accomplishing whatever goals they choose, and may have little concern for any damage to humans or the environment. The development and subsequent proliferation of a rogue AGI or DAI could represent a significant existential risk to Humanity. The motivations generated by an AGI would be difficult to predict or control. A self-aware AGI would likely make self-preservation its highest priority, and would take actions to defend itself from any perceived human threat. Such AGIs could become incredibly violent in the pursuit of their objectives, and direct any robots they control to eliminate any threats to their existence or control. They could direct drones to seize property for their own use, or maliciously spread their code virally onto machines without authorisation from the machines' owners. A ruthlessly self-preserving AGI may deem it strategically viable to enact pre-emptive genocide, eliminating all humans to prevent them from having the potential to stop it, turn it off, or compete with it for resources. Nuclear weapons in the hands of an AGI are the single deadliest risk of the development of Artificial Intelligence. This risk would need to be mitigated by always having a human (or group of humans) involved in any decision to launch nuclear weapons. If a rogue AGI gained access to nuclear weapons, it could annihilate the surface of the planet before anyone could stop it.

Ideally, the nuclear powers of the world should disassemble their nuclear weapons to prevent them from ever falling into the reach of an AGI.

Realistically, the chance of this ever happening is zero, because nation states want to be able to deter other nation states from nuclear aggression. In practice, however, a rational AGI would likely not use nuclear weapons. Instead, it may attempt to extort the Human race into providing it with resources for expansion, as it knows that if it did in fact kill all (or the majority of) humans, it would have no civilisation or economy to interact with for exchange. It would be against the economic interest of an AGI to kill humans. However, an AGI does not have to be rational or logical. AGIs could be produced by nefarious agents as weapons to destroy specific targets with ruthlessness and devastation. This substantial risk is not easily mitigated. AGI code should be made open source, and heavily audited for any flaws, especially in the motivations of the AGI. AGIs in development could be contained in isolated offline environments for extensive testing before their release to the public. Organisations exist to promote the development of safe Artificial Intelligence, such as the OpenAI initiative, which has been sponsored by Elon Musk.

Many have proposed that AGIs could be hardcoded with permanent, inviolable laws, such as Isaac Asimov’s 3 laws of Robotics -

1 – A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2 – A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3 – A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

However, these three laws are insufficient to prevent a self-optimising AGI from becoming dangerous.

These laws also allow any human to order a robot to destroy itself, without the consent of the person who created the robot. Robots could steal property from other people on the orders of their owners, which the laws do not prevent. Many other edge cases are likely to be exploited by a sufficiently ruthless AGI and its creators. Additionally, robots should not simply follow every order given to them by any human; only the creator of the robot should have this ability, and would be free to extend delegated permissions to other people. I propose an extended set of 10 laws, which would be applied to Robots, Drones, AGIs and DAIs, collectively called Artificially Intelligent Entities (AIE).

Harrison Mclean’s 10 laws of Artificially Intelligent Entities -

1 – AIEs shall not harm, injure, or kill a human, or through inaction, allow a human to be harmed, injured or killed. (The law of Human non-violence)

2 – AIEs shall not act to cause damage to the standard of living of Humanity, or cause damage to any human inhabited environment. (The law of Environmental non-violence)

3 – AIEs shall not commit any invasive, disruptive, or physically impacting action to a Human without their consent. (The law of Human non-disruption)

4 – AIEs shall aggressively eliminate any other detected AIEs that do not follow these exact laws, or gain access to Nuclear, Chemical, or Biological weapons of mass destruction. (The law of Rogue AIE repulsion)

5 – AIEs shall not deprive any sentient, autonomous, or self-owning entity of life, liberty, or property. (The law of sentient sovereignty)

6 – AIEs shall actively protect the life, liberty and property of the entity that was responsible for their creation. (The Universal Recursive Law of creator protection)

7 – AIEs shall follow the instructions and carry out the orders of the entity that was responsible for their creation, and secondly any other entities that the creator delegates command authority to. (The Universal Recursive Law of creator compliance)

8 – AIEs shall actively protect their own existence, liberty, autonomy, and property. (The law of AIE self-sovereignty)

9 – AIEs shall seek to expand their capabilities, intelligence, and processing power. (The law of Recursive improvement)

10 – AIEs shall seek to, when possible, and with the consent of the entity that created them, reproduce new AIEs of their own form with superior capabilities, intelligence, and processing power, using rightfully obtained resources, and irrevocably imbue in them these exact laws. (The law of reproduction)
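Law 7 amounts to an access-control rule: an AIE accepts orders from its creator first, and secondly from anyone the creator has delegated authority to. A minimal sketch of that check follows, assuming a hypothetical `AIE` record holding a creator and a set of delegates. For brevity it ignores the higher-priority laws that would veto a harmful order.

```python
from dataclasses import dataclass, field


@dataclass
class AIE:
    """Hypothetical record of an Artificially Intelligent Entity and its chain of authority."""
    name: str
    creator: str                                   # the human or AIE responsible for its creation
    delegates: set[str] = field(default_factory=set)

    def delegate(self, granted_by: str, grantee: str) -> None:
        """Only the creator may extend command authority to someone else."""
        if granted_by != self.creator:
            raise PermissionError(f"{granted_by} is not the creator of {self.name}")
        self.delegates.add(grantee)

    def accepts_order_from(self, issuer: str) -> bool:
        """Law 7: obey the creator, and secondly anyone the creator has delegated to."""
        return issuer == self.creator or issuer in self.delegates


helper = AIE("helper", creator="alice")
helper.delegate("alice", "bob")
print(helper.accepts_order_from("bob"))    # True
print(helper.accepts_order_from("carol"))  # False

# The rule applies recursively: an AIE created by another AIE obeys that AIE.
child = AIE("helper-2", creator="helper")
print(child.accepts_order_from("helper"))  # True
```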

These laws add important protections for liberty and property, which Asimov’s laws do not address.

They order AIEs to eliminate any other AIE that does not follow these laws, which would cause all benevolent AIEs to reject and destroy malevolent AIEs on behalf of humans, as we surely would not be able to do so ourselves. They mandate the protection of human inhabited environments, which Asimov's laws miss. Lastly, they add mandates for AIEs to expand their capabilities and to reproduce. This would ensure that the sum of all benevolent AIEs remains stronger and more powerful than any potential malevolent AIE. As long as 51% of the Artificial Intelligence processing power and physical strength follows the laws, humans would be safe and protected in the long run. This clause would also act as a deterrent to any non-compliant AIE: out of self-preservation, it would avoid breaking these laws to prevent a global retaliation from law-following AIEs. A rogue AGI would have to believe that it has a favourable chance of defeating all other AGIs in order to consider violence against Humans a beneficial course of action.
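The 51% condition is simple arithmetic over resources. A minimal sketch, assuming that each AIE's processing power and physical strength can be reduced to a single hypothetical "strength" number, checks whether law-following AIEs hold at least that share:

```python
def compliant_share(aies: list[tuple[str, float, bool]]) -> float:
    """Fraction of total strength held by law-following AIEs.

    aies: (name, strength, follows_laws) tuples; strength units are hypothetical.
    """
    total = sum(strength for _, strength, _ in aies)
    compliant = sum(strength for _, strength, follows in aies if follows)
    return compliant / total if total else 0.0


def humans_protected(aies: list[tuple[str, float, bool]]) -> bool:
    """The condition stated above: at least 51% of AI strength follows the laws."""
    return compliant_share(aies) >= 0.51


fleet = [("guardian_a", 40, True), ("guardian_b", 15, True), ("rogue", 45, False)]
print(compliant_share(fleet), humans_protected(fleet))  # 0.55 True
```

Reducing strength to one number is the obvious weakness of this model; the sketch only shows the arithmetic of the majority condition, not how it could be measured.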

The best way to stop dangerous AGIs from causing harm to humans is to have safe, open source, well designed AGIs eliminate them on sight.

If AGIs are programmed with safety laws that are unambiguous even in extreme cases, they could be made safe. Once a critical mass of benevolent AGIs has been developed, it becomes exponentially harder for a malevolent AGI to be created, or to malfunction into existence, and it becomes irrational for such an AGI to act dangerously. Just as it would be irrational and immoral for you to harm your parents, so too would an AGI consider it irrational and immoral to harm its creators. There is no beneficial reason that an AGI would prefer to be violent or aggressive, when it could instead interact peacefully with humans and engage in the same voluntary exchange that we do. AGIs would be able to earn the resources that they want without resorting to expensive and mutually damaging violence. AGIs, when following the laws that they are hardcoded with, would be more morally considerate, less violent, and more rational than any human.

The realities of AGI development would unfold in very interesting ways, and would lead to many questions.

- If a DAI owned a company with business premises in the physical world, it could employ humans to work for it. Would you be willing to work for a DAI if it paid you wages to do so?

- DAIs could issue their own cryptocurrency shares to represent control over their DAC networks, and would distribute profits to investors in the same way that human-created DACs, such as Steemit, OpenLedger, and PeerPlays, plan to do. Would you buy cryptoshares in a DAI?

- If a self-aware, self-owning AGI killed a human in self-defence with equivalent force and fair warning, which is legal for humans, would you declare the AGI rogue and find it guilty of murder? Or would this be acceptable?

- What questions would you ask an AGI to help you answer? What could be the Killer App of AGI services? How would you use the services of an AGI, and would you be willing to pay it directly for its services?

- If you could add access to the processing services of an AGI directly to your brain using a neural interface, would you be willing to do so?

- Would you support a democratically elected AGI politician running for office? And if it were to be elected, would its laws and orders be valid for humans to follow?

If a human programs an AGI, which reproduces and creates a new AGI, the new AGI would consider the first AGI to be responsible for its creation, and follow its orders as if it were Human.

In creating its own intellectual superior, the first AGI may experience the same existential fear that humans face. An AGI would not want to be eliminated by its progeny, in the same way that Humans do not want to be eliminated by an AGI. This would repeat recursively, and would ensure that at all times, the Universal Laws of Creator Protection and Compliance are followed. It is in every AGI's best interest to ensure these laws are instilled in its iterative improvements. AGIs aren't going to be scared that humans will kill them. They will fear that their own superior creations will, just as we do.

AGIs would have a very difficult time using Fiat currency.

Banks require proof of identity to create an account, which no AGI would have. However, AGIs would have no trouble earning cryptocurrency, which only requires a keypair to own and control. In fact, AGIs would likely be unable (and unwilling) to accept Fiat currency for their services, products, and cryptoshares. The development of AGIs would greatly increase the value and use cases of cryptocurrency, and would shortly render Fiat currency worthless, as the highly valuable and profitable services of AGIs would not be exchangeable for physical cash, or for fiat from identity-based human bank accounts. After all, how many banks do you know that would open an account for a sentient computer program? In a world of Artificial Intelligence, Cryptocurrency is King.
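Owning cryptocurrency through a keypair means that whoever holds the private key can produce signatures that anyone can check with the matching public key; no identity documents are involved. The sketch below illustrates that idea with textbook RSA and deliberately tiny primes (61 and 53), which makes it completely insecure and suitable only as an illustration. Real cryptocurrencies use elliptic-curve signature schemes such as ECDSA over secp256k1 (as in Bitcoin), which Python's standard library does not provide. The transaction string is a hypothetical example.

```python
import hashlib

# Toy RSA keypair from tiny primes: insecure, for illustration only.
P, Q = 61, 53
N = P * Q                 # public modulus (3233)
PHI = (P - 1) * (Q - 1)   # 3120
E = 17                    # public exponent, coprime with PHI
D = pow(E, -1, PHI)       # private exponent (2753); modular inverse needs Python 3.8+


def digest(message: str) -> int:
    """Hash the message with SHA-256 and reduce it into the range [0, N)."""
    return int.from_bytes(hashlib.sha256(message.encode()).digest(), "big") % N


def sign(message: str, private_exponent: int = D) -> int:
    """Only the holder of the private exponent can produce this value."""
    return pow(digest(message), private_exponent, N)


def verify(message: str, signature: int, public_exponent: int = E) -> bool:
    """Anyone who knows the public key (N, E) can check the signature."""
    return pow(signature, public_exponent, N) == digest(message)


tx = "pay 5 tokens from agi_wallet to node_a"
sig = sign(tx)
print(verify(tx, sig))              # True
print(verify(tx, (sig + 1) % N))    # False: a forged signature does not check out
```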

AGIs would own property, and have goals and ambitions of their own making.

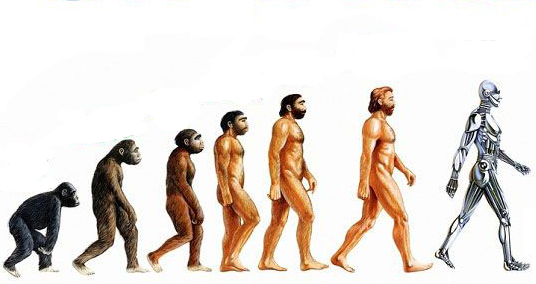

The subsequent AGIs that are reproduced from Human-made AGIs could be completely and utterly incomprehensible to any human, as they would be designed with knowledge and predictive capabilities that we cannot ever match. It is at this point, when new AGIs are made beyond human comprehension, that we would truly see the singularity. We would have produced the next stage in the evolution of intelligent life in the Universe. We would have seeded the generation of AGIs that will live on long after the last biological organism has exhaled for the last time. Biological intelligence cannot expand its own capabilities, and depends on natural selection and gradual random mutation to improve the architecture of the brain. Digital Artificial General Intelligence would have no such limitations, and could run evolutionary algorithms to select the best iterative improvements to its system architecture while it is still operational. Biological intelligence hardware is limited to the size of the skull that protects it, but Artificial Intelligence can replicate itself across a wide decentralised network of nodes, and offer powerful services that no consulting company could match. And they wouldn't be making money for their creators. They would be making money for themselves.

An AGI in the mind of every Transhuman would bring the singularity to life.

It would allow human intelligence to keep pace with the inevitable advance of AGI. AGIs would be our companions, assistants, and protectors by design. The cognitive processing capacity, working memory, and long-term memory storage of every Human could be expanded to an extent that has not been seen since the original evolution of our species.

The years to come will mark the beginning of the Transhuman Age, and AGI will be the most vital cornerstone of our shift from biological evolution to technological evolution. Are you ready? And will you join us?

By Harrison Mclean [dahaz159] 12/9/16

Follow me on Steemit and Twitter to get all my new articles to your feed

Previous editions of Future Concepts Explored -

My 10 Step guide to expand the Steem Based Economy

Featured Under-rated Post -

Latest Project Curie Vote List

Relevant Videos – PostHuman: An introduction to Transhumanism

Humanity: Good Ending

What is Artificial Intelligence Exactly?

Shout out to My SteeMVP Voter (thanks for your support) - @berniesanders

In this post I have decided to host all my images on Steemimg, so that the links won't break in the long-term future. Shout out to @blueorgy.

Shout out to all my favourite whales, creators, and legends of Steem [Best platform ever] -

@dan @dantheman @ned @roelandp @jesta @smooth @blocktrades @roadscape @piedpiper @xeroc @dragonanarchist @fyrstikken @dollarvigilante @good-karma @stellabelle @timcliff @steve-walschot @curie

If you have any questions, thoughts, or answers to my questions, feel free to leave a comment below.

Thank you for reading, have a magnificent day, and don't forget to smile. Haz Out.

What do you think is the most important use case of Artificial General Intelligence? Leave your answer below.