Waste in Research Science — Who’s Responsible, and How to Fix It

An overview of the systemic flaws in academic research

Hi, my name is Patrick and I’m addicted to science.

In 2012, I graduated from a liberal arts school and joined a PhD program in molecular biology. I was so excited to start that I spent the summer sleeping on the floor of my brother’s unairconditioned studio apartment so I could get my feet (and forehead) wet volunteering in a lab.

My brother looked at me like I was crazy, but I didn’t care. To me, there’s something magical about molecular biology techniques.

Ever heard of optogenetics? Scientists are able to genetically engineer neurons to express light-sensitive ion channels. This allows them to selectively activate cells with extreme temporal and spatial resolution by simply shining a blue light.

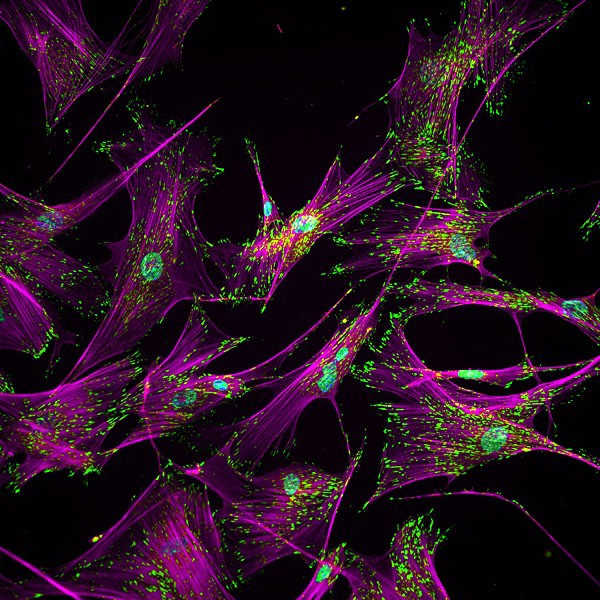

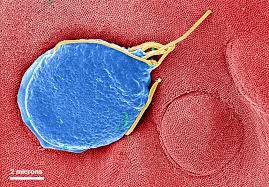

What about immunofluorescence? Fluorophores that glow when exposed to a predetermined wavelength of light can be covalently bonded to an antibody that targets a specific protein. This allows scientists to visually map the location of the target protein within a cell.

FRET microscopy is even more amazing. I won’t go into the details here, but trust me, it’s worth checking out.

Figure 1. Immunofluorescent microscopy image of lung fibroblasts stained for the proteins vinculin (green) and filamentous actin (purple). Adapted from Singh et al., 2013.

I was lucky to be exposed to some incredible science and scientists, but I decided to leave my program after the first year because it became clear to me that a successful career in academia required a lot more than a passion for research. Even the most successful PIs I worked with, who had resumes full of prestigious publications, were anxious about the likelihood of receiving their next grant.

The economic landscape of academic science is unforgiving and the competition for funding is intense

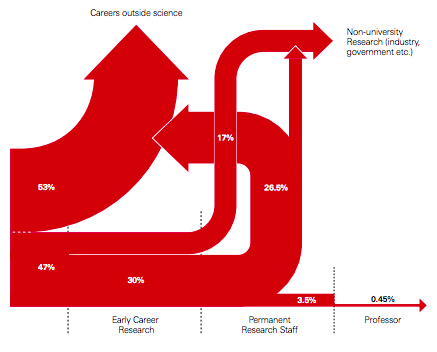

Figure 2. Career Paths of PhD-Trained Scientists in the U.K. Adapted from The Royal Society’s “The Scientific Century: securing our future prosperity”

- 61% of STEM PhD graduates end up working outside of academia.

- A recent Nature survey found that academic researchers spend only about 40% of their time conducting research.

- In 2009, The Lancet estimated that 85% of biomedical research funding was wasted.

Wait a second… 85% of biomedical research funding is wasted? In 2009, this equated to over $170b annually. How is that even possible?

A headline this sensational garnered plenty of criticism from the scientific community, so The Lancet followed up this paper with a five-part series entitled “Research: increasing value, reducing waste”. In the series, the authors attribute inefficiency to nebulous funding priorities, unreliable study designs, burdensome regulations, incomplete reporting of findings, and a lack of access to published results.

While I applaud The Lancet for inciting an important conversation, the authors mistake symptoms for causes and fail to identify the systemic flaws in academic research that are the true source of this tremendous waste.

Understanding the flow of capital through the global scientific economy is the key to identifying the root causes of waste in academic research.

Academic research involves four main stakeholders:

- Funders

- Scientists

- Publishers

- The General Public

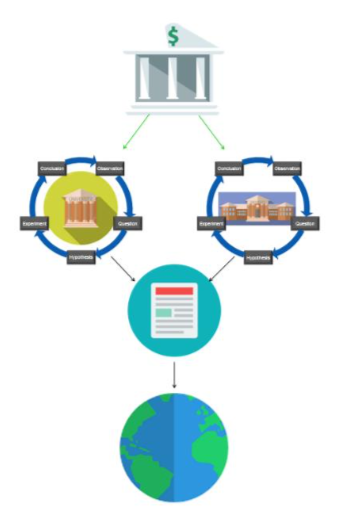

Figure 3. Overview of the scientific research economy

- Funders provide capital to scientists via research grants.

- Scientists convert grant funding into raw data, then process that data into knowledge packaged for publishers.

- Publishers curate the newly discovered knowledge and disseminate it to the general public for consumption.

Ideally, these stakeholders would work together to maximize the impact of research spending towards achieving community-determined goals. In reality, each stakeholder is an independent economic agent that generally acts out of self-interest.

Funders, researchers, and publishers respond to their own personal incentives at the cost of the efficiency of the system as a whole.

Funders of scientific research are interested in two things:

- Achieving organizational goals

- Maximizing the impact of their scientific investment.

The organizations that fund scientific research are incredibly diverse. Each institution has its own set of goals that it hopes to achieve with its funding decisions.

For example, in 2016 Susan G. Komen announced their intention to cut breast cancer deaths in the United States in half by “focusing on new methods to treat breast cancers that do not currently respond to standard-of-care therapies”. In comparison, the NIH’s mission is “to seek fundamental knowledge about the nature and behavior of living systems and the application of that knowledge to enhance health, lengthen life, and reduce illness and disability.”

The mechanism used by funders to ensure their capital is effectively leveraged towards achieving these goals is the research grant. Funding organizations invite scientists to apply for grants by detailing a proposed research project. These applications are reviewed and the scientists deemed to propose the most viable projects receive an award.

Unfortunately, there are no objective metrics that can accurately assess the quality of a researcher or their grant application.

Institutions attempt to standardize this subjective process by providing grant reviewers with review criteria. For example, the NIH asks reviewers to assess a grant application by judging its significance, investigators, innovation, approach, and environment. Despite reviewers’ best efforts, research has shown that these criteria do not produce consistent evaluations.

A 2017 paper published in PNAS found that there was no agreement between NIH reviewers in their qualitative or quantitative evaluations of the same grant applications:

“It appeared that the outcome of the grant review depended more on the reviewer to whom the grant was assigned than the research proposed in the grant.”

Because the success of a scientist’s career is largely dependent on their ability to raise funding, the lack of objective metrics in the grant-application process has tremendous consequences. Out of necessity, the industry has typically fallen back on bibliometrics, or publication history, to judge a scientist’s quality.

The general use of bibliometrics to compare scientists has incentivized them to be “productive” and produce “impactful” research.

A scientist’s “productivity” is a measure of the number of publications they produce. The more a scientist publishes, the more successful they are considered. Simple as that.

The “impact” of any given publication is a slightly more pseudo-scientific measure of quality. The most influential metric defining a publication’s “impact” is the impact factor of the academic journal in which the paper is published. A journal’s impact factor for a given year is the number of citations received that year by the papers it published in the previous two years, divided by the number of citable papers it published in those two years. In other words, it measures how often the journal’s recent papers are cited on average. This creates a prestige hierarchy in which the relative importance of any journal within its field of science is determined by its impact factor ranking.
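
The two-year impact factor described above boils down to a short calculation. The sketch below is a simplified illustration, assuming citation and article counts have already been tallied per publication year; the function name `impact_factor` and the example counts are hypothetical, and real rankings (such as Clarivate’s Journal Citation Reports) apply their own rules about which items count as “citable”.

```python
from typing import Mapping


def impact_factor(
    year: int,
    citations_by_cited_year: Mapping[int, int],
    citable_items_by_year: Mapping[int, int],
) -> float:
    """Simplified two-year journal impact factor for `year`.

    citations_by_cited_year[y]: citations received during `year` by papers
        the journal published in year y (hypothetical, pre-tallied counts).
    citable_items_by_year[y]: number of citable papers published in year y.
    """
    window = (year - 1, year - 2)  # only the two preceding years count
    citations = sum(citations_by_cited_year.get(y, 0) for y in window)
    items = sum(citable_items_by_year.get(y, 0) for y in window)
    if items == 0:
        raise ValueError("no citable items published in the two-year window")
    return citations / items


if __name__ == "__main__":
    # Hypothetical journal: citations received in 2017, keyed by the year the
    # cited paper was published. The 2010 citations fall outside the two-year
    # window and are ignored.
    citations = {2016: 1000, 2015: 800, 2010: 5000}
    items = {2016: 250, 2015: 200}
    print(impact_factor(2017, citations, items))  # 1800 / 450 = 4.0
```

Because the result is an average over all of a journal’s recent papers, a handful of heavily cited articles can lift the score for everything the journal publishes, which is one reason a journal’s impact factor is a poor proxy for the quality of any single paper.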

The problem with incentivizing researchers to be “productive” and produce “impactful” research is that these metrics are inaccurate substitutes for true measures of scientific quality. This creates a Publish-or-Perish atmosphere that disincentivizes the sharing of data and pushes scientists to produce a high volume of research outputs that are tailor-made to be accepted in high impact-factor journals.

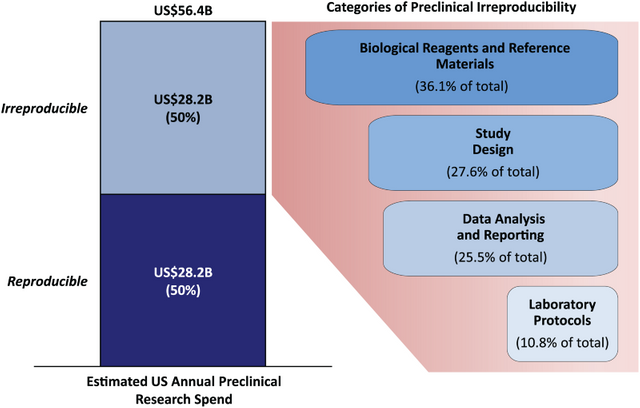

The end result of the scientific economy’s dependence on bibliometrics to judge a researcher’s quality is terrifying. Negative results and replication studies are an integral part of a healthy scientific ecosystem, but they are unlikely to attract many citations, so prestigious journals have little interest in publishing them. A 2015 article in PLOS Biology estimated that over 50% of preclinical biomedical research is irreproducible. In the U.S. alone, that staggering figure translates to over $28b wasted annually. And that is without taking into account the “impact” of irreproducible science going undetected and seeping its way into accepted knowledge.

Figure 4. An Overview of the Economics of Preclinical Research Spending in the U.S. with Categories of Errors that Resulted in Irreproducibility. Adapted from Freedman et al., 2015
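
The waste estimate is essentially the product of two numbers: the share of research that is irreproducible and the total amount spent on that research. The sketch below makes that arithmetic explicit under the simplifying assumption that waste scales linearly with the irreproducible fraction; the function names are hypothetical, and the only inputs taken from the text are the 50% rate and the $28b figure.

```python
def wasted_spending(total_spending: float, irreproducible_fraction: float) -> float:
    """Spending attributed to irreproducible work.

    Assumes (simplistically) that an irreproducible study costs the same as
    an average study, so waste scales linearly with the fraction.
    """
    if not 0.0 <= irreproducible_fraction <= 1.0:
        raise ValueError("irreproducible_fraction must be between 0 and 1")
    return total_spending * irreproducible_fraction


def implied_total_spending(waste: float, irreproducible_fraction: float) -> float:
    """Invert the relationship: what total spending does a waste figure imply?"""
    if not 0.0 < irreproducible_fraction <= 1.0:
        raise ValueError("irreproducible_fraction must be in (0, 1]")
    return waste / irreproducible_fraction


if __name__ == "__main__":
    # Figures from the text, in billions of U.S. dollars per year,
    # treated as exact for the sake of the arithmetic.
    total = implied_total_spending(28.0, 0.50)
    print(total)  # 56.0: the annual preclinical spending the two figures imply
    # Because the rate is "over 50%", $28b behaves like a floor, not a ceiling:
    for rate in (0.50, 0.60, 0.70):
        print(rate, wasted_spending(total, rate))
```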

The beneficiaries of the scientific economy’s inability to divorce itself from bibliometrics are for-profit academic publishers. They are an antiquated institution whose value proposition of knowledge curation and dissemination disappeared with the advent of the internet. Despite this, research funders’ dependence on impact factor as a measure of research quality has allowed these companies to thrive. For example, Elsevier accounts for 24.1% of all scientific papers published. In 2010, its scientific publishing arm reported just over £2b in revenue and operated at a 36% profit margin.

For-profit academic publishers are incentivized by… well… profit.

High-impact-factor academic journals can charge scientists a hefty submission fee, and then place that content behind paywalls that require either an unreasonably large one-time fee or an institutional subscription to access. The result is an inequitable distribution of access to knowledge drawn along socioeconomic lines.

Recently, there has been a grassroots movement to openly publish research outputs. The open-science community is growing, but the vast majority of open-access publishers lack the academic clout to put a significant dent in the demand for publishing in high-impact journals.

Let’s review. Diverse funders want to achieve their institutional goals by spending their money effectively, so they invite researchers to apply for grants. There are no accurate metrics to judge the quality of a grant application, so funders are forced to rely on substandard measures of a scientist’s “productivity” and “impact” to decide where their money is best spent. The use of these poor metrics creates a prestige hierarchy of academic publishers that empowers high-impact-factor journals to restrict access to knowledge and collect obscene profits. The downstream result is a Publish-or-Perish climate for scientists that trades collaboration and healthy research practices for often irreproducible “productivity” and tremendous waste.

Great… Thanks, Pat. Tell me something I don’t know. Like… how do we fix it?

Well, that’s the easy part. Follow the flow of capital through the scientific economy. It’s actually kind of funny, in a morbid way. Funders use research grants to maximize the impact of their institution’s capital, but in doing so, they lay the groundwork for perverse incentives that result in massive waste throughout the entire economy.

All we have to do is organize the funders of scientific research to collectively set financial incentives that reward healthy research practices.

Once we do that, we can take some of that $170b we just saved and buy some confocal microscopes. ’Cause if there’s one thing I know for sure, it’s that the world needs more microscopy art.
