Text Analysis On The Dr. Seuss - The Cat In The Hat Book With R Programming

Hi there. In this programming post, I share some experimental work on text analysis/mining with the programming language R. Here, I analyze the words in the Dr. Seuss kids' book The Cat In The Hat.

A full version of this post can be found on my website with this link

Sections

- Introduction

- Wordclouds On The Most Common Words In The Cat In The Hat

- Most Common Words Plot

- Sentiment Analysis On The Cat In The Hat

- References

Introduction

A text version of the book is available at https://github.com/robertsdionne/rwet/blob/master/hw2/drseuss.txt. I copied its contents into a separate .txt file for offline use.

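The R packages used are dplyr, tidyr, ggplot2, tidytext, wordcloud and tm. They cover data manipulation, data wrangling, data visualization, text cleaning and wordcloud generation in R. In recent versions of tidytext, the nrc and AFINN lexicons are downloaded through the textdata package, so textdata is needed as well for the sentiment analysis section.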

(To install a package in R, use install.packages("pkg_name"))

# Text Mining on the Dr. Seuss - The Cat In The Hat Kids Book

# Text Version Of Book Source:

# https://github.com/robertsdionne/rwet/blob/master/hw2/drseuss.txt

# 1) Wordclouds

# 2) Word Counts

# 3) Sentiment Analysis - nrc, bing and AFINN Lexicons

#----------------------------------

# Load libraries into R:

# Install packages with install.packages("pkg_name")

library(dplyr)

library(tidyr)

library(ggplot2)

library(tidytext)

library(wordcloud)

library(tm)

Wordclouds Of The Most Common Words In The Cat In The Hat Book

I first load in the The Cat In The Hat book from the offline text file with the readLines() function. Afterwards, the readLines() object is put into a VectorSource and then into a Corpus. (Make sure to set the working directory in R where the file is located.)

Once you have the Corpus object, tm_map() can be used to clean up the text. Options include removing punctuation, converting text to lowercase, removing numbers, stripping extra whitespace and removing stopwords (common words like the, and, or, for, me).
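As a rough illustration of what these cleaning steps do, here is a base-R approximation that needs no packages. It is a sketch, not tm's implementation: the stopword vector is a small hypothetical list (tm's stopwords('english') is much longer), the function name clean_lines() is made up, and the sample lines come from the start of the book as previewed later in this post.

```r
# Base-R approximation of the tm_map() cleaning steps (a sketch, not tm itself)
clean_lines <- function(text_lines, stop_list) {
  x <- gsub("[[:punct:]]", "", text_lines)  # like removePunctuation
  x <- tolower(x)                            # like content_transformer(tolower)
  x <- gsub("[[:digit:]]+", "", x)           # like removeNumbers
  # Split on runs of whitespace, drop stopwords (like removeWords) and
  # re-join with single spaces (which also does the job of stripWhitespace)
  vapply(strsplit(x, "[[:space:]]+"), function(w) {
    paste(w[w != "" & !(w %in% stop_list)], collapse = " ")
  }, character(1))
}

# Hypothetical mini stopword list; tm's stopwords("english") is much longer
demo_stop <- c("the", "it", "was", "too", "to", "so", "we", "in", "did", "not")

sample_lines <- c("The sun did not shine.", "It was too wet to play.",
                  "So we sat in the house")
clean_lines(sample_lines, demo_stop)
# returns c("sun shine", "wet play", "sat house")
```

One difference worth knowing: tm's removeWords leaves extra spaces behind where words were removed, whereas this sketch re-joins the remaining words with single spaces.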

# 1) Wordclouds

# Reference: http://www.sthda.com/english/wiki/text-mining-and-word-cloud-fundamentals-in-r-5-simple-steps-you-should-know

# Ref 2: https://www.youtube.com/watch?v=JoArGkOpeU0

catHat_book <- readLines("cat_in_the_hat_textbook.txt")

catHat_text <- Corpus(VectorSource(catHat_book))

# Clean the text up:

catHat_clean <- tm_map(catHat_text, removePunctuation)

catHat_clean <- tm_map(catHat_clean, content_transformer(tolower))

catHat_clean <- tm_map(catHat_clean, removeNumbers)

catHat_clean <- tm_map(catHat_clean, stripWhitespace)

# Remove English stopwords such as the, and, or, over, under and so on:

catHat_clean <- tm_map(catHat_clean, removeWords, stopwords('english'))

The cleaned corpus is then converted into a term-document matrix and then into a data frame of word frequencies. Once the data frame is ready, wordclouds and bar graphs can be generated.
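Because readLines() returns one element per line, VectorSource() makes every line of the book its own document, so the term-document matrix has one row per word and one column per line. rowSums() then adds up each word's counts across all lines. The small base-R sketch below uses a hand-made, hypothetical 3 x 3 matrix in place of as.matrix(td_mat) to show that step.

```r
# Hypothetical term-document matrix: rows are words, columns are lines of text
tdm_demo <- matrix(c(1, 0, 2,
                     0, 1, 1,
                     1, 0, 0),
                   nrow = 3, byrow = TRUE,
                   dimnames = list(Terms = c("cat", "hat", "fish"),
                                   Docs  = c("1", "2", "3")))

# Add each word's counts across all lines, then sort from most to least frequent
term_freq <- sort(rowSums(tdm_demo), decreasing = TRUE)

# unname() keeps the words out of the row names, so they are not printed twice
freq_df <- data.frame(word = names(term_freq), freq = unname(term_freq),
                      stringsAsFactors = FALSE)
freq_df
```

Passing unname() for the freq column stops data.frame() from using the words as row names, which is why each word appears twice per row in the head() output of this post (once as the row name and once in the word column).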

# Convert to Term Document Matrix:

td_mat <- TermDocumentMatrix(catHat_clean)

tdm_matrix <- as.matrix(td_mat) # renamed from "matrix" so it is not confused with base R's matrix()

sorted <- sort(rowSums(tdm_matrix), decreasing = TRUE)

data_text <- data.frame(word = names(sorted), freq = sorted)

# Preview data:

> head(data_text, 30)

word freq

like like 88

will will 58

said said 43

sir sir 37

one one 35

fish fish 34

house house 29

cat cat 29

say say 29

now now 29

things things 26

fox fox 26

eat eat 26

grinch grinch 26

two two 25

can can 25

box box 25

look look 24

thing thing 22

socks socks 22

hat hat 20

know know 20

hop hop 18

good good 17

new new 17

knox knox 17

little little 16

mouse mouse 16

bump bump 15

saw saw 15

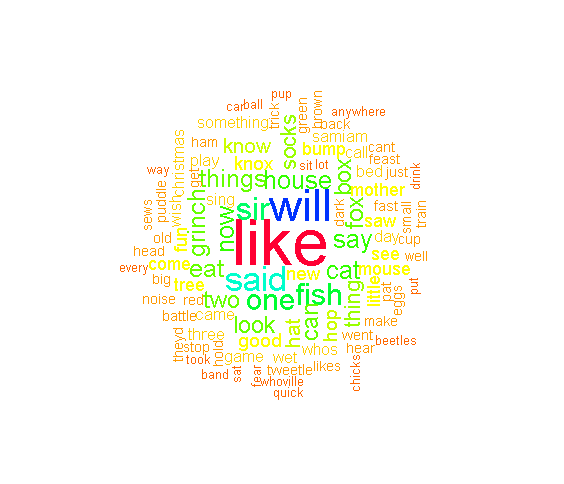

The wordcloud() function from the wordcloud package allows for the generation of a colourful wordcloud as shown below.

# Wordcloud with colours:

set.seed(1234)

wordcloud(words = data_text$word, freq = data_text$freq, min.freq = 5,

max.words = 100, random.order=FALSE, rot.per=0.35,

colors = rainbow(30))

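The word like is the most common, followed by words such as will, sir, fish, things and grinch. Note that words such as grinch, fox, socks and knox do not appear in The Cat In The Hat itself: the source text file appears to contain several Dr. Seuss books, so these counts cover the whole file rather than this one book.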

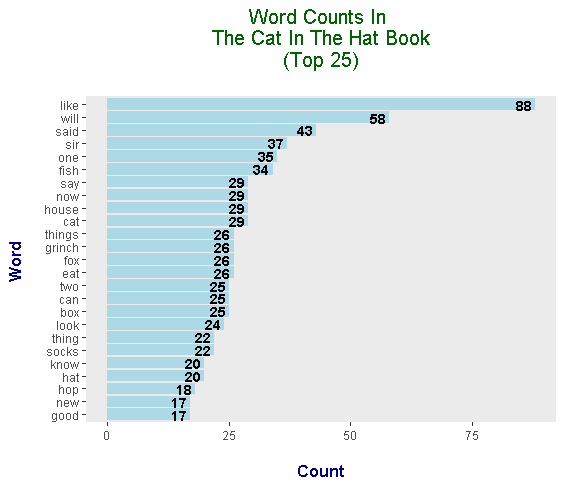
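According to the wordcloud package documentation, words with a frequency below min.freq are not plotted, max.words caps the number of words (least frequent dropped first), random.order = FALSE plots words in decreasing frequency, and rot.per is the proportion of words rotated 90 degrees; set.seed() makes the random placement and rotation reproducible. The base-R sketch below approximates only the selection and ordering part. select_cloud_words() is a hypothetical helper, not a wordcloud function.

```r
# Rough sketch of how min.freq, max.words and random.order = FALSE narrow and
# order the words before drawing (an approximation, not wordcloud's own code)
select_cloud_words <- function(df, min_freq = 5, max_words = 100) {
  kept <- df[df$freq >= min_freq, ]                    # drop words below min.freq
  kept <- kept[order(kept$freq, decreasing = TRUE), ]  # most frequent first
  head(kept, max_words)                                # keep at most max.words
}

# Counts for like, will and bump are from the preview above; "rare_word" is made up
demo <- data.frame(word = c("bump", "like", "rare_word", "will"),
                   freq = c(15, 88, 3, 58), stringsAsFactors = FALSE)
select_cloud_words(demo, min_freq = 5, max_words = 2)
```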

The Most Common Words In The Cat In The Hat Book

This part continues the code from the previous section. The data_text object is already preprocessed with the tm_map() functions and is ready for plotting with ggplot2 graphics.

I take the top 25 most common words from the book. The bars come from geom_col(), and coord_flip() turns them sideways. The axis labels and title are added with labs(), and the count labels on the bars with geom_text(). The theme() function adjusts aesthetics such as text colours and text sizes.

# Wordcounts Plot:

# ggplot2 bar plot (Top 25 Words)

data_text[1:25, ] %>%

mutate(word = reorder(word, freq)) %>%

ggplot(aes(word, freq)) +

geom_col(fill = "lightblue") +

coord_flip() +

labs(x = "Word \n", y = "\n Count ", title = "Word Counts In \n The Cat In The Hat Book \n (Top 25) \n") +

geom_text(aes(label = freq), hjust = 1.2, colour = "black", fontface = "bold", size = 3.7) +

theme(plot.title = element_text(hjust = 0.5, colour = "darkgreen", size = 15),

axis.title.x = element_text(face="bold", colour="darkblue", size = 12),

axis.title.y = element_text(face="bold", colour="darkblue", size = 12),

panel.grid.major = element_blank(),

panel.grid.minor= element_blank())

A wordcloud does not show the exact count for each word. The bar graph, with its numeric labels, shows each word's count directly.

The most common words in The Cat In The Hat include like, will, said, sir, one, fish and say.
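The mutate(word = reorder(word, freq)) step in the bar plot code is what orders the bars by count; without it, ggplot2 would order a character axis alphabetically. A minimal base-R check, using three counts from the preview table:

```r
# reorder() (base R, stats package) makes a factor whose levels are sorted by
# the numeric values, smallest first; ggplot2 draws a discrete axis in level order
words <- c("like", "fish", "will")
freq  <- c(88, 34, 58)   # counts from the preview table above

ordered_words <- reorder(words, freq)
levels(ordered_words)   # "fish" "will" "like"
levels(factor(words))   # default alphabetical order: "fish" "like" "will"
```

With coord_flip(), the first level is drawn at the bottom, so like, the most frequent word, ends up at the top of the chart.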

Sentiment Analysis On The Cat In The Hat

Note: Code is shown only for the nrc lexicon. For the bing and AFINN lexicons, only the graphs are shown.

Sentiment analysis looks at a piece of text and determines whether the text is positive or negative (depending on the lexicon). Three lexicons are used here for analyzing words. These are nrc, bing and AFINN.

Keep in mind that each lexicon has its own way of scoring the words in terms of positive/negative sentiment. In addition, some words are in certain lexicons and some words are not. These lexicons are not perfect since they are subjective.
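To make the point about coverage concrete: a lexicon can only score the words it contains, so two lexicons may end up judging different subsets of the same book. The sketch below uses two hypothetical toy lexicons and a hypothetical word list, not the real nrc, bing or AFINN data.

```r
# Hypothetical words and two hypothetical toy lexicons (not real nrc/bing/AFINN data)
book_words <- c("fun", "trick", "mess", "hat", "fish")
lexicon_a  <- c(fun = "positive", trick = "negative")
lexicon_b  <- c(fun = "positive", mess = "negative")

# Only words present in a lexicon can be scored; the rest are silently ignored
scored_a <- intersect(book_words, names(lexicon_a))
scored_b <- intersect(book_words, names(lexicon_b))
scored_a              # "fun" "trick"
scored_b              # "fun" "mess"
lexicon_a[scored_a]   # labels lexicon_a assigns to the words it covers
```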

I read the book into R again and convert it into a tibble (a neater data frame). The head() function is used to preview the start of the book.

# 3) Sentiment Analysis

# Is the book positive, negative, neutral?

catHat_book <- readLines("cat_in_the_hat_textbook.txt")

# Preview the start of the book:

catHat_book_df <- tibble(Text = catHat_book) # tibble aka neater data frame (data_frame() is deprecated)

> head(catHat_book_df, n = 15)

# A tibble: 15 x 1

Text

<chr>

1 The sun did not shine.

2 It was too wet to play.

3 So we sat in the house

4 All that cold, cold, wet day.

5

6 I sat there with Sally.

7 We sat there, we two.

8 "And I said, \"How I wish"

9 "We had something to do!\""

10

11 Too wet to go out

12 And too cold to play ball.

13 So we sat in the house.

14 We did nothing at all.

The unnest_tokens() function is then applied to the tibble so that each word of the book gets its own row. An anti_join() with tidytext's stop_words data set removes English stop words such as the, and, for, my and myself. Finally, count() with the sort = TRUE argument returns the count for each word, sorted from most to least frequent.

catHat_book_words <- catHat_book_df %>%

unnest_tokens(output = word, input = Text)

# Retrieve word counts as set up for sentiment lexicons:

catHat_book_wordcounts <- catHat_book_words %>%

anti_join(stop_words, by = "word") %>%

count(word, sort = TRUE)

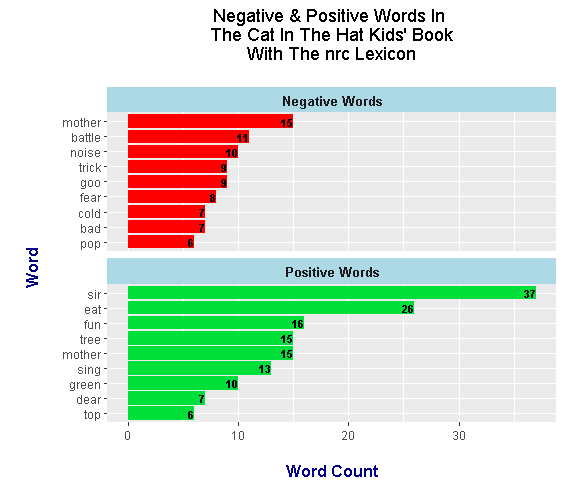
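For intuition, the tidytext pipeline above (tokenize, remove stop words, count) can be approximated in base R. This is a sketch under simplifying assumptions: splitting on non-letters is cruder than the tokenizer unnest_tokens() uses, the stop list is a small hypothetical one rather than tidytext's stop_words, and count_words() is a made-up helper.

```r
# Base-R sketch of unnest_tokens() -> anti_join(stop_words) -> count(sort = TRUE)
count_words <- function(text_lines, stop_list) {
  # One word per element: lowercase, then split on anything that is not a letter
  tokens <- unlist(strsplit(tolower(text_lines), "[^a-z]+"))
  tokens <- tokens[tokens != ""]
  # Anti-join step: drop every token that appears in the stop list
  tokens <- tokens[!(tokens %in% stop_list)]
  # Count each word and sort from most to least frequent
  counts <- sort(table(tokens), decreasing = TRUE)
  data.frame(word = names(counts), n = as.integer(counts),
             stringsAsFactors = FALSE)
}

# Lines 11-14 of the book preview above, with a hypothetical stop list
book_lines <- c("Too wet to go out", "And too cold to play ball.",
                "So we sat in the house.", "We did nothing at all.")
demo_stop <- c("to", "go", "out", "and", "so", "we", "in", "the", "did", "at", "all")
count_words(book_lines, demo_stop)
```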

nrc Lexicon

The nrc lexicon assigns words to one or more of ten categories: positive, negative, anger, anticipation, disgust, fear, joy, sadness, surprise and trust. A word can belong to more than one category. Here, the sentiments of interest from the nrc lexicon are negative and positive.
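Because the nrc lexicon stores one row per word-category pair, an inner join repeats a word once for each category it matches, which is why mother shows up twice in the preview of catHat_book_words_nrc. The base-R sketch below reproduces that with merge() and a tiny nrc-style table built only from rows shown in that preview (the real lexicon is far larger).

```r
# Word counts from the tables in this post (mother 15, fun 16, hat 20)
word_counts <- data.frame(word = c("mother", "fun", "hat"),
                          n = c(15L, 16L, 20L), stringsAsFactors = FALSE)

# Tiny nrc-style table: one row per (word, category) pair, using only rows
# that appear in the preview; the real nrc lexicon is much larger
nrc_style <- data.frame(word = c("mother", "mother", "fun"),
                        sentiment = c("negative", "positive", "positive"),
                        stringsAsFactors = FALSE)

# merge() with the default all = FALSE is an inner join, like inner_join():
# hat has no match and is dropped, while mother matches twice
joined <- merge(word_counts, nrc_style, by = "word")
joined
nrow(joined)    # 3 rows
sum(joined$n)   # 46: mother's 15 occurrences are counted once per category
```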

#### Using nrc, bing and AFINN lexicons

word_labels_nrc <- c(

`negative` = "Negative Words",

`positive` = "Positive Words"

)

### nrc lexicons:

# get_sentiments("nrc")

catHat_book_words_nrc <- catHat_book_wordcounts %>%

inner_join(get_sentiments("nrc"), by = "word") %>%

filter(sentiment %in% c("positive", "negative"))

# Preview common words with sentiment label:

> head(catHat_book_words_nrc, n = 12)

# A tibble: 12 x 3

word n sentiment

<chr> <int> <chr>

1 sir 37 positive

2 eat 26 positive

3 fun 16 positive

4 mother 15 negative

5 mother 15 positive

6 tree 15 positive

7 sing 13 positive

8 battle 11 negative

9 green 10 positive

10 noise 10 negative

11 goo 9 negative

12 trick 9 negative

A plot with ggplot2 graphics can be generated.

# Sentiment Plot with nrc Lexicon (Word Count over 5)

catHat_book_words_nrc %>%

filter(n > 5) %>%

ggplot(aes(x = reorder(word, n), y = n, fill = sentiment)) +

geom_bar(stat = "identity", position = "identity") +

geom_text(aes(label = n), colour = "black", hjust = 1, fontface = "bold", size = 3) +

facet_wrap(~sentiment, nrow = 2, scales = "free_y", labeller = as_labeller(word_labels_nrc)) +

labs(x = "\n Word \n", y = "\n Word Count ", title = "Negative & Positive Words In \n The Cat In The Hat Kids' Book \n With The nrc Lexicon \n") +

theme(plot.title = element_text(hjust = 0.5),

axis.title.x = element_text(face="bold", colour="darkblue", size = 12),

axis.title.y = element_text(face="bold", colour="darkblue", size = 12),

strip.background = element_rect(fill = "lightblue"),

strip.text.x = element_text(size = 10, face = "bold")) +

scale_fill_manual(values = c("#FF0000", "#01DF3A"), guide = "none") +

coord_flip()

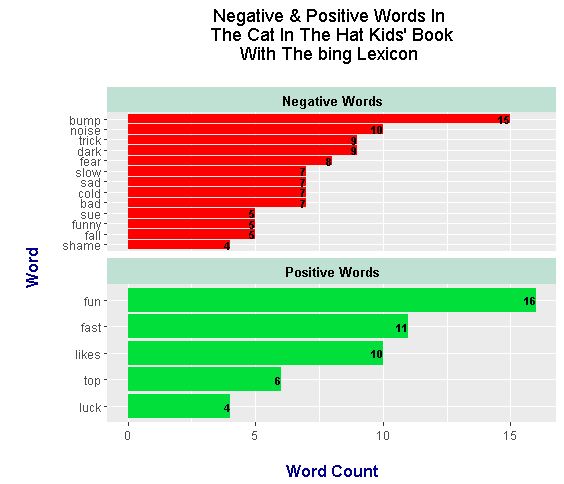

bing Lexicon

The bing lexicon categorizes words as either positive or negative. In the bar plot below, the top words differ from the ones selected with the nrc lexicon. (These lexicons are subjective.)

According to bing, the top negative word is bump. Other intriguing negative words include sue, funny, trick and noise. The word sue could be a verb (as in to sue someone) or a name. Having the word funny labelled as negative is kind of weird.

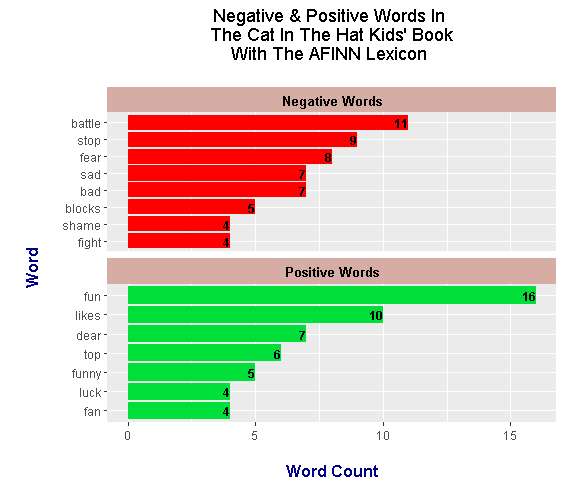
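No code is shown for bing, but one common way to reduce its positive/negative labels to a single number, used in Text Mining With R, is to subtract the negative word count from the positive word count. A minimal base-R sketch: net_sentiment() is a hypothetical helper, the counts are taken from tables earlier in this post, and fun being positive in bing is an assumption. Three words are nowhere near the book's real score.

```r
# Net sentiment = positive occurrences minus negative occurrences (a sketch)
net_sentiment <- function(counts) {
  sum(counts$n[counts$sentiment == "positive"]) -
    sum(counts$n[counts$sentiment == "negative"])
}

# bump (15), trick (9) and fun (16) counts come from earlier tables; bump and
# trick are negative per the text above, fun is ASSUMED positive in bing
bing_demo <- data.frame(word = c("bump", "trick", "fun"),
                        n = c(15L, 9L, 16L),
                        sentiment = c("negative", "negative", "positive"),
                        stringsAsFactors = FALSE)
net_sentiment(bing_demo)   # 16 - (15 + 9) = -8
```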

AFINN Lexicon

Words in the AFINN lexicon are given an integer score from -5 to +5. Negative scores mark negative words and positive scores mark positive words.
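Because AFINN gives integer scores rather than labels, a book-level score can be computed by weighting each word's score by how often it occurs and summing. The base-R sketch below shows that arithmetic; afinn_total() is a hypothetical helper, and the scores in afinn_demo are placeholders, not values looked up in AFINN.

```r
# AFINN-style total: each occurrence adds the word's integer score (-5 to +5)
afinn_total <- function(counts) sum(counts$n * counts$value)

# fun (16) and battle (11) counts are from the nrc preview in this post; the
# scores below are placeholders, NOT looked up in the real AFINN lexicon
afinn_demo <- data.frame(word = c("fun", "battle"), n = c(16L, 11L),
                         value = c(2L, -2L), stringsAsFactors = FALSE)
afinn_demo$contribution <- afinn_demo$n * afinn_demo$value
afinn_demo
afinn_total(afinn_demo)   # 16 * 2 + 11 * (-2) = 10
```

Sorting words by the contribution column is one way to pick out the most negative and most positive words under AFINN.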

Under AFINN, the most negative word is battle and the most positive word is fun.

The word fun is featured in all three lexicons and the "negative" word bad is featured in all three as well. As different as these lexicons are in terms of categorization, there are a few common words between the three lexicons.

The nrc lexicon scores The Cat In The Hat more positively than bing and AFINN do. bing gives the book a more negative score overall, and the AFINN results are fairly balanced.

References

- R Graphics Cookbook By Winston Chang

- Text Mining With R: A Tidy Approach By Julia Silge & David Robinson

- http://www.sthda.com/english/wiki/text-mining-and-word-cloud-fundamentals-in-r-5-simple-steps-you-should-know

- https://www.youtube.com/watch?v=JoArGkOpeU0

- https://github.com/robertsdionne/rwet/blob/master/hw2/drseuss.txt

- https://stackoverflow.com/questions/3472980/ggplot-how-to-change-facet-labels

Nice work! However, it seems like the sentiment analysis is reeeeally subjective. Why is 'mother' sometimes negative and sometimes positive? Why is 'sir' positive? Why is 'funny' negative? Why is 'fast' positive? Is it positive if you 'drive too fast'? Is 'dark' negative in 'dark-haired beauty'? Is 'eat' positive if you "have nothing to eat"? Well, perhaps we can count more on 'nothing'. But then, is 'nothing' negative if you have "nothing to fear"? This last phrase contains two supposed negatives, but overall it has a positive sentiment.

Perhaps there is something I don't understand well with how this works but it seems very simplistic and misleading to take words out of context like that. Is sentiment analysis a thing? I guess it could be improved if it worked more like automated translators do, working with large volumes of data and learning to more or less associate words depending on their context, but it still seems weird to me to decide on "sentiment". This is the first time I'm seeing this so please let me know if I'm wrong.

Sentiment analysis is indeed subjective. The lexicons look at single words without context.

This reference (Chapter 2) may answer a few questions.

https://www.tidytextmining.com/sentiment.html

Here are a few parts from that reference.

Love it! Such a great example to introduce people to text analysis. This is the best stats/data science post I have read in a long time.

I have another text mining/analysis project that is coming out soon. Give it about a week or so as I have to make some tweaks.

As of late, I have really gotten into this text mining stuff. There are a lot of interesting things you can do with it. The plan is that eventually I'll transition to Python.

Thank you for your contribution and linking your references!
