Maximizing Happiness

The Wonderful VCG (Vickrey-Clarke-Groves) mechanism

The goal of the Vickrey-Clarke-Groves mechanism (a Groves mechanism is a direct quasilinear mechanism) is to maximize efficiency: each participant pays their social cost, measured by how much their presence hurts the others. Because every participant pays for the damage they impose on society, the outcome is socially optimal. We can see this in practice in Vickrey auctions, which are rather useful but have a specific problem that makes them expensive at scale. There may be approaches to get around this problem, but that is for another discussion.
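To make the "pay your social cost" idea concrete, here is a minimal sketch of a single-item Vickrey (second-price) auction. The function name `vickrey_auction` and the example bidders are hypothetical, and the sketch assumes each bid is the bidder's reported value. The winner's presence costs the others exactly what the runner-up would have gained, so that is the price.

```python
def vickrey_auction(bids):
    """Single-item sealed-bid second-price auction (illustrative sketch).

    bids: dict mapping bidder name -> bid (assumed to be the reported value).
    Returns (winner, price).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    # Rank bidders from highest to lowest bid; on ties the earlier-listed bidder wins.
    ranked = sorted(bids, key=bids.get, reverse=True)
    winner, runner_up = ranked[0], ranked[1]
    # The winner's social cost is the value the runner-up loses by not winning.
    return winner, bids[runner_up]


winner, price = vickrey_auction({"alice": 10, "bob": 7, "carol": 4})
print(winner, price)  # alice 7
```

Alice wins but pays Bob's bid of 7, not her own bid of 10, so shading her bid could not have lowered her price.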

Elan Pavlov works in the space between theory and reality. An independent computational economist based in Massachusetts, he explores how to use the formulas of classical economics in the real world. His goal: to maximize the total good—defined as whatever it is that makes an individual happy. “We’re trying to allow individuals to find out what their own goals are and maximize them,” he explains.

One way to maximize happiness, according to some economists, is to design a mechanism—a theoretical arrangement to determine who gets what and how much they should pay. Pavlov's interested in the details of implementing these mechanisms, methods for computing prices and allocations. His specialty is auctions.

The above quotes illustrate the reasoning behind some of my thought processes. The VCG mechanism has been shown to:

- Have truth as the dominant strategy

- Make efficient choices

- Ultimately and optimally implement the utilitarian welfare function
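For readers who want the three properties above in symbols, here is a hedged sketch of the standard textbook form of VCG with the Clarke pivot payment. The notation is my own assumption and does not come from the quoted article: $X$ is the set of possible outcomes, $\hat{v}_i$ is the valuation participant $i$ reports, and $v_i$ is their true valuation.

$$x^* \in \arg\max_{x \in X} \sum_{i} \hat{v}_i(x)$$

$$p_i = \max_{x \in X} \sum_{j \neq i} \hat{v}_j(x) \; - \; \sum_{j \neq i} \hat{v}_j(x^*)$$

$$u_i = v_i(x^*) - p_i$$

The first line is the efficient choice (it maximizes the sum of reported values, which is the utilitarian welfare function when reports are truthful). The second line is the social cost: how much better off everyone else would be if participant $i$ were absent. The third line is the quasilinear utility each participant ends up with.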

Mechanism design is the overall topic of discussion here, and terms such as dominant strategy and utilitarian welfare function may need to be defined for people who don't have a background in economics. In game theory, a dominant strategy is one that does at least as well as any other strategy no matter what the other players decide to do. A strategy is called strictly dominant if it gives the player strictly greater utility than every other strategy, whatever the other players do. The utilitarian welfare function is simply the sum of the utilities of all participants.
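The claim that truth can be a dominant strategy can be checked by brute force on a small example. The sketch below is an illustration under stated assumptions, not a proof: it uses a second-price auction with two rival bidders, whole-number bids from 0 to 10, and the assumption that a tied bid loses. The function names are hypothetical.

```python
from itertools import product


def payoff(value, my_bid, other_bids):
    """Utility of one bidder in a second-price auction; ties are lost (an assumption)."""
    highest_other = max(other_bids)
    if my_bid > highest_other:
        return value - highest_other  # win and pay the highest competing bid
    return 0  # lose and pay nothing


def truthful_is_weakly_dominant(value, grid):
    """Check on a finite bid grid that bidding `value` is never worse than any other bid."""
    for other_bids in product(grid, repeat=2):  # every combination of two rival bids
        truthful = payoff(value, value, other_bids)
        for alternative in grid:
            if payoff(value, alternative, other_bids) > truthful:
                return False
    return True


grid = range(0, 11)
print(all(truthful_is_weakly_dominant(v, grid) for v in grid))  # True
```

No matter what the rivals bid, no alternative bid ever beats bidding your true value, which is what "dominant strategy" means here (in the weak sense).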

What do we mean by utility? Utility is the "win" the player gets. We can also think of utility as happiness. When we have multiple options, we rank them (according to theory) by the amount of utility each option can provide. In theory we call this ranking a "preference function". In order to maximize happiness we need to know not only the preferences of our stakeholders but also the order (weight) of those preferences.

How do we determine how much something is worth?

In the financial context we measure the worth of something by how much it can be sold for. We rely on a technology or financial instrument called an auction which is a method of price discovery.

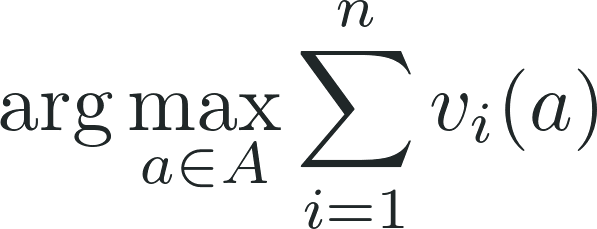

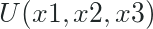

Formula for utility function:

Understanding utility gives us a way to measure desire indirectly. Desires exist, but we cannot measure them directly. Markets form to supply the demand, and they do so by using certain signals; we understand (at least in theory) that the desire someone has is correlated with the price they are willing to pay to satisfy it. Put in the simplest terms, a utility function is a numerical representation of preferences.
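As a small illustration of "a numerical representation of preferences", the sketch below assigns made-up utility numbers to three options and recovers the preference ranking from them. The options, the numbers, and the function names are all hypothetical; only the order of the numbers matters.

```python
# Hypothetical utility values for three options; only their order matters here.
utility = {"tea": 2.0, "coffee": 5.0, "water": 1.0}


def rank_by_utility(u):
    """Return the options ordered from most to least preferred."""
    return sorted(u, key=u.get, reverse=True)


def prefers(u, a, b):
    """True when option a is weakly preferred to option b, i.e. u(a) >= u(b)."""
    return u[a] >= u[b]


print(rank_by_utility(utility))  # ['coffee', 'tea', 'water']
print(prefers(utility, "tea", "water"))  # True
```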

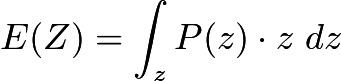

Formula for expected value:

By using utility functions we can analyze behaviors in different ways. For example, the von Neumann-Morgenstern utility theorem is widely used to analyze behavior in a way which mimics a real-world environment with uncertainty. From this formula we can understand what rationality means in this framework: VNM-rationality, which is maximizing the expected value of u (expected utility). All preferences of the agent are essentially profit seeking, where the ultimate goal is the maximization of expected utility, and we see this in all rational behavior.
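Here is a minimal sketch of expected utility, $E[u] = \sum_i p_i \, u(x_i)$, applied to two lotteries over money. The square-root utility function and the two lotteries are assumptions chosen only to show that maximizing expected utility is not the same as maximizing expected money.

```python
import math


def expected_utility(lottery, u):
    """lottery: list of (probability, outcome) pairs; u: function from outcome to utility."""
    total_p = sum(p for p, _ in lottery)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u(x) for p, x in lottery)


u = math.sqrt  # hypothetical risk-averse utility over money
safe = [(1.0, 50)]              # 50 for certain
risky = [(0.5, 0), (0.5, 100)]  # coin flip between 0 and 100

print(expected_utility(risky, u))  # 5.0
print(expected_utility(safe, u) > expected_utility(risky, u))  # True
```

Both lotteries pay 50 on average, yet an agent with this utility function prefers the safe one, and under the VNM theorem that choice is still rational.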

To put this in a moral context (with survival as the first priority and regret minimization later), we could say an agent prioritizes its own survival above all else, but if its own survival is not threatened then the order of its preferences could be based on the outcomes it desires most (or least) with regard to regret minimization. In other words the agent will have preferences for different outcomes where some outcomes are ranked higher on the scale or spectrum than others, and because all outcomes are not equal the mathematics of von Neumann-Morgenstern must apply (they show it in their proof): there is a finite number of outcomes, different outcomes have different probabilities in the outcome lottery, and the Completeness Axiom shows it is possible to rank outcomes from best to worst.

This applies to problems like the Trolley Dilemma as well, which could be thought of as a problem with only a utilitarian solution. The Trolley Dilemma highlights the fact that some scenarios provide only painful options, and the only way to make a cost-effective choice is to choose the option which produces the least pain for society. If the choice is between 5 lives lost, where multiple families suffer that loss, or only 1 life lost, and if you know nothing about any of these lives (all are equal strangers), then the loss of 5 strangers you know nothing about is greater than the loss of 1 stranger you know nothing about.

This problem becomes very different when you know the 1 person and the other lives are 5 complete strangers, as now your happiness is directly at stake in a way which it isn't when everyone involved is a complete stranger. Personalization changes the Trolley problem and reveals a challenge to utilitarian ethics, which is based on the assumption that all lives are of equal worth to the decision maker (which is not likely to be the case once the decision maker knows more about each person).

Challenges to utilitarianism

If you choose to prioritize your own happiness then you would choose the 1 you know and sacrifice 5 strangers. This would be incorrect under pure utilitarianism (which highlights a flaw in pure utilitarianism). The flaw, if an individual person were to try to follow it, is that it is an ethics not designed for individuals: individuals would be encouraged to sacrifice their own happiness for the good of society, or the good of the community, or the greater good of increasing global happiness at the expense of local happiness. In my opinion, it can be ethical for an individual to maximize their own happiness, but at what point does it become a disaster? A person who saves their friend, their partner, or their child is still maximizing their expected utility; the difference is that their preferences for people are not equal (personal relationships produce this). Because of this inherent and natural favoritism, people are likely to want to save the people they know and like, rather than sacrifice them for "the numbers".

Another challenge to the utilitarian way of thinking is illustrated by the scenario of organ harvesting. A utilitarian case can be made to steal kidneys or other organs from some people to save the maximum number of lives. The problem with this utilitarian solution is that it violates human rights. This puts the utilitarian solution directly against legal and human rights: even if by computation you could show that letting the government harvest organs could save the most lives (in theory), there is also a hidden cost (moral hazard?) of a society living in constant fear of their government sacrificing them for the greater good, or perhaps even enslaving them for the good of society (according to some math formula and computer AI).

We can see here that perhaps this kind of society would cause even more misery than the lives lost due to organ failure and we can also see that it may be possible to generate new organs with stem cells, or build artificial organs, such that this particular problem offers other utilitarian solutions which do not require violating human rights.

Rule utilitarianism allows for heuristics such as "best practices" or "human rights". Human rights would simply be the best practices or rules which, if followed, the society believes produce the best results a majority of the time. There are situations where the government may justify a quarantine (isolate the sick to save the not sick), but we also know that these situations are supposed to be extremely rare, and that under most circumstances rights should never be violated.

Does utilitarianism work even in edge cases? In my opinion utilitarianism can work, but it is computationally expensive and like any ethical system it can be used to justify atrocities. The question would be whether or not the potential for atrocities under all ethical systems (including utilitarianism) can be minimized. When utilitarianism is practiced by a company, and that company is bound by the rule of law, that company cannot legally start an organ harvesting business.

In other words, utilitarianism in this corporate or professional scenario is within the confines of the rule of law. When a government practices utilitarianism the constraints of law may not work, and in this situation it becomes a matter of how much the practitioners in government respect and value the rule of law vs what they deem to be the ethical military solution. We know that in times of war and conflict the rule of law is diminished, and in these situations governments still have to choose which outcomes they prefer using some kind of mechanism (game theory, supercomputers), so for example a government might value the lives of its citizens, and of its allies, to a much greater extent than it values the lives of its enemies. My post is not intended to go deep into militant utilitarianism or "what governments think about" because frankly I doubt any of us know what military strategists are thinking about or why they make such decisions.

Applied utilitarianism in society

Businesses, as mentioned before, can and do apply these concepts. Bitcoin itself applies mechanism design and I would go as far as to say that mechanism design is the key to why Bitcoin or any cryptocurrency works. Steem applies mechanism design as well and the most recent EIP is an example. When utilitarianism is applied in a way where it is regulated, where human rights are respected, then utilitarianism can work very well. In extreme cases like the Trolley Dilemma, where a decision must be made about how many lives should be lost, utilitarianism again succeeds at reducing the total number of lives lost when there are no better alternatives. We have to remember that these extreme cases happen when some lives are guaranteed to be lost, and questions about who to save, or how many to save, then become critical.

In my opinion there should be clear ethical guidelines approved by society on how to apply utilitarian solutions in extreme circumstances, and this would mean society should at least debate these scenarios so that the most preferred solutions can emerge. For example, organ harvesting clearly is not a solution which most of society would prefer, particularly when many other solutions can exist, just as society likely isn't going to prefer "sex credits" or "organized marriages" as a solution to incel culture if implementing it violates the rights of people in society. These solutions, which seem utilitarian on the surface, have hidden costs, and those hidden costs have the potential to produce misery in unpredictable ways, which means not all utilitarian solutions are going to be equal in a situation where multiple solutions exist.

All stakeholders within a society should be able to join in on the debates about potential ethical dilemmas. We should also, in my opinion, try to have an honest understanding of human nature, and know that humans will tend to prioritize their happiness at the expense of societal happiness. What should the balance be between personal happiness and societal happiness? These are unsolved dilemmas in utilitarian thinking, which shows that while utilitarianism may maximize happiness, it too can be taken to the extreme, and in the extreme it (the utilitarian happiness maximizer) could maximize happiness at anyone else's expense.

The Vickrey-Clarke-Groves mechanism and the math of economics clearly show that markets can and do allow a practical means of happiness maximization through mechanism design. We can also see that the Vickrey auction is a powerful tool for optimal resource allocation.

Conclusion

It is possible to be a consequentialist without being a utilitarian. A utilitarian specifically is concerned about doing what is best for society even if it is not necessarily rational for themselves. A utilitarian for example might donate one of their kidneys to save the most lives they can in the society even if it puts them at increased risk should their one kidney begin to fail.

A consequentialist merely decides right from wrong based on the consequences to their personal expected utility. I myself identify as a consequentialist, but not as a utilitarian. In my opinion a key distinction is that most or maybe all consequentialists apply some sort of regret minimization where negative emotions are anticipated and decisions are made to avoid having to experience these negative emotions. A practicing pure utilitarian would have no emotions, and no regret, and would align all their behaviors to maximizing global happiness or the good of society.

The consequentialist school of decision making is simply to determine the best course of action based on preferences for "best" or "worst" outcomes. A consequentialist wants the best outcomes (most utility) for the least cost. Negative emotions such as "regret" are a psychological cost. So we could say consequentialists do seek to maximize their own personal happiness, but they may not always seek to maximize "societal happiness" and may not always choose to sacrifice their personal happiness for "societal happiness". A utilitarian mindset is depersonalized, and a utilitarian would choose even to risk their own personal happiness.

To put it as succinctly as I can, a consequentialist makes all decisions based on an analysis of "costs vs benefits", with expected utility in mind. A utilitarian also makes all decisions based on "costs vs benefits" but their prime objective is at the societal scale rather than the personal scale. If the goal of a person is to have as many happy moments in life as possible, then consequentialism is a viable path to achieve it in my opinion. Utilitarianism is more about how to improve society so that as many people as possible can be as happy as possible, even if the costs far outweigh the benefits for the utilitarian (utilitarianism is altruistic in intent), and this would mean the happiness maximization would extend to all who can experience pleasure and pain (including non-humans).

Anyone who is a fan of Japanese anime may be familiar with Light Yagami from Death Note and would know that Light Yagami was a pure utilitarian who thought his actions were for the good of society even though he was acting like a serial killer. Those familiar with the plot will recognize that Light Yagami, aka Kira, thought he was right and that he was creating a better justice system. In my opinion, utilitarianism (maximization of societal happiness) is a great theory, but putting it into practice is risky if there are no laws and no rights to act as brakes if the planned utopia ends up evolving into a dystopia.

Utilitarianism of the kind we see from Light Yagami is the anarchist form, where Light Yagami decided who to kill based on who, in his mind, the society hated the most or valued the least. Light Yagami chose criminals, but we can see from history that when a government is given the power to take lives it often leads to genocide, to dystopia, to atrocities. While some utilitarian calculation might be able to justify it as being for the "greater good", this is not necessarily going to be true since we don't have complete information to go into these calculations (we don't know what is in everyone's mind, nor everyone's intentions). At the same time, any solution we do find should take the happiness of society into account as a factor, but the question is whether all of society should exist for happiness maximization, or whether there should be something else beyond that.

References

- https://qz.com/1196243/test-how-moral-or-immoral-you-are-with-this-utilitarian-philosophy-quiz/

- https://www.iep.utm.edu/util-a-r/

- http://classic.scopeweb.mit.edu/articles/maximizing-happiness/

- https://www.informs.org/Blogs/Operations-Research-Forum/Thirteen-Reasons-Why-the-Vickrey-Clarke-Groves-Process-is-Not-Practical
