[Steem on RPi] Updates

I have been trying to run a witness node on a Raspberry Pi 4 (8GB). Here are my earlier posts on the subject.

Related Posts

- Running a witness node on a Raspberry Pi? - Tutorial - Failed for now

- Running a witness node on a Raspberry Pi 4B? - Looking for traces of success!

- [Steem on RPi] Other links

- [STEEM on RPi] steemd Built anyway on arm64

- [Steem On RPi] A successful build and a hot dog

- [Steem on RPi] It works! - Screenshots and Logs

- [Steem on RPi] How to Build steem for ARM64

- [Steem on RPi] Sync Failed

--

I could not sync the block data, even though I tried every option I could think of.

But I will keep digging into this.

Here are some related links:

MIRA keeps the data in RocksDB files on disk rather than in RAM. This means that instead of 64 GB of RAM, you need more storage with fast read/write speeds, such as an SSD.

I think the RPi, with only 8GB of RAM, should use the MIRA build.

Here is some info about MIRA:

https://github.com/steemit/steem/blob/master/doc/mira.md
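Since MIRA trades RAM for disk, a quick pre-flight check of free space on the data volume may save a failed run. The following is a minimal sketch; the helper names `free_gib` and `has_enough_disk` and the threshold value are illustrative assumptions, not part of steemd.

```python
import shutil

GIB = 1024 ** 3

def free_gib(path: str) -> float:
    """Return free space, in GiB, on the volume that holds `path`."""
    return shutil.disk_usage(path).free / GIB

def has_enough_disk(path: str, required_gib: float) -> bool:
    """True if the volume holding `path` has at least `required_gib` GiB free."""
    return free_gib(path) >= required_gib

if __name__ == "__main__":
    # Hypothetical path and threshold; adjust both to your own setup.
    print(has_enough_disk(".", 400))
```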

When I tried to replay, I ran into the following:

- @steemchiller suggested the following:

Maybe you can replay on a faster ARM machine with same OS and then just copy the data to the Pi.

I tried to sync on an Apple M1 MacBook Air, but I could not run ./run.sh from steem-docker-ex. So I launched a Docker container and ran steemd with the --replay option, but that failed to sync too.

- When I ran a replay on the RPi 4, the block_log.index file grew until it consumed all 512GB of the RPi's storage. The log messages during the replay looked like this:

------------------------------------------------------

STARTING STEEM NETWORK

------------------------------------------------------

initminer public key: STM8GC13uCZbP44HzMLV6zPZGwVQ8Nt4Kji8PapsPiNq1BK153XTX

chain id: 0000000000000000000000000000000000000000000000000000000000000000

blockchain version: 0.23.0

------------------------------------------------------

2476125ms chain_plugin.cpp:468 plugin_startup ] Starting chain with shared_file_size: 8589934592 bytes

2476125ms chain_plugin.cpp:571 plugin_startup ] Replaying blockchain on user request.

2476126ms database.cpp:235 reindex ] Reindexing Blockchain

2484048ms block_log.cpp:142 open ] Log is nonempty

2484049ms block_log.cpp:173 open ] Index is empty

2484049ms block_log.cpp:355 construct_index ] Reconstructing Block Log Index...

I wonder what would happen with 1TB of storage. That is the one thing I did not try.
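One way to judge whether the index growth is plausible is to compare the file size with a rough estimate. The sketch below assumes one fixed-size entry per block (8 bytes by default); that entry size is an assumption about the file format, not something confirmed in this post, and the helper names are hypothetical.

```python
import os

def expected_index_bytes(num_blocks: int, entry_bytes: int = 8) -> int:
    """Rough index size, assuming one fixed-size entry per block."""
    return num_blocks * entry_bytes

def looks_runaway(index_path: str, num_blocks: int,
                  entry_bytes: int = 8, tolerance: float = 2.0) -> bool:
    """True if the index file is more than `tolerance` times the estimate."""
    actual = os.path.getsize(index_path)
    return actual > tolerance * expected_index_bytes(num_blocks, entry_bytes)

if __name__ == "__main__":
    # 60 million blocks is an illustrative figure, not a measured one.
    estimate = expected_index_bytes(60_000_000)
    print(f"estimated index size: {estimate / 1024**2:.0f} MiB")
```

Under that assumption, an index for tens of millions of blocks would be a few hundred megabytes, so an index that fills a 512GB disk would point to a problem in index construction rather than a shortage of storage.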

- MIRA flavor of steemd fails to resync/replay while re-using old datadir

I found this issue, which has a similar error message.

- steem-0.23.1 repo

@ety001 introduced the repo.

https://github.com/hfang1989/steemhf/tree/hf0.23.1

The difference between this 0.23.1 and the official repo's 0.23.x is not significant.

- When starting with only the block_log file (no block_log.index and no rocksdb_xxxx folders), the log messages were the same as before (cannot open database):

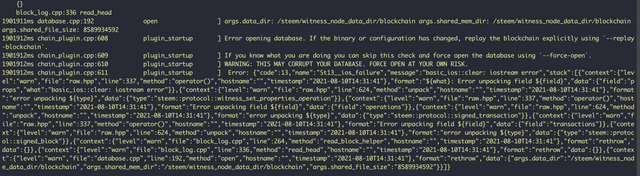

1901911ms database.cpp:192 open ] args.data_dir: /steem/witness_node_data_dir/blockchain args.shared_mem_dir: /steem/witness_node_data_dir/blockchain args.shared_file_size: 8589934592

1901912ms chain_plugin.cpp:608 plugin_startup ] Error opening database. If the binary or configuration has changed, replay the blockchain explicitly using `--replay-blockchain`.

1901912ms chain_plugin.cpp:609 plugin_startup ] If you know what you are doing you can skip this check and force open the database using `--force-open`.

1901912ms chain_plugin.cpp:610 plugin_startup ] WARNING: THIS MAY CORRUPT YOUR DATABASE. FORCE OPEN AT YOUR OWN RISK.

1901912ms chain_plugin.cpp:611 plugin_startup ] Error: {"code":13,"name":"St13__ios_failure","message":"basic_ios::clear: iostream error","stack":[{"context":{"level":"warn","file":"raw.hpp","line":337,"method":"operator()","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"${what}: Error unpacking field ${field}","data":{"field":"props","what":"basic_ios::clear: iostream error"}},{"context":{"level":"warn","file":"raw.hpp","line":624,"method":"unpack","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"error unpacking ${type}","data":{"type":"steem::protocol::witness_set_properties_operation"}},{"context":{"level":"warn","file":"raw.hpp","line":337,"method":"operator()","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"Error unpacking field ${field}","data":{"field":"operations"}},{"context":{"level":"warn","file":"raw.hpp","line":624,"method":"unpack","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"error unpacking ${type}","data":{"type":"steem::protocol::signed_transaction"}},{"context":{"level":"warn","file":"raw.hpp","line":337,"method":"operator()","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"Error unpacking field ${field}","data":{"field":"transactions"}},{"context":{"level":"warn","file":"raw.hpp","line":624,"method":"unpack","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"error unpacking 
${type}","data":{"type":"steem::protocol::signed_block"}},{"context":{"level":"warn","file":"block_log.cpp","line":264,"method":"read_block_helper","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"rethrow","data":{}},{"context":{"level":"warn","file":"block_log.cpp","line":336,"method":"read_head","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"rethrow","data":{}},{"context":{"level":"warn","file":"database.cpp","line":192,"method":"open","hostname":"","timestamp":"2021-08-10T14:31:41"},"format":"rethrow","data":{"args.data_dir":"/steem/witness_node_data_dir/blockchain","args.shared_mem_dir":"/steem/witness_node_data_dir/blockchain","args.shared_file_size":"8589934592"}}]}
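Before choosing between a replay and a resync, it can help to record exactly which files are present in the blockchain directory. The sketch below lists the top-level files and subfolders with their sizes; the function names are hypothetical, and the directory path is the one shown in the log above.

```python
import os

def dir_size(path: str) -> int:
    """Total size in bytes of all regular files under `path`."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if os.path.isfile(full):
                total += os.path.getsize(full)
    return total

def summarize_datadir(blockchain_dir: str) -> dict:
    """Map each top-level entry in `blockchain_dir` to its size in bytes."""
    summary = {}
    for entry in sorted(os.listdir(blockchain_dir)):
        full = os.path.join(blockchain_dir, entry)
        if os.path.isdir(full):
            summary[entry] = dir_size(full)
        else:
            summary[entry] = os.path.getsize(full)
    return summary

if __name__ == "__main__":
    path = "/steem/witness_node_data_dir/blockchain"
    if os.path.isdir(path):
        for name, size in summarize_datadir(path).items():
            print(f"{name:40s} {size / 1024**3:8.2f} GiB")
```

Saving this summary before and after each attempt makes it easier to tell which files a given option created, grew, or removed.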

- When I started with the --resync option, it removed the block_log file and the following messages appeared:

$ steemd --data-dir=/steem/witness_node_data_dir --resync

186675ms witness_plugin.cpp:532 plugin_startup ] Launching block production for 1 witnesses.

186675ms witness_plugin.cpp:543 plugin_startup ] witness plugin: plugin_startup() end

191641ms p2p_plugin.cpp:229 handle_block ] Error when pushing block:

10 assert_exception: Assert Exception

item->num > std::max<int64_t>( 0, int64_t(_head->num) - (_max_size) ): attempting to push a block that is too old

{"item->num":95,"head":1091,"max_size":22}

fork_database.cpp:57 _push_block

{}

database.cpp:880 _push_block

{"new_block":{"previous":"0000005ec36fa5413af6bf3dddd717223c119d75","timestamp":"2016-03-24T16:10:15","witness":"initminer","transaction_merkle_root":"0000000000000000000000000000000000000000","extensions":[],"witness_signature":"1f71b790d79b0ad1d2e63f073bb9ef5afc797352bb6c4ebbbbf2d0a4c7ddd7bd29512dfa6a5200a6bd32f317c61f358a523ee906588a0aa70f1ca108e05256cfef","transactions":[]}}

database.cpp:764 operator()
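The assertion in the log can be checked by hand with the values it printed. The sketch below re-expresses the condition from the message in Python; it mirrors only the logged expression, not the full fork database logic, and the function name is hypothetical.

```python
def is_too_old(item_num: int, head_num: int, max_size: int) -> bool:
    """Return True when the logged assertion
    item->num > max(0, head->num - max_size) would fail."""
    oldest_allowed = max(0, head_num - max_size)
    return not (item_num > oldest_allowed)

if __name__ == "__main__":
    # Values from the log: {"item->num":95,"head":1091,"max_size":22}
    print(max(0, 1091 - 22))         # 1069
    print(is_too_old(95, 1091, 22))  # True
```

Block 95 is far below the cutoff of 1069, which is why the node rejected it as too old while its own head was already at 1091.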

- I shared the following error messages with the Blurt engineer (jacob).

He said it is a DNS problem.

- As far as I remember, there were also messages like this:

{"new_block":{"previous":"0181945ba5b0e549be8fb27ee5677c9e168a49c9","timestamp":"2021-08-17T11:52:24","witness":"bukowski","transaction_merkle_root":"0000000000000000000000000000000000000000","extensions":[],"witness_signature":"20d7984d721d69f6b6142b608aada9223b45f768b0a1bd576d2587c61a51b7c4b93a6965fe601fb3514c997914ff54b47156c594b8ac970fbf49e2cce1f2a76f1d","transactions":[]}}

database.cpp:764 operator()

3143996ms p2p_plugin.cpp:231 handle_block ] Rethrowing as graphene::net exception

3147129ms fork_database.cpp:43 push_block ] Pushing block to fork database that failed to link: 0181945d155aed67fb69702a790bce76d2e05240, 25269341

3147130ms fork_database.cpp:44 push_block ] Head: 1091, 000004433bd4602cf5f74dbb564183837df9cef8

3147130ms p2p_plugin.cpp:229 handle_block ] Error when pushing block:

4080000 unlinkable_block_exception: unlinkable block

block does not link to known chain

{}

fork_database.cpp:64 _push_block

{}

database.cpp:880 _push_block

But the timestamps were 10-12 hours behind the current time. I am not sure exactly when this happened; I think it was when replaying without removing the rocksdb_xxx folders. The node seemed to be catching recent blocks, but the 10-12 hour gap persisted the whole time.

At that time, the block_log and the block_log.index files did not increase in size.
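The block IDs in these logs carry the block number in their first 8 hex digits, which the log itself confirms (0181945d... is printed next to 25269341, and 00000443... next to head 1091). The sketch below decodes that prefix and measures how far a block timestamp lags behind a reference time. It assumes the timestamps are UTC; the function names are hypothetical.

```python
from datetime import datetime, timezone

def block_num_from_id(block_id: str) -> int:
    """Decode the block number from the first 8 hex digits of a block ID."""
    return int(block_id[:8], 16)

def lag_hours(block_timestamp: str, now: datetime) -> float:
    """Hours between a block timestamp (assumed UTC) and `now`."""
    ts = datetime.strptime(block_timestamp, "%Y-%m-%dT%H:%M:%S")
    ts = ts.replace(tzinfo=timezone.utc)
    return (now - ts).total_seconds() / 3600

if __name__ == "__main__":
    incoming = block_num_from_id("0181945d155aed67fb69702a790bce76d2e05240")
    head = block_num_from_id("000004433bd4602cf5f74dbb564183837df9cef8")
    print(incoming, head, incoming - head)  # 25269341 1091 25268250
    # Compare against the current UTC time, not local wall-clock time.
    print(lag_hours("2021-08-17T11:52:24", datetime.now(timezone.utc)))
```

The gap of more than 25 million blocks between the incoming block and the local head is consistent with the "unlinkable block" error. When measuring the lag, comparing against UTC matters: if a block time is read against a local clock, the UTC offset shows up as apparent delay.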

That is all for now. Any advice and ideas are welcome.

See you later.

cc.

@steemcurator01

I never remove the block_log.index file when replaying and I've not had any issues yet. The --resync arg will start a full resync and the whole process will take much longer to complete, because it will also build the block_log from the start by syncing each block.

This is definitely not normal. The index file only contains the positions and lengths of the blocks in block_log. So it looks like steemd on ARM has a problem with reading the blocks from the log.

Thank you for your opinions. I am contacting someone who succeeded on Arch Linux with Blurt.

He also said I have to sync from the start. I will share more when I find a solution.

Great, keep me updated on the progress ;)

hello sir

Is the playsteem voting service off?

It seems to be working well. Is there a problem? I recently removed some accounts for plagiarism.

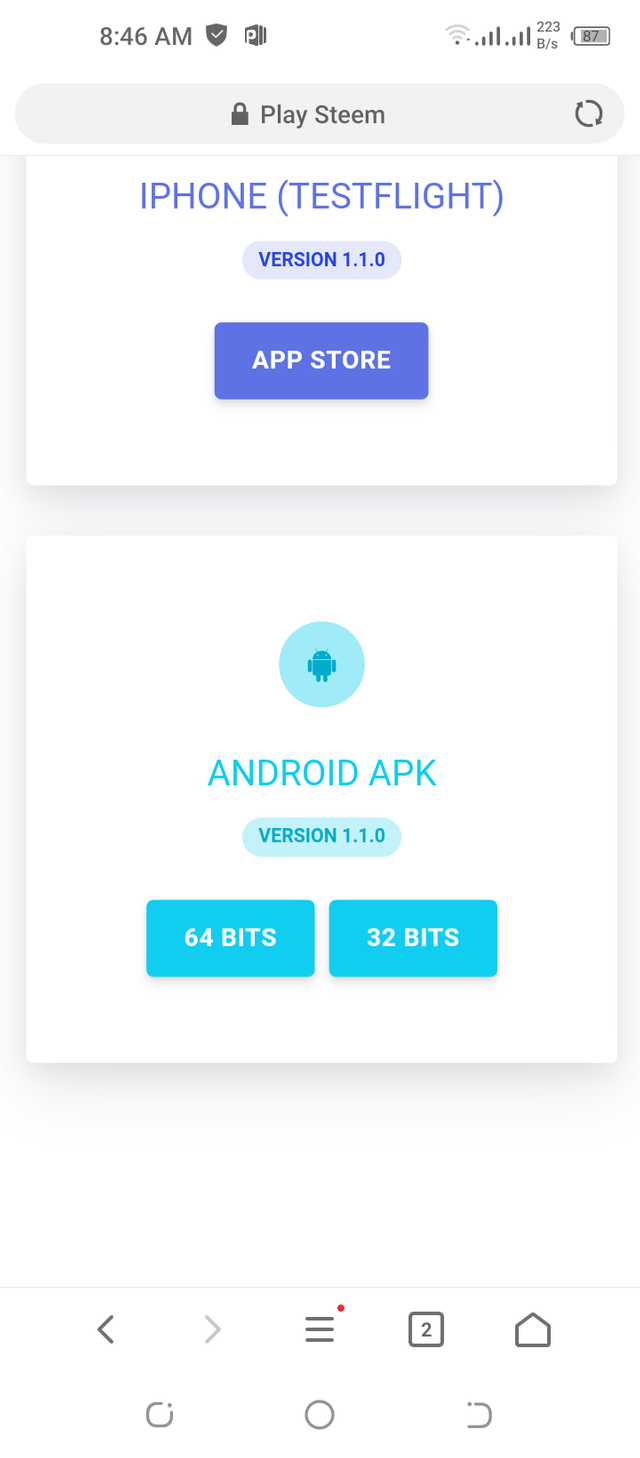

Yes, I didn't get an upvote on my last post. I haven't used the playsteem app for maybe the last month; my phone suddenly broke and I was COVID-19 positive.

what version of the app are you using? you can check in the settings screen.

I am using the latest version, downloaded a few days ago from the playsteem website.

Please check the version. If it is 1.2.1 and not working, try reinstalling.

I downloaded version 1.1.0 from your website.

show me the version in the app settings. please.

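Thanks for all your hard work. ^^

It's not going well~ Thank you.

You're putting in a lot of effort. It looks like it's not as easy as expected..

All it needs is to sync.. but the Steem data is over 300GB, so I think syncing on the RPi4 is impossible.

Trying it on an ARM-based cloud instance is the option that remains.

Ah, I see. It's a shame; it would have been nice if it had worked. Thanks for all your effort!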