Researching a FLOP and Energy Based Model for GridCoin Reward Mechanism

Summary: A model which distributes GRC in direct proportion to FLOP contributions is proposed. This requires an equivalence between CPUs and GPUs, which is established on the basis of energy consumption; as a side result, the model approximates the energy consumption of the GridCoin network. Pseudocode is included, which can also be viewed as a summary if you want to skip the justifications. Benefits include a GRC value tied to a fundamental physical asset (the Joule), accurate predictions of expected GRC based on hardware, and an incentive-compatible distribution of research-minted GRC.

Hi Everyone:

This is a model for comparing CPUs and GPUs in the BOINC and GridCoin networks. The ultimate goal is to achieve a more incentive-compatible GRC distribution in the GridCoin reward mechanism. This model distributes GRC based on floating point operation contributions, and as a side result also closely approximates the energy consumption of the GridCoin network. To understand the motivation for such a model, check out @sc-steemit's post on problems in the GridCoin reward mechanism. For an analysis of this problem from the perspective of incentive structures, I refer you to my previous posts on this topic here and here, where I laid out the motivations for these calculations. If you need a refresher on hardware, I refer you to @dutch's post on the difference between CPUs and GPUs, and @vortac's post on the difference between FP32 and FP64. Here I focus less on incentives and more on the math underlying the CPU/GPU comparison proposal.

Please remember that this is just a proposal. Feel free to poke and prod, that is how it gets better.

Terminology

- CPU = Central Processing Unit

- GPU = Graphics Processing Unit

- FP = Floating Point

- FLOP = Floating Point Operation(s)

- GFLOP = GigaFLOP = 10^9 FLOP = a billion FLOP

- FLOP/s = FLOP per second

- GFLOP/s = GigaFLOP per second

- FP32 = Floating Point 32-bit = Single-Precision

- FP64 = Floating Point 64-bit = Double-Precision

- J = Joule = Unit of Energy

- W = Watt = Joules/Second = Unit of Power

Comparing CPUs and GPUs

In response to @hotbit's question in the comments of this post, I use as examples the Intel i5-6600K, NVIDIA GTX 1080, and AMD HD 7970, and then generalize to the case where we have varying amounts of different types of hardware (as BOINC and GridCoin have).

Specifications for the three devices:

| Specifications | Intel i5-6600K | AMD HD 7970 | NVIDIA GTX 1080 |
|---|---|---|---|
| CPU FP GFLOP/s | 178 | N/A | N/A |
| GPU FP32 GFLOP/s | N/A | 3788.8 | 8227.8 |
| GPU FP64 GFLOP/s | N/A | 947.2 | 257.1 |
| Peak Power Consumption (Watts) | 91 | 250 | 180 |

Source for Intel i5-6600K; Source for AMD 7970; Source for NVIDIA 1080.

The following table shows the energy efficiency of the three devices as FLOP per unit of energy: (FLOP/s) / W = (FLOP/s) / (J/s) = FLOP/J.

| FLOP/J | Intel i5-6600K | AMD 7970 | NVIDIA 1080 |
|---|---|---|---|
| CPU FP GFLOP/J | 1.96 | N/A | N/A |
| GPU FP32 GFLOP/J | N/A | 15.2 | 45.71 |
| GPU FP64 GFLOP/J | N/A | 3.79 | 1.42 |
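A minimal Python sketch of how the second table follows from the first: each throughput figure (GFLOP/s) is divided by the power figure (W = J/s). The dictionary keys and the function name `gflop_per_joule` are illustrative only, not part of any existing tool.

```python
# Figures as quoted in the specifications table: GFLOP/s per class, and watts.
SPECS = {
    "Intel i5-6600K": {"cpu": 178.0, "watts": 91.0},
    "AMD HD 7970": {"fp32": 3788.8, "fp64": 947.2, "watts": 250.0},
    "NVIDIA GTX 1080": {"fp32": 8227.8, "fp64": 257.1, "watts": 180.0},
}


def gflop_per_joule(specs: dict) -> dict:
    """Divide each GFLOP/s figure by the power draw in W (= J/s) to get GFLOP/J."""
    table = {}
    for device, spec in specs.items():
        watts = spec["watts"]
        table[device] = {
            kind: gflops / watts
            for kind, gflops in spec.items()
            if kind != "watts"  # every other key is a throughput figure
        }
    return table


table = gflop_per_joule(SPECS)
for device, row in table.items():
    cells = ", ".join(f"{kind}: {value:.2f}" for kind, value in row.items())
    print(f"{device}: {cells}")
```

Run as written, the 1080's FP64 value comes out at about 1.428 GFLOP/J (257.1 / 180), which the table truncates to 1.42; the other values agree with the table to the precision shown.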

If we want to compare the CPU and the 7970 on the basis of energy consumption, we would say that 1.96 GFLOP on the CPU = 15.2 GFLOP in FP32 on the 7970 = 3.79 GFLOP in FP64 on the 7970, since each of these amounts of work costs 1J (note: if we had an old, inefficient GPU and a new, efficient CPU, this would not be a proper comparison; more on this later). If these were our only two options, we would be done. But we also have the 1080 here, and in BOINC there are thousands of different CPUs and GPUs, in varying numbers (not just one of each). My proposed solution is to take the weighted average of all of these devices.

First, I will give an example where I include the GTX 1080 into the above calculation, and then generalize to the more realistic case.

The average efficiency of the 7970 and 1080 in FP32 is (15.2 + 45.71)/2 = 30.5 GFLOP/J, and in FP64 it is (3.79 + 1.42)/2 = 2.6 GFLOP/J.

So with these three pieces of hardware, the ratio of CPU : FP32 GPU : FP64 GPU would be 1.96 : 30.5 : 2.6.

Now at this point you may be asking: how is this a fair comparison? Here is an example. For simplicity, I am going to use the numbers above, although this argument generalizes easily. Also for simplicity, I am going to assume that I receive exactly 1GRC for doing the work mentioned above (i.e., doing either 1.96 GFLOP on my CPU, 30.5 GFLOP FP32 on my GPU, or 2.6 GFLOP FP64 on my GPU, since I am claiming these are equal in value).

I summarize all of these calculations in the next table.

Suppose we have a WU (work unit) from a BOINC project that is CPU only, and it requires exactly 1.96 GFLOP (how convenient!). Furthermore, suppose that we have another WU that requires 30.5 FP32 GFLOP, and a third WU that requires 2.6 FP64 GFLOP.

I can only run my Intel i5-6600K on the first task; that will take 1J of energy and I will get 1GRC for it.

On the second task, I can run either my 7970 or my 1080. If I use my 7970, it will take (30.5 GFLOP) / (15.2 GFLOP/J) = 2.00J of energy and I will get 1GRC for it. If I use my 1080, it will take (30.5 GFLOP) / (45.71 GFLOP/J) = 0.667J and I will get 1GRC for it. Notice that the 1080 used only 1/3 of the energy the 7970 did to make the same amount of GRC. If you look at the second table, this should make sense, as the 1080 is 3 times more efficient than the 7970 in FP32.

On the third task, we will see the same effect as in the second, but in reverse, since the 7970 is more efficient than the 1080 in FP64. If I use my 7970, it will take (2.6 GFLOP) / (3.79 GFLOP/J) = 0.686J of energy, and I will get 1GRC for it. If I use my 1080, it will take (2.6 GFLOP) / (1.42 GFLOP/J) = 1.83J, and I will get 1GRC for it. So it takes more energy on my 1080, as expected.

| Energy (in J) Required to Mint 1GRC | Intel i5-6600K | AMD 7970 | NVIDIA 1080 |
|---|---|---|---|
| Task 1 (CPU only) | 1 | N/A | N/A |
| Task 2 (FP32) | N/A | 2 | 0.667 |
| Task 3 (FP64) | N/A | 0.686 | 1.83 |
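The energy table can be reproduced the same way: the energy needed to mint 1GRC is the GFLOP a 1-GRC task requires divided by the device's GFLOP/J. This Python sketch assumes, as the example does, that each task is sized to be worth exactly 1GRC; `joules_per_grc` is a hypothetical helper name.

```python
# GFLOP per task, sized so each task is worth exactly 1 GRC (1.96 : 30.5 : 2.6).
TASK_GFLOP = {"cpu": 1.96, "fp32": 30.5, "fp64": 2.6}

# Device efficiencies in GFLOP/J from the efficiency table; a missing key means N/A.
DEVICES = {
    "Intel i5-6600K": {"cpu": 1.96},
    "AMD 7970": {"fp32": 15.2, "fp64": 3.79},
    "NVIDIA 1080": {"fp32": 45.71, "fp64": 1.42},
}


def joules_per_grc(task_gflop: dict, devices: dict) -> dict:
    """Energy (J) each device needs for each 1-GRC task: GFLOP / (GFLOP/J)."""
    return {
        (device, kind): task_gflop[kind] / eff
        for device, effs in devices.items()
        for kind, eff in effs.items()
    }


energy = joules_per_grc(TASK_GFLOP, DEVICES)
# Cheapest way to mint 1 GRC first.
for (device, kind), joules in sorted(energy.items(), key=lambda item: item[1]):
    print(f"{kind:>4} on {device}: {joules:.3f} J per GRC")
```

Sorting by energy puts the 1080 on FP32 first (about 0.667 J per GRC) and the 7970 on FP32 last (about 2.007 J), the same ordering the table shows.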

As you can see, this preserves the fact that better hardware gets more GRC for the same amount of energy, so nothing changes on that front.

For the more general case, we just take a weighted average of all the hardware. For example, suppose we have the following setup:

| FLOP/J | CPU A | CPU B | CPU C | GPU D | GPU F | GPU G |
|---|---|---|---|---|---|---|
| Number of this type of hardware | 7 | 13 | 2 | 23 | 1 | 19 |
| CPU FP GFLOP/J | 3 | 2 | 4 | N/A | N/A | N/A |
| GPU FP32 GFLOP/J | N/A | N/A | N/A | 32 | 27 | 89 |
| GPU FP64 GFLOP/J | N/A | N/A | N/A | 16 | 5 | 22.5 |

The weighted average for the CPUs would be (7x3 + 13x2 + 2x4) / (7 + 13 + 2) = 2.5.

The weighted average for the GPUs in FP32 would be (23x32 + 1x27 + 19x89) / (23 + 1 + 19) = 57.1.

The weighted average for the GPUs in FP64 would be (23x16 + 1x5 + 19x22.5) / (23 + 1 + 19) = 18.6.

So the equivalence ratio (ER) here would be 2.5 : 57.1 : 18.6.
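A sketch of the weighted average in Python, assuming each hardware type is described by a count and its GFLOP/J per class. The `equivalence_ratio` function and the data layout are illustrative only.

```python
from collections import defaultdict


def equivalence_ratio(fleet):
    """Weighted-average GFLOP/J per class.

    `fleet` is a list of (count, {class: GFLOP/J}) entries, one per hardware
    type; each type contributes in proportion to how many units are present.
    """
    weighted_sum = defaultdict(float)
    total_count = defaultdict(int)
    for count, efficiencies in fleet:
        for kind, eff in efficiencies.items():
            weighted_sum[kind] += count * eff
            total_count[kind] += count
    return {kind: weighted_sum[kind] / total_count[kind] for kind in weighted_sum}


# The hypothetical setup from the table above (CPUs A, B, C; GPUs D, F, G).
FLEET = [
    (7, {"cpu": 3}),
    (13, {"cpu": 2}),
    (2, {"cpu": 4}),
    (23, {"fp32": 32, "fp64": 16}),
    (1, {"fp32": 27, "fp64": 5}),
    (19, {"fp32": 89, "fp64": 22.5}),
]

er = equivalence_ratio(FLEET)
print(" : ".join(f"{er[k]:.1f}" for k in ("cpu", "fp32", "fp64")))
```

With the six hypothetical hardware types it prints `2.5 : 57.1 : 18.6`. Passing the three real devices with a count of 1 each reduces it to the plain averages from the earlier example (1.96 : 30.5 : 2.6 after rounding).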

Please note that this ER, as produced by the method above, should properly be written as 2.5 GFLOP/J CPU : 57.1 GFLOP/J FP32 : 18.6 GFLOP/J FP64. However, since all three terms share the same units, we can drop the G in GFLOP and the J, so that it reads 2.5 FLOP CPU : 57.1 FLOP FP32 : 18.6 FLOP FP64, i.e., three amounts of work that are considered equal in value. This is useful in understanding the pseudocode.

The Law of Large Numbers and Theoretical Reference Hardware

This equivalence ratio could give an unfair comparison if it were based on only a small set of hardware. Since there cannot be a true comparison between CPUs and GPUs, I am relying on an assumption: since hardware progressively becomes faster and more energy efficient, and since there is high demand for both CPUs and GPUs, this progression over time is roughly equivalent between them.

With that assumption in mind, this problem is addressed by the law of large numbers. Since BOINC users number in the thousands, the distribution of hardware sophistication among the CPUs in the resulting collection should roughly match that among the GPUs.

The resulting equivalence ratio can be thought of as comprising a theoretical CPU and a theoretical GPU that are equivalent in the sense that spending 1J on the CPU is "worth" the same as spending 1J on the GPU in both FP32 and FP64.

This is easily the shakiest proposition that I am making, and there certainly could be a better method of calculating the ER. What I am curious about is 1) what do you think of the concept of the ER/theoretical reference hardware, and 2) how do you think it can be made more precise? Is there a more accurate statistical distribution that would yield a more realistic ER?

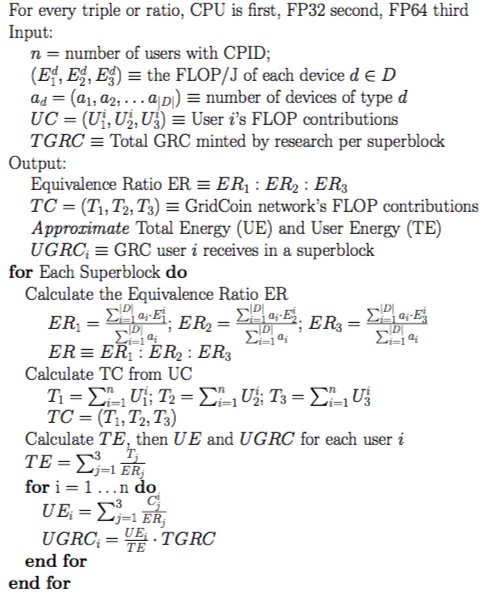

Pseudocode

As you may have noticed, there is a serious problem with this equivalence. Namely, it assumes that the hardware present in the BOINC network is constant. This is clearly not true, as new users join and existing users leave on a daily basis, not to mention that this hardware is not necessarily running 100% of the time on 100% of its cores. As with the old vs. new hardware problem mentioned above, this introduces a level of uncertainty that cannot be perfectly fixed.

However, I do think that this problem can be sufficiently addressed by taking a survey of existing hardware between superblocks. The ER above could be recomputed once every superblock. Then the user's contribution to each type of calculation within a superblock would be divided by the total GridCoin network's contribution to that type of calculation. The resulting three numbers would be the user's contributions as a percentage of the total contributions for CPU, FP32, and FP64. Then, using the ER, we can determine how much GRC a person would receive, as outlined below.

Summary of proposal in pseudocode. Please note that in the calculations of UGRC_i = UE_i / TE, we cannot cancel the ER_j - you have to take the sum first.
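Written out as formulas, the calculation might look like the following. This is a reconstruction from the surrounding text, not the author's exact pseudocode: UE_i, TE, ER_j and UGRC_i are the author's symbols (UGRC_i read here as user i's share of the research GRC), while UF_{i,j} (user i's FLOP in class j ∈ {CPU, FP32, FP64} during a superblock), TF_j (the network total for class j), R (research GRC minted per superblock) and GRC_j (the part of R assigned to class j) are labels introduced for illustration. Since ER_j is in FLOP/J, each UF_{i,j}/ER_j is in joules.

```latex
\begin{aligned}
UE_i &= \sum_{j \in \{\mathrm{CPU},\,\mathrm{FP32},\,\mathrm{FP64}\}} \frac{UF_{i,j}}{ER_j},
\qquad TF_j = \sum_i UF_{i,j},
\qquad TE = \sum_i UE_i = \sum_j \frac{TF_j}{ER_j}, \\
UGRC_i &= \frac{UE_i}{TE}
        = \frac{\sum_j UF_{i,j} / ER_j}{\sum_j TF_j / ER_j}, \\
GRC_j &= R \, \frac{TF_j / ER_j}{TE},
\qquad
R \cdot UGRC_i = \sum_j \frac{UF_{i,j}}{TF_j} \, GRC_j .
\end{aligned}
```

The ER_j appear inside sums in both the numerator and the denominator of UGRC_i, which is why they cannot be canceled. The last line is the per-class reading: splitting R across classes by equivalent joules and then pro rata within each class gives the same amount as R · UGRC_i.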

Here is another way of thinking about it: your CPU contribution will be divided by the network's total CPU contribution, and then multiplied by the amount of GRC minted for CPU-based research per superblock; the same goes for FP32 and FP64. How do we determine how much GRC is minted for CPU, FP32, and FP64? With the total FLOP for each class and the equivalence ratio.
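To check that the two readings agree (UGRC_i = UE_i / TE versus the per-class split), here is a small Python sketch of both. It assumes each user's GFLOP per class for the superblock is already known, which the post treats as an open data-collection question; the user names, their numbers, and `research_grc` are hypothetical.

```python
from collections import defaultdict
from typing import Dict

Contrib = Dict[str, float]  # class ("cpu", "fp32", "fp64") -> GFLOP in one superblock


def grc_by_energy(users: Dict[str, Contrib], er: Contrib, research_grc: float) -> Dict[str, float]:
    """UGRC_i = UE_i / TE: convert each user's GFLOP to equivalent joules, then split R."""
    ue = {u: sum(flop / er[k] for k, flop in c.items()) for u, c in users.items()}
    te = sum(ue.values())  # take the sum first; the ER_j do not cancel
    return {u: research_grc * e / te for u, e in ue.items()}


def grc_by_class(users: Dict[str, Contrib], er: Contrib, research_grc: float) -> Dict[str, float]:
    """Split R across classes by their equivalent joules, then pro rata within each class."""
    totals: Dict[str, float] = defaultdict(float)
    for c in users.values():
        for k, flop in c.items():
            totals[k] += flop
    te = sum(tf / er[k] for k, tf in totals.items() if tf > 0)
    class_grc = {k: research_grc * (tf / er[k]) / te for k, tf in totals.items() if tf > 0}
    return {
        u: sum(flop / totals[k] * class_grc[k] for k, flop in c.items() if flop > 0)
        for u, c in users.items()
    }


# Hypothetical superblock: ER from the weighted-average example, made-up users and R.
ER = {"cpu": 2.5, "fp32": 57.1, "fp64": 18.6}
USERS = {
    "alice": {"cpu": 100.0},
    "bob": {"fp32": 2000.0, "fp64": 50.0},
    "carol": {"cpu": 25.0, "fp32": 570.0},
}

for name, grc in grc_by_energy(USERS, ER, research_grc=1000.0).items():
    print(f"{name}: {grc:.1f} GRC")
```

Both functions return the same split up to floating-point rounding. Here alice's 100 CPU GFLOP count as 40 equivalent joules, more than carol's 25 CPU GFLOP plus 570 FP32 GFLOP (10 J plus about 10 J).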

Thus, you are guaranteed to receive GRC exactly proportional to your contributions to CPU, FP32, and FP64 individually. Uncertainty arises when we try to equate these three using the ER - which effectively converts those FLOP to Joules based on the weighted average of hardware in the network.

Last Issue

How can we calculate the FLOP contributions of each user? Currently there is no way to do this. I think @parejan's series on the expected return of each type of CPU is a good source of data. In their most recent State of the Network, @jringo and @parejan listed the computational power in GFLOPS for most of the projects; this is another good source of data. Finally, there is WUProp@home, which has as its goal to

collect workunits properties of BOINC projects such as computation time, memory requirements, checkpointing interval or report limit.

Many thanks to @barton26 for mentioning this project after this past Thursday's Fireside Chat, and for running it, if I remember correctly. Based on the responses to this post, my next task may be to analyze the data collection necessary to calculate the FLOP contributions of each user.

Benefits

Minted GRC would be tied to a physical asset, the Joule. This has several benefits, including

a close approximation of the amount of energy necessary to mint GRC

GRC could become associated with another, perhaps green-energy based coin (thanks @jringo for mentioning this possibility after the Fireside Chat)

it would be very easy to predict how much GRC a person would receive with their hardware

similarly to Bitcoin, it "costs" something to make a GridCoin; it has always cost something, but now it would be a stable/predictable amount

You can receive the same amount of GRC by running your hardware on any project you like, so you can crunch the project you want most without being punished for it, as you currently would be if you crunched a popular project.

Projects will be rewarded only if they have work units (WUs).
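On the prediction point: under this model a device's expected reward depends only on its throughput, running time, and class, not on the project. A hedged Python sketch, where the network's equivalent joules for the superblock (`network_equiv_joules`, i.e. TE) and the research GRC per superblock (`research_grc`) are assumed to be known or estimated; the function and parameter names are hypothetical.

```python
SECONDS_PER_HOUR = 3600


def equivalent_joules(gflops: float, hours: float, er_class: float) -> float:
    """GFLOP done over `hours`, converted to joules at the network ER for this class."""
    return gflops * hours * SECONDS_PER_HOUR / er_class


def expected_grc(gflops: float, hours: float, er_class: float,
                 network_equiv_joules: float, research_grc: float) -> float:
    """Hypothetical per-superblock estimate: the device's share of TE times R."""
    return research_grc * equivalent_joules(gflops, hours, er_class) / network_equiv_joules


# Placeholder inputs only (not real network figures): a GTX 1080 on FP32 for 24 h,
# using the FP32 entry of the hypothetical weighted-average ER.
print(expected_grc(8227.8, 24, 57.1, network_equiv_joules=1e9, research_grc=1000.0))
```

For a device whose own GFLOP/J equals the ER entry for its class, `equivalent_joules` equals the energy it actually drew (watts × seconds); this is the sense in which the model approximates the network's energy consumption.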

Conclusion

What are your thoughts?

How does this model address the many (a majority of) GridCoin crunchers who hide their hosts (hardware) from public view?

That's a really good question; I haven't given it much consideration. I've focused more on whether the idea is valid in the first place than on logistics (if it's not valid, it's probably better to work on something else), so I don't have a good answer right now, but here's what comes to mind:

It's in almost everyone's interest for the equivalence ratio to be correct, so everyone has an incentive to agree that it's accurate.

Many computers aren't hidden; perhaps they form a large enough sample to be representative of the whole.

How does @parejan get all of that data for those RAC tables? That was part of my inspiration.

Or what about malicious crunchers who try to pass off their older hardware as newer, more energy-efficient hardware in order to get a greater reward, either through a BIOS flash of their video card or some type of hacking tool?

Still, a very interesting idea/analysis. Would this system also not heavily encourage FP64 and CPU projects above FP32 projects?

In terms of hardware, how would we deal with relatively obscure or less commonly used hardware, and how would we obtain its FLOP figures? And how would we ensure that the reported FLOP figures of any given hardware are accurate?

Thanks for the good questions!

The way a user could theoretically manipulate the ER is by owning a large collection of newer, more energy-efficient hardware of one type, e.g. CPUs, and hiding it, as this would lower the corresponding number in the ER and thus increase the equivalent amount of work they are doing. I haven't thought of a good way to fix this yet. I was thinking of this proposal as a long-term solution. At the current stage of GridCoin this isn't necessarily a problem, so it's not a priority, but if GridCoin scales to 10x or 100x its current size, I think it will become much more important; hopefully by then new ideas (maybe replacing this one) will come forth.
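A small numeric illustration of this manipulation, using the CPU part of the hypothetical fleet from the post (CPUs A, B, and C). The scenario of hiding both CPU C hosts is an assumption made for illustration, and `weighted_mean` is a hypothetical helper.

```python
def weighted_mean(entries):
    """Weighted-average GFLOP/J from (count, GFLOP/J) pairs."""
    return sum(n * e for n, e in entries) / sum(n for n, _ in entries)


# CPU rows of the hypothetical fleet: (count, GFLOP/J) for CPUs A, B and C.
all_cpus = [(7, 3.0), (13, 2.0), (2, 4.0)]
visible_cpus = all_cpus[:2]  # suppose the owner hides both CPU C hosts

honest_er = weighted_mean(all_cpus)      # 55 / 22 = 2.5
skewed_er = weighted_mean(visible_cpus)  # 47 / 20 = 2.35

# Equivalent joules credited for the same 1000 GFLOP of CPU work.
print(f"{1000 / honest_er:.1f} J vs {1000 / skewed_er:.1f} J")
```

This prints `400.0 J vs 425.5 J`, about 6% more credit for the same CPU work. The shift favors all CPU work relative to GPU work, not only the hider's, and with thousands of hosts in the average, one user's hidden machines move it much less.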

In general, it should encourage using hardware in the most efficient way possible (e.g. not using the 1080 on FP64, or the 7970 on FP32). If you take a look at the table for the i5-6600K, 7970, and 1080, the cheapest task to run there is the 1080 on FP32.

I don't know. There might be a way to get that data by benchmarking if it's not publicly available. Or if it's so obscure/uncommonly used, maybe it wouldn't affect the ER much.

That's the biggest question. I've only thought about this a bit. Ideally it would be as simple as possible; maybe do a benchmark on each type of subtask on BOINC projects and get the information from already existing credit data. It's something I have to look into.

Under the current model, a researcher who is running a BOINC project awards BOINC credit in accordance with his/her perceived value in completing some work unit. Maybe that's tied to the number of flops needed to compute the work unit, but essentially it is up to the researcher to distribute those credits equitably based on the 'value' being provided to the researcher. On the Gridcoin end, RAC is used to try to distribute GRC in a way which matches the distribution of BOINC credit which has been done by said researcher (within one project). Right now, we treat all projects on the whitelist equally in terms of GRC/project/day.

There is a profit motive in GRC which pushes users towards under-crunched projects. No one is being 'punished' for crunching popular projects. A person makes a choice based on a mixture of motivations, monetary reward is only one of them. If you are crunching a project which makes you feel good about taking part in a particular field of research, then that feeling is part of your reward. If someone else is purely optimizing for GRC reward then that is their prerogative.

I don't understand why you want to down-rate people who are using more capable or more efficient hardware. If GRC can motivate people to use more efficient computation to provide more 'value' to a project's researchers, isn't that a good thing? It's nice to put some old or otherwise inefficient hardware to use towards scientific computing, but is it necessary to demand they get paid based on how much heat they produce rather than how much scientific 'value'?

This sounds to me like a lot of extra work to ask of the system (when you consider the importance of growth), which would require some further degree of centralization and possibly create new security holes or avenues for abuse (how hard would it be to spoof what hardware I'm using for something with a higher TDP?). This could be the basis for an interesting statistical analysis to understand how much energy is being used by different projects, which could provide value to the BOINC/GRC community, but I don't think it has any place in the rewards mechanism whatsoever.

If the real issue is a perceived difference in the value of different projects, and as a community we think that different projects should have a different amount of GRC distributed amongst their crunchers, there are simpler and more robust ways of addressing those issues.

Thanks for thinking about the rewards mechanism and its inherent questions of fairness and bringing out a new idea which tackles the frustrations and issues you see. The conversation is essential to trying to improve the system.

I think it is going to be important to develop a system that moves away from credits in the future, for 2 reasons:

I think that any way to get a direct FLOP -> GRC relationship is ideal.

I have to disagree. If you make less GRC by crunching a more popular project, I see that as the reward mechanism effectively punishing you. Why should you make less if you did the same amount of FLOP on a different project?

This is exactly the opposite of what I'm proposing. If you take a look at the 7970 vs. 1080 comparison that's a good example of how better, more efficient hardware gets more GRC.

That's a good question. I don't know how BOINC specifically collects the data on my hardware, but they do know all of the specs of the machines I run BOINC on.

That being said, the point of this model is that you are only rewarded for the FLOP you do, nothing else. The weighted average of all the hardware is just used to calculate the equivalence between CPUs and GPUs. Theoretically a person could try to manipulate this balance, but given the fact that this is being averaged over many thousands of users, trying that would likely be ineffective, and moreover, probably quite noticeable if it was effective. Maybe some sort of verification/alert would be needed, but good point.

I'm not proposing that we implement this right now at all. There might be a lot of problems with this proposal, and this might not even be the best way of approaching the problem. Besides that, there are other, more important things to focus on right now. But I think in the long term, investigating a direct FLOP -> Magnitude relationship is something that might be useful in GridCoin's growth, as it gives a clear, FLOP-measured value to a currency that's based on doing computations.

Wanted to comment on this too. As I understand the proposal, the network-averaged energy ratio FLOP/Joule for each (CPUs, FP32, FP64) is used only as a means to define the conversion from FLOP --> GRC within each class. The reason for defining the conversion factor this way is to allocate rewards more equitably between CPUs and GPUs. It remains true that FLOP is proportional to GRC rewarded within each of the three classes.

Hi @hownixt:

Just to be clear, I agree with you that a better/more efficient CPU should receive more GRC than a less efficient CPU, and a better/more efficient GPU should receive more GRC than a less efficient GPU. That's a basic principle that I had in mind when I was thinking about this. Unfortunately, that's currently not how it works. It's true that within a single project, a better CPU/GPU will receive more, but across projects that's not the case, and that's what I'm trying to address.

You've done an awesome job moving from the plain idea to the above study!

The BOINC credit system attempts to reward crunchers fairly. Is there any point in creating a secondary system? BOINC has direct access to hardware, and it's much easier to improve the system within BOINC than to build a new one from scratch. Improvements should be made in cooperation with the BOINC developers and be contained within the BOINC platform.

Location. Should such a system be incorporated into a blockchain? I'm not convinced about that. If yes - pros and cons? If not - where?

I'm sorry if my comment sounds so critical; I really appreciate your work.

No need to apologize, thanks for your comment!

I'm not insistent on this model being implemented one way or the other - or at all, in fact, if another, better alternative is proposed. As I mentioned briefly regarding WUProp, which is a BOINC project, we may already have the information necessary to do this. That being said, there is the question of whether we want to be able to bring in other distributed computing projects from outside BOINC, which would require a standardized measurement. For now, I'm just trying to get the conversation started.

The GPU will do about 15x more FLOP (30.5 GFLOP vs. 1.96 GFLOP) and get the same amount of credit for it as a CPU crunching a CPU-only task that required 1.96 GFLOP. If a CPU tried to run those same 15 GPU tasks, it would get the same credit, but it would take 15x more energy to do it, i.e. it would cost 15x as much, so why do it?

As to whether it's fair that the GPU gets the same credit as the CPU, the idea - and feel free to take shots at it - is that many projects simply can't be parallelized on GPUs - otherwise they definitely would be, as GPU computations are much faster. Therefore, if a task is CPU-only, that's because it needs a CPU. At that point, the question is, how can we compare such tasks? My suggestion is to base it on the Joule, as both types of hardware require energy to run. I'm open to other suggestions.

Ideally I would like to see all users be rewarded for the FLOP they contribute. The Joule was just an intermediary to compare CPUs and GPUs. As a side result, it also estimated the energy consumption of each individual user.

Just to be clear I'm not suggesting that people who use more energy should be rewarded more - somewhat the opposite: more efficient hardware would always yield more GRC than less efficient hardware under this model, which isn't the case right now, as it depends on which project you run.

"I have to disagree. If you make less GRC by crunching a more popular project, I see that as the reward mechanism effectively punishing you. Why should you make less if you did the same amount of FLOP on a different project?"

This is where a lot of misunderstanding comes from. No one is being punished; you are rewarded by your "relative" contribution. It's a competition, and the best are rewarded more. If you want more GRC, invest in more crunchers. Investing is a win-win: science wins and you win. And if you can't handle the heat, choose a different project, or stick with it because you believe in it.

I agree to an extent, if you want to see it as a competition I don't have a problem with that. My concern has more to do with the fact that you can make 2 or 3 times more by running a different project with the same hardware. I totally agree that if you want to add more crunchers to make more GRC that's a good thing - I think the current reward mechanism actually prevents that from happening.

That is the thing: GRC must be hard to earn in order to have value (i.e., difficulty). If it were easier, it would merely be a token of appreciation, because everyone would have GRC of their own; thus there would be no demand and a big supply.

I don't think it should be easier or harder, just that it should be the same for everyone.

I like where this proposal is going. I want to check my understanding. Tell me if this is an accurate summary:

/////

Currently, each BOINC project has its own (approximate) conversion from FLOP --> credits. GRC rewarded to a cruncher is proportional to the cruncher's credits divided by the total network credit on the project. However, there are at least two major shortcomings in this approach:

By establishing network wide, energy-based conversion factors taking FLOP --> GRC for each CPUs, FP32, and FP64, we solve both of these problems.

/////

Practical aspects of implementation are of course more complex, e.g. preventing unfair manipulation. BOINC has already grappled with this issue for a long time, see: https://boinc.berkeley.edu/trac/wiki/CreditNew.

Their solution is a huge mess. It seems to work OK, but a simpler implementation would be well worth the effort, I think.

Yes that's an accurate summary. One point I'd like to emphasize is regarding this:

A result of the model I described is that a cruncher would get the same amount of GRC with the same piece of hardware on any project, not just within the same project. (This could be changed by ranking projects, but that's a separate issue from this one.) One of the goals is to remove the financial incentive to crunch one project over another, and to make that choice depend more on how much people actually value each project. That doesn't remove the financial incentive completely (nor am I suggesting that), just the incentive to move from one project to another based solely on the amount of GRC you can earn.

Got it, thanks for clarifying. That certainly would be convenient to solve more than one issue at once, i.e. without having to introduce an additional variable for ranking projects. On the other hand, this would mean users could only speak with their own mining output, and not with their balance. But right, that starts heading into a different debate...!

Totally agreed, it's a complicated topic. That's why in this post I focused only on a direct FLOP -> magnitude relationship, which I think solves many different problems simultaneously, but not that one.