Counting FLOPSs in a FLOPS - part 1

FLOPS is a popular measure of computer hardware performance. BOINC projects award credits based on FLOPS. Users complain that the credits awarded by BOINC projects are very inconsistent between projects. One of the reasons is that the concept of FLOPS is not well defined; it's fuzzy.

What is FLOPS?

FLoating point Operations Per Second (FLOPS) is, by definition, the number of floating point operations a computer can perform in one second.

Peak FLOPS = Number of Cores × Average Frequency × Operations per Cycle
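As a rough illustration, the formula can be written as a few lines of Python. The GTX 1060 6GB figures used here (1280 CUDA cores, 1506 MHz base clock, 2 operations per cycle via fused multiply-add) are my assumptions about the card's published base specification, not values measured for this post.

```python
def peak_flops(cores: int, frequency_hz: float, ops_per_cycle: int) -> float:
    """Theoretical peak FLOPS = cores * frequency * operations per cycle."""
    return cores * frequency_hz * ops_per_cycle


# Assumed base specification of a GTX 1060 6GB (no boost, no overclocking):
# 1280 CUDA cores, 1506 MHz base clock, 2 FP32 operations per cycle (FMA).
gtx1060_peak = peak_flops(cores=1280, frequency_hz=1.506e9, ops_per_cycle=2)

print(f"Peak FP32: {gtx1060_peak / 1e9:.0f} GFLOPS")
```

With these assumed inputs the result is about 3855 GFLOPS of single-precision peak; a different clock (boost) or a different operations-per-cycle convention changes the number directly.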

So far so good.

Floating point refers to the type of number: real numbers are usually represented in programming languages as variables of type float (FP32, single precision, occupying 32 bits of memory) or type double (FP64, double precision, occupying 64 bits of memory).

Operations means arithmetic such as addition and multiplication; the problems that arise here are:

- different architectures have different instruction sets; for example, some newer processors can perform an addition and a multiplication within a single instruction, but it is usually counted as two floating point operations; a sine function can take several more instructions on one machine than on another;

- on some processors (GPUs) integer and floating point operations share some resources, so if integer calculations are also being performed, peak FLOPS is unachievable.
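To make the first point concrete, here is a small sketch that counts the work in a dot product of length n. The function name and the counting convention (one multiply and one add per element, accumulator starting at zero) are my own choices for illustration.

```python
def dot_product_counts(n: int) -> dict:
    """Operation counts for a length-n dot product accumulated into one sum."""
    return {
        # n multiplications + n additions (the accumulator starts at 0)
        "flops": 2 * n,
        # separate multiply and add instructions for every element
        "instructions_without_fma": 2 * n,
        # one fused multiply-add instruction per element
        "instructions_with_fma": n,
    }


counts = dot_product_counts(1000)
print(counts)
```

The number of floating point operations is the same in both cases, but a machine with fused multiply-add issues half as many instructions. This is why "operations per cycle" in the peak formula is usually quoted as 2 for such hardware.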

Theoretical peak FLOPS can be calculated using the equation above, or a more realistic measure can be obtained by running a benchmark code such as Whetstone or LINPACK. Because FLOPS-based performance measures have several shortcomings, new benchmarks such as HPCG have been proposed.

As you can see, comparing FLOPS across machines without knowing WHAT KIND OF FLOPS they are is like comparing apples and plums.

BOINC awards 200 credits (Cobblestones) for one day of CPU time on a reference computer that does 1 GigaFLOPS on the Whetstone benchmark. Therefore computing power can be calculated from RAC (Recent Average Credit) as GigaFLOPS = RAC/200.
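The conversion is simple enough to sketch in Python. The constant and function names are my own; the only thing taken from BOINC is the 200 credits per GigaFLOPS-day definition quoted above.

```python
# 200 credits (Cobblestones) per day correspond to 1 GigaFLOPS sustained.
CREDITS_PER_GFLOPS_DAY = 200.0


def rac_to_gflops(rac: float) -> float:
    """Convert Recent Average Credit (credits per day) to GigaFLOPS."""
    return rac / CREDITS_PER_GFLOPS_DAY


def gflops_to_rac(gflops: float) -> float:
    """Inverse conversion: RAC expected from a sustained GigaFLOPS rate."""
    return gflops * CREDITS_PER_GFLOPS_DAY


print(rac_to_gflops(10_000))  # a host with a RAC of 10 000
print(gflops_to_rac(1.0))     # the reference computer
```

Keep in mind that this gives "Whetstone-equivalent" GigaFLOPS as credited by a project, which is not necessarily the number of floating point operations the hardware actually executed.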

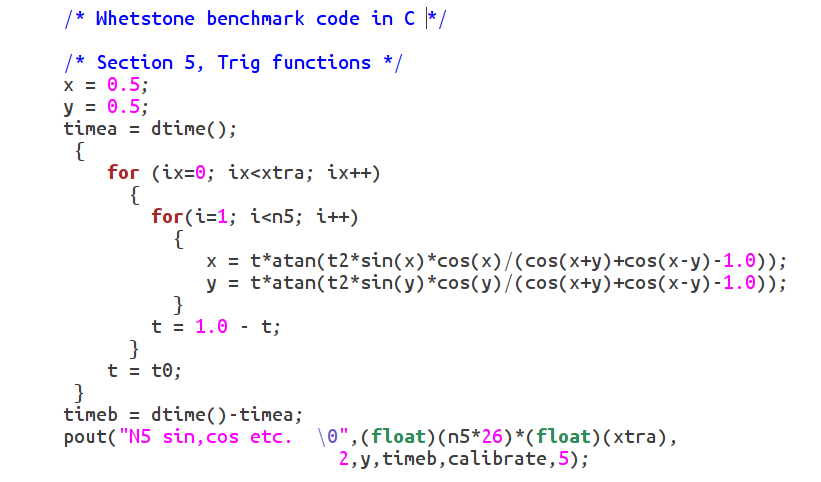

A snippet of Whetstone benchmark code:

BOINC formula RAC and GFLOPS

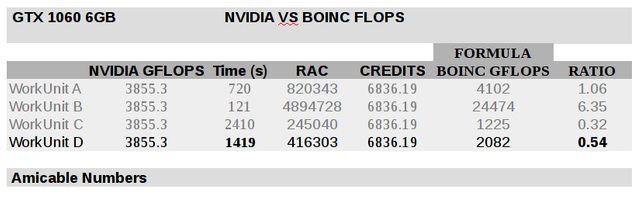

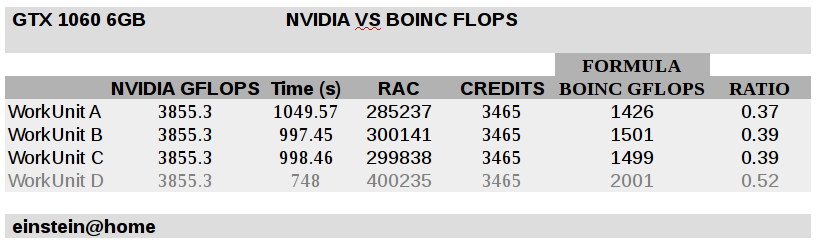

For the calculations and comparison I used the base GFLOPS of the NVIDIA GTX 1060 6GB (no boost, no overclocking).

Amicable Numbers

The time to complete a WU varies, although most workunits are quite consistent and take around 1500 seconds. For the GTX 1060 6GB the maximum RAC is ~400 000, so the GFLOPS achieved, calculated with the BOINC formula, is ~2000, which is ~50% of the nominal peak value.

einstein@home

The time to complete a WU has recently been more consistent than in the previous case; most units take around 1000 seconds. For the GTX 1060 6GB the maximum RAC is ~300 000, so the GFLOPS achieved, calculated with the BOINC formula, is ~1500, which is just under 40% of the nominal peak value.
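The two comparisons above can be reproduced with a short script. The nominal peak is an assumption on my part: it is the FP32 figure for a GTX 1060 6GB at its base clock (1280 cores × 1.506 GHz × 2 operations per cycle), and the RAC values are the approximate maxima quoted in the text.

```python
CREDITS_PER_GFLOPS_DAY = 200.0

# Assumed nominal FP32 peak of a GTX 1060 6GB at base clock, in GFLOPS.
NOMINAL_PEAK_GFLOPS = 1280 * 1.506 * 2

# Approximate maximum RAC per project, as quoted in the text.
max_rac = {
    "Amicable Numbers": 400_000,
    "einstein@home": 300_000,
}


def utilisation(rac: float, peak_gflops: float) -> float:
    """Fraction of nominal peak implied by a RAC via the BOINC formula."""
    return (rac / CREDITS_PER_GFLOPS_DAY) / peak_gflops


results = {name: utilisation(rac, NOMINAL_PEAK_GFLOPS) for name, rac in max_rac.items()}

for name, fraction in results.items():
    gflops = max_rac[name] / CREDITS_PER_GFLOPS_DAY
    print(f"{name}: {gflops:.0f} GFLOPS, {fraction:.0%} of nominal peak")
```

Under these assumptions the script gives roughly 52% for Amicable Numbers and 39% for einstein@home, in line with the rounded figures above.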

Great post, thank you for writing this!

I was considering addressing this in my last post but I decided not to as it was already quite long. Thank you for taking the time to explain it here.

Regarding the points you've made (in reference to the ER I proposed in my post):

If this post wasn't written as a response to mine, sorry for making the assumption, the timing and your comment makes it seem so.

The same CPU or GPU will perform differently on different projects - i.e. carry out the same number of FLOP in a different amount of time on different projects - due to the reasons you mentioned. In response to one of @donkeykong9000's questions, I pointed out that the ER model would encourage using hardware in the most efficient way possible. I was referring to using FP32-efficient GPUs on FP32 projects, and FP64-efficient GPUs on FP64 projects. However, the same applies to GPUs/CPUs with architecture better suited to some projects than others - in this sense, there would be an incentive to use your hardware on the projects on which it would perform the best (not the worst thing in the world, since that increases overall efficiency). As to whether these points would/should elicit a different ER, that's a good question, and I'm totally open to the idea (if that's what you're trying to get at here, I don't know).

The same point guk made in his comment.

What's part 2 going to be about?

I've started to research FLOPSes ;) as I want to understand relative network powers of gridcoin and curecoin.

You pulled me in, guys. I have an idea, stay tuned.

I'd say I'm looking forward to seeing it, but it's already up ;)

Unfortunately, many BOINC projects alter the credit calculation, meaning credits cannot be compared across projects.

If you can figure out a way of gaining the true project compute requirement that can be compared across projects it would make a very useful extension to the Whitelist/Greylist process that is currently up for vote.

The answer is: you can't. As the post says, there are many factors. Just an example: I have a Ryzen 1600 and an Nvidia GT 710. The Ryzen 1600 has around 90 GFLOPS, the GPU 366 GFLOPS. But if I mine Monero with them (the most extreme example), I get 500 hashes on the CPU and 40 on the GPU.

You pulled me in, guys. I have an idea, working on it.
