Why Voxels Are the Future of Video Games, VR, and Simulating Reality

I am not in possession of a crystal ball. But I do have an obsessively accumulated, encyclopedic knowledge of real-time rendering techniques, and the one that has fascinated me most all these years is the voxel engine. Voxels are commonly understood to be 3D cubes, but they can also be rendered as points, for example the point cloud models generated by 3D scanning.

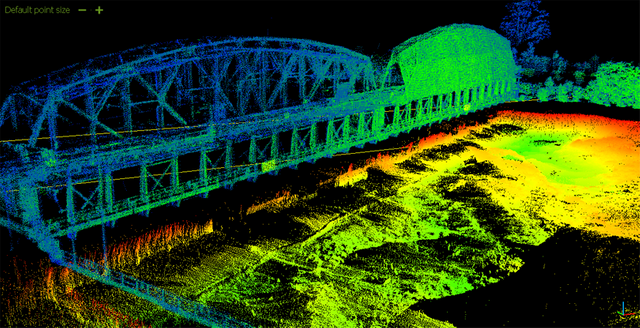

If you sweep a laser up and down or back and forth in successive rows, generating a point in your software every place the laser hits something, the result is a point cloud. If the points are densely packed enough, the resulting virtual object appears solid, no polygons required.
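The sweep is easy to simulate. Below is a minimal Python sketch of a laser scanner sitting at the origin and stepping its beam row by row across a hypothetical scene that contains a single sphere. Every beam that hits something contributes one point. The sphere's position and radius, the field of view and the row/column counts are made-up parameters. A real scanner also measures distance physically (time of flight or triangulation) rather than with an analytic ray test.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Distance to the nearest hit along a unit-length ray, or None on a miss."""
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    dx, dy, dz = direction
    b = ox * dx + oy * dy + oz * dz
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the beam passes the object without touching it
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

def laser_sweep(rows=40, cols=40, fov=0.5, center=(0.0, 0.0, 5.0), radius=1.0):
    """Sweep a simulated beam in successive rows; record a point wherever it hits."""
    origin = (0.0, 0.0, 0.0)
    cloud = []
    for row in range(rows):
        pitch = -fov / 2 + fov * row / (rows - 1)
        for col in range(cols):
            yaw = -fov / 2 + fov * col / (cols - 1)
            # Unit direction looking down +z, tilted by yaw (left/right) and pitch (up/down).
            d = (math.sin(yaw) * math.cos(pitch),
                 math.sin(pitch),
                 math.cos(yaw) * math.cos(pitch))
            t = ray_sphere_hit(origin, d, center, radius)
            if t is not None:
                cloud.append(tuple(origin[i] + t * d[i] for i in range(3)))
    return cloud

points = laser_sweep()
print(len(points), "points captured")
```

Raise `rows` and `cols` and the points pack more densely, until the sphere reads as a solid surface.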

GPUs available today are optimized for polygons, not voxels. But if you had hardware specially designed to permit absolutely, unfathomably massive numbers of voxels, you could create some truly photorealistic scenery. Unprecedented levels of tiny, intricate detail would become possible, as well as more realistic behaviors for light, fluids, gases and so on.

The simulation above is voxel-based (it is a very large GIF, so allow some time for it to load). It cannot be rendered in real time because of the sheer number and density of the particles involved. Still, the particles are numerous enough to form convincing solid objects, and the accurately simulated fluid dynamics make the water particles interact in ways that resemble the real behavior of water.

The ability to model reality more accurately in this manner should come as no surprise, given that reality is also voxel-based. The difference is that our voxels are exceedingly small, and we call them subatomic particles. As with a point cloud, were you to zoom in far enough you'd see lots of space between them. But zoom out enough and they take on the appearance of a solid object.

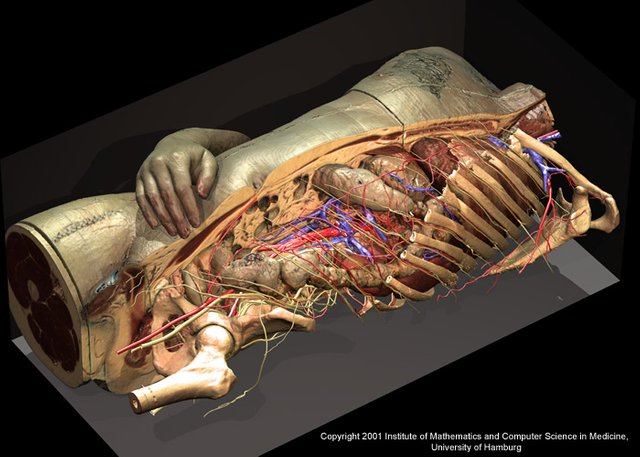

Like polygon objects, voxel objects can be "hollow" to save processing power. Unlike polygon objects, they can also be solid, made of voxels through and through. In a computer game, this would mean you could slice an apple in half from any direction and see an accurate cross section.
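Here is a minimal Python sketch of that difference, using a sphere as a stand-in for the apple. The same shape is voxelized twice: once as a solid, and once as a thin shell that keeps only cells within one unit of the surface. Both are then cut by an arbitrarily oriented plane through the center. Only the solid version has anything to show in the middle of the cut. The grid radius and the plane normal are arbitrary choices for illustration.

```python
import math

def sphere_voxels(radius, solid=True):
    """Voxelize a sphere on an integer grid; the hollow version keeps a thin shell."""
    voxels = set()
    for x in range(-radius, radius + 1):
        for y in range(-radius, radius + 1):
            for z in range(-radius, radius + 1):
                d = math.sqrt(x * x + y * y + z * z)
                if d <= radius and (solid or d > radius - 1):
                    voxels.add((x, y, z))
    return voxels

def cross_section(voxels, normal):
    """Voxels whose centers lie within half a voxel of the plane through the origin."""
    length = math.sqrt(sum(c * c for c in normal))
    nx, ny, nz = (c / length for c in normal)
    return {v for v in voxels if abs(v[0] * nx + v[1] * ny + v[2] * nz) <= 0.5}

solid_apple = sphere_voxels(8)
hollow_apple = sphere_voxels(8, solid=False)
cut = (1, 2, 3)  # any slicing direction works
print(len(cross_section(solid_apple, cut)), len(cross_section(hollow_apple, cut)))
```

The solid slice is a filled disc. The hollow slice is only a ring, which is all a surface-only model has to offer.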

If you were blown up by an enemy missile, your body would not come apart into pre-modeled polygonal chunks. It would separate in a manner unique to that event, spilling your insides as it would in reality. Real objects are, after all, just atoms put together in a particular shape, and everything that happens is just an interaction between those particles, governed by physical law.

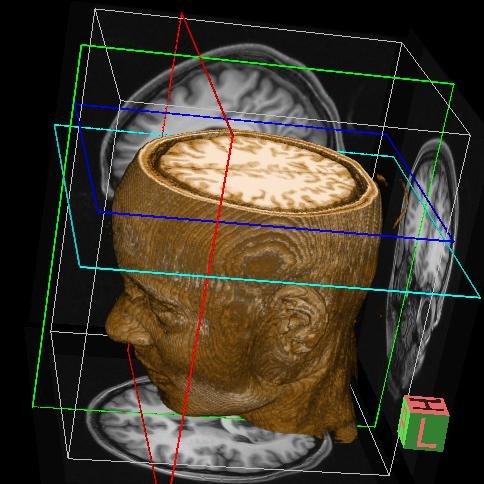

So great is the potential to replicate at least limited chunks of reality in software by this method, that those who put their hope in brain uploading specifically intend to achieve it by scanning the brain down to subatomic particle resolution, and generating an identical point cloud from that data where each point corresponds to (and is assigned the known behaviors of) each of those subatomic particles. The expectation is that this virtual brain will then resume cognition from where it left off.

Minecraft is a good example of a popular voxel-based game that leverages the gameplay potential of voxel terrain and interactions. It "cheats" in the sense that its voxels are just polygonal cubes. Even so, the math involved in procedurally generating its terrain will be familiar to anybody who has ever tinkered with a voxel-based terrain engine from any other game.
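For readers who haven't tinkered with one, the core idea fits in a few lines. Sample smooth pseudo-random noise at each (x, z) position, treat the result as ground height, and fill the column of blocks below it. The sketch below is a generic value-noise heightmap in Python. It is not Minecraft's actual generator, which is considerably more elaborate. The hash constants, octave settings and block names are arbitrary assumptions.

```python
import math

def lattice_value(ix, iz, seed):
    """Deterministic pseudo-random value in [0, 1) for an integer lattice point."""
    h = (ix * 374761393 + iz * 668265263 + seed * 982451653) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return (h ^ (h >> 16)) / 2**32

def value_noise(x, z, seed):
    """2D value noise: smoothly blend the four lattice values around (x, z)."""
    ix, iz = math.floor(x), math.floor(z)
    fx, fz = x - ix, z - iz
    sx, sz = fx * fx * (3 - 2 * fx), fz * fz * (3 - 2 * fz)  # smoothstep weights
    a, b = lattice_value(ix, iz, seed), lattice_value(ix + 1, iz, seed)
    c, d = lattice_value(ix, iz + 1, seed), lattice_value(ix + 1, iz + 1, seed)
    near = a + (b - a) * sx
    far = c + (d - c) * sx
    return near + (far - near) * sz

def terrain_height(x, z, seed=42, octaves=4, base=16, amplitude=24):
    """Fractal noise: add octaves of finer, weaker noise, then map to a block height."""
    total, weight, freq, norm = 0.0, 1.0, 1 / 32, 0.0
    for _ in range(octaves):
        total += weight * value_noise(x * freq, z * freq, seed)
        norm += weight
        weight *= 0.5
        freq *= 2
    return base + int(amplitude * total / norm)

def column(x, z, seed=42, world_height=64):
    """Hypothetical block names for one vertical column, bottom to top."""
    h = terrain_height(x, z, seed)
    return ["stone" if y < h - 3 else "dirt" if y < h else "grass" if y == h else "air"
            for y in range(world_height)]

print([terrain_height(x, 0) for x in range(0, 64, 8)])
```

Because every height is recomputed from the seed, the generator never needs to store the world it produces.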

Outcast (which came out in 1999, if you can believe it) is another notable example of a voxel-based game, this time with no polygonal cheating. It demonstrated the potential to produce far more detailed terrain than was possible in the polygonal 3D engines of the day.

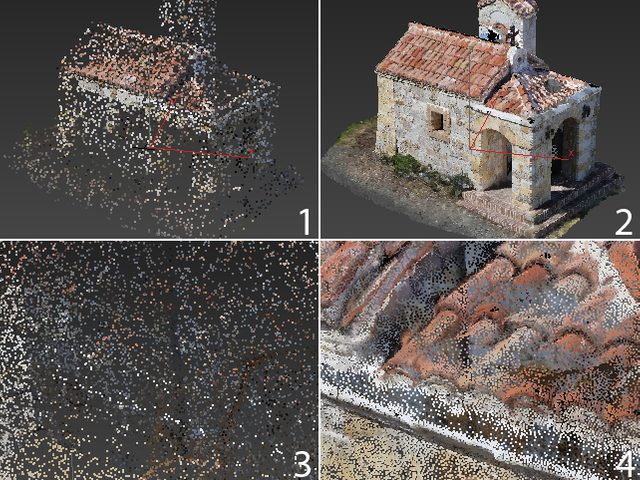

So clearly, some amazing things are possible with voxels. Because our own reality is composed of particles and their interactions, voxels are the natural path to take for efforts at simulating reality. Euclideon recently demonstrated a modern voxel engine with environments generated from 3D scans of real-world locations.

The results are so stunning that it's difficult to believe they aren't photographs. Understandably, many called bullshit from day one. Euclideon's central claim is to have discovered a way to greatly optimize voxel rendering, and it has since shown the engine running in real time even on a relatively modest laptop.

As soon as they demonstrated the engine running in real time, the goalposts were shifted, and their critics said "Alright it can do static environments, but not moving/animated objects". They then demonstrated animated and moving objects, so the goalposts shifted again. "None of that is skeletal. It's all frame by frame, precalculated."

So it went. Much as with the ongoing skepticism of the EmDrive, every time it passes a test, the skepticism only grows more furious and intense. So afraid are we to be fooled that we dare not even hope for such a fantastical breakthrough.

This is all on hardware not remotely optimized for voxels. They've achieved it purely through a selective rendering method that cuts out most of the workload. By some dark juju, it determines what is or isn't visible to you and culls accordingly, using only a small fraction of the processing power that this normally requires.

Just imagine what will become possible with next-generation video cards specially designed to push points, not polys. Imagine when those points become small enough to be imperceptible, even on 4K, 8K and 16K resolution VR headsets. The result will be truly photorealistic virtual environments, captured from real-world objects and locations.

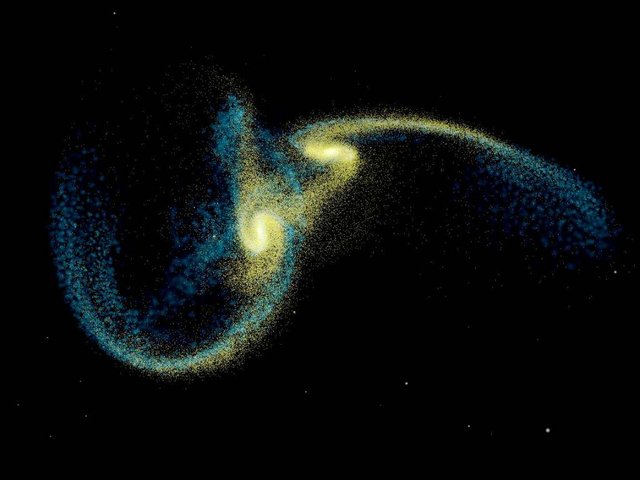

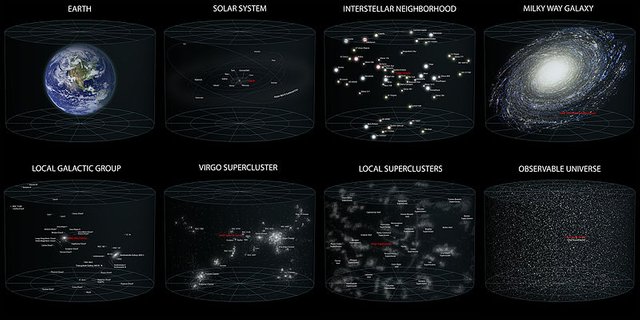

Of course, when you add real-time lighting, fluid dynamics and so forth, the computing workload skyrockets. But if there is anything in this world as certain as death and taxes, it's that computers will become more powerful. Conceive of computers a century from now that can render the entire Earth in points as small as actual subatomic particles. Or computers two centuries from now that can render the entire solar system, or galaxy.

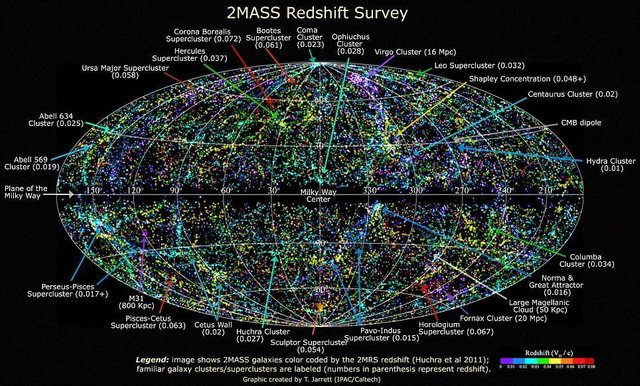

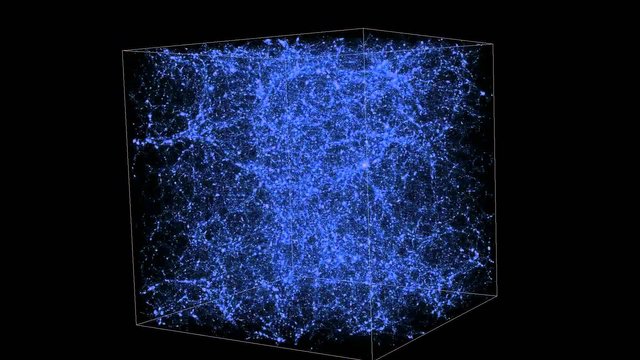

Already, point cloud sims are used to model the collision of galaxies, or the expansion of the universe. Imagine if those simulations were really complete. If you could zoom in to any individual planet and it would be as detailed, down to the subatomic level, as actual planets are. At that point, what would distinguish it from the reality in which we reside?

This should make some sense of why Elon Musk, Stephen Hawking and others have recently been vocal about the high probability that we already reside in a simulation. Our own technology is approaching the capability of rendering large chunks of reality to the same fidelity as the one we reside in.

After all, if we can one day simulate an entire universe, we will probably not be the first to do so, unless humanity is the first intelligent life ever to evolve in the universe. We will probably not be the last either, since simulating whole universes has obvious scientific merit when it comes to learning about our own.

If those simulations are indeed perfectly accurate, then life will arise within the sim universes for the same reasons it did in this one. Those simulated species which become intelligent will then eventually develop the technology required to run their own whole-universe simulations, and so on.

This would result in each actual universe containing many simulated universes at any given time. Each of those would contain many further sub-simulations, each of which would contain still more, and so on, in a fractally arranged process tree. Of course it can't extend forever, because the processing power of the root sim is limited. Even so, the simulated universes necessarily greatly outnumber the actual universes at the "top level".

Probably then, the intelligent inhabitants of all those universes (at least the ones who've not yet reasoned all of this out) assume they exist in a real universe, just as most human beings do. What are the odds that we actually do live in one of the comparatively few real universes, rather than the vastly more numerous simulated ones? Vanishingly, remotely small.
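A toy model makes the counting concrete. Assume, purely for illustration, that every universe runs the same number of child simulations and that nesting stops at a fixed depth once the root runs out of computing power. Also assume each universe in the tree is equally likely to be the one we find ourselves in. The branching factor and depths below are made-up numbers, not estimates of anything.

```python
from fractions import Fraction

def count_universes(branching, depth):
    """(real, simulated) counts when every universe, down to `depth` levels
    of nesting, runs `branching` simulations of its own. Only the root is real."""
    simulated = sum(branching ** level for level in range(1, depth + 1))
    return 1, simulated

def chance_of_being_real(branching, depth):
    """Odds that a randomly picked universe in the tree is the real root."""
    real, simulated = count_universes(branching, depth)
    return Fraction(real, real + simulated)

for depth in range(1, 5):
    print(depth, chance_of_being_real(10, depth))  # 1/11, 1/111, 1/1111, 1/11111
```

Even with a modest branching factor, each extra level of nesting multiplies the odds against being the root by roughly the branching factor.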

So the next time you look up at the countless points of light in the night sky, think of the unfathomable shitload of particles each of those stars is made out of. When you look at a particularly lovely tree, think "nice graphics". And the next time someone asks you if you think this is a game, nod.

Just bought a small amount of VOX, let's see where this can go :D

Nice article... I've written a few articles on voxels and I've written a few voxel systems.

I wanted to expand upon what you said just a bit.

A Pixel is a single dot on your screen that can have color values. That is it. That is what our images are built out of.

A Voxel is a Volumetric Pixel. It is what we get when we conceptually break a world or an environment into a grid. Each grid cell is like a pixel. The key is that since this is a virtual 3D pixel, we can put WHATEVER we want into that cell. We can define values that are more than just colors.
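Here is a minimal Python sketch of that idea. A dense grid is stored as one flat list, the way an image stores its pixel buffer, but each cell holds a small record instead of a color. The `Voxel` fields (`material`, `density`, `temperature`) are hypothetical examples of "whatever we want", not a standard layout.

```python
from dataclasses import dataclass

@dataclass
class Voxel:
    """One grid cell. Unlike a pixel it can carry any data, not just a color.
    These fields are hypothetical examples."""
    material: str = "air"
    density: float = 0.0       # e.g. input for a surface-extraction algorithm
    temperature: float = 20.0  # e.g. input for a heat or fluid simulation

class VoxelGrid:
    """A dense size x size x size grid stored as one flat list, like a pixel buffer."""

    def __init__(self, size):
        self.size = size
        self.cells = [Voxel() for _ in range(size ** 3)]

    def _index(self, x, y, z):
        if not all(0 <= c < self.size for c in (x, y, z)):
            raise IndexError(f"voxel {(x, y, z)} is outside the grid")
        return x + self.size * (y + self.size * z)

    def get(self, x, y, z):
        return self.cells[self._index(x, y, z)]

    def set(self, x, y, z, voxel):
        self.cells[self._index(x, y, z)] = voxel

grid = VoxelGrid(16)
grid.set(3, 0, 7, Voxel(material="water", density=1.0, temperature=4.0))
print(grid.get(3, 0, 7))
```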

One of the simplest representations of this is a cube. It is the easiest to code. This is NOT what all voxels are. In fact, it isn't even the most common form, but it has become more popular since Minecraft took off.

The "cubes" in the Marching Cubes algorithm do NOT refer to cube shapes. They are the volumetric grid cells, and each cell can hold many different surface shapes, depending on which of its corners lie inside or outside the volume.
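The per-cell step can be sketched in Python as follows. The sketch assumes the common convention that a corner counts as inside when its sampled value is below the iso-level. The eight corner flags form an 8-bit case number, one of 2^8 = 256 configurations. A full implementation uses that number to look up which triangles to emit from a precomputed table, which is not reproduced here. The corner ordering and the sphere-shaped `sphere_field` are illustrative assumptions.

```python
def cube_index(corner_values, iso=0.0):
    """8-bit case number for one grid cell: bit i is set when corner i is inside."""
    index = 0
    for i, value in enumerate(corner_values):
        if value < iso:
            index |= 1 << i
    return index

def edge_vertex(p1, p2, v1, v2, iso=0.0):
    """Place a surface vertex on the edge p1-p2 where the sampled field crosses iso."""
    t = (iso - v1) / (v2 - v1)
    return tuple(a + t * (b - a) for a, b in zip(p1, p2))

def sphere_field(x, y, z, radius=0.8):
    """Hypothetical density field: negative inside a sphere centered at the origin."""
    return (x * x + y * y + z * z) ** 0.5 - radius

corners = [(x, y, z) for z in (0, 1) for y in (0, 1) for x in (0, 1)]
values = [sphere_field(*c) for c in corners]
print(cube_index(values))  # prints 1: only corner 0, at the origin, is inside
print(edge_vertex(corners[0], corners[1], values[0], values[1]))
```

Case 0 (all outside) and case 255 (all inside) produce no surface at all. Every other case produces one or more triangles cutting through the cell, and in none of them is the shape a cube.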

You can take this further. If you have a tile-based game where the tiles are all the same size, that could almost be viewed as a form of voxel. You have volumetric pixels whose possible values are the different tile variations.

Getting good performance from voxels (even cubes) is tricky for procedurally created infinite environments. They are easy to use with static environments created on the fly.

There are some benefits to them, and yes, I agree they are likely the future. VoxelFarm.com is one of the most advanced voxel engines out there, and some amazing stuff has been done with it (it is based on Dual Contouring).

The benefits are procedural generation and HUGE worlds represented with very little memory. With procedural generation you do not need to save all the cubes either; you simply need to save which cubes were changed.
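Here is a minimal Python sketch of "save only what changed". Unedited blocks are always recomputed from a deterministic generator, and a dictionary of edits is the only state that would ever need saving. The flat `flat_world` generator and the block names are hypothetical placeholders for a real terrain function.

```python
def flat_world(x, y, z):
    """Hypothetical deterministic generator: solid stone below y = 0, air above."""
    return "stone" if y < 0 else "air"

class EditableWorld:
    """Blocks come from a generator on demand; only edits are kept (and saved)."""

    def __init__(self, generator):
        self.generator = generator
        self.edits = {}  # (x, y, z) -> block: the only state that needs saving

    def get(self, x, y, z):
        if (x, y, z) in self.edits:
            return self.edits[(x, y, z)]
        return self.generator(x, y, z)

    def set(self, x, y, z, block):
        if block == self.generator(x, y, z):
            self.edits.pop((x, y, z), None)  # back to the generated state: nothing to store
        else:
            self.edits[(x, y, z)] = block

world = EditableWorld(flat_world)
world.set(5, -1, 5, "air")  # dig one hole
print(world.get(5, -1, 5), world.get(10**9, -1, 0), len(world.edits))
```

A world a billion blocks wide costs nothing to store until someone changes it, and undoing an edit removes it from the save data entirely.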

It is great for creation, destruction, and simulating near infinite worlds. (not truly infinite due to mathematical precision limitations... but if done right this is unnoticeable to the players).
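Assuming the precision limit meant here is floating-point precision, it is easy to measure. Many engines store positions as 32-bit floats (Unity's `Vector3` does, for example), and the gap between neighboring representable values grows the farther you get from the origin. This Python sketch uses the standard `struct` module to emulate 32-bit floats. One common way to keep the problem unnoticeable is a "floating origin" that periodically re-centers the world on the player; that technique is not shown here.

```python
import struct

def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def float32_step(x):
    """Gap between a positive 32-bit float and the next representable one above it."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_float32(x)

for coord in (1.0, 1_000.0, 1_000_000.0, 16_777_216.0):
    print(coord, float32_step(coord))

# A million units from the origin, a 0.01-unit nudge simply disappears.
print(to_float32(1_000_000.0 + 0.01) == 1_000_000.0)  # True
```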

Thanks for posting your blog article. This is definitely a strong interest of mine. I am actually working on some Unity-based voxel systems, which I may try to sell. We shall see. I am building basic tools first, and then will likely build a fancier, more optimized version.

Thanks for adding your luxurious thoughts to the topic. I've followed your Unity articles closely.

And I agree the procedural nature makes it totally plausible that we could be running inside of a simulation. :)

I'm afraid we probably still have decades of polygon rendering ahead of us. Voxels require such a huge amount of memory that it's difficult to reach an acceptable resolution. Minecraft, with its cheated voxels, illustrates how at the time of its release the technology had a resolution similar to Atari 2600 blocks.

Even the demos from Euclideon result in some dodgy looking objects and textures in the near field. The resolution isn't there yet.

As you're surely aware, square bitmaps and textures for 3D objects need memory proportional to the resolution of one side squared.

Voxel objects need memory proportional to the resolution of one side cubed.
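Here is the arithmetic as a short Python sketch. It assumes 4 bytes per texel or voxel (for example RGBA8) and dense, uncompressed storage. Real voxel engines use sparse structures such as octrees precisely to avoid paying this full cost, and this sketch ignores them.

```python
def texture_bytes(side, bytes_per_texel=4):
    """Dense square texture: memory grows with side squared."""
    return side ** 2 * bytes_per_texel

def voxel_bytes(side, bytes_per_voxel=4):
    """Dense voxel volume: memory grows with side cubed."""
    return side ** 3 * bytes_per_voxel

for side in (256, 512, 1024, 2048):
    print(f"{side:>5}: texture {texture_bytes(side) / 2**20:8.1f} MiB, "
          f"voxels {voxel_bytes(side) / 2**30:8.2f} GiB")
```

At a side of 1024, the texture needs 4 MiB and the dense volume needs 4 GiB. Each doubling of the side multiplies the texture by 4 and the volume by 8.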

To sum up, it should take decades for voxels to overcome polygons because polygon graphics are a moving target. If the state of the art for polygons somehow hit a snag and was frozen in time, voxels might quickly catch up within a few computer generations, perhaps five or six years. But that fox called polygon graphics is going to keep running faster than voxels can keep up with. For every doubling of voxel resolution, polygon textures can quadruple. It won't be until resolution increases for polygonal graphics start hitting diminishing returns that we see voxels gain ground as an industry standard.

In the meantime, voxels should dominate augmented reality applications, since devices with sensors naturally generate point clouds. That's where we'll see the first strides in optimizing voxel graphics at the hardware level.

My expectation is that voxels won't be the go-to for immersive full-screen photorealism for quite some time. They'll be the choice for mixed reality and for games with a retro bent or an artistic style that can be accommodated by voxels of modest resolution.

Some clever insights. I will say, however, that while polygons will always have a performance edge over voxels, the same was true of 2.5D engines like Doom and Duke 3D over early polygon engines like Quake.

We moved on to polygons not because they became more efficient than that approach, but because we could more accurately model realistic environments with them. Voxels have that same advantage over polys.

So if I scan my brain and switch on two instances of my copy, which one will be me?

If we're talking about perfect copies, I would say they're all you. They would feel a sense of continuity with your previously uncopied self. To them, all your life experiences up to the copy would be as much a part of their existence as they are of yours. For all intents and purposes they would be you, independent of the you in your own skull. And through all of that, you could continue your life the same as you ever were.

Go open Notepad.exe, then open it again. There are now two instances of Notepad running. Which is the real Notepad?

Let's say I get teleported somewhere. I get into the machine, get scanned, destroyed and rebuilt somewhere else. From my perspective, I have a continuous experience of going into the beamer and then suddenly being somewhere else. Now, instead of being beamed somewhere, I am beamed to two locations in parallel. What is my continuous experience then? I go into the machine, and then... where do I reappear? Of course you can say in both places. But in my subjective experience I can only be in one place...

Bravo! Excellent post, congratulations. Valuable information; thank you for sharing this brilliant post.

Shit, I don't need this existentialism right now. I'm already anxious about the goddamn election, and now I have to figure out if I'm in the goddamn Matrix.

... I need a drink.

I loved this post! I am a game designer who uses Blender and had no idea about voxels. Thanks for sharing, @alexbeyman! P.S. I tried to resteem this, but I guess I can't because it's too old? That's unfortunate.