MattockFS; Computer-Forensics File-System : Part Five

This post is the 5th of an eight-part series regarding the MattockFS Computer-Forensics File-System. This series of post is based on the MattockFS workshop that I gave at the Digital Forensics Research Workshop in Überlingen Germany earlier this year.

If you didn't read the earlier installments of this series yet, you might want to visit these first:

- Asynchronous processing and the toolchain approach

- Integrity, Privilege-separation and capabilities

- CarvFS & MinorFS

- MattockFS core design

In this installment, we are going to be zooming in on the way that MattockFS implements its file-system as an API. This is meant purely as a way for you to get a good grasp of the concepts and of the way how the capability based API is supposed to interact with the operating system mandatory access controls. Note that the API described in this installment is meant to be a common low-level API that different language bindings could be built upon. The only language binding currently available is that for Python. Given that MattockFS is currently a one man project, you as a reader are invited to write language bindings for your favorite programing language.

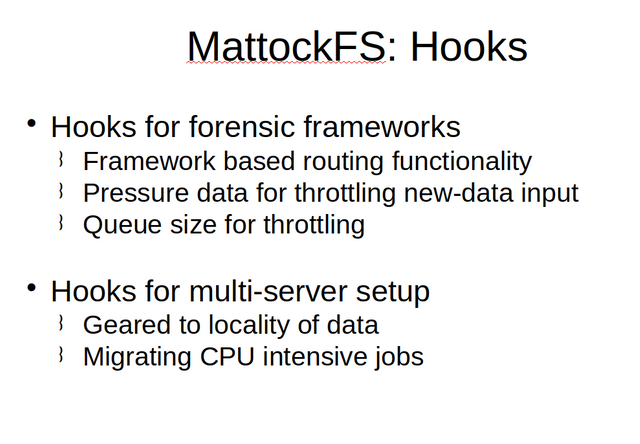

MattockFS is meant to interact with two distinct architectural components through its file-system as an API. Primarily it aims to interact with the local instantiations of a (future) computer forensic module framework. MattockFS provides basic hooks for such a framework to hook in distributed tool-chain and meta-data based routing functionality. Further MattockFS provides essential information that the framework requires in order to throttle its new-data output when not doing so might be decremental to the page-cache or message-buss efficient processing of the currently active data.

Next to the hooks that MattockFS provides for the local part of the forensic framework setup, it also provides important hooks that should be useful for distributed processing. Again spurious reads remain a major concern, also, no especially in a distributed setting, so the hooks provided are primarily geared towards maximizing the locality of data properties. The base concept is that primarily only CPU intensive jobs should warant migrating a tool-chain to another network node.

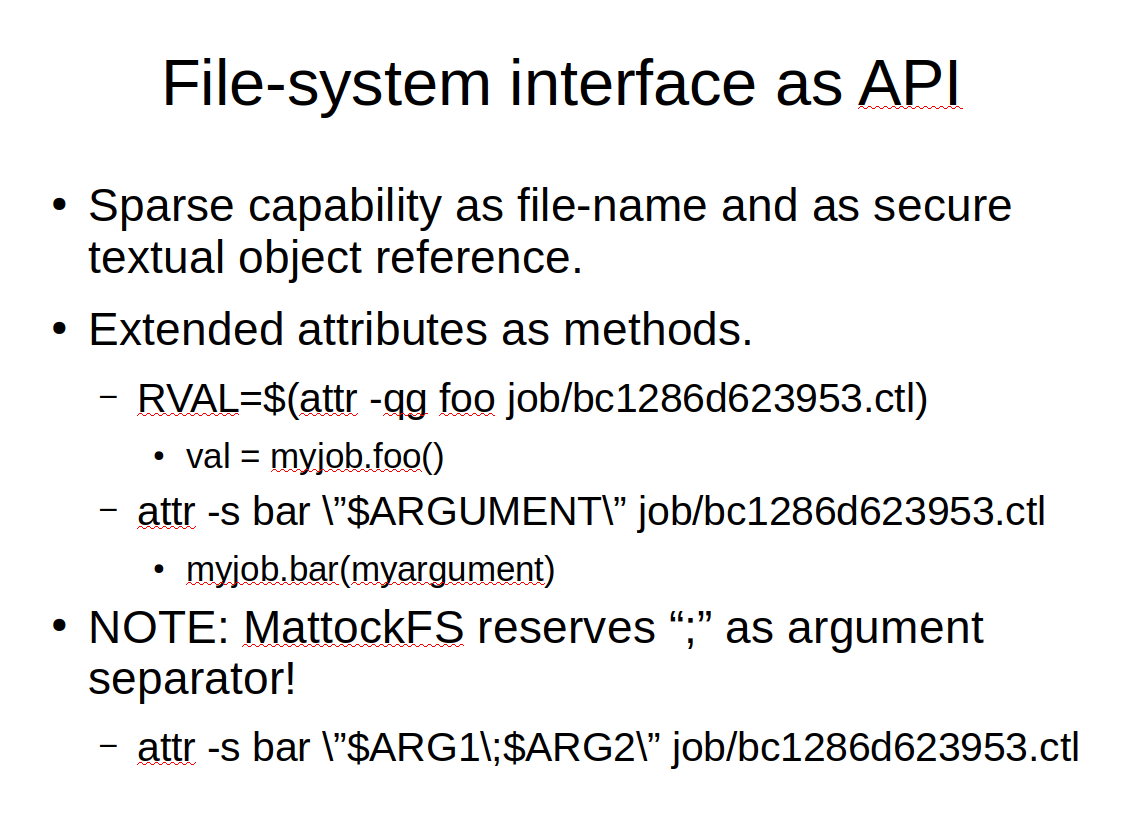

So let us look at the file-system as an API approach that MattockFS uses. The most important thing to wrap your head arround with respect to this approach is the fact that the API is based on the base concepts from object oriented programming. While the API works with weird file-names and extended attributes, on a more abstract level you should think of these from an object oriented programming context. Consider that the unguessable file-names used by MattockFS are a sparse capability based equivalent of an object reference in an object oriented programming language. Now if we use the file-system interface for setting and getting extended attributes, and consider these operations can do something different from simply getting or setting an attribute but can be used to invoke concrete functionality in the referenced objects in the same way that method invocation does in an object oriented programming language, then we can mentally map all such getter and setter operations to method invocations. There are some limitations here given that all operations are either set or get based so that a method taking arguments can not return a value, but overall most of the API can be modeled respecting these limitations.

It is important to note that setting an attribute will take a single string as argument where as a method invocation may take many. Currently, MattockFS gets around this limitation by using the semicolon as attribute separator. In retrospect, the usage of a serialization format might have been more flexible, but for now, the approach seems appropriate given the expected field values.

When you open a MattockFS mount point in your file browser, you will see the above directory structure. I'll discuss all parts of these below.

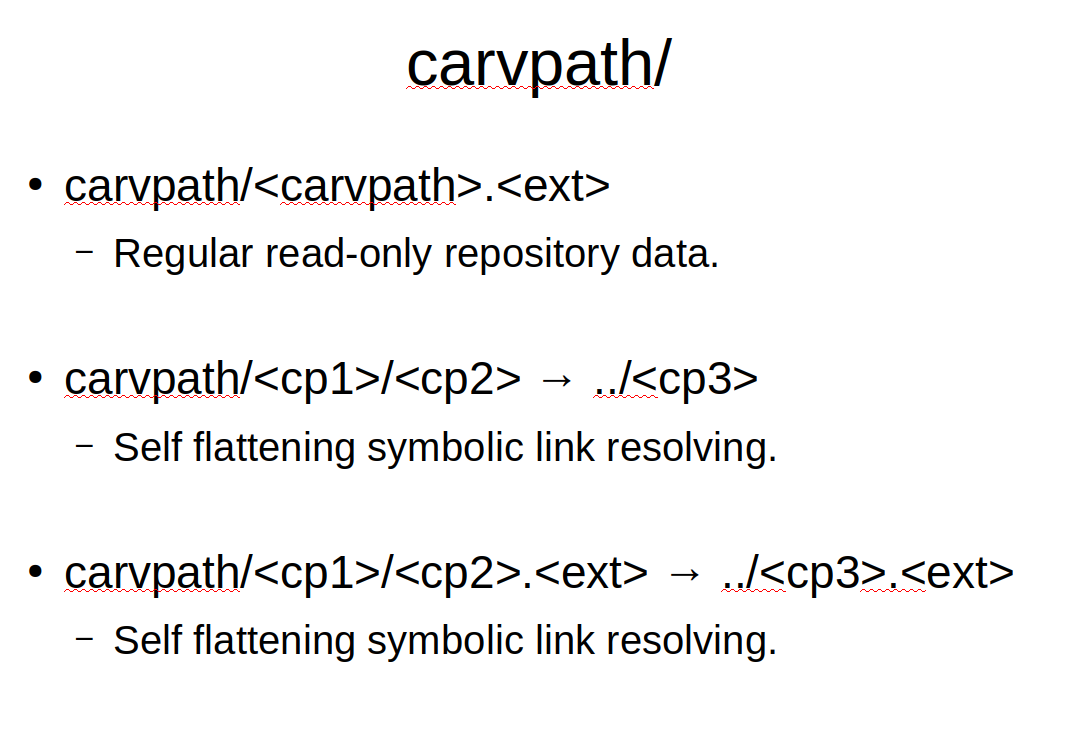

If you have ever used CarvFS in the past, the /carvpath directory should feel really familiar already. If we look at the /carvpath directory from it's data access perspective, it is mostly a fully compatible CarvFS clone with as one new feature the fact that the file extension can be chosen at random. Some naive tools give meaning to file extensions, and to accommodate these tools, a forensic module might choose to address a carvpath based designation with an alternative file extension. For the rest, the MattockFS carvpath directory provides the same designating and self flattening read only access to chunks of the the underlying archive that CarvFS did.

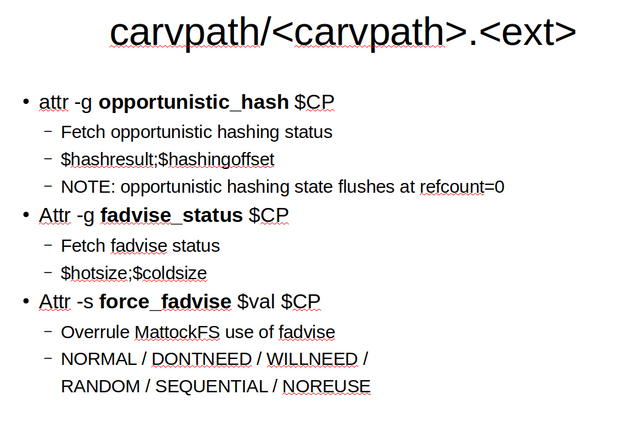

Appart from the basic data access functionality that it inherits from CarvFS, MattockFS provides three methods for carvpath entities. Please note that these methods are only useful for carvpath entities that are currently part of, or partially part of an active toolchain. We will get to the toolchain part later on, but let's look at the three methods that are provided for active carvpath entities.

The first method is meant for querying opportunistic hashing status. The return value consists of two parts. If hashing is completely done for the entity, the BLAKE2 hash of the entity will be returned. If not, then the current index of the hashing operation is made available so that a client may read only the tail of the entity in order to complete the hashing operation.

A second method allows us to query the fadvise status of a carvpath entity. If for example we are processing a disk image and want to know how much of it is still marked as being hot data by the reference counting stack, this method allows us to query that.

The third and final method that can be invoked on a carvpath allows us to overrule the reference counting stack for a carvpath entity. We can overrule the current fadvise status for underlying chunks of archive data by invoking this method.

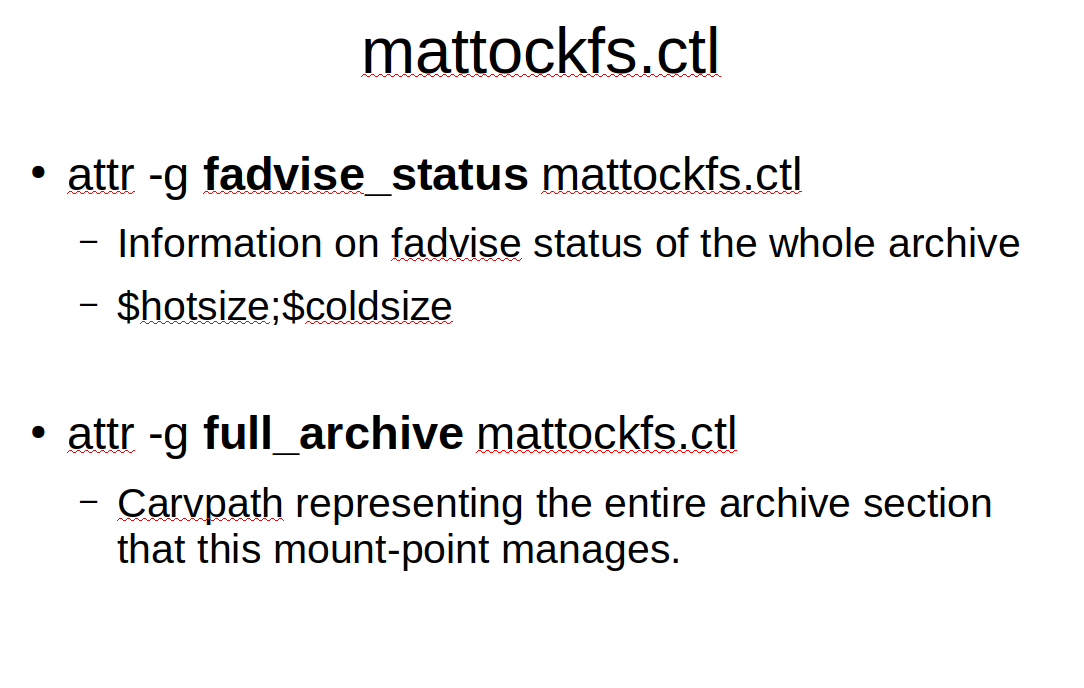

The pseudo file /mattockfs.ctl gives us some archive level info. Just as with individual carvpath entities under /carvpath, we can query the fadvise status of the archive as a whole. If needed we can also ask for a carvpath representation of the archive as a whole.

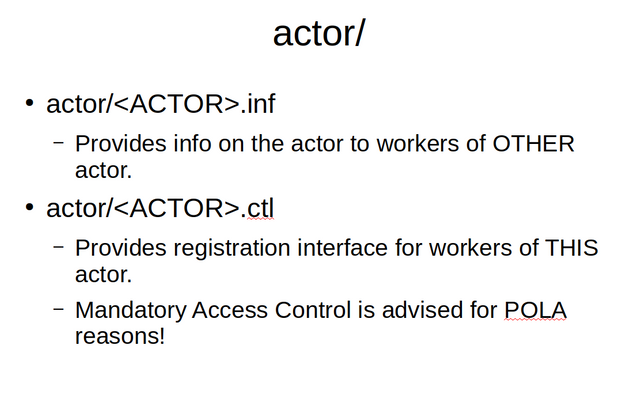

MattockFS has two abstraction levels for looking at computer forensic modules. Again you could look at it from an object oriented perspective. The code for the module, the script or program implementing the module functionality may be running in one or more instances on the system. In MattockFS we refer to a cluster of one or more module processes as an actor. To an individual process in that cluster we refer to as a worker. This distinction can be confusing at first, but as we walk through the API it should all become clear. We start with the actor API. There are two distinct handles for addressing the actor abstraction. If we want to forward a job to another module (tool) in the tool-chain, we won't particularly care what worker will be processing the job next.

The other actor level handle we work with is meant for use by the involved module itself. This handle allows a process to register itself as a worker for a particular actor.

It is important to note that access to this registration handle by itself is unrestricted and from a system integrity point of view needs to be restricted to the actual actor program or script. To accomplish this, access to the ctl handles should be AppArmor or SELinux controlled.

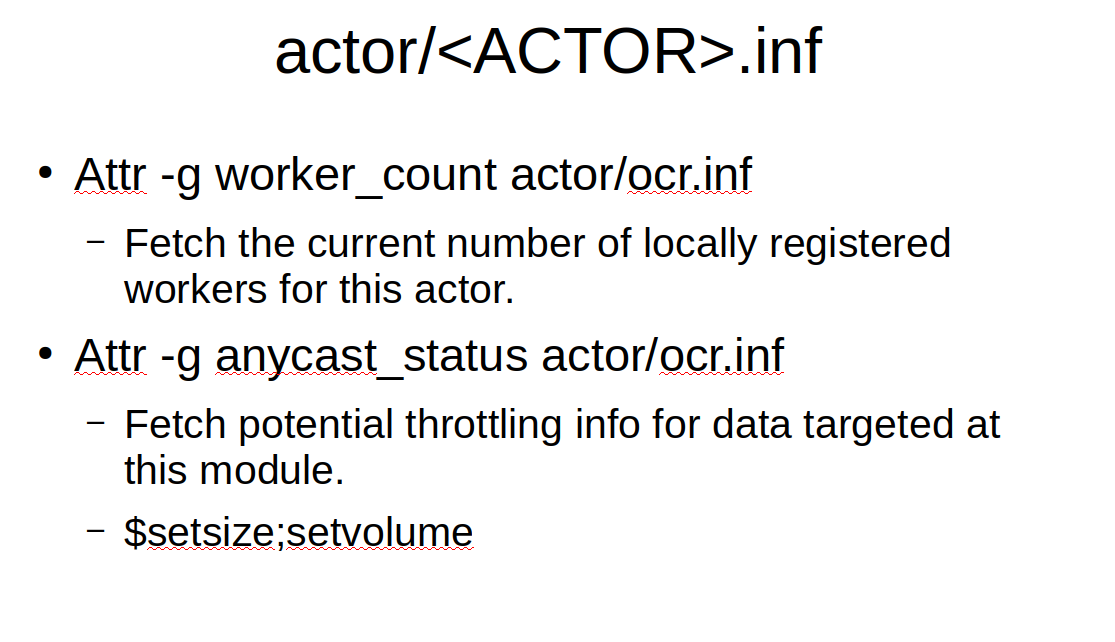

Let's start by first looking at the inf files. The inf files supply us with a number of methods. We can query how many worker are currently registered for the worker, and we can query the integrated anycast queue for the actor to see how many pending jobs there currently are for this actor.

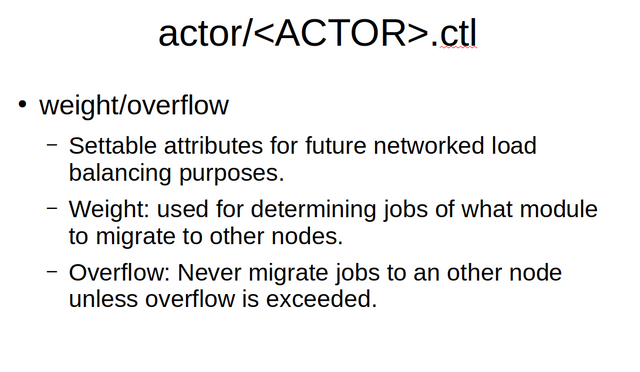

There are also two settable variables for the actor that we control through the ctl file. Weight and overflow are actor attributes that are used for distributed processing purposes and determine the potential migration of jobs to other nodes.

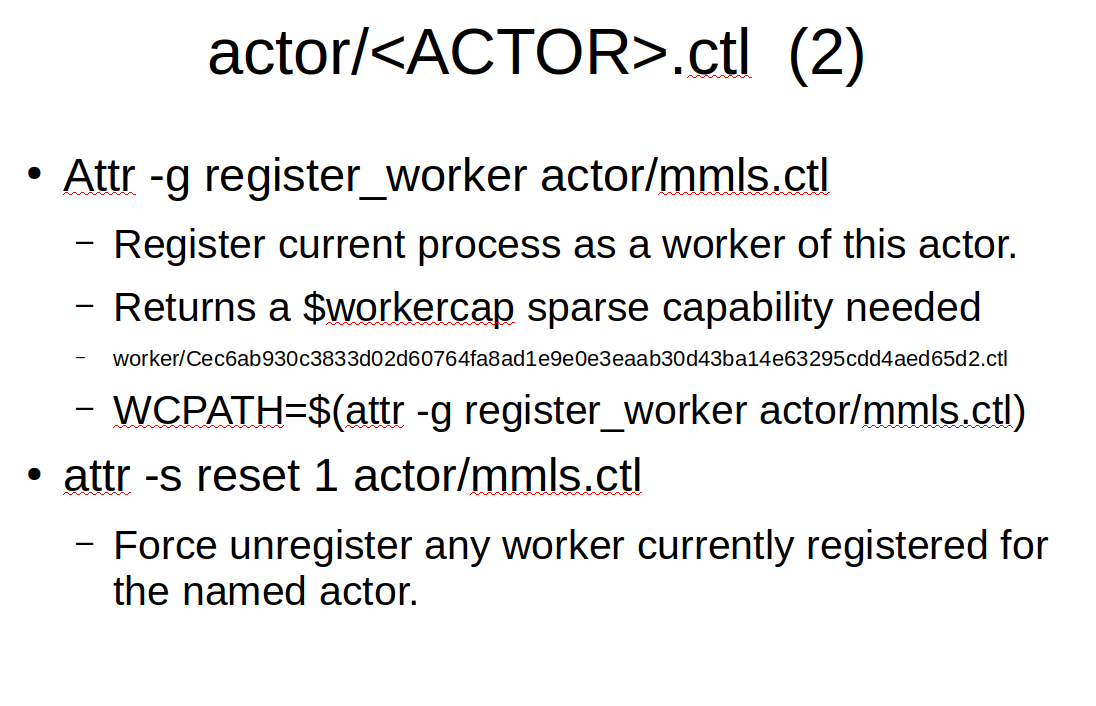

The most important method that the ctl file for the actor provides is allowing a worker to register itself with the file-system as a worker. Invoking register_worker will return a special sparse-capability based path into the worker/ directory that is to be used by the worker as a private handle to be used by the new worker for communicating with file-system privately. The sparse-cap based feile path is unguessable and with proper mandatory access controls on the /proc file-system combined with ptrace limitations should add up for proper integrity guarantees that are a major protective factor against anti-forensic attacks involving vulnerable libraries or tools used in the forensic data processing process.

If for some reason there are stale worker handles due to crashed workers, a reset is provided that will force unregister all workers for a given actor.

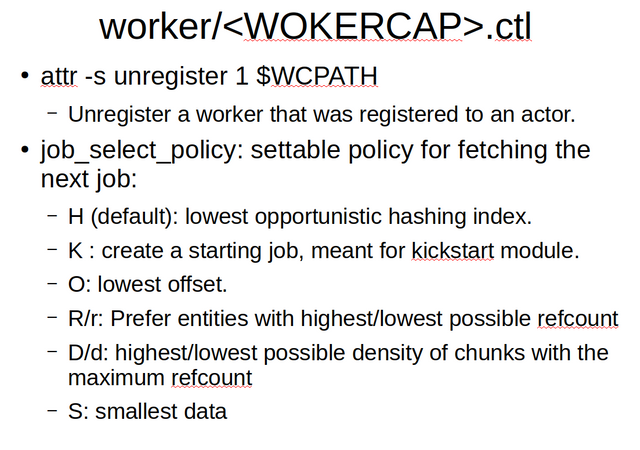

Once a workermonitoringered, it can invoke a number of methods on its private worker handle into MattockFS. It is important that a worker unregisters itself before exiting. Care should be taken that even in case of a crash, exception handling be set up to still unregister the worker, if needed using a seperate erlang style monotoring process.

The main function of a worker is to process jobs, but before it can start accepting jobs, it might need to set a job select pollicy.

By default the pollicy will be to select the next job based on the opportunistic hashing offset of the job, but alternative job picking pollicies are available.

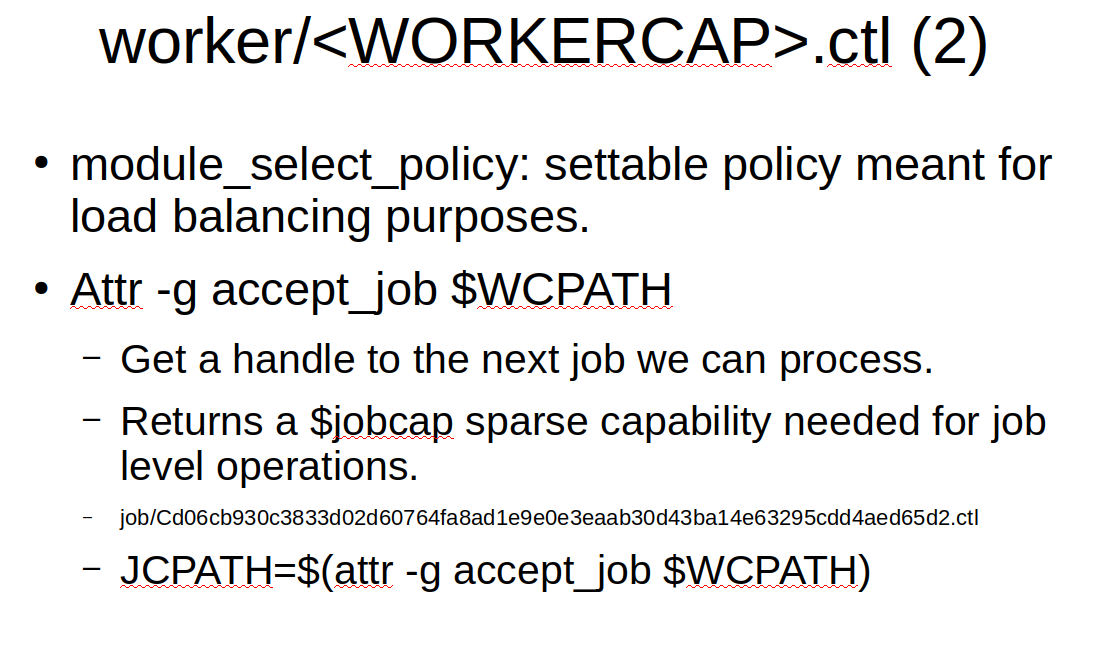

For a special load balancing worker, the possibility exists to also set a module selection pollicy and to steal jobs from overloaded modules.

The core method that can be invoked on the worker handle though is the accept_job method. This methor returns yet an other unguessable path handle. This time pointing into the job/ sub directory.

Ok, we have finaly arived at the point where the worker can actually start doing some work. There is a job that needs processing and we have a handle to that job that we can query and that we can use for sharing our results.

We need to fetch the carvpath of the data entity we need to process, and next to that we need to fetch the current state token for the distributed routing process.

Once we are done with the job, we shall need to communicate the updated router state with the filesystem so that the next module can restore the distributed router state for this tool-chain.

A worker will produce either derived or extracted child data or basic serialized child meta-data that needs to be processed further by MattockFS messaging. To accommodate this, the job handle provides a method for submitting child evidence.

Note that a child evidence needs to be referenced as a carvpath. This should be a good fit when we designate a child in terms of fragments of its parent, such as will be done in carving modules or for many files in a file system processing module. If however, a module wants to submit new child data, for example, meta data or extracted data from a compressed entity, new data will first need to be allocated and frozen. More on that below.

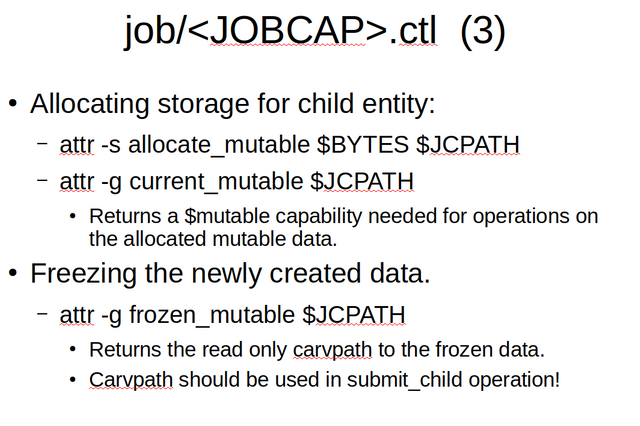

Here we run into the limitations of extended attributes as methods. In order to allocate a chunk of (temporarily) mutable data, we need to invoke two separate methods. First, we allocate what becomes the current mutable and secondly we request the handle for that mutable data.

Once we are done with filling the chunk of mutable data, we need to freeze it in an operation that returns a new carvpath for the new frozen and thus now immutable data chunk. This then allows us to submit the newly created immutable data for processing by subsequent actors.

We conclude the discussion of the MattockFS file-system as an API with the discussion of the mutable data. The mutable data handle, that is only valid while the data remains unfrozen, is a fixed size mutable file that the worker may fill with its data.

In the next installment of this series, we shall be doing a bit of hands-on. That is, we shall walk through what we discussed in this installment in a way that you can easily duplicate yourself. While I will discuss manual installation for those interested, I shall be assuming readers interested in trying the MattockFS walkthrough next time will be using the Virtual Appliance that I created for the DFRWS-EU earlier this year. So if you want to try things out next time, or even if you want to test some of the stuff discussed this time yourself, please download the virtual appliance.

This is very cool and very informative!

This post was resteemed by @resteembot!

Good Luck!

Learn more about the @resteembot project in the introduction post.

@pibara do you own this software? Do you offer resellerships

The software is currently available under the old 4-clause open source BSD license. As the author, I have shared copyrights with the UCD as I developed the first proof of concept version as part of my M.Sc dissertation. I'm considering switching the license to the simplified 2-clause BSD license. If desired I can offer limited support contracts or paid feature prioritization, but the software itself is totally free.

Congratulations @pibara! You have completed some achievement on Steemit and have been rewarded with new badge(s) :

Click on any badge to view your own Board of Honor on SteemitBoard.

For more information about SteemitBoard, click here

If you no longer want to receive notifications, reply to this comment with the word

STOP