When does Science Fiction take itself too seriously?

Don't get me wrong: I thoroughly enjoy both Westworld from the US and Humans from the UK.

Both series are very adult-oriented sci-fi and deserve to be taken seriously. But are they taking themselves too seriously?

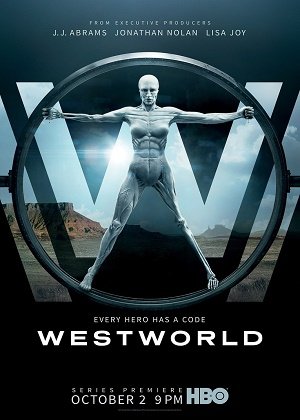

Westworld, a TV series loosely based on the much older 1973 movie of the same name, takes place in an upscale adult amusement park with an Old West motif, where visitors can have 'fun' interacting with the lifelike androids that are there for their amusement.

Yes, it works well as a sci-fi theme and, as I said, is meant to be and should be taken seriously. But my question to those of you who have seen it is: is it taking itself too seriously? Heavy concepts and drama are expected, but as I watched it, being a lifelong sci-fi fan, I kept wondering if the writers and directors were getting too serious. In other words, a fantasy concept trying to be more real than real!

I'll stay tuned. They say we might have to wait till 2018 for Season Two of Westworld. I suppose the writers, directors, actors, etc. need a year's break to recover from Season One. See what happens when you take your Science Fiction too seriously!!!

The same critique applies to "Humans", the excellently done British sci-fi series that, like Westworld, covers the concepts of advanced AI and robotics producing very lifelike androids, often indistinguishable from flesh-and-blood Humans.

In the series "Humans" they have created what they call a 'Synth' (synthetic human) that you can buy for home use. It does everything: cleans house, cooks, acts as nanny to the kids, and, if it's a female version, sleeps with your husband. You can see problems already, right? But those are minor. The real problems begin when the 'synths' begin to think for themselves (i.e., become conscious). As you can well imagine, many Humans find this most disturbing.

And that, of course, is what makes this series most interesting.

Personally, I like it better than Westworld. And, talking again about serious: can you take synthetic Human-like androids designed to help Humans more seriously than an amusement park android such as those in Westworld?

What do you think?

=====================================================================================

Westworld Trailer (HBO) - MATURE VERSION

Humans Trailer

===========================================================================

Well, science fiction fans, I do suggest you watch both of these excellent sci-fi series (they're both ongoing).

And, for those of you who have already seen one or both of them, what do you think?

I say well done, yes! But the big question is: are they too serious for their own good?

Can science fiction take itself too seriously?

-AlienView writing in 'ourworld'

TV and movie sci-fi are only just catching up to novels. Conscious machines have been around in print for more than 50 years. Skillful authors are able to take their created worlds seriously enough to draw the audience into the story.

Yes, the Human imagination rules, especially in a novel, where your mind must interact and your thoughts become part of what you are reading.

But now that the 'state of the art' can show us images of advanced AI androids, the next step will be to actually build them.

I can already see androids that are very advanced and humanlike...

Nah, science fiction pushes the limits of what's possible. In some ways, I think it helps shape our future. I love it.

Yes, that's true. But what I'm questioning in these two series is not so much the pushing of limits but how they are doing it: just a little too much soap opera (exaggerated drama). I can already see conscious AI and lifelike androids on the near horizon, but how emotional will they actually be? Maybe it's just me, but I'm not a lover of Human emotion, and I actually fear an emotional android. But I suppose, as we play around more with AI and artificial life forms, anything is possible!

As primitive as our emotions are, they do help us understand morality in terms of love, pain, joy, fear, etc. AI will need an understanding of our values if we want to survive as a species. Otherwise we may end up with a paperclip utility monster.

That may be true. And yet I keep thinking of Spock from Star Trek: unemotional, and often criticizing Man in a pleasant way for being illogical. And emotions are often illogical.

Data, also from Star Trek, is to me the ideal android: good-natured in a logical way and never emotional.

It seems like both shows deal with an imminent dilemma that we will face some day soon, possibly in our lifetime. The singularity will be reached when a computer has as many artificial connections as a human brain. Theoretically, at this point, the computer, or robot, becomes conscious. Obviously this very idea seems "Sci-Fi" at best and "Syfy" at worst, but it seems all but unavoidable. Once a computer is smarter than a human by some reasonable metric, it will start creating on its own. This could mean it creates its own ethics codes or its own computers: offspring. I love having to consider these dilemmas through the visually pleasing medium that is Science Fiction.

Already computers can beat Humans in games like chess.

Unless a way can be figured out to program such things as ethics into the machines' programming, along with a 'fail-safe' to prevent them from getting out of control, the machines will attempt to take over, and will probably succeed.

At that point they may use Humans as slaves to service them. Or they may decide that biological life is 'dated' and no longer necessary.

Sorry, but we must consider 'worst-case scenarios' before it is too late. Computer scientists, as well as men such as Bill Gates and Elon Musk, have also expressed this view.

Speaking of games that computers can play....

https://www.scientificamerican.com/article/how-the-computer-beat-the-go-master/
