A Different Approach to Curation: Quality Based Consensus and Quality Discovery

If you look at the current curation system, the options are very limited. There is also little incentive to correctly identify quality content, only profitable content. I wrote about this in my last post, and I encourage you to skim it to better understand the difference between profit-driven curation and quality-driven curation. Today I want to go over an idea I've been developing over the past few months and explain why I think it might solve some of the issues we currently see on the blockchain.

The Problem

While searching for quality is encouraged on the blockchain, interfaces have not been able to establish a good metric for it. How do we measure quality currently? Is it the number of upvotes? Is it the amount of rewards earned? Posts are currently organized by how much they earn, but that has nothing to do with quality. All it takes is one whale hitting the upvote button, and the post is trending within the hour. What we need is a better quality metric to organize and filter our posts.

We also have upvotes, but upvotes don't really say much about quality. Basically, they say "I like your content." There is little nuance there and little to distinguish the truly great from the average. Couple that with profit incentives and limited voting power, and suddenly the upvote becomes less about voting for content and more about carefully selecting content that you expect to blow up.

There is also downvoting, which says that you strongly dislike a post. But there are lots of reasons, both monetary and social, to avoid using the flag. This reduces curation to separating what I like from what I don't care about. And given the current drive for profit, the quality of a post often gets little consideration compared with other factors.

But what if you were incentivized to determine the quality of different posts? What if you were incentivized to also get it right? By making curation into a process of quality discovery, we can overcome the limitations of a simple upvote system within a complex Steem ecosystem.

Quality Based Consensus

We'll be getting a little more technical in this section, so consider this your warning if you hate math or technical descriptions. Those who are allergic to reading or math can move on to the next section.

Quality Based Consensus operates on the following principle: if everyone agrees that a post is quality, then that post is quality and should be highly rated. If there is disagreement, then that post should be penalized and should not be highly rated. Ideally we want agreement when determining the best of the best, but we acknowledge that each individual has a subjective view of quality. By combining these subjective views, we can arrive at a result that is reasonably fair.

First off, we need to abandon the simple upvote for a more expressive way to vote. This could be a five-star rating system or a 1-10 rating system. The exact scale doesn't really matter as long as you have choices in your voting. This expressiveness allows us to separate the good from the great and the mediocre from the good. It also gives us wiggle room and lets each user vote how they actually feel.

But you may argue that such a system could easily be ignored. If you give everyone you like a 10, what need is there for expressive options? This was one of the reasons YouTube removed its five-star rating system (and yes, YouTube actually had one back in the day). But back to our problem: what incentive is there to vote for quality? Right now, none. Here's where things get interesting, and more mathematical.

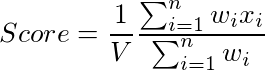

Let's give each post a score based on its ratings. The score is calculated by the following formula:
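
One plausible form of this score, assuming V is the stake-weighted standard deviation and is simply subtracted from the weighted mean (the post allows any measure of spread; λ ≥ 0 is a hypothetical penalty weight, and λ = 1 corresponds to a bare V). Here r_i is voter i's rating and w_i is that voter's weight, such as Steem Power:

```latex
S = \bar{r}_w - \lambda V_w,
\qquad
\bar{r}_w = \frac{\sum_{i=1}^{N} w_i r_i}{\sum_{i=1}^{N} w_i},
\qquad
V_w = \sqrt{\frac{\sum_{i=1}^{N} w_i \left(r_i - \bar{r}_w\right)^2}{\sum_{i=1}^{N} w_i}}
```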

This is simply a weighted average with a slight modification. A weighted average is like a normal average, except that people with higher weights have their votes count more. Naturally, we can use Steem Power as the weight (but we don't have to). The slight modification is the variable V, a variance-based measure: the variance, the standard deviation, or your favorite measure of spread. It adds a penalty for disagreement, so scores stay high only for posts where there is consensus that the post is of high quality.
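
A minimal Python sketch of that penalized weighted average, assuming the spread measure is the weighted population standard deviation and that it is subtracted from the mean with a hypothetical `penalty` weight. It shows why agreement matters: two posts with the same average of 7 end up with very different scores.

```python
import math


def penalized_weighted_score(ratings, weights, penalty=1.0):
    """Weighted mean rating minus `penalty` times the weighted standard deviation.

    `penalty` is a hypothetical tuning knob; the post leaves the exact spread
    measure, and how it is combined with the mean, open.
    """
    total = sum(weights)
    mean = sum(w * r for r, w in zip(ratings, weights)) / total
    variance = sum(w * (r - mean) ** 2 for r, w in zip(ratings, weights)) / total
    return mean - penalty * math.sqrt(variance)


# Two posts with the same mean of 7 on a 1-10 scale and equal voter weights.
consensus = penalized_weighted_score([7, 7, 7, 7], [1, 1, 1, 1])
split = penalized_weighted_score([4, 10, 4, 10], [1, 1, 1, 1])
print(consensus, split)  # 7.0 4.0
```

With `penalty` set to 0 the function reduces to a plain weighted average; raising it makes the ranking less tolerant of split opinions.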

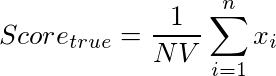

But again, there is no incentive to vote in a specific way. Sure, this weighted mean might improve things in some ways, but you can still vote high or low without any consequence. Thus, we move to a curation scheme based on estimating the score rather than voting for popular content. The closer your rating is to the true score of the post, the better you should be rewarded. However, this calculation does not use the displayed (weighted) score but a true, unweighted average (with the variance-based penalty included):
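
A sketch of the true score T, assuming the spread measure is the standard deviation and using a hypothetical penalty weight λ; every one of the N voters counts equally, with no stake weighting:

```latex
T = \bar{r} - \lambda V,
\qquad
\bar{r} = \frac{1}{N}\sum_{i=1}^{N} r_i,
\qquad
V = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left(r_i - \bar{r}\right)^2}
```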

Why should we try to estimate the true average instead of the weighted average when the weighted average is the one used to sort the content? There are two reasons:

First, the true average shows the community's approximation of the quality of the post. We should use it to determine curation rewards because it is the community's agreed-upon consensus (or lack thereof), free of whale influence. Whales can influence what is seen, but it doesn't make sense for them to influence the essence of quality.

Second, we use the true average for curation but the weighted average as the filter to sort the posts. This still allows whales to influence what content gets voted to the top and still gives them an incentive to hold onto Steem Power. Everyone gets a voice, but those who have the most to lose get more of a voice.
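
The two roles can be compared in a small sketch. Everything here is illustrative: the stakes, the ratings, and the choice of standard deviation as the spread measure (with a hypothetical `penalty` of 1) are assumptions, not part of the proposal. One whale rating a post 10 pulls the sorting score up, while the score curators are rewarded for estimating stays with the community.

```python
import math


def mean_and_spread(ratings, weights):
    """Weighted mean and weighted population standard deviation of the ratings."""
    total = sum(weights)
    mean = sum(w * r for r, w in zip(ratings, weights)) / total
    variance = sum(w * (r - mean) ** 2 for r, w in zip(ratings, weights)) / total
    return mean, math.sqrt(variance)


def display_score(ratings, stakes, penalty=1.0):
    """Stake-weighted score used to sort posts (the filter)."""
    mean, sd = mean_and_spread(ratings, stakes)
    return mean - penalty * sd


def true_score(ratings, penalty=1.0):
    """Unweighted score that curators are rewarded for estimating."""
    mean, sd = mean_and_spread(ratings, [1.0] * len(ratings))
    return mean - penalty * sd


# Hypothetical post: one whale (stake 96) rates it 10, four minnows (stake 1 each) rate it 5.
ratings = [10, 5, 5, 5, 5]
stakes = [96, 1, 1, 1, 1]
print(round(display_score(ratings, stakes), 2))  # 8.82
print(true_score(ratings))                        # 4.0
```

The whale can push the post up the sorted list, but a curator who wants the largest reward should still estimate the community's score of 4.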

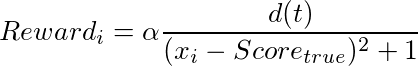

For such a change to work, the curation rewards algorithm would have to be changed. However, we can keep the existing curation allocation (25% of the post's rewards) and redistribute it more fairly. To do this, we reward accuracy. The rewards would be distributed using the following equation:
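
Reading the description that follows, one plausible form of the reward R_i for voter i is given below. Here r_i is the voter's rating, T the true score, n_i the number of votes already cast when voter i votes, D a decreasing decay function (its exact form is not specified in the post), and P the pool allocated to curation; the expression for α is an assumption that makes the rewards sum to P:

```latex
R_i = \alpha \, \frac{D(n_i)}{1 + \left(r_i - T\right)^2},
\qquad
\alpha = \frac{P}{\sum_{j=1}^{N} \dfrac{D(n_j)}{1 + \left(r_j - T\right)^2}}
```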

Basically, we take the difference between the true score and the score the user assigned to the post, then square it. This squared error penalizes voters who are further away from the true score of the post. We add 1 to avoid division by zero when a user's rating matches the true score exactly.

We then divide a decay function by the result of this calculation. The decay function decreases rewards based on the number of users who have already voted on the post. This rewards people for finding new, undiscovered content rather than piling onto posts that already have votes, and it rewards people for voting when they have less information about what the final score might be. Without the decay function, people could simply take a wait-and-see approach and vote only once the score had settled.

We tie the whole thing together by multiplying by a normalization factor (alpha), which ensures the individual rewards add up to the amount allocated to curation. The curation rewards are then split among users in a way that rewards quality discovery over finding popular content.
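
Putting the squared error, the decay, and the normalization together, here is a minimal sketch of how a curation pool might be split. The decay D(n) = 1 / (1 + n) and the helper names `decay` and `split_curation_pool` are hypothetical choices for illustration; the post only requires D to decrease as more votes come in.

```python
def decay(prior_votes):
    """Hypothetical decay D(n) = 1 / (1 + n); the post does not fix a specific form."""
    return 1.0 / (1.0 + prior_votes)


def split_curation_pool(votes, true_score, pool):
    """Split `pool` in proportion to D(n_i) / (1 + (r_i - T)^2).

    `votes` is a list of (voter, rating) pairs in the order they were cast,
    so each voter's index equals the number of votes already on the post.
    """
    raw = [
        decay(n) / (1.0 + (rating - true_score) ** 2)
        for n, (_, rating) in enumerate(votes)
    ]
    alpha = pool / sum(raw)  # normalization: payouts add up to exactly `pool`
    return {voter: alpha * weight for (voter, _), weight in zip(votes, raw)}


# Hypothetical post with a true score of 7 and 100 units allocated to curation.
votes = [("alice", 7), ("bob", 10), ("carol", 7)]
payouts = split_curation_pool(votes, true_score=7.0, pool=100.0)
print({voter: round(amount, 2) for voter, amount in payouts.items()})
# {'alice': 72.29, 'bob': 3.61, 'carol': 24.1}
```

Alice and Carol both matched the true score, but Carol voted third, so the decay function shrank her share; Bob voted early but missed by 3 points, and the squared error cut his share to a twentieth of Alice's.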

In effect, all of this math creates a new filter that organizes posts by a stake-weighted score, while users are incentivized to correctly identify the quality of each post to maximize their profits. They are also encouraged to look at new users' posts, since content with only a few votes has not yet been discounted much by the decay function and can still earn meaningful rewards.

This potentially solves two issues of stake-based curation: new users are no longer ignored, and quality, rather than stake, is showcased. Author rewards stay the same, and stakeholders still have a larger impact on the score filter, so there is still a reason to hold Steem Power.

Additional Benefits

One should never implement a solution to a problem without weighing the pros and cons and the unintended consequences such changes could cause. While HF19 did a lot of good, some of the negative effects that arose afterwards could be seen as unintended consequences that more forethought might have avoided. I will list additional benefits here, but I encourage you to take a hatchet and tear this idea to pieces, so we can move forward with whatever useful parts remain.

One additional benefit of this curation system is that it is bot-resistant in a way the current system is not. A bot that automatically gives certain authors fixed scores loses out on potential profits whenever those scores miss a post's true score. You could train a machine learning agent to mimic human behavior (or to read), but that is much more sophisticated than anything we see now.

Another benefit is that these rewards favor casual readers much more than stake-based rewards do. This encourages not only more users but also more consumers of content to use the site. And when consumers come to the site, it is only a matter of time before advertisers and celebrities come knocking, and the resulting demand could send the value of Steem rocketing.

Overall, the reasoning for the changes is simple. Right now there is a misalignment between curating for profit and curating for quality. By making the system more expressive and turning curation into a quality discovery exercise, we make curating for quality and curating for profit the same thing. This way we don't have to tell people what they should do and hope for the best. They are incentivized to do it, and they lose out if they act in a way that harms community growth in the long run.

I appreciate anyone who has taken the time to read to this point and again I encourage you to harpoon this idea and poke as many holes in it as you can. There is probably something I forgot to consider and this may have been a waste of time, but I have something to write about at the very minimum.

In case anyone was wondering, I did not come up with the algorithm out of the blue. I developed it for a similar quality discovery problem (fake news curation) for a proof-of-concept website I designed called Newsiphy. Feel free to check it out if you are interested, but there's no crypto over there (sorry).

It's an interesting approach, but as with any attempt at measuring subjective quality, it has major holes, at least if I have interpreted your idea as you intended.

This in itself isn't necessarily out of alignment with what the community, as a group, might want to occur - but it has the effect of skewing things away from 'the absolute finest' and towards mediocrity - since the bell curve principle means that the vast majority of people are not top experts in everything and thus cannot assess everything, on average, in a way that is more than mediocre. There will still be greatness uncovered, but the algorithm needs to allow more flexibility to be sure to identify it.

To put this another way, if everyone knew what greatness was - there would be a whole lot more greatness ;)

cheers for imagining!

p.s. something is broken with the text input here as the numbering of my comment points here refuses to be updated and saved!

To address your first concern, there is a disagreement penalty built into the formula that sorts the posts. This helps protect the minority opinion. If you choose the variance-based measure carefully, it would take a large supermajority of voters to cancel the effects of that disagreement. That being said, smaller users whose opinions differ from the community's might get drowned out, but that already happens. Your groupthink concern definitely has some validity, although all social media suffers from mediocre content that appeals to the masses. Definitely a tough problem to think about.

To address your second concern, you could create a large number of accounts to skew the simple average and take more of the curation rewards, but you would have to buy Steem Power for this attack to affect the rating used to sort and display the posts, since that rating is a stake-weighted average.

The disagreement penalty will have an effect of limiting 'dissent' and thus will erase the voice of the true experts who disagree with the crowd - yes. So I just want to be clear that this is not a true solution to the issue of identifying actual quality, but rather it is a way of increasing the responsiveness in the system to the ideas about quality held by the majority in the community.

I'm not sure it's possible to do much about this problem without either having a computer that is literally more intelligent than most humans or possibly using reputation in a clever way that factors in 'voices of authority'. The problem with alleged voices of authority is the same as with alleged measurements of quality: if reputation is defined by the crowd en masse, and the crowd en masse tends towards mediocrity, then those with the highest reputation will also be skewed towards mediocrity. lol. This is not entirely fair or true, of course, since in the real world other factors are involved that make a reputation score serve its purpose in a useful way, to some extent (although it can be gamed).

Yes, you would need to invest in Steem Power to make the scam work but you could also withdraw it all when you are done by powering down - so it's a scam with a high entry price, but with potentially high rewards. Your investment of a million dollars to create new accounts might artificially inflate the price of steem, leaving you a nice golden getaway car when you are finished vampiring the rewards pool!

While the disagreement penalty may limit "dissent", it can also encourage dissent. If a post is highly rated and an expert disagrees, he adds variance to the system, decreasing the score. A large enough minority of experts should be able to hold their own in disagreement.

But if you dissent in that you think something is quality and everyone disagrees, then the penalty hurts you, so you are better off just joining the crowd.

Yes, I would agree that this is more of a "community" rating rather than one of objective quality. Objective quality is impossible.

I actually have some good ideas for how to overcome these problems that don't require any changes to the existing algorithms - but I will need to write them down in detail. I'll link back to your post here when I put the new post together. Well, it's a 'good' idea according to my own subjective assessment anyway. :)

First off, yes. I'm thinking in this kind of direction myself, and I've been talking to people such as @stellabelle, @rycharde, @the-ego-is-you and @eturnerx about it recently.

Secondly, what you're proposing is common to any modern star rating system, and that's a good thing: it's proven to work. The difference is that in those systems a centralized, opaque process assigns the weights to votes, and those weights are dynamic too, based on a multitude of factors. Examples include the star rating system the Google Play Store uses and those of many other stores.

So I don't view what you're proposing as radical; in fact, it's complementary to what we currently have, once we have SMTs, that is. There's no need for me to take a hatchet to your idea (though I might suggest alterations to the exact formulas down the road). I would suggest you consider it as an additional token instead of a modification to the current reward system. I'm coming round to that idea myself.

Great that you put in the work to describe it in detail.

Interesting. It definitely has a better chance of actually being implemented through another interface and perhaps another token. I'll have to do a deep dive into how SMTs are implemented to see if they can support the changes to curation rewards (and maybe even posting rewards).

I should also say, especially in light of @ura-soul 's point, that I'm not particularly tied to the details of your idea at all, but more to the idea that some kind of SMT could be used to step in to reward great authors - the curators that promote them - the readers that read them - much better than the current system does. Any tweak on the current system or additional system that furthers that goal is something I'm into.

Agreed. The issue with any idea or implementation is the creative people who find sneaky ways to exploit it for their own benefit. But with SMTs, someone will surely figure out a great way to reward people, or at least a better one.

A better chance, as in a 100% chance if you make it 😆 You should take some time to consider it. I've skimmed the whitepaper, and it looks like it's got many of the Steem-like features. Here's some indication:

There are a lot of people talking about this, you've got a few here, and I think it's something that's needed. I'd love to see the discussion go further.

Brilliant!

I'll be honest, I skipped the math part, which I realize is pretty significant, but I am pretty sure I get the idea and I think this makes so much sense. Do you know about utopian.io? You could probably put your idea on there and make some solid steem.

I've heard of it, but haven't really looked into it much. I'll check it out. As for the math part, it just explains the mechanics of it all. I'm glad the idea makes sense without the math. I hate reading papers that are very math-dominant and make little sense.

you should definitely submit it

Agreed. Get it submitted for review before I do! 😈

I will once steemconnect allows me to log in.

Yes, submit this. It merits a close review and I feel it would take Steem a step closer to a fundamental goal: rewarding quality content.

We need this. Payout has proven to be a terrible way to organize content, and much better curation can be found elsewhere, which leads me to use the platform less. Honestly, I can't recommend Steemit to friends until this is changed. Thanks for your work. Also, check out Refind; it's a blockchain-centric app that curates content from the likes of Medium and other sites based on your preferences.

This definitely sounds interesting; we could really use an improvement to the current system, especially if it can be calibrated to encourage human curation over automated curation. One factor I would still like to see included in the curation algorithm is involvement. I strongly believe that (for example) a whale with 500,000 SP who has never posted or commented and has a rep of 25 should "count" for less than a whale with 500,000 SP who has 10,000+ posts/comments and a rep of 73.

But that's only peripheral to your suggestion... as a content creator (and active manual curator) I am all for authentic quality (which I recognize is subjective) being curated to the top and given more visibility.

I like your idea of using contribution as a factor rather than just stake. The weighting could be a combination of some of these factors, where the biggest contributors hold the largest weight, with contribution defined as some combination of reputation, activity, and Steem holdings.

I like your approach to weighing the curators differently than on their SP, but I would prefer that to be topical, i.e. knowledge based.

As such the platform would assess the curators also on their own posts, and their success/failure, and base a weight on that, as well as posted content and comments (both weighted differently).

Only then will we come to a level where quality becomes the true weighing factor of the curation.

Why does this matter? A content spinner who focuses on posting about social media can easily churn out hundreds of posts a month without knowing more than 20% of the subject or ever going truly in depth. Quora tries to address this slightly by making the bio excerpt visible for each answer, and one can even tailor it to that answer. That can make readers think a replier's answer deserves more weight and open it in extended view. Algorithmically, we could achieve that if the concept were moved to an SMT and both contributors and curators were assessed on every post and its success or failure. Obviously, that requires an incubation period.

But it could ultimately lead to quality over quantity.

Otherwise, I like where this is/could be going.

Ok, taking out all of the stuff I do not understand, I think this idea has some merit if it was able to be reviewed by people who understand the algorithms.

I am for experimenting with anything that will drive quality upwards and encourage manual curation of content as I think it will be a defining factor in Steemit's future position.

I agree that driving quality upwards will determine the future adoption on the site. People are attracted to quality like moths are attracted to the light outside of my house. I'm not sure that the algorithm works or if I'm missing something, but I think the idea of incentivizing the discovery of quality posts is worth looking into.

I disagree with this btw. While I most definitely want the average of discovered quality to be higher, to tie it to the future adoption of the site is blindsided and elitist.

Last time I checked gossip rags and tabloids still sell more copies, and have more site visitors, than the Financial Times and the WaPo.

TMZ and, back in the day, Gawker also had many more page views than, say, io9.

That is not to say we shouldn't prefer higher quality, but that's the usual struggle. And we also have freedom: the freedom to dig deeper and discover.

A quick look at Alexa's Top 50 sites in the US validates this. Platforms with a very broad reach and lots of irrelevant drivel rank very highly. YouTube, Facebook, Reddit, Instagram and Twitter all have lots of useless drivel on them. That was key to their adoption, btw: the freedom to post anything. See also Blogspot, WordPress.com and Tumblr.

The adoption will eventually be triggered by the ability to both earn and spend on site, without needing to convert to fiat.

I get and understand where you are coming from. Perhaps "quality" is a bad way to look at it. Interesting content drives the eyeballs regardless of quality or not. Perceived quality is only a small portion of that and I probably overstated the importance of quality content.

That being said, I designed this algorithm in the first place because I wasn't content with how places like Reddit and other sites organized their posts. I wanted a supplementary filter that could bring stuff to the top outside of memes, clickbait, and ideological trash.

Maybe you're looking at the wrong model. You are also looking at discovery platforms, where, most often, the popular vote decides (karma and the rest make it less simple, but it is definitely an element).

Maybe you should build around an interest engine. Steemit itself is the Everything Bucket.

In terms of visibility and content shown, Steemit is to the Steem blockchain what the Google Index is to the Internet. It has it all.

Curation is the oldest thing online. It goes all the way back to the Yahoo! Directory, then DMOZ, and of course thousands and thousands of manual curators, some of the more popular ones being Jason Kottke, Andy Baio, John Gruber and BoingBoing.

Meanwhile, Google has spent what amounts to thousands of years of development trying not to be gamed anymore. Yet SEO is still a thriving business.

I'm thinking that a more correct approach than trying to rank/weed out what you perceive as trash but may be the only thing many thousands of others care about, is to build an interest engine.

And I mean that almost like Quora, where one can follow specific topics. Within each vertical, then sorting can be done. But not on the Everything Bucket. Yet, Communities are coming and they will make following topics, and content verticals, easier. Let's see if they will improve how we feel about poor quality slash noise.

I, for one, would already be happy here on Steemit if I could just exclude specific topics/interface sources and, heck, languages too. That doesn't mean I'm not waiting for a platform that caters better to my needs, though. See also my reply to denmarkguy slightly below this one. :)

This is why you should submit to utopian.io as it is more likely to be seen by those able to look into it.

I like your post from a discussion point of view. One man's trash is another man's treasure, however, and art is subjective and can't be analyzed by algorithms. Thank God. The artists will be the last to lose their jobs to robots.

Luckily this is a human-based algorithm rather than a robot-based one. I agree it would be crazy to have a robot judge art.

That's true!!