How using MongoDB as a cache greatly reduced request-response time

Preface

I was working as a software developer on a data visualization project built on the MEAN stack (MongoDB, Express, Angular, Node.js). Version 1.0 was already running in production, but our sales team had a hard time selling the product.

So what do you do as an engineer when your sales team tells you that it takes an eternity to load the data and render the visualizations for the client? No company wants to hear this from its sales team. So what did we do?

Plan of Action

Our engineering team worked on a couple of things:

Moving our front end from Angular 1.0 to Angular 2.0

Code-level optimization on the back end

Moving from Angular 1 to Angular 2 definitely boosted performance, but my focus today is on the back end.

Profiling and logging the API requests made to our Node.js server helped us identify the main bottlenecks. The metrics showed that the response time for 70% of API requests ranged from 3,000 ms to 11,000 ms, which was far too slow. So I started working on a couple of things.

Standardisation of the APIs was the first step, which included documentation and sanitization of the existing APIs.

Implementation of a cache. I thought Redis would be a good fit, but our use case was a little trickier. Redis works well when the values stored against keys are small and short-lived, but in a data visualization platform the data is, by default, humongous.

Problem with Redis:

Even if we stored the values in Redis, at some point in the near future we would have to delete them to make space for new key-value pairs, since Redis keeps its whole dataset in memory.

Ideally, I wanted to keep these values around for a longer period so they could be retrieved again. That is when I thought of using MongoDB as a cache. It was a somewhat unconventional idea, but the results were amazing.
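
The idea described here is the cache-aside pattern: look up a hash of the request, return the stored value on a hit, otherwise compute it, store it and return it. The TypeScript sketch below is a hedged illustration, not our production code. It assumes a store exposing `findOne` and `upsert` (names chosen for this sketch); with the official `mongodb` Node.js driver these could wrap `collection.findOne({ _id: key })` and `collection.replaceOne({ _id: key }, entry, { upsert: true })`. `InMemoryStore`, `stableStringify`, `fnv1a` and `cachedQuery` are hypothetical names, and FNV-1a stands in for whatever hash you prefer (for example SHA-256 from `node:crypto`). Because a 32-bit hash can collide, each document also keeps the canonical request string, and a hit is accepted only when it matches.

```typescript
// Hypothetical cache-aside sketch; the store interface mimics a MongoDB collection.

/** One cached document; `_id` holds the hash of the API request. */
interface CacheEntry<T> {
  _id: string;
  request: string; // canonical request string, used to detect hash collisions
  value: T;
  updatedAt: Date;
}

/** The two operations the cache needs from its backing store (assumed names). */
interface CacheStore<T> {
  findOne(key: string): Promise<CacheEntry<T> | null>;
  upsert(entry: CacheEntry<T>): Promise<void>;
}

/** In-memory stand-in for a MongoDB collection, for local testing only. */
class InMemoryStore<T> implements CacheStore<T> {
  private docs = new Map<string, CacheEntry<T>>();
  async findOne(key: string): Promise<CacheEntry<T> | null> {
    return this.docs.get(key) ?? null;
  }
  async upsert(entry: CacheEntry<T>): Promise<void> {
    this.docs.set(entry._id, entry);
  }
}

/** Serializes with sorted object keys so {a, b} and {b, a} produce the same string. */
function stableStringify(value: unknown): string {
  if (value === null || typeof value !== "object") return JSON.stringify(value);
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(",")}]`;
  const obj = value as Record<string, unknown>;
  const keys = Object.keys(obj).sort();
  return `{${keys.map((k) => `${JSON.stringify(k)}:${stableStringify(obj[k])}`).join(",")}}`;
}

/** 32-bit FNV-1a hash rendered as hex (illustrative choice of hash). */
function fnv1a(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16);
}

/** Returns the cached value for (path, params), computing and storing it on a miss. */
async function cachedQuery<T>(
  store: CacheStore<T>,
  path: string,
  params: Record<string, unknown>,
  compute: () => Promise<T>,
): Promise<{ value: T; hit: boolean }> {
  const canonical = `${path}?${stableStringify(params)}`;
  const key = fnv1a(canonical);
  const cached = await store.findOne(key);
  if (cached !== null && cached.request === canonical) {
    return { value: cached.value, hit: true }; // served from the cache
  }
  const value = await compute(); // the slow aggregation over the data source
  await store.upsert({ _id: key, request: canonical, value, updatedAt: new Date() });
  return { value, hit: false };
}
```

In an Express handler, `path` and `params` could come from `req.path` and `req.query`. Unlike a TTL-based Redis entry, nothing here expires on its own; entries stay until they are overwritten, which matches the goal of keeping large results around for a long time.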

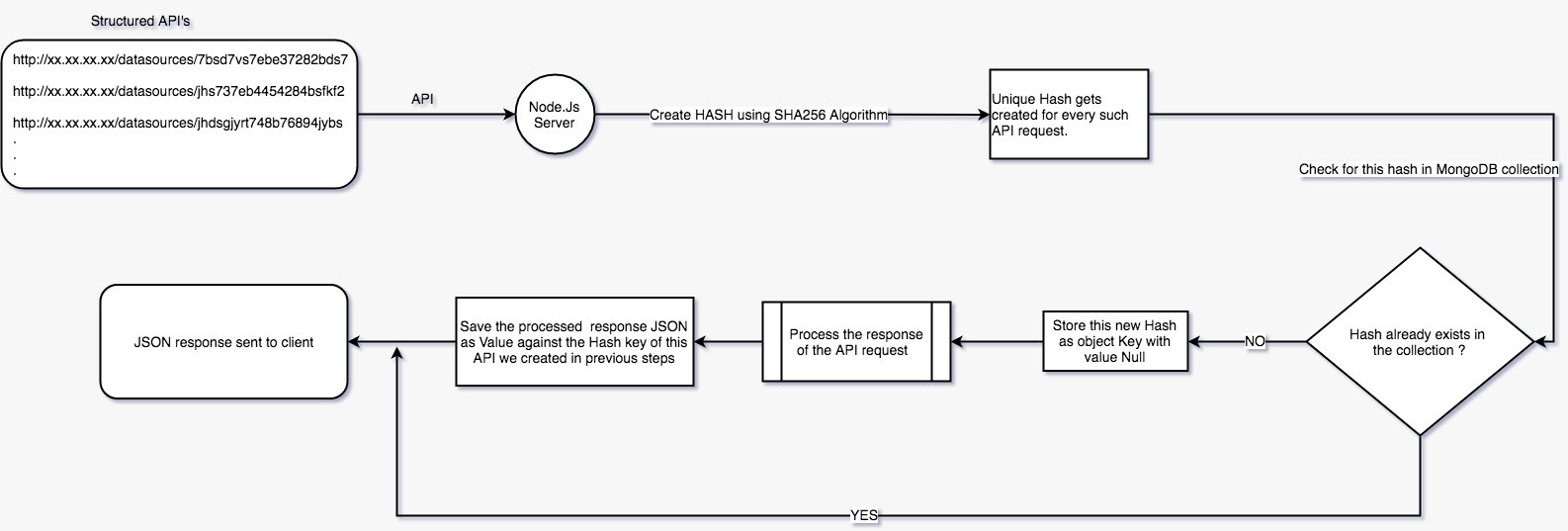

I have tried to visualise the request and response flow for the APIs in the flowchart below.

Request Response Caching Flowchart

Bonus facts:

Our data sources were updated by the ETL injector every night, which invalidated the values stored against our keys (a hash of the API request). So, to keep the data for each hash up to date, a function was triggered to recalculate the values for all key hashes and update them against their keys.
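
The nightly refresh can be sketched as a job that walks every cached entry, recomputes its value from the canonical request it was built from, and overwrites it in place, so the cache stays warm instead of being emptied. This is a hedged TypeScript illustration under assumptions: `RefreshableStore`, `all`, `upsert`, `InMemoryStore` and `refreshAll` are hypothetical names, and with the `mongodb` driver `all` could be `collection.find({}).toArray()` and `upsert` a `replaceOne(..., { upsert: true })`. How the job is triggered after the ETL load (cron or otherwise) is left open.

```typescript
// Hypothetical nightly refresh job: recompute every cached entry after the ETL load.

interface CacheEntry<T> {
  _id: string;     // hash of the API request
  request: string; // canonical request the value was computed from
  value: T;
  updatedAt: Date;
}

/** Assumed store operations needed by the refresh job. */
interface RefreshableStore<T> {
  all(): Promise<CacheEntry<T>[]>;
  upsert(entry: CacheEntry<T>): Promise<void>;
}

/** In-memory stand-in for a MongoDB collection, for local testing only. */
class InMemoryStore<T> implements RefreshableStore<T> {
  readonly docs = new Map<string, CacheEntry<T>>();
  async all(): Promise<CacheEntry<T>[]> {
    return Array.from(this.docs.values()); // snapshot, safe to upsert while iterating
  }
  async upsert(entry: CacheEntry<T>): Promise<void> {
    this.docs.set(entry._id, entry);
  }
}

/** Recomputes each entry under the same key; failures are reported, not thrown. */
async function refreshAll<T>(
  store: RefreshableStore<T>,
  recompute: (request: string) => Promise<T>,
): Promise<{ refreshed: number; failed: string[] }> {
  const failed: string[] = [];
  let refreshed = 0;
  for (const entry of await store.all()) {
    try {
      const value = await recompute(entry.request);
      await store.upsert({ ...entry, value, updatedAt: new Date() });
      refreshed++;
    } catch {
      // The old value is now stale; record the key so the caller can evict or retry it.
      failed.push(entry._id);
    }
  }
  return { refreshed, failed };
}
```

Processing entries one at a time keeps the load on the freshly loaded data sources predictable; a batched or parallel variant is possible, but it is a separate design choice.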

Key dashboards mostly had a defined set of KPI graphs along with their filters, and their data sources changed only after a certain period of time. So instead of recalculating the graph points every time a dashboard was loaded, the technique above helped render the charts quickly.

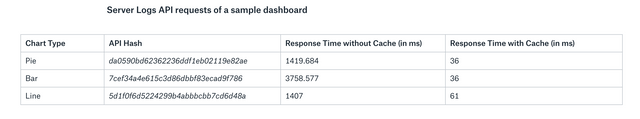

A few logs from our Node.js server (generated with the [morgan](https://www.npmjs.com/package/morgan) logging library).

This technique is useful wherever large datasets need to be stored for a reasonably long period of time in a cost-effective way.