DIY - Statistical Analysis of BTC/USD

I wrote this philosophical post about how education should really be about empowering people. That is what I believe in: whenever somebody sets out to educate people, the end result should be that the student leaves with more information and power in their hands than they had before.

Now I have a background in economics/finance/math, and I have done a lot of market analysis and even posted much of it on Steemit, but I never really showed my methodology, so that was not very educational. I did reveal some tools in this post, but they are kind of hard to use in LibreOffice and pretty complicated for total novices.

So I thought, let's make a Python script together that performs all those calculations instantly and automatically, and can be reused in the future without having to calculate everything manually in a spreadsheet. It would be amazing.

Preparations

I am only using Linux, and we will need a recent Python, probably anything above 3.5 will do. All you really need is gedit, the default text editor on many Linux desktops. Just open it and save an empty file as btcanalyzer.py. To execute the file, open the terminal and enter python3 btcanalyzer.py, that’s it.

Obviously it won’t do anything yet since it’s empty, but that is how easy it is. Python is interpreted, so there is no separate compilation step: the script runs as it is, and you always know what code is running. Total transparency and ease of use, it’s the best programming language in my opinion.

Okay so just download the BTC/USD price from here:

Download the .csv file (market-price.csv), place it in the same folder as btcanalyzer.py, and don’t rename or modify it, just leave it as it is.

It’s the entire BTC/USD price history provided by Blockchain.info. However, it has empty values in the beginning and the data only starts from 2010-08-18, so we will have to take that into consideration later.

A 0$ price is statistically unrealistic (it should have been a few cents or fractions of a cent at the beginning, but there was probably no exchange in the early days), and keeping it distorts the results more than cutting it off would, so it’s better to remove it. Don’t remove it in the file, though: leave the .csv file as it is, and we will deal with it inside the software.

Let’s begin!

Okay, so we need to read the file in. If you look at the .csv file, it is plain text: basically 2 columns separated by a comma, with one data point per line. This is very easy to work with.

Add these lines to the beginning:

#----------------1
file_name="market-price.csv"
with open(file_name,'r') as f:
    lines=f.readlines() # reads the entire file line-by-line into the lines variable

So we set a variable containing the file name and open the file with the with statement, which also closes it after we are done. It reads all lines into the lines variable, which will be a list of strings.

Now this is read in as it is, but we have 2 columns, so we need to put this into a 2-dimensional list and sort a few things out. Add the following below:

#----------------2
ARRAY=[]
for i in range(296,len(lines)):
    ARRAY.append(lines[i].strip().split(','))

Here we make a 2-dimensional list and start reading the lines from index 296, which is actually the 297th line because we count from 0. That line holds the first data point, 2010-08-18 00:00:00,0.074, so it becomes element 0 of the ARRAY list. We also split each line into 2 columns, column 0 containing the date and column 1 containing the price, and we strip the \n line break from it.

So a simple call ARRAY[0][1] is the first BTC price, 0.074, and ARRAY[len(ARRAY)-1][1] is the last BTC price, 5711.205866666667 as of today. Note that both are still strings at this point, so they have to be converted with float() before doing any math.

Now we have basically the entire price data in column 1 of this list, so we can use that for statistical analysis.

If you remember from the past post, I said that in a heteroskedastic market there is no real mean, but the best approximation given the current information is actually the latest price. So the hypothetical mean is the latest price.

So we add this, and we also need to add import math at the beginning of the script, since we will need this additional library.
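Instead of hard-coding index 296, the leading rows could also be skipped by looking at the values themselves. This is only a sketch, not part of the script: the function name load_prices and the sample rows are made up for illustration (only the 2010-08-18 row comes from the real file), and it assumes the same two-column date,price layout with no header row.

```python
import csv
import io

def load_prices(csv_lines):
    """Parse date,price rows and drop the leading rows whose price is 0 or empty."""
    rows = []
    started = False  # becomes True at the first positive price
    for record in csv.reader(csv_lines):
        if len(record) < 2:
            continue  # skip blank or malformed lines
        date, raw_price = record[0], record[1].strip()
        price = float(raw_price) if raw_price else 0.0
        if not started and price <= 0:
            continue  # still in the empty period before the data begins
        started = True
        rows.append((date, price))
    return rows

# Hypothetical sample in the same layout as market-price.csv
sample = io.StringIO(
    "2010-08-16 00:00:00,0.0\n"
    "2010-08-17 00:00:00,0.0\n"
    "2010-08-18 00:00:00,0.074\n"
    "2010-08-19 00:00:00,0.0688\n"
)
prices = load_prices(sample)
print(prices[0])   # first kept row
print(prices[-1])  # last kept row
```

The prices come back as floats here, so no later float() conversion would be needed with this variant.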

#----------------3
arraysize=len(ARRAY)
mean=float(ARRAY[arraysize-1][1])
stdev=unbiased_stddev(ARRAY,1,mean)

So this counts the size of the array and sets the mean to the last element. I have also prepared a special function that calculates the unbiased sample standard deviation based on this formula, so we need to add that to the beginning:

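import math

def unbiased_stddev(f_array,index,f_mean):
    # prices from the chosen column, converted from strings to floats
    pricelist=[float(x[index]) for x in f_array]
    # sum of squared deviations from the reference mean
    square=sum([(e-f_mean)**2 for e in pricelist])
    count=len(pricelist)
    # population excess kurtosis around the reference mean
    pop_ex_kurtosis=(sum([(e-f_mean)**4 for e in pricelist])/(square)**2)*count-3
    # kurtosis-corrected denominator
    denominator=(count-1.5-0.25*pop_ex_kurtosis)
    variance=square/denominator
    return math.sqrt(variance)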

It looks complicated, but it returns the (approximately) unbiased sample standard deviation relative to the mean we pass in, which will be the last price in our case.

Now the only thing left to do is to calculate the cumulative normal distribution, to get the probability area. We can’t integrate symbolically in plain Python, at least not to my knowledge, so we will “brute force” the integral: basically we step through all numbers at a certain resolution. The higher the resolution, the more accurate the integral, but the slower it is to compute. Note that for slow PCs it’s better to set it to 1000 or below.
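Read off the code, the function computes roughly the following, where $n$ is the number of prices, $\mu$ is the reference mean passed in (here the latest price, not the sample average) and $\gamma_2$ is the population excess kurtosis. This is a transcription of the code above, not a quote of the linked formula:

```latex
\gamma_2 = \frac{n \sum_{i=1}^{n} (x_i - \mu)^4}{\left( \sum_{i=1}^{n} (x_i - \mu)^2 \right)^2} - 3,
\qquad
\hat{\sigma} = \sqrt{ \frac{\sum_{i=1}^{n} (x_i - \mu)^2}{n - 1.5 - \tfrac{1}{4}\gamma_2} }
```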

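def frange(x, y, jump):
    # SOURCE: (kichik) https://stackoverflow.com/a/7267280
    # Like range(), but works with float steps.
    while x < y:
        yield x
        x += jump

def cum_norm(x, f_mean, f_stdev, resolution=1000):
    # resolution = integration steps per unit of price (1000 means a step of 0.001)
    pi = math.pi
    e = math.e
    psum = 0
    # sum the normal density between the mean and the target x
    for number in frange(min(f_mean, x), max(f_mean, x), 1/resolution):
        psum += 1/(math.sqrt(2*pi*f_stdev**2)) * e**(-(((number-f_mean)**2) / (2*f_stdev**2)))
    # half of the distribution lies on each side of the mean; subtract the area we covered
    return 0.5 - psum/resolution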

So basically I’ve borrowed that range code, since the built-in range only works with integers and we need to step through float values. The cumulative normal distribution is calculated by adding together the discrete values at a certain precision. Obviously we can’t go through every value, since there are infinitely many real numbers between any 2 numbers, but we can go through them at a precision of 0.001, which is the default.

The formula is basically the usual formula for the normal probability density, and we subtract the summed area from 0.5 to get the probability area beyond the target, measured from the mean.

Now obviously we can’t compute the probability between 2 numbers (x,y), since the probability area can’t skip the prices in between. So if you are curious what the probability is between 7000-8000$, that is invalid: how does the price get there from the mean? Everything is in reference to the mean, so the mean is one parameter and our target is the other. We can only calculate the distance from the mean, and since the mean is the latest price, that is basically the distance from the current level. This is good for estimating STOPLOSS or TAKEPROFIT levels in my opinion, or just for giving people a rough estimate of the possible/probable price.
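One thing to watch with a float-stepping generator like frange is that repeatedly adding a small step accumulates rounding error, so the number of steps can end up off by one. A minimal sketch of an alternative, assuming a midpoint rule driven by an integer step counter (the names normal_pdf and midpoint_area are hypothetical, not from the script):

```python
import math

def normal_pdf(value, mean, stdev):
    """Density of the normal distribution at value."""
    z = (value - mean) / stdev
    return math.exp(-0.5 * z * z) / (stdev * math.sqrt(2 * math.pi))

def midpoint_area(func, low, high, steps):
    """Approximate the integral of func over [low, high] with the midpoint rule."""
    width = (high - low) / steps
    total = 0.0
    for i in range(steps):  # integer counter, so exactly `steps` slices
        midpoint = low + (i + 0.5) * width
        total += func(midpoint)
    return total * width

# Area between the mean and one standard deviation above it
area = midpoint_area(lambda v: normal_pdf(v, 0.0, 1.0), 0.0, 1.0, 1000)
print(area)        # close to 0.3413
print(0.5 - area)  # tail beyond one standard deviation, close to 0.1587
```

Sampling each slice at its midpoint instead of its left edge usually gives a noticeably smaller error for the same number of steps.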
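For a normal distribution the same area also has a closed form through the error function, which the standard math module provides as math.erf and math.erfc. The sketch below is not part of the script (tail_probability is a hypothetical name), but it could serve as a cross-check for the brute-force sum:

```python
import math

def tail_probability(target, mean, stdev):
    """Probability mass beyond target, on the side facing away from the mean."""
    distance = abs(target - mean) / stdev  # distance in standard deviations
    return 0.5 * math.erfc(distance / math.sqrt(2))

print(tail_probability(0.0, 0.0, 1.0))   # 0.5, the target equals the mean
print(tail_probability(1.0, 0.0, 1.0))   # about 0.1587
print(tail_probability(-1.0, 0.0, 1.0))  # same value, the result is symmetric
```

Because it needs no loop, the result does not depend on any resolution setting.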

So to call this would be something like:

prob_area=cum_norm(6000,mean,stdev)
print(prob_area)

But let’s make it fancy, and let the user decide what number to input, basically like a dialog software.

Well I have reorganized it a little bit, added tests for errors and made it more eye friendly and colorful:

DOWNLOAD SCRIPT FROM HERE

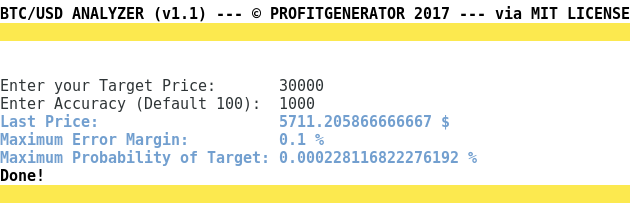

Finished Software!

It’s gorgeous, who needs a GUI when you can use a very eye-friendly colored console in Linux? As you can see, the script first asks you for a target. It must be numerical, otherwise it is rejected, and it should not be a huge number, otherwise the script might freeze. Then you set the accuracy: the higher it is, the more accurate but also the slower the calculation will be. There is not much difference in the accuracy, so with 1000 the margin of error will be pretty low.

Then the software does the heavy math behind the scenes and just gives the user back the probability, which is the only thing we are interested in.

As you can see in the test above, the probability of a 30,000$ BTC is very, very low. It’s bi-directional, however for some reason the probability of a low price is actually quite high. I can’t figure out exactly why; probably it is because we have moved the center of the distribution, and it’s essentially a fat-tailed distribution. I will have to investigate this.

But for now it’s a very usable piece of software, very useful for analyzing the BTC/USD market. I will keep working on it casually and improving it with new features, stay tuned!
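The downloadable script is not reproduced here, but the input checks described above could look roughly like this. It is only a sketch: parse_target, ask_target and the max_target limit of 1000000 are hypothetical, not taken from the actual script.

```python
import math

def parse_target(text, max_target=1000000):
    """Return the target price as a float, or None if the input is rejected."""
    try:
        value = float(text)
    except ValueError:
        return None  # not numerical
    if not math.isfinite(value):
        return None  # reject nan and infinities
    if value < 0 or value > max_target:
        return None  # negative, or too large to integrate in reasonable time
    return value

def ask_target():
    """Keep asking until the user enters an acceptable number."""
    while True:
        value = parse_target(input("Target price: "))
        if value is not None:
            return value
        print("Please enter a number between 0 and 1000000.")
```

Keeping the check in its own function, separate from the input() loop, makes it easy to try out with plain strings.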

Disclaimer: The information provided on this page or blog post might be incorrect, inaccurate or incomplete. I am not responsible if you lose money or other valuables using the information on this page or blog post! This page or blog post is not an investment advice, just my opinion and analysis for educational or entertainment purposes.

Sources:

- https://pixabay.com

- The Linux logo (“Tux”) and the Python logo are the trademarks of their respective owners.

Does your program code weight the prices that are three years old the same as the prices that are current?

No, weighting the prices has no statistical significance. In theory a heteroskedastic distribution has no mean (in my theory it does, it's just that it gets revealed in the future), so the most accurate position is to just use the latest price while ignoring everything before it.

Of course if we knew the BTC price 20 years from now, the mean would obviously change, it might even turn into a normal distribution.

But since we don't know the future, it has to be updated after every new datapoint, taking the newest data as the most accurate one.

Now I forgot to include 1 thing, which is a measurement of the variability of the different variances, basically the variance of the variances. The lower it is, the less heteroskedastic the market is and the more "patterns" you can see, while if it's too big, that means that the variance and the mean change constantly, meaning that it's impossible to forecast anything.

It does matter though, not all markets are predictable.
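A rough sketch of that variance-of-variances idea, assuming non-overlapping windows of a fixed size (the function name, the window size and the sample numbers are all made up for illustration):

```python
import statistics

def variance_of_variances(prices, window):
    """Split prices into non-overlapping windows and measure how much
    the per-window variance itself varies."""
    variances = []
    for start in range(0, len(prices) - window + 1, window):
        chunk = prices[start:start + window]
        variances.append(statistics.variance(chunk))
    if len(variances) < 2:
        raise ValueError("need at least two full windows")
    return statistics.variance(variances)

steady = [1.0, 2.0, 1.0, 2.0] * 3                    # same spread in every window
changing = [1.0, 2.0, 1.0, 2.0, 1.0, 9.0, 1.0, 9.0]  # spread jumps in the second window

print(variance_of_variances(steady, 4))    # essentially 0, every window has the same variance
print(variance_of_variances(changing, 4))  # clearly above 0
```

How large a value counts as "too big" would depend on the market and on the window size, so the number is mostly useful for comparing series with each other.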

To give you an idea: