DIY Full Linear Forecasting Tool - Cryptocurrency Prediction

I have finished the forecasting software. Man, it was a pain in the ass; it's really, really hard. I got so confused by the indexes that I had to rewrite it completely and test the code step by step, basically verifying each output by hand.

Now I can really imagine what full-time developers go through when testing code much more complex than this. Either I am a novice who got hung up on complicated indexes, or it is genuinely hard to code arrays that you have to keep in sync with each other.

Nonetheless, it is done now. I am giving it all away for free, so you can thank me later by upvoting this and leaving a comment.

The code should be bug-free now, or at least I have found no obvious bugs. If you find any, please let me know!

The software can now backtest on a fixed-period basis. A rolling forecast with an ever-growing sample is pretty biased, so each segment has to be the same size for the segments to be comparable with each other. Plus, we can now test how far back the "memory of the price" goes. Stay tuned.
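To make the window distinction concrete, here is a minimal Python sketch (not code from the tool; the function names are hypothetical) contrasting an expanding window, where each step sees all history so far, with fixed-size windows, where every step sees the same number of prices. In both cases the price right after each window is the value being forecast.

```python
def expanding_windows(prices, start):
    """Expanding windows: each step sees all history up to index t,
    so later steps are based on ever-larger samples."""
    return [prices[:t] for t in range(start, len(prices))]


def fixed_windows(prices, size):
    """Fixed-size windows: each step sees exactly `size` prices,
    so the segments are directly comparable with each other."""
    return [prices[t - size:t] for t in range(size, len(prices))]


if __name__ == "__main__":
    prices = [10, 11, 13, 12, 15, 16]
    print([len(w) for w in expanding_windows(prices, 2)])  # [2, 3, 4, 5]
    print([len(w) for w in fixed_windows(prices, 2)])      # [2, 2, 2, 2]
```

With the expanding version, the error of the last step is measured on a much bigger sample than the first one, which is the bias the fixed-size approach avoids.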

It is still a linear forecasting tool. I haven't added anything fancy yet, I'm just starting with the basics, but I might upgrade it later if I have time. It is fully functional now, though: version 2.0.

Debugging

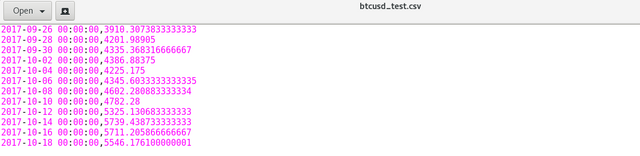

I debugged it on dummy price data:

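In this example the period can range from 1 to 10. We have 12 elements in total, but each step needs at least 2 prices besides the period itself (one before the window to compute the difference and one after it to compare the forecast against), so the maximum period here is 10.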

I was so pissed and confused by the indexing that I rewrote the code completely and added a dummy element as the 0th element, so that the count now starts from 1. It didn't make things much easier, though, because the for loop is tricky too: the range covers the half-open interval [x, y), so the end value itself is excluded and you always have to add +1 to the end of the range. It's complicated.
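A tiny illustration of that off-by-one issue, using made-up prices: with a dummy at index 0 the real elements sit at indices 1 to n, and because Python's range(a, b) stops before b, reaching index n needs an end bound of n + 1.

```python
# Made-up prices, padded with a dummy 0th element so real data starts at index 1
prices = ["dummy", 100.0, 101.5, 99.8, 102.3]
n = len(prices) - 1  # number of real elements, excluding the dummy

# range(a, b) covers the half-open interval [a, b): b itself is excluded,
# so the end bound must be n + 1 to include the last real element (index n).
for i in range(1, n + 1):
    print(i, prices[i])

# Without the +1 the last element is silently skipped:
print(list(range(1, n)))      # [1, 2, 3]
print(list(range(1, n + 1)))  # [1, 2, 3, 4]
```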

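```python
import os

# Globals shared with the rest of the script. file_name is set elsewhere
# in the full script (example value here); ARRAY holds [time, price] pairs.
file_name = "btc_usd.csv"
ARRAY = []


def process_file():
    with open(os.path.join("data", file_name), "r") as f:
        lines = f.readlines()

    # Dummy 0th element, so that the real data starts at index 1
    ARRAY.append(['dummytime', 'dummyprice'])

    for line in lines:
        ARRAY.append(line.strip().split(','))

    arraysize = len(ARRAY) - 1  # removing the dummy
    return arraysize
```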

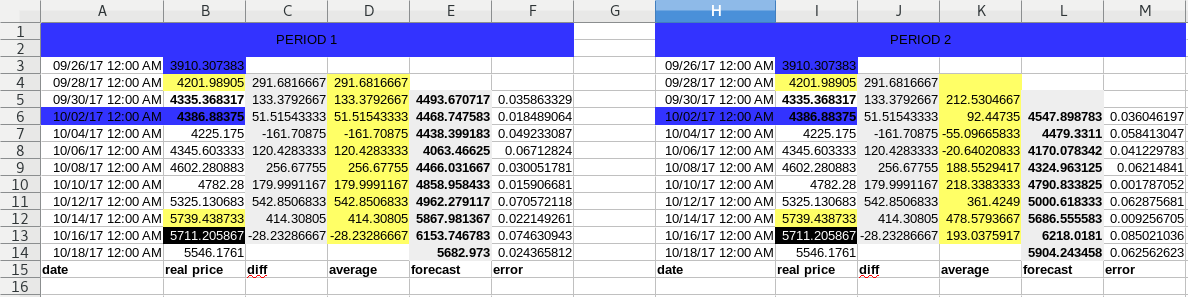

So I debugged it manually in LibreOffice, testing each period. It was boring but useful!

Period 1 & Period 2

- Period 1

['2017-09-28 00:00:00', '4201.98905']

(4335.368316666667, 4493.670716666667, 291.68166666666684, 0.03586332920996334)

['2017-09-30 00:00:00', '4335.368316666667']

(4386.88375, 4468.747583333334, 133.3792666666668, 0.01848906358081066)

['2017-10-02 00:00:00', '4386.88375']

(4225.175, 4438.399183333333, 51.51543333333302, 0.04923308706842717)

['2017-10-04 00:00:00', '4225.175']

(4345.6033333333335, 4063.4662500000004, -161.70874999999978, 0.06712824047250222)

['2017-10-06 00:00:00', '4345.6033333333335']

(4602.280883333334, 4466.031666666667, 120.42833333333328, 0.03005178080446565)

['2017-10-08 00:00:00', '4602.280883333334']

(4782.28, 4858.958433333334, 256.67755000000034, 0.015906680586192233)

['2017-10-10 00:00:00', '4782.28']

(5325.130683333333, 4962.279116666666, 179.99911666666594, 0.07057211823809517)

['2017-10-12 00:00:00', '5325.130683333333']

(5739.438733333333, 5867.981366666666, 542.8506833333331, 0.022149260959722163)

['2017-10-14 00:00:00', '5739.438733333333']

(5711.205866666667, 6153.746783333334, 414.30805000000055, 0.07463094294668364)

['2017-10-16 00:00:00', '5711.205866666667']

(5546.176100000001, 5682.973000000001, -28.23286666666627, 0.02436581198792913)

- Period 2

['2017-09-28 00:00:00', '4201.98905']

['2017-09-30 00:00:00', '4335.368316666667']

(4386.88375, 4547.898783333334, 212.53046666666683, 0.036046197350203306)

['2017-09-30 00:00:00', '4335.368316666667']

['2017-10-02 00:00:00', '4386.88375']

(4225.175, 4479.331099999999, 92.44734999999991, 0.05841304717393397)

['2017-10-02 00:00:00', '4386.88375']

['2017-10-04 00:00:00', '4225.175']

(4345.6033333333335, 4170.078341666667, -55.09665833333338, 0.041229783396631144)

['2017-10-04 00:00:00', '4225.175']

['2017-10-06 00:00:00', '4345.6033333333335']

(4602.280883333334, 4324.963125, -20.64020833333325, 0.06214841047189709)

['2017-10-06 00:00:00', '4345.6033333333335']

['2017-10-08 00:00:00', '4602.280883333334']

(4782.28, 4790.833825000001, 188.5529416666668, 0.0017870522450257598)

['2017-10-08 00:00:00', '4602.280883333334']

['2017-10-10 00:00:00', '4782.28']

(5325.130683333333, 5000.618333333333, 218.33833333333314, 0.0628756813107251)

['2017-10-10 00:00:00', '4782.28']

['2017-10-12 00:00:00', '5325.130683333333']

(5739.438733333333, 5686.555583333333, 361.4248999999995, 0.009256704694315991)

['2017-10-12 00:00:00', '5325.130683333333']

['2017-10-14 00:00:00', '5739.438733333333']

(5711.205866666667, 6218.0181, 478.57936666666683, 0.0850210361092005)

['2017-10-14 00:00:00', '5739.438733333333']

['2017-10-16 00:00:00', '5711.205866666667']

(5546.176100000001, 5904.243458333334, 193.03759166666714, 0.06256262331157432)

It matches what I calculated in LibreOffice:

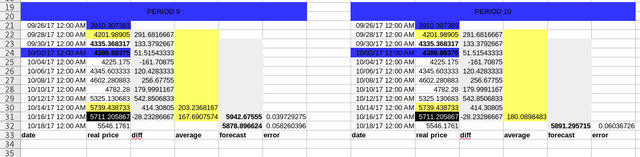
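Working backwards from the printed numbers, each tuple appears to be (actual next price, forecast, f_diff, absolute log error), where f_diff = (last price - price `period` steps earlier) / period, i.e. the average one-step change over the window, and the forecast is the last price plus f_diff. The sketch below is a reconstruction from the outputs, not the tool's actual code; the function name forecast_step is hypothetical.

```python
import math


def forecast_step(window, base, actual):
    """Reconstruct one printed tuple (actual, forecast, f_diff, ln_error).

    window -- the `period` most recent prices, oldest first
    base   -- the price just before the window (needed for the first change)
    actual -- the next real price, used to score the forecast
    """
    period = len(window)
    last = window[-1]
    # Average of the last `period` one-step changes, i.e. (last - base) / period
    f_diff = (last - base) / period
    forecast = last + f_diff
    # Absolute log error between the forecast and the actual price
    ln_error = abs(math.log(forecast / actual))
    return actual, forecast, f_diff, ln_error


# Second Period 2 step from the debug output:
# window = 2017-09-30 and 2017-10-02, base = 2017-09-28, actual = 2017-10-04
print(forecast_step([4335.368316666667, 4386.88375], 4201.98905, 4225.175))
```

For that step it gives f_diff ≈ 92.44735, a forecast ≈ 4479.3311 and an error ≈ 0.058413, in line with the Period 2 listing.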

Period 9 & Period 10

- Period 9

['2017-09-28 00:00:00', '4201.98905']

['2017-09-30 00:00:00', '4335.368316666667']

['2017-10-02 00:00:00', '4386.88375']

['2017-10-04 00:00:00', '4225.175']

['2017-10-06 00:00:00', '4345.6033333333335']

['2017-10-08 00:00:00', '4602.280883333334']

['2017-10-10 00:00:00', '4782.28']

['2017-10-12 00:00:00', '5325.130683333333']

['2017-10-14 00:00:00', '5739.438733333333']

(5711.205866666667, 5942.67555, 203.23681666666667, 0.03972927482770809)

['2017-09-30 00:00:00', '4335.368316666667']

['2017-10-02 00:00:00', '4386.88375']

['2017-10-04 00:00:00', '4225.175']

['2017-10-06 00:00:00', '4345.6033333333335']

['2017-10-08 00:00:00', '4602.280883333334']

['2017-10-10 00:00:00', '4782.28']

['2017-10-12 00:00:00', '5325.130683333333']

['2017-10-14 00:00:00', '5739.438733333333']

['2017-10-16 00:00:00', '5711.205866666667']

(5546.176100000001, 5878.896624074075, 167.69075740740743, 0.058260396031291524)

- Period 10

['2017-09-28 00:00:00', '4201.98905']

['2017-09-30 00:00:00', '4335.368316666667']

['2017-10-02 00:00:00', '4386.88375']

['2017-10-04 00:00:00', '4225.175']

['2017-10-06 00:00:00', '4345.6033333333335']

['2017-10-08 00:00:00', '4602.280883333334']

['2017-10-10 00:00:00', '4782.28']

['2017-10-12 00:00:00', '5325.130683333333']

['2017-10-14 00:00:00', '5739.438733333333']

['2017-10-16 00:00:00', '5711.205866666667']

(5546.176100000001, 5891.295715, 180.08984833333338, 0.06036725973499906)

It also matches my expectations.

So we can say that it’s probably bug-free, although if you spot any bugs, please let me know. Based on these calculations (which were painful, since I had to do all of them by hand), it looks like the tool computes the periods correctly.

The period must satisfy (arraysize+1-1)-(1+period) >= 1, or equivalently period <= arraysize-2!
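Writing n for arraysize and p for period, the condition rearranges as shown below. The same quantity n - 1 - p also matches every iteration count printed in this article (10 for n = 12, p = 1; 1307 for n = 1311, p = 3), so it appears to be the number of backtest steps for a given period.

$$
\begin{aligned}
(n + 1 - 1) - (1 + p) &\ge 1 \\
n - 1 - p &\ge 1 \\
p &\le n - 2
\end{aligned}
\qquad\qquad
\text{iterations} = n - 1 - p
$$

For the dummy data (n = 12) this gives 10 iterations at p = 1 down to a single iteration at the maximum p = 10.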

I also have to admit that some negative forecast values came up, and since the logarithm is undefined for negative values, I made a manual correction: I take the absolute value of the difference and add it to the previous price. Since the distance from the previous price stays the same, the error should be the same as well.

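```python
### manual correction for negative log values
# last_forecast, f_diff and limit come from the surrounding forecasting loop;
# ARRAY[limit][1] holds the previous price (as a string).
if last_forecast <= 0:  # log(0) is undefined as well
    last_forecast = float(ARRAY[limit][1]) + abs(f_diff)
```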
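The same correction as a standalone function, handy for testing it in isolation. This is a sketch: corrected_forecast is a hypothetical name, last_price stands in for float(ARRAY[limit][1]), and it treats a forecast of exactly 0 as invalid too, since log(0) is undefined.

```python
def corrected_forecast(last_price, f_diff):
    """Linear forecast with the manual fix for non-positive values.

    If last_price + f_diff would be <= 0 (log undefined), the change is
    applied upwards instead: last_price + abs(f_diff).
    """
    forecast = last_price + f_diff
    if forecast <= 0:
        forecast = last_price + abs(f_diff)
    return forecast


print(corrected_forecast(100.0, -150.0))  # 250.0
```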

Real Data

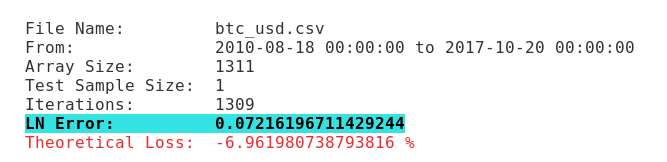

Now let’s try it with real data: the latest BTC/USD prices from blockchain.info.

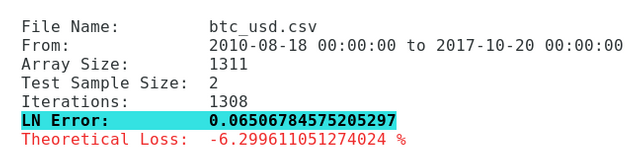

As you can see, I have redesigned the GUI; it’s much smoother, for a console output. This run uses the latest BTC/USD data from blockchain.info with a period of 1.

We had 1309 iterations, so the sample size is large, and it looks like we get about a 7% forecast error with a 1-period sample, which is basically just the previous price and no “memory”.

Let’s add more elements by increasing the period, one at a time:

- Period=3

File Name: btc_usd.csv

From: 2010-08-18 00:00:00 to 2017-10-20 00:00:00

Array Size: 1311

Test Sample Size: 3

Iterations: 1307

LN Error: 0.05881179953911284

Theoretical Loss: -5.711579629835562 %

- Period=4

File Name: btc_usd.csv

From: 2010-08-18 00:00:00 to 2017-10-20 00:00:00

Array Size: 1311

Test Sample Size: 4

Iterations: 1306

LN Error: 0.05677725166122436

Theoretical Loss: -5.519550043808974 %

- Period=5

File Name: btc_usd.csv

From: 2010-08-18 00:00:00 to 2017-10-20 00:00:00

Array Size: 1311

Test Sample Size: 5

Iterations: 1305

LN Error: 0.0550900919445993

Theoretical Loss: -5.360011889304395 %
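The printed Theoretical Loss appears to be exp(-LN Error) - 1 expressed as a percentage: exp(-0.05881179953911284) - 1 ≈ -5.7116 %, and the same holds for periods 4 and 5. The sketch below assumes LN Error is the mean absolute log error over all iterations and uses the forecast rule that appears to match the debug output (last price plus the average change over the period, with the manual correction for non-positive forecasts); backtest and theoretical_loss are hypothetical names, not the tool's own functions.

```python
import math


def theoretical_loss(ln_error):
    """Convert a mean absolute log error into a percentage, as exp(-e) - 1."""
    return (math.exp(-ln_error) - 1) * 100


def backtest(prices, period):
    """Fixed-window backtest returning (iterations, LN error, theoretical loss %).

    prices -- real prices, oldest first (no dummy element)
    period -- number of prices in each window, 1 <= period <= len(prices) - 2
    """
    errors = []
    # Each step uses a base price at t - period, the window ending at t,
    # and the next price (t + 1) as the actual value.
    for t in range(period, len(prices) - 1):
        base, last, actual = prices[t - period], prices[t], prices[t + 1]
        f_diff = (last - base) / period
        forecast = last + f_diff
        if forecast <= 0:  # manual correction: log is undefined here
            forecast = last + abs(f_diff)
        errors.append(abs(math.log(forecast / actual)))
    ln_error = sum(errors) / len(errors)
    return len(errors), ln_error, theoretical_loss(ln_error)


print(round(theoretical_loss(0.05881179953911284), 4))  # -5.7116
```

The loop runs len(prices) - 1 - period times, which lines up with the Iterations values in the reports above (1307, 1306 and 1305 for an array size of 1311).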

What can you see? The forecast becomes more and more accurate the higher the period we use.

So this confirms the initial hypothesis: the price does have a memory!

What is the best period to use for forecasting?

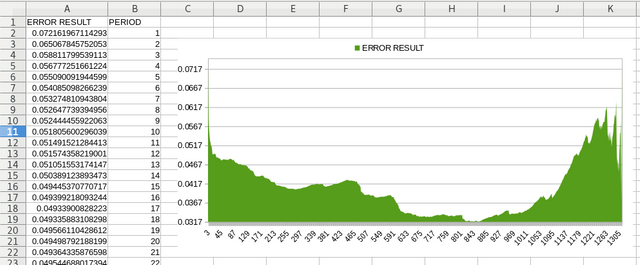

I had to run an iteration script on top of this to go through all possible PERIOD values.

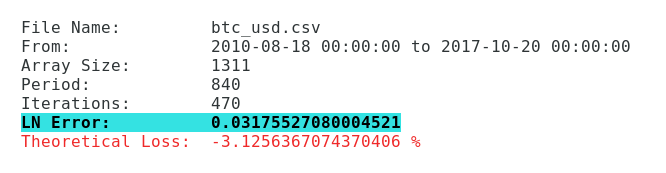
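The iteration script itself isn't listed here, so the following is only a hypothetical brute-force version: it scores every valid period from 1 to n - 2 and keeps the one with the lowest mean absolute log error. It assumes the forecast rule that appears to match the debug output (last price plus the average change over the period), including the manual correction for non-positive forecasts; mean_ln_error and best_period are illustrative names.

```python
import math


def mean_ln_error(prices, period):
    """Mean absolute log error of the fixed-window linear forecast."""
    errors = []
    for t in range(period, len(prices) - 1):
        base, last, actual = prices[t - period], prices[t], prices[t + 1]
        f_diff = (last - base) / period
        forecast = last + f_diff
        if forecast <= 0:  # same manual correction for non-positive forecasts
            forecast = last + abs(f_diff)
        errors.append(abs(math.log(forecast / actual)))
    return sum(errors) / len(errors)


def best_period(prices):
    """Try every valid period (1 .. n - 2) and return (period, error) with the lowest error."""
    n = len(prices)
    scores = {p: mean_ln_error(prices, p) for p in range(1, n - 1)}  # [1, n - 1)
    best = min(scores, key=scores.get)
    return best, scores[best]
```

With 1311 prices this is about 857,000 forecast steps in total (1309 + 1308 + ... + 1), so a plain loop is fast enough.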

The best period for linear forecasting on the latest BTC dataset is 840 (as of today).

Yep, literally. It looks like the memory is very, very strong indeed, which surprised me. But keep in mind that this is linear forecasting, so it’s not the proper way to do it, and we need more data to “fit the line” to the data. It’s mostly data fitting, if you think about it.

Now, this is only true as of today; it might change over time and it may be arbitrary, but it looks like we get the smallest error at 840. So the “memory” is pretty long, or it might just be a coincidence, so let’s look at the density chart for more answers.

Well, it looks like the error decreases steadily as we use more periods, right up to the 840 point, and then it increases steeply. Interesting.

If we wanted to do a multivariate analysis on it, I’d guess this is the period where the probability distributions separate: one ends and the next one starts. It would be a heteroskedastic distribution with sub-populations of roughly 840 points each.

Conclusion

So that’s about it for linear forecasting. I have successfully built a good tool for linear forecasting, and our error margin is only -3.1256367074370406 %.

Let’s see if it can be shrunk further in later episodes. I should also add some sort of confidence ratio, since it is not entirely clear how reliable the error is.

The latest script (v2.0), including all the tools I used in this article, can be downloaded here:

DOWNLOAD CRYPTO FORECASTING TOOL 2.0

Let me hear your thoughts and opinions in the comments below!

Sources:

https://pixabay.com