Prediction: Self-driving cars are still far away

A few days ago @xtester wrote about Elon Musk's thoughts on how close we are to real self-driving cars.

In a recent interview, Musk said that 2 years ago, based on the level of technology we had, he thought it would take roughly 10 years to reach full autonomy. One year later, when he seriously re-examined the question, he concluded that we could now make it in roughly 5 years. A few months ago, he pondered the same question again and concluded that technology had advanced so rapidly that full autonomy would be doable in roughly 2 years (24-36 months).

For a long time I've been highly skeptical about these predictions.

It's not that I don't believe in fast technological progress. I do, and I hope it happens; I consider myself a transhumanist.

But often a new technological innovation is such a big step forward that people don't fully understand it. Only after it has been used for a while do they start to realize it might have unforeseen risks and disadvantages.

The biggest problem for autonomous cars

Autonomous cars are really complex things. How do you prevent a really complex thing from being hacked? Well, it's quite hard.

Just recently it was reported that 100 million Volkswagens can be unlocked with a $40 Arduino device. Similar security holes are found regularly, and most of them are probably never even disclosed to the public.

In an autonomous car everything is controlled by a computer. Updates are sent to the cars automatically by the manufacturer. There is not much the user can do.

So, what happens when hackers get in and the system is compromised? It's not just a computer that processes information. It's a computer that controls a really heavy physical object. If that object is used to do harm, the results can be horrible.

Just imagine a hacker who can send updates to thousands of cars. The cars lock their doors, accelerate as fast as they can and drive straight for as long as they can. Some passengers might bump into a building in front of them and get a few bruises. But some of them will be on a highway and cause serious crashes.

Just imagine being in a car that suddenly ignores your commands completely and accelerates as fast as it can. If you survive that, do you think you'll ever get into an autonomous car again?

The psychological impact will be huge. People will want to ban self-driving vehicles. Maybe there will even be terrorists who sabotage car factories and blow up autonomous cars because they don't want to see them on their roads.

My prediction

Autonomous car technology will advance too fast, and it will backfire. Probably something really bad will happen because of a hack. After that there will be many barriers preventing self-driving cars from becoming common. It will take several years to undo the harm caused by this.

Solutions

There are a couple of ways to prevent this from happening.

- Take it easy. Getting the security right means taking the time to identify all the potential problems. If cars are manufactured and sold as soon as the technology does the job well enough, they will probably have some serious security holes.

- Open up the source code. To me this has been obvious for a long time, but I haven't seen much discussion about it. Closed source code is always riskier than open source code. All software in an autonomous car should be required to be open. This is a question of public safety. Badly behaving self-driving cars threaten not only the lives of their passengers but also the lives of everybody else who happens to be near them.

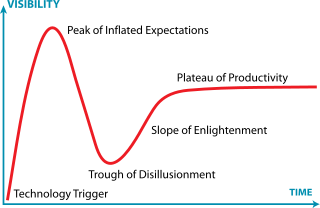

I agree with your setback prediction. We are still on the early climb of the hype curve. Many lessons and realities have yet to set in before things stabilize.

I'd say that in maybe 50-100 years we could see some small percentage of self-driving cars in the world. Still, it's too risky to let a computer drive instead of me :) At least I wouldn't do it :)

All car brands are doing a good job... but as you say:

I think it will be much sooner. The exponential rate of advancement, fueled by the billions of dollars being invested, will bring that timeline in much sooner. We may see the emergence of limited-use autonomous vehicles by the end of the decade.

Many people will lose their jobs at that moment. Unemployment is already high, so we need to create new jobs before that happens :)

Add to that all the truck drivers... :D

I think your points are valid and I really hope that the auto industry will take these threats seriously. This brings to mind The DAO disaster: people wanting too much of the future too soon, without fully considering all the boring building blocks needed in between. Reality will always catch up, and I really hope that the self-driving future won't be unnecessarily delayed by overeager and unprepared technologists (with all due respect to the people working on these things).

Edit: this was actually a really good post, it got me googling... it seems that at least Tesla is taking these things seriously and has been for a while: http://www.computerworld.com/article/2597937/security0/tesla-recruits-hackers-to-boost-vehicle-security.html

Funny how there's all this bad publicity and the news channels blow up whenever a Tesla gets into an accident while on auto-drive. There are a TON more accidents caused by bad drivers, drunk drivers, or, simply put, old drivers who shouldn't be driving anymore.

SHIT--Have you ever looked around when you are driving? I would much rather have an auto-drive machine driving next to me than some of the jackasses on the road. Enough said!

This is not about single accidents. Autonomous cars are great because they really do prevent normal accidents.

The point is that autonomous cars, especially when they are always connected to the internet, create a totally new kind of risk. They can be hacked, and the hacker can control all of them at the same time. If that hacker happens to be an evil terrorist, the result will not be pretty.

BOOO!

interesting...

Aside from that, there are two further large obstacles to market acceptance:

1: Legality. Will it be legal to drive such a car, or to let it drive itself? No one will pay for a fancy thing they legally can't use.

I remember failing my driving test 10 years ago because I drove a car with an electronic handbrake. I actually had to come back to the examination centre and retake the test with another car, which had a manual handbrake, just to show how backward these systems can be...

2: Insurance. Will insurance companies be willing to insure these vehicles (including when driving autonomously, ...)?

On legality: US government seems to be well aware of the legality aspect: http://www.businessinsider.com/the-us-government-has-made-a-major-announcement-about-self-driving-cars-2016-6

I guess/hope the insurance companies will do their part, but yeah, it will be interesting to see how that side will play out. If we can get through the scenarios mentioned by Samupaha (I think his concerns are valid), there should be much less need for insurance...

Here in Pittsburgh, PA, Uber is testing a self-driving car. Someone still sits behind the wheel, but the car takes over and is in control. There are lots of articles on it; I have included the one from our local news. http://steemurl.com/Ey6RVlmh

You forgot something only an ethics professor would think about:

The Robot Car of Tomorrow May Just Be Programmed to Hit You

Plain and simple:

The car's software has to decide what to do in case of an imminent accident. It will have to choose between hitting a small car or a big one.

That's the scenario. Solutions:

a) The software protects lives (yours and others'), so it will hit the big one to maximize the chances of survival. > Wrong. Volvo and Mercedes will be upset: "We make good cars, why do you hit us?"

b) What if instead of cars there are motorcyclists? One with a helmet and one without? We hit the one with the helmet, right? > WRONG. Do we penalize him for wearing one?

c) Elegant solution: flip a coin. > What? We made expensive software for random decisions? Wrong!

d) Don't use the information from the sensors. > Wrong. What? If you knew about a terrorist attack, you shouldn't use the info? What's wrong with this software?

e) This software protects my life, but also my wallet. So let's hit something with the cheapest part of the car.

Yeah, ethics for software is just tricky.
