XLR8R - Live Essentials: Max Cooper

One of electronic music's foremost innovators details the processes behind his awe-inspiring live shows.

Though he is one of the most acclaimed live acts out there, it's not easy to do a Live Essentials with Max Cooper, as the Belfast-born, London-based DJ, producer, and scientist explains. "I focus on the experience of the people attending rather than the kit I use," he says, adding that he limits his setup as much as possible so that it fits into his hand luggage. "It smooths my travels and avoids lost or damaged equipment so that my shows are reliably delivered." Detailing the gear behind his work would, therefore, be a very short read, so we had to adapt the format somewhat.

In truth, even if you've seen Max perform more than once, there's a high chance that they were rather different experiences. Having launched Emergence, a highly ambitious and visually focused project that tells the story of how "everything comes from (almost) nothing," around four years ago, he has since developed four other live sets, which he performs around the world depending on the venue and the occasion, all while maintaining a setup that requires no extra luggage at the airport. Thus, instead of detailing the minimal setup behind Cooper's work, this month's Live Essentials explores the technicalities and processes behind each of his different live performances.

Aether

I’m always trying to find ways of bringing visual experiences to my shows, but I wanted to escape the standard screen format, so I started working with some architects—Satyajit Das and Regan Appleton—on a new technique for creating a three-dimensional field of points of light, using a laser and a special form of three-dimensional screen they invented.

I run my live set with Ableton, as usual, and have MIDI clips set up on scenes in Live to send control messages to a separate laptop running Pangolin Beyond, which controls the laser. This allows us to set up many different forms in the 3D space, which can be triggered and manipulated from the Ableton set as I trigger clips and manipulate the audio using an Akai APC40. I also use an iPad running Lemur, for live drumming and sending fast editing/glitching effects to the laser control. And I use a Novation Launch Control XL for laser-specific controls, like adjusting the color balance, size, and brightness of the 3D image.

Surround Sound

I love the effect of being wrapped up in sound, so I’ve experimented with several different types of immersive audio shows. The most basic of these is my home-made surround sound show which is designed for clubs with six or eight speaker stacks relatively evenly spaced around the room. Running this from Ableton relies on using the return channels for outputs, which currently gives a maximum of 12 possible channels.

Then there’s an “XY Send Nodes Circlepan” Max for Live plugin available for free on the Max user database which can drive Ableton's sends in order to move sounds around a space. You input the position of each speaker in the space, and which return channel it corresponds to, and then the position or movement you want for any given sound (something I usually control live or with LFOs). The plugin activates the sends accordingly to output the correct balance across all of the speakers in the room to give the best possible illusion of the sound coming from that position. The more speakers you use the better the positional flexibility, although Ableton only currently has a maximum of 12 return channels, so that's the speaker number limit using this technique.
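As a rough illustration of the idea (not the plugin's actual code), the send levels for one sound can be derived from its distance to each speaker. The sketch below assumes a simple inverse-distance law with constant-power normalisation; the function name `send_gains`, the `rolloff` parameter, and the six-speaker layout are all hypothetical.

```python
import math

def send_gains(speakers, source, rolloff=1.0, eps=1e-6):
    """Return one send level per speaker for a sound at source = (x, y).

    speakers: list of (x, y) positions, one per return channel.
    Assumption: level falls off as 1 / distance**rolloff, then the set of
    levels is scaled so the summed power stays at 1 wherever the sound is.
    """
    raw = []
    for sx, sy in speakers:
        distance = math.hypot(source[0] - sx, source[1] - sy)
        raw.append(1.0 / (distance + eps) ** rolloff)  # eps avoids divide-by-zero
    norm = math.sqrt(sum(g * g for g in raw))
    return [g / norm for g in raw]

# Hypothetical room: six speakers evenly spaced on a circle of radius 1.
SPEAKERS = [
    (math.cos(2 * math.pi * i / 6), math.sin(2 * math.pi * i / 6))
    for i in range(6)
]

# A sound placed close to the first speaker is sent mostly to that speaker.
gains = send_gains(SPEAKERS, (0.9, 0.0))
```

A sound at the centre of the circle gets equal levels on every send, and raising `rolloff` makes the image more tightly focused on the nearest speaker.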

I assign this to Lemur controls so that I can throw things around live, but what I find more useful is to assign LFOs to the X and Y coordinates, which can generate some really nice cyclic movement patterns.
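To see why two LFOs give cyclic paths, here is a minimal sketch that assumes sine-shaped LFOs; the function name and the rate and phase values are hypothetical. With equal rates and a quarter-cycle phase offset the sound travels in a circle, while unequal rates trace Lissajous-style figures.

```python
import math

def lfo_position(t, rate_x=0.25, rate_y=0.25, phase=math.pi / 2, radius=1.0):
    """Return the (x, y) position at time t in seconds.

    Each coordinate is driven by its own sine LFO (rates in Hz).
    The phase offset is applied to the X oscillator only.
    """
    x = radius * math.sin(2 * math.pi * rate_x * t + phase)
    y = radius * math.sin(2 * math.pi * rate_y * t)
    return x, y

# Sample one full four-second cycle of the default circular path.
path = [lfo_position(step * 0.5) for step in range(9)]
```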

I also assign many channels to go out to just one of the surround sound speakers, as you can see in the screenshot of part of a surround live set I built for the Présences Électroniques festival in Geneva. This allows for very localized sources, as opposed to the XY panner and sends route, which blends across speakers as it moves. I also like setting up several stereo channels (stereo front, stereo middle, and stereo back of the room) so that I can still use all the work I have done on stereo parts, and I can split the space into three separate stereo sections, which has a nice effect.

In order to set up the project at home, I just used my normal Focusrite Scarlett 18i20 audio interface as it has plenty of outputs, and I bought some relatively cheap Tannoy Reveal 402 monitors to place around the room in addition to my main Genelec 8040s. They work fine in combination, and it’s a lot more cost-effective that way. For live shows, I bought a little Gigaport HD+, which seems to be the cheapest audio interface on the market with eight outputs and decent sound.

Hyperform

Hyperform is a new project coming later this month at Société des Arts Technologiques for MUTEK. They have a 360° surround visual and audio dome there, which projects all around you and has speakers behind the screens too. I’m working with a visual artist called Maotik who, luckily for me, already has experience using the dome system. I will run my standard surround sound setup, but with the difference that the positional information is stretched out over the curved dome surface rather than a flat room space. That information is also sent to Mathieu (Maotik) so that he can map the visual positional cues to my audio positions, so that what you see and hear within the space are in unison.
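One way to picture "stretching" flat positions over a dome is the equidistant fisheye mapping often used for dome imagery. The sketch below is only an assumption about how such a mapping could work, not a description of the actual Hyperform system; the function name is hypothetical. The centre of the flat XY pad maps to the top of the dome and the edge of the pad maps to the horizon.

```python
import math

def disc_to_dome(x, y):
    """Map a point in the unit disc to a point on a unit hemisphere.

    Assumed mapping: distance from the pad centre sets the elevation
    (centre = zenith, rim = horizon); the angle around the centre is
    kept as the azimuth. Points outside the disc are clamped to the rim.
    """
    r = min(math.hypot(x, y), 1.0)
    azimuth = math.atan2(y, x)
    elevation = (1.0 - r) * math.pi / 2
    return (
        math.cos(elevation) * math.cos(azimuth),
        math.cos(elevation) * math.sin(azimuth),
        math.sin(elevation),
    )

top = disc_to_dome(0.0, 0.0)   # directly overhead
rim = disc_to_dome(1.0, 0.0)   # on the horizon
```

Sending the same (x, y) pair to both the audio panner and the visual system is what would keep sound and image aligned on the curved surface.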

The space at Société des Arts Technologiques in Montreal gives a strange experience of three visual dimensions because of the curved screen, and I wanted to take advantage of that with the project, so we brought in a mathematician, Dugan Hammock, who has helped me visually realise difficult requests in the past. Dugan and Mathieu have been building a system for mapping higher dimensional forms to the space, that is, visual structures with more than three spatial dimensions. The show will be an exploration of dimensionality, from the mundane, to the real, to the unusual experiences of seeing what it would be like to live with extra dimensions.

Emergence

Emergence is my long-running visual show, which I have been building and developing for three or four years now. It started with an idea of how I would like to bring live visual performance into my sets, and it spiralled out of control from there as I realised how many different ideas and projects were contained in the overall theme: that of simple laws and natural systems giving rise to more complex, higher-level behaviours. It turned into a collaboration with many visual artists, scientists, and mathematicians across 25 chapters of the story, all summarised .

The live performance side of the show relies on my trusty Akai APC40 and iPad/Lemur controllers, plus two laptops, one running Ableton for the audio and the other running Resolume for the visuals. I designed the show to fit into my hand luggage in order to aid touring, and I use a little MOTU MicroBook audio interface, which has a digital output for a little more punch than the line output. I send MIDI into Ableton to trigger clips, glitches, filters, effects, and so on. Everything I do then sends signals from within Ableton to the machine running Resolume via OSC over an Ethernet cable. I spent months setting up this interface so that everything I like to do with the audio has a fitting visual counterpart: if I start layering on loads of live glitch and drumming, with Lemur controlling audio effects and drum machines in Ableton, the visual glitches in sync in Resolume. So when it’s all ready I can just play as I would usually with Ableton, and most of the visuals just happen.

I do have some visual-specific controls, however: master levels and, most importantly, effects matrices. I use the Macro rotary faders in the audio effect racks to switch many visual effects in Resolume on or off, and to adjust various other settings for them, with all of the effects controlled by the same filter cut-off points on the audio channels. This allows me to assign many different visual effects in Ableton, acting alone or simultaneously, to the same live audio-effecting process. Put another way, I can make the visual do a lot of different things in response to the same audio-control process, where I want to. All of this control of Resolume from Ableton relies on the Resolume Dispatcher and Resolume Parameter Forwarder Max for Live plugins, which interface the two programs. This system means that the positions of your audio clips automatically map to the equivalent positions of video clips on the other machine, making the trigger sync part very easy. The mappings I needed for the finer visual controls, on the other hand, were a pain to set up, but all worth it, as it’s a system I’ll continue to use for future visual shows.
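The effects-matrix idea, one audio control fanned out to several switchable visual parameters, can be sketched in a few lines. This is a simplified model under stated assumptions, not the actual Max for Live mapping: the effect names, ranges, and the `fan_out` function are hypothetical.

```python
def fan_out(control, routes):
    """Map one 0..1 control value onto every enabled visual parameter.

    routes: {effect_name: (enabled, low, high)}
    Each enabled effect gets the control value rescaled into its own
    range; a range with low > high inverts the response.
    """
    control = max(0.0, min(1.0, control))  # clamp out-of-range input
    return {
        name: low + control * (high - low)
        for name, (enabled, low, high) in routes.items()
        if enabled
    }

# Hypothetical matrix: the filter cut-off drives two effects, a third is off.
ROUTES = {
    "blur": (True, 0.0, 1.0),
    "rgb_split": (True, 1.0, 0.0),   # inverted response
    "strobe": (False, 0.0, 1.0),     # switched off in the matrix
}

values = fan_out(0.25, ROUTES)
```

Flipping the `enabled` flag plays the role of the on/off switch described above, so the same performance gesture can produce different visual results from one section of a set to the next.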

4D Sound

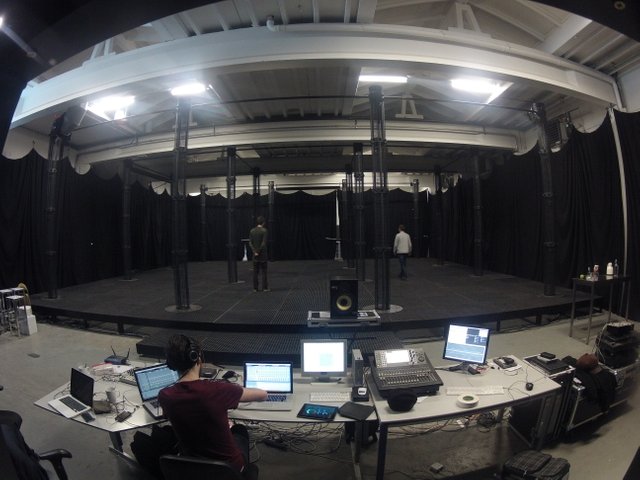

The 4D Sound System was designed and developed in Amsterdam by Paul Oomen, Salvador Breed, Luc Van Weelden, and Poul Holleman. It comprises 16 columns with omnidirectional speakers at several heights in each column, with the columns spaced around the room in a 12 x 12 x 4m array.

The system is called "4D Sound" because it produces sound in all 3 spatial dimensions, as well as being able to move sounds through the space in time—the 4th dimension.

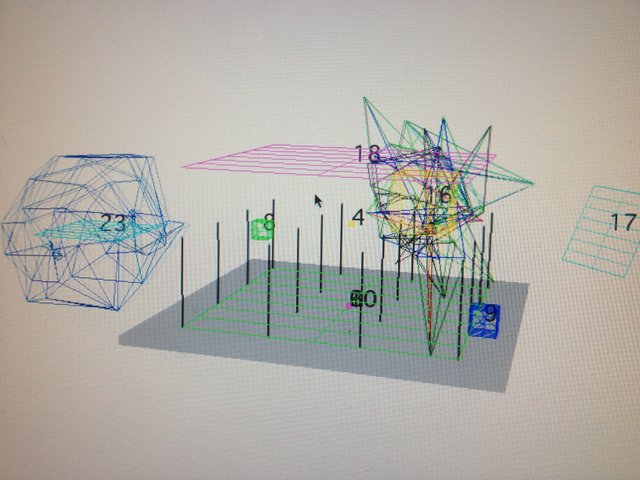

They have designed Max for Live devices which provide a range of spatial controls within Ableton Live, as well as an accompanying visual 3D model of the space and sounds within it. This allows for an object-based spatial composition/production process, whereby each sound has a shape and position within the 3D space, and can move in any way, or change its size and spatial effects with time. You work with this space and the objects, rather than thinking about what speaker to drive when, as with my basic home-made surround sound setup. It’s a very different way of working, but it opens up huge possibilities, not least because of the amazing host of spatial-specific effects they have designed.

The whole process depends on using their custom software in Ableton, which allows positional coordinates to be entered in each clip title, and MIDI controllers to be assigned to positions in order to move things around or control spatial effects. I ran my set by building spatial presets for each different track. I tried to make each piece of music have its own physical identity, and I also experimented with “audio sculptures,” whereby I built from the spatial experience up, making something which primarily conveyed a structure in space, rather than a traditional piece of music.

The really exciting thing about this system is that the sounds do not always seem to come from the speakers. You get clouds of sound sources seemingly hanging in the space, which you can walk through or past, because the speakers are everywhere in the space rather than just on the edges, as with other surround sound systems. This means that the experience becomes much more exploratory than with the alternatives: it’s best to walk around and see what you can find in the space.
