Making website speed and performance part of your SEO routine

Success in search engine optimization (SEO) requires not only an understanding of where Google's algorithm is today but also insight into where Google is heading in the future.

Based on my experience, it has become apparent to me that Google will place a stronger weight on the user's experience with page load speed as part of its mobile-first strategy. Given the investment Google has made in page performance, there are several indicators of how critical this factor is now and will be in the future. For example:

AMP: Specifically designed to bring more information into the search engine results pages (SERPs) in a way that delivers on the user's intent as quickly as possible. Google's desire to quickly serve the user "blazing fast page rendering and content delivery" across devices and media begins with Google caching more content in its own cloud.

Google Fiber: A faster internet connection for a faster web. A faster web allows for a stronger web presence in our everyday lives and is the foundation for the success of the internet of things (IoT). What the web is today is driven by content and experience delivery. When fiber installations reach critical mass and gigabit becomes the standard, the web will begin to reach its full potential.

Google Developer Guidelines: A 200-millisecond response time and a one-second above-the-fold page load time are more than a subtle hint that speed should be a primary goal for every webmaster.

Now that we know page performance is important to Google, how do we as digital marketing professionals work speed and performance into our everyday SEO routine?

A first step is to build the data source. SEO is a data-driven marketing channel, and performance data is no different from rankings, click-through rates (CTRs) and impressions. We collect the data, analyze it and determine the course of action required to move the metrics in the direction of our choosing.

Tools to use

With page performance tools, it is important to remember that a tool may be inaccurate in any single measurement. I prefer to use at least three tools for gathering general performance metrics so I can triangulate the data and validate each individual source against the other two.

Data is only useful when the data is reliable. Depending on the site I am working on, I may have access to page performance data on a recurring basis. Some tool solutions like DynaTrace, Quantum Metric, Foglight, IBM and TeaLeaf collect data in real time but come with a high price tag or limited licenses. When cost is a consideration, I rely more heavily on the following tools:

Google PageSpeed Insights: Regardless of what tools you have access to, how Google views the performance of a page is really what matters.

Pingdom.com: A solid tool for gathering baseline metrics and recommendations for improvement. The added ability to test from international servers is key when international traffic is a strong driver for the business you are working on.

GTMetrix.com: Similar to Pingdom, with the added benefit of being able to play back the user experience timeline as a video.

WebPageTest.org: A somewhat rougher user interface (UI) design, but you can capture all the critical metrics. Great for validating the data obtained from other tools.

Use multiple tools to take advantage of the specific benefits of each one, and check whether the data from all sources tells the same story. When the data does not tell the same story, there are deeper issues that should be resolved before the performance data can be actionable.
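One way to make "tells the same story" concrete is to compare each tool's reading with the median of all readings. With three tools, the median is the middle value, so two agreeing tools validate each other and a single outlier gets flagged. This is a minimal sketch. It assumes you have already normalized each tool's result to a comparable metric, for example fully loaded time in seconds. The 25% tolerance and the readings are hypothetical. The tools measure slightly different things, so treat a disagreement as a prompt to investigate rather than proof that one tool is wrong.

```python
from statistics import median


def triangulate(readings: dict[str, float], tolerance: float = 0.25) -> dict[str, bool]:
    """Flag, per tool, whether its reading is within `tolerance` (relative) of the median.

    The 25% default tolerance is an arbitrary, hypothetical choice.
    """
    mid = median(readings.values())
    return {tool: abs(value - mid) <= tolerance * mid for tool, value in readings.items()}


# Hypothetical load times (seconds) for the same URL, normalized across tools.
readings = {"PageSpeed Insights": 4.4, "Pingdom": 4.2, "WebPageTest": 7.5}
agreement = triangulate(readings)
print(agreement)  # {'PageSpeed Insights': True, 'Pingdom': True, 'WebPageTest': False}
```

If every value is `True`, the sources agree closely enough to act on. Any `False` is the kind of disagreement worth resolving first.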

Sampling approach

While it is entirely feasible to analyze the single uniform resource locator (URL) you are working on, you must be able to tell the whole story if you want to drive changes in the metrics.

I always recommend a sampling approach. Suppose you are working on an e-commerce site and your focus is a specific product detail page. Gather metrics for that URL, then take a sample of 10 product detail pages to produce an average. There may be a story unique to the single URL, or the story may apply to the page type as a whole.
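The comparison between one URL and its page-type sample can be sketched as follows. The function name, the 20% margin and the sample values are hypothetical illustrations, not part of any tool. A target URL noticeably slower than its peers suggests a URL-specific story. Otherwise the issue likely sits at the page-type (template) level.

```python
from statistics import mean


def classify_story(target_s: float, sample_s: list[float], margin: float = 0.2) -> tuple[float, str]:
    """Compare one URL's load time with the average of a same-page-type sample.

    Returns (sample_average, story). "url-specific" means the target is more than
    `margin` (hypothetical 20%) slower than its peers; otherwise "page-type".
    """
    if not sample_s:
        raise ValueError("the sample needs at least one page")
    average = mean(sample_s)
    story = "url-specific" if target_s > average * (1 + margin) else "page-type"
    return average, story


# Hypothetical load times (seconds) for a 10-page product detail sample.
sample = [4.4, 4.6, 4.5, 4.7, 4.3, 4.5, 4.6, 4.4, 4.5, 4.5]
average, story = classify_story(6.8, sample)
print(round(average, 2), story)  # 4.5 url-specific
```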

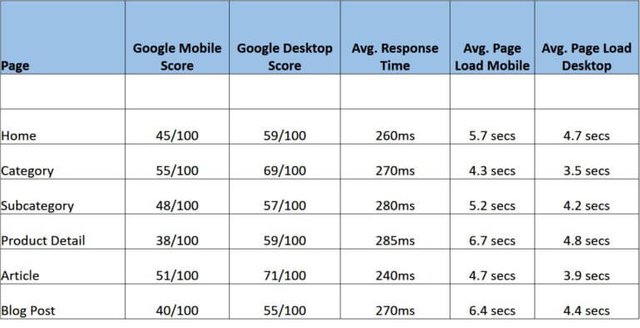

The capture above is an example of a 10-page average across multiple page types, using Google PageSpeed Insights as the source.

Evaluating this data, we can see that all page types exceed a four-second load time. Our initial goal is to bring these pages to a sub-four-second page load time, a response time of 200 milliseconds or better and a one-second above-the-fold (ATF) load time.
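The three targets above can be written down as explicit checks per page type. This is a sketch. The `PageTypeMetrics` structure and the sample numbers are hypothetical and do not reproduce the values in the capture. The thresholds are the ones stated in the text.

```python
from dataclasses import dataclass


@dataclass
class PageTypeMetrics:
    page_type: str
    load_s: float       # full page load time, seconds
    response_ms: float  # server response time, milliseconds
    atf_s: float        # above-the-fold load time, seconds


def missed_targets(m: PageTypeMetrics) -> list[str]:
    """List which of the stated targets a page type misses."""
    misses = []
    if m.load_s >= 4.0:        # target: sub-four-second page load
        misses.append("load")
    if m.response_ms > 200.0:  # target: 200 ms or better response
        misses.append("response")
    if m.atf_s > 1.0:          # target: one-second above-the-fold load
        misses.append("atf")
    return misses


# Hypothetical averages, not the values from the capture above.
for metrics in (PageTypeMetrics("product detail", 4.8, 350.0, 1.6),
                PageTypeMetrics("home", 3.2, 180.0, 0.9)):
    print(metrics.page_type, missed_targets(metrics))
```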

Using the data provided, you can take a deeper dive into source code, infrastructure, architecture design and networking to determine exactly what changes are necessary to bring the metrics in line with the goals. Partnering with information technology (IT) to establish service-level agreements (SLAs) for load time metrics will help ensure that improvements remain an ongoing objective of the organization. Without the right SLAs in place, IT may not maintain the metrics you need for SEO.

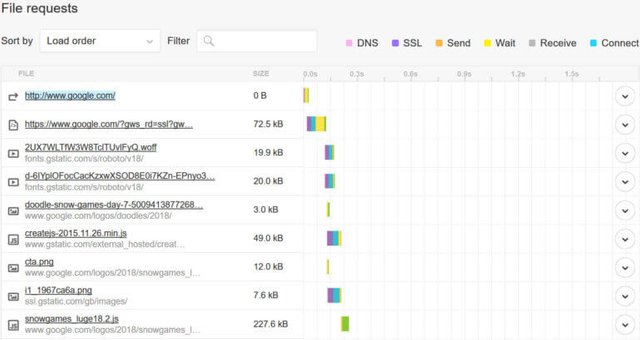

Using Pingdom, we can dig a little further into what is driving the slower page loads. The waterfall chart shows how much time each page element requires to load.

Keep in mind that objects load in parallel, so a single slow-loading object may slow the ATF load but may not affect the overall page load time.
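A simplified model makes the parallel-loading point concrete. The sketch approximates ATF time as the moment the last above-the-fold object finishes, and page load time as the moment the last object of any kind finishes. The `above_fold` flag is a hypothetical annotation you would add yourself, since waterfall tools do not label requests this way. Real ATF measurement is based on rendering, not request timing.

```python
from typing import NamedTuple


class Request(NamedTuple):
    name: str
    start_ms: float
    end_ms: float
    above_fold: bool  # hypothetical flag: does this object render above the fold?


def atf_and_total(waterfall: list[Request]) -> tuple[float, float]:
    """Approximate ATF time as the latest finish among above-the-fold objects,
    and page load time as the latest finish among all objects."""
    atf = max((r.end_ms for r in waterfall if r.above_fold), default=0.0)
    total = max((r.end_ms for r in waterfall), default=0.0)
    return atf, total


slow_hero = [
    Request("index.html", 0, 300, True),
    Request("hero.jpg", 300, 1400, True),       # slow above-the-fold image
    Request("analytics.js", 300, 2000, False),  # loads in parallel, finishes last
]
fast_hero = [slow_hero[0], slow_hero[1]._replace(end_ms=700), slow_hero[2]]

print(atf_and_total(slow_hero))  # (1400, 2000)
print(atf_and_total(fast_hero))  # (700, 2000): ATF improves, total load unchanged
```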

Review the waterfall chart to find elements that are consuming excessive load time. You can also sort by file size to identify any objects that are excessively large.
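Exported waterfall data can be ranked the same way outside the tool. This sketch assumes each entry is a dictionary with hypothetical `url`, `size_kb` and `load_ms` keys, for example after converting a HAR export. The 200 KB default limit is an arbitrary illustration, not a Google or Pingdom threshold.

```python
def rank_objects(objects: list[dict], key: str = "size_kb", limit: float = 200.0, top: int = 5):
    """Sort waterfall entries by `key` (largest first), keep the top N,
    and flag entries whose value exceeds `limit`."""
    ranked = sorted(objects, key=lambda obj: obj[key], reverse=True)[:top]
    return [(obj["url"], obj[key], obj[key] > limit) for obj in ranked]


# Hypothetical waterfall entries.
objects = [
    {"url": "/img/hero.jpg", "size_kb": 1450.0, "load_ms": 900.0},
    {"url": "/js/app.js", "size_kb": 310.0, "load_ms": 1200.0},
    {"url": "/img/logo.svg", "size_kb": 12.0, "load_ms": 80.0},
]
print(rank_objects(objects))                                    # largest files first
print(rank_objects(objects, key="load_ms", limit=1000.0, top=1))  # slowest object
```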

A common issue is the use of third-party hosted fonts and/or images that have not been optimized for the web. Fonts are loaded above the fold, and if a third-party font provider is slow to respond, it can bring the page load to a crawl.

When working with designers and front-end developers, ask whether they have evaluated web-safe fonts for their design. If web-safe fonts do not work with the design, consider Google Fonts or Adobe Typekit.
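To find third-party font dependencies in a request list, you can separate font files served from your own domain from those served elsewhere. This sketch assumes you have the page's request URLs, for example from a waterfall tool's HAR export. It treats subdomains of `site_host` as first-party and recognizes fonts only by file extension. `example.com` and the URLs are placeholders. A flagged font is not automatically slow. It marks a dependency whose response time you should check in the waterfall.

```python
from urllib.parse import urlparse

FONT_EXTENSIONS = (".woff2", ".woff", ".ttf", ".otf", ".eot")


def third_party_fonts(request_urls: list[str], site_host: str) -> list[str]:
    """Return font requests served from a host other than site_host or its subdomains."""
    flagged = []
    for url in request_urls:
        parsed = urlparse(url)
        if not parsed.path.lower().endswith(FONT_EXTENSIONS):
            continue  # not a font file (judged by extension only)
        host = parsed.hostname or ""
        if host != site_host and not host.endswith("." + site_host):
            flagged.append(url)
    return flagged


urls = [
    "https://www.example.com/fonts/brand.woff2",
    "https://fonts.gstatic.com/s/roboto/v30/abc.woff2",
    "https://cdn.example.net/font.ttf?v=2",
    "https://www.example.com/app.js",
]
print(third_party_fonts(urls, "example.com"))
```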

Evaluate by file type

You can also evaluate the page weight by file type to determine whether excessive scripts or style sheets are called on the page. Once you have identified the elements that require further investigation, view the page source in your browser and see where those elements load in the page. Look closely for excessive style sheets, fonts and/or JavaScript loading in the HEAD section of the document. The HEAD section must execute before the BODY. If unnecessary calls exist in the HEAD, it is highly unlikely you will be able to achieve the one-second above-the-fold target.
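A rough page-weight-by-file-type breakdown can be computed from a list of requests and their sizes. This sketch groups by file extension, which is only a proxy. The response `Content-Type` header would be more reliable, and URLs without an extension fall into a `(none)` bucket. The input pairs are hypothetical.

```python
import posixpath
from urllib.parse import urlparse


def weight_by_type(objects: list[tuple[str, float]]) -> dict[str, tuple[int, float]]:
    """Group (url, size_kb) pairs by file extension: {ext: (count, total_kb)}, heaviest first."""
    totals: dict[str, tuple[int, float]] = {}
    for url, size_kb in objects:
        # Ignore the query string; an empty extension (e.g. "/") is grouped as "(none)".
        ext = posixpath.splitext(urlparse(url).path)[1].lower() or "(none)"
        count, kb = totals.get(ext, (0, 0.0))
        totals[ext] = (count + 1, kb + size_kb)
    return dict(sorted(totals.items(), key=lambda item: item[1][1], reverse=True))


requests = [
    ("https://www.example.com/", 48.0),
    ("https://www.example.com/js/vendor.js", 120.0),
    ("https://www.example.com/js/app.js?v=3", 80.0),
    ("https://www.example.com/css/site.css", 60.0),
]
print(weight_by_type(requests))
# {'.js': (2, 200.0), '.css': (1, 60.0), '(none)': (1, 48.0)}
```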

Work with your front-end developers to ensure that JavaScript is set to load asynchronously wherever dependencies allow. An asynchronous script downloads in parallel while the browser continues parsing the page, so the rest of the document is not held up while the script downloads. JavaScript calls that are not required on every page, or that do not need to execute in the HEAD of the document, are a common issue on platforms like Magento, Shopify, NetSuite, Demandware and BigCommerce, primarily due to add-on modules or extensions. Work with your developers to evaluate each script call for dependencies in the page and whether the execution of the script can be deferred.
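Part of the HEAD review can be automated with Python's built-in `html.parser`. This sketch lists external scripts in `<head>` that have neither `async` nor `defer`. Module scripts are skipped because they are deferred by default. It also collects stylesheet links. It assumes the page has an explicit `<head>` element and ignores inline scripts. `HeadAudit` is a hypothetical helper name. Treat the output as a list of candidates to review with your developers, since some scripts may genuinely need to run before the page renders.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect potentially render-blocking external scripts and stylesheets in <head>."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking_scripts: list[str] = []
        self.stylesheets: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # boolean attributes such as async map to None
        if tag in ("head", "body"):
            self.in_head = tag == "head"
            return
        if not self.in_head:
            return
        if tag == "script" and attributes.get("src"):
            is_module = (attributes.get("type") or "").lower() == "module"
            if "async" not in attributes and "defer" not in attributes and not is_module:
                self.blocking_scripts.append(attributes["src"])
        elif tag == "link" and "stylesheet" in (attributes.get("rel") or "").lower().split():
            self.stylesheets.append(attributes.get("href") or "")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


page = """<html><head>
<link rel="stylesheet" href="/css/site.css">
<script src="/js/vendor.js"></script>
<script src="/js/analytics.js" async></script>
<script src="/js/app.js" defer></script>
<script type="module" src="/js/widget.js"></script>
</head><body><script src="/js/footer.js"></script></body></html>"""

audit = HeadAudit()
audit.feed(page)
print(audit.blocking_scripts)  # ['/js/vendor.js']
print(audit.stylesheets)       # ['/css/site.css']
```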

Cleaning up the code in the HEAD of your webpages and investigating excessive file sizes are key to achieving a one-second above-the-fold load time. If the code appears to be clean, but the page load times are still excessive, evaluate response time. Response timing above 200 milliseconds exceeds Google’s threshold. Tools such as Pingdom can identify response-time issues related to domain name system (DNS) and/or excessive document size, as well as network connectivity issues. Gather your information, partner with your IT team and place a focus on a fast-loading customer experience.
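Pingdom and similar tools break the HTML document's response into timing phases. A small helper can total the phases that happen before the first byte arrives, compare the total with the 200-millisecond threshold and name the slowest phase. The phase names (`dns`, `connect`, `tls`, `wait`) and the numbers are hypothetical, so map them to whatever your tool reports. A slow `dns` phase points at DNS, while a slow `wait` phase usually points at the server or application. Excessive document size shows up in the download phase after the first byte, so it is checked separately.

```python
RESPONSE_THRESHOLD_MS = 200.0  # response-time threshold cited in the text


def diagnose_response(phases_ms: dict[str, float], threshold_ms: float = RESPONSE_THRESHOLD_MS):
    """Return (time_to_first_byte_ms, slowest_phase); slowest_phase is None when within threshold."""
    total = sum(phases_ms.values())
    if total <= threshold_ms:
        return total, None
    slowest = max(phases_ms, key=phases_ms.get)
    return total, slowest


print(diagnose_response({"dns": 120.0, "connect": 40.0, "tls": 0.0, "wait": 90.0}))   # (250.0, 'dns')
print(diagnose_response({"dns": 10.0, "connect": 20.0, "tls": 30.0, "wait": 110.0}))  # (170.0, None)
```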

Google’s algorithm will continue to evolve, and SEO professionals who focus on website experience, from page load times to fulfilling the customer’s intent, are working ahead of the algorithm.

Working ahead of the algorithm allows us to toast a new algorithm update instead of scrambling to determine potential negative impact. Improving the customer experience through SEO-driven initiatives demonstrates how a mature SEO program can drive positive impact regardless of traffic source.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.

About the author: Bob Lyons is a seasoned technology and marketing expert with 25 years in technology and 20 years of online marketing experience, with a focus on SEO. Bob currently oversees the SEO program for Walgreens but has successfully implemented programs for businesses of all sizes.
