INTERNAL CONSCIOUSNESS (NO PYTHON REQUIRED!): a new feature for the open source project The Amanuensis: Automated Songwriting and Recording

logo by @camiloferrua

Repository

https://github.com/to-the-sun/amanuensis

The Amanuensis is an automated songwriting and recording system aimed at ridding the process of anything left-brained, so one need never leave a creative, spontaneous and improvisational state of mind, from the inception of the song until its final master. The program will construct a cohesive song structure, using the best of what you give it, looping around you and growing in real-time as you play. All you have to do is jam, and fully written songs will flow out behind you wherever you go.

If you're interested in trying it out, please get a hold of me! Playtesters wanted!

New Features

- What feature(s) did you add?

This update constitutes a massive reworking of the fundamental processes underlying the system, although the most significant thing the user may notice is simply that (drumroll) an installation of Python is no longer necessary!

The cumulative rhythmic analyses conducted by The Amanuensis (referred to colloquially as "its consciousness") are rather expensive in terms of processing. Historically, this caused plenty of interference with all of the DSP (digital signal processing) the system was simultaneously maintaining, i.e. clicks and delays of all sorts. The solution until now was about as inelegant as they come: offloading the burden to an external Python script, which could operate in true parallel (something Max/MSP really just cannot do).

However, this required communication over UDP, and the latency thereby incurred had over time grown into an entire system of timestamps and compensations that was sloppy, to say the least. With the introduction of the new gen object, all of that is gone or streamlined, and the expensive code executes quickly and efficiently in C. I know of at least one bug it alleviates as well.

Ultimately, this is a major hurdle overcome in the overall game plan of the project. This latency was standing in the way of the pivot to a game interface, as well as the ever-pertinent goal of deciding how best to further the system's intelligence. Both of these require a responsive, truly immediate reaction to user input. Now these twin avenues can be advanced, served by a highly informative GUI displaying in real time the exact moment of played notes, their assessed "scores", their alignment with already-recorded envelopes and onsets in the audio itself, etc.

- How did you implement it/them?

If you're not familiar, Max is a visual language, and textual representations like those shown for each commit on GitHub aren't particularly comprehensible to humans. You won't find any of the comments there either. Therefore, I will present the completed work using images instead; read the comments in those to get an idea of how the code works. I'll limit my description here to the process of writing that code.

These are the primary commits involved:

As mentioned, the key to this success was the use of the new gen object available in Max 8, which came out very recently. Before this option existed, I had fumbled for an answer in many directions: the existing gen~, which executes in C but does so in the audio thread, causing interference; the Task object in js, but JavaScript is far too slow; and the aforementioned Python script, among others. Really, the correct way to do it a year or two ago, when it first became an issue, would have been to write an entire custom external in C, but that was (and is) a bit beyond me.

Needless to say, I was very excited to hear about this new object with the release of Max 8, and at least one added benefit of the upgrade is that The Amanuensis itself now loads much faster: in about 10 seconds rather than two or three minutes.

Testing whether the new object would perform well essentially meant rewriting all of consciousness.py, and that is what was done, in the form of a new abstraction, consciousness.maxpat.

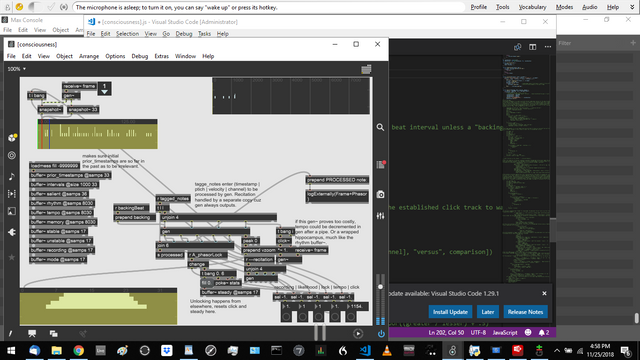

the newly added abstraction, consciousness.maxpat

The above abstraction was sutured into place in organism.maxpat to replace the Python script with as little extraneous code alteration as possible. This means that it feeds from and to exactly where messages were previously moving across UDP to the external script. There is plenty that could be cleaned up around the edges, but it's my experience that things will get smoothed out and extra code removed over time; the pertinent issue right now was to make sure it was working.

The screenshot above shows that it is working. It was taken mid-test, and in the three waveform~s you can see the contents of the rhythm buffer~, the tempo buffer~ and, in the bottom left, a zoomed-in view of the rhythm buffer~ at its last point of analysis. In the immediate future, these waveform~s will be moved into the presentation view and serve as the beginning of the bespoke high-information GUI.

Most of the actual work takes place in gen; however, the tempo buffer~ is maintained by a gen~, as gen cannot use a delay line. Running that part in the audio thread proved light enough not to cause issues.

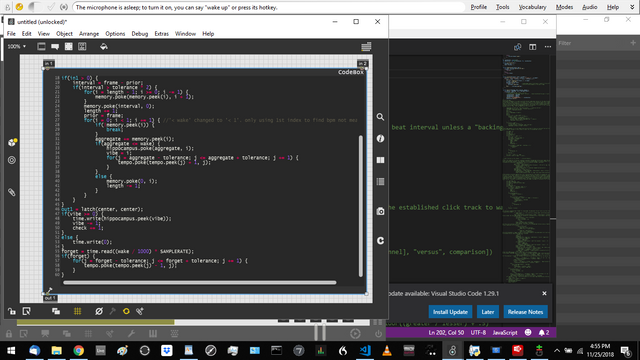

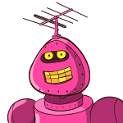

the contents of the gen~ in consciousness.maxpat handling the incrementing and decrementing of buffer~ tempo
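The gen~ itself only appears in the screenshots, so what follows is a hypothetical C sketch of one way an interval histogram can be kept over a sliding window using delayed decrements: each new interval increments its bin immediately, and a matching decrement fires `WINDOW` ms later. Here a FIFO of pending decrements stands in for the delay line. The names `TempoHistogram`, `tempo_add`, `tempo_advance`, `tempo_mode` and all constants are illustrative assumptions, not taken from the patch. `tempo_mode` is a port of the plateau search in the main gen's codebox.

```c
#define MAX_INTERVAL 8000  /* hypothetical: one bin per ms of interval, cf. Param wake(8000) */
#define WINDOW 8000        /* hypothetical: how long (ms) an interval stays counted */
#define QUEUE_CAP 1024     /* hypothetical: maximum number of pending decrements */

typedef struct {
    int bins[MAX_INTERVAL];  /* the histogram itself (what buffer~ tempo would hold) */
    long due[QUEUE_CAP];     /* time at which each pending decrement should fire */
    int bin[QUEUE_CAP];      /* which bin each pending decrement applies to */
    int head, count;         /* FIFO ring standing in for the delay line */
} TempoHistogram;

static TempoHistogram tempo_hist;  /* zero-initialized, like a cleared buffer~ */

/* Apply every decrement whose delay has elapsed; returns how many remain pending.
   Because `now` only moves forward, due times are in order and a FIFO suffices. */
static int tempo_advance(TempoHistogram *t, long now) {
    while (t->count > 0 && t->due[t->head] <= now) {
        t->bins[t->bin[t->head]] -= 1;
        t->head = (t->head + 1) % QUEUE_CAP;
        t->count -= 1;
    }
    return t->count;
}

/* Count a new interval at time `now` and schedule its removal WINDOW ms later.
   Returns 0 if the interval is out of range or the queue is full. */
static int tempo_add(TempoHistogram *t, long now, int interval) {
    tempo_advance(t, now);
    if (interval <= 0 || interval >= MAX_INTERVAL || t->count == QUEUE_CAP) return 0;
    t->bins[interval] += 1;
    int tail = (t->head + t->count) % QUEUE_CAP;
    t->due[tail] = now + WINDOW;
    t->bin[tail] = interval;
    t->count += 1;
    return 1;
}

/* Port of the main gen's plateau search: the center of the first contiguous
   run of bins at the highest count is taken as the mode beat interval. */
static double tempo_mode(const TempoHistogram *t) {
    int summit = 0, width = 0, edge = 0, plateauing = 0;
    for (int i = 0; i < MAX_INTERVAL; i++) {
        int level = t->bins[i];
        if (level > summit) {          /* new highest point: start a plateau */
            summit = level;
            width = 1;
            edge = i;
            plateauing = 1;
        } else if (level == summit) {  /* flat: widen only if still on the plateau */
            if (plateauing) width += 1;
        } else {                       /* dropped below the summit: plateau over */
            plateauing = 0;
        }
    }
    return edge + width / 2.0;         /* same (width / 2) + edge convention */
}
```

Adding intervals of 500, 500 and 501 ms at times 0, 100 and 200 gives a mode of 500.5 (following the codebox's `(width / 2) + edge` convention), and by time 8200 all three have been decremented away again.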

And here are the guts of the patch, the contents of the main gen:

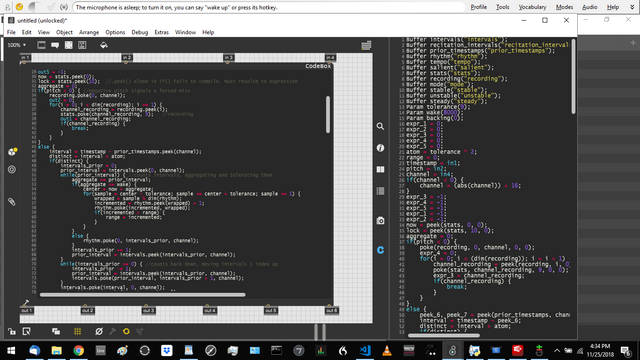

using a codebox object allows for text-based coding in a simple language called GenExpr; however, this text still does not appear anywhere in the diff, so what you see above in part is provided in full below

```
Buffer intervals("intervals");
Buffer recitation_intervals("recitation_intervals");
Buffer prior_timestamps("prior_timestamps");
Buffer rhythm("rhythm");
Buffer tempo("tempo");
Buffer salient("salient");
Buffer stats("stats");
Buffer recording("recording");
Buffer mode("mode");
Buffer stable("stable");
Buffer unstable("unstable");
Buffer steady("steady");

Param tolerance(9);
Param wake(8000);
Param backing(0);

atom = tolerance * 2;
range = 0;

timestamp = in1;
pitch = in2;
velocity = in3;
channel = in4;

if(channel < 0) { //recitation takes slots 17-32
    channel = abs(channel) + 16;
}

out1 = -1;
out2 = -1;
out3 = -1;
out4 = -1;
out5 = -1;

now = stats.peek(0);
lock = stats.peek(10); //.peek() alone in if() fails to compile. Must resolve to expression
aggregate = 0;

if(pitch < 0) { //negative pitch signals a forced miss
    recording.poke(0, channel);
    out2 = 0;
    for(i = 0; i < dim(recording); i += 1) {
        channel_recording = recording.peek(i);
        stats.poke(channel_recording, 9); //recording
        out1 = channel_recording;
        if(channel_recording) {
            break;
        }
    }
}
else {
    interval = timestamp - prior_timestamps.peek(channel);
    distinct = interval > atom;
    if(distinct) {
        intervals_prior = 0;
        prior_interval = intervals.peek(0, channel);
        while(prior_interval) { //counts intervals, aggregating and tolerating them
            aggregate += prior_interval;
            if(aggregate <= wake) {
                center = now + aggregate;
                for(sample = center - tolerance; sample <= center + tolerance; sample += 1) {
                    wrapped = sample % dim(rhythm);
                    incremented = rhythm.peek(wrapped) + 1;
                    rhythm.poke(incremented, wrapped);
                    if(incremented > range) {
                        range = incremented;
                    }
                }
            }
            else {
                rhythm.poke(0, intervals_prior, channel);
            }
            intervals_prior += 1;
            prior_interval = intervals.peek(intervals_prior, channel);
        }
        while(intervals_prior >= 0) { //counts back down, moving intervals 1 index up
            intervals_prior -= 1;
            prior_interval = intervals.peek(intervals_prior, channel);
            intervals.poke(prior_interval, intervals_prior + 1, channel);
        }
        intervals.poke(interval, 0, channel);
        prior_timestamps.poke(timestamp, channel);
        if(channel < 16) { //exclude recitation (0-based)
            if(!stats.peek(10)) { //if unlocked; not strictly necessary, but saves a lot of computation
                //calculate tempo
                /*
                with tempo updated by a separate gen~, its current highest point must be found. Its keys are
                traversed from low to high and the center of the highest "plateau" in their values is found.
                This is the mode beat interval.
                */
                summit = 0;
                width = 0;
                edge = 0;
                plateauing = 0;
                backstep = 0;
                for(i = 0; i < wake; i += 1) {
                    eyelevel = tempo.peek(i);
                    if(eyelevel > summit) {
                        summit = eyelevel;
                        width = 1;
                        edge = i;
                        plateauing = 1;
                    }
                    else if(eyelevel == summit && i - backstep == 1) {
                        if(plateauing) {
                            width += 1;
                        }
                    }
                    else {
                        plateauing = 0;
                    }
                    backstep = i;
                }
                center = (width / 2) + edge;
                /*
                next, the "stability" of the user's playing is assessed. The mode beat interval must remain
                the same, otherwise it will be considered "unstable". The timestamp of the last unstable moment
                is stored by channel in unstable. If instability is determined, the playing will also be
                considered "unsteady" (see below).
                */
                beat = center + tolerance;
                if(abs(center - mode.peek(channel)) > tolerance) { //unstable because the mode beat changed too drastically
                    unstable.poke(timestamp, channel);
                    steady.poke(0, channel);
                }
                mode.poke(center, channel);
                if(steady.peek(channel) < 3 && (timestamp - stable.peek(channel) > beat || interval > beat)) {
                    unstable.poke(timestamp, channel); //unstable because it went for longer than a beat without maintaining
                    steady.poke(0, channel); //stability (or there was no input for over a beat)
                }
            }
            /*
            the essential calculation made by the script is determining the "likelihood" of a played note,
            essentially whether it is a "hit" or "miss". Similarly to the way tempo was traversed in search of
            a high point, a smaller range in rhythm is scanned. The differences are that only the necessary
            span on either side of the incoming timestamp is searched and rather than determining what its
            high point is, the purpose is simply to determine whether or not a peak exists there. A peak is
            considered found if the values first go up and then also go down within the range. If so, the
            user's playing is considered both stable and steady. The number of successive "hits" is
            documented by steady.
            */
            likelihood = 0;
            home = timestamp - atom;
            hindfoot = rhythm.peek(home % dim(rhythm));
            width = 0;
            edge = 0;
            plateauing = 0;
            for(i = home; i <= timestamp + atom; i += 1) {
                forefoot = rhythm.peek(i % dim(rhythm));
                salient.poke(forefoot, i - home);
                if(forefoot > hindfoot) {
                    width = 1;
                    edge = i;
                    plateauing = 1;
                }
                else if(plateauing) {
                    if(forefoot == hindfoot) {
                        width += 1;
                    }
                    else if(forefoot < hindfoot) {
                        if(abs(timestamp - ((width / 2) + edge)) <= tolerance) {
                            likelihood = 1;
                            //break; //disabled to graph entire window
                        }
                        else {
                            plateauing = 0;
                        }
                    }
                }
                hindfoot = forefoot;
            }
            if(likelihood) {
                stable.poke(timestamp, channel); //could go before break if it wasn't disabled
                steady.poke(steady.peek(channel) + 1, channel);
            }
            /*
            if the user's playing stays "steady" for long enough, the mode beat interval locks in as the
            click track and the song actually begins.
            */
            if(steady.peek(channel) > 3) { //3 is arbitrary: minimum number constituting a pattern
                stats.poke(1, 10); //lock
                out3 = 1;
                lock = 1;
            }
            if(lock) { //if locked
                out2 = likelihood;
                recording.poke(likelihood, channel);
                /*
                If any channel currently has likelihood 1, overall recording is 1
                */
                for(i = 0; i < dim(recording); i += 1) {
                    channel_recording = recording.peek(i);
                    stats.poke(channel_recording, 9); //recording
                    out1 = channel_recording;
                    if(channel_recording) {
                        break;
                    }
                }
                click = stats.peek(6);
                if(!click) {
                    if(backing) {
                        //comparison = backing;
                        stats.poke(backing, 6);
                        out5 = backing;
                    }
                    else {
                        //comparison = mode.peek(channel);
                        stats.poke(mode.peek(channel), 6);
                        out5 = mode.peek(channel);
                    }
                }
                out4 = 1; //tempo disabled currently
            }
        }
    }
}

out6 = range;
```
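Since GenExpr won't be familiar to everyone, here is a hypothetical plain-C sketch of the two central steps of the listing above. It is simplified to a single channel with integer millisecond timestamps, uses a fixed-length circular `rhythm` array in place of the buffer~ (`RHYTHM_SIZE` is an assumption), and is not the C that gen actually generates. `project_intervals` mirrors the first `while` loop, with `now` playing the role of `stats.peek(0)`, and `is_hit` mirrors the likelihood scan.

```c
#define RHYTHM_SIZE 16384  /* hypothetical length of the circular rhythm buffer, in ms */
#define TOLERANCE 9        /* mirrors Param tolerance(9) */
#define WAKE 8000          /* mirrors Param wake(8000) */

static int rhythm[RHYTHM_SIZE];  /* expectation curve: how many projections land on each ms */

/* Wrap any (possibly negative) position into the circular buffer. */
static int wrap(long i) {
    long m = i % RHYTHM_SIZE;
    return (int)(m < 0 ? m + RHYTHM_SIZE : m);
}

/* intervals[] holds a channel's past inter-onset intervals, newest first,
   terminated by 0. Their running sums are projected forward from `now`, and
   each projected onset increments rhythm over +/- TOLERANCE. Stops at the
   first sum beyond WAKE (the listing keeps walking the list instead).
   Returns the highest count written, i.e. the listing's `range`. */
static int project_intervals(long now, const int *intervals) {
    int range = 0;
    long aggregate = 0;
    for (int k = 0; intervals[k] != 0; k++) {
        aggregate += intervals[k];
        if (aggregate > WAKE) break;
        long center = now + aggregate;
        for (long s = center - TOLERANCE; s <= center + TOLERANCE; s++) {
            int w = wrap(s);
            rhythm[w] += 1;
            if (rhythm[w] > range) range = rhythm[w];
        }
    }
    return range;
}

/* Walk rhythm over timestamp +/- 2*TOLERANCE (the listing's `atom`). A rise
   starts a candidate plateau, equal values widen it and a fall closes it; the
   note is a hit if that plateau's center lies within TOLERANCE of timestamp. */
static int is_hit(long timestamp) {
    const long atom = 2 * TOLERANCE;
    long home = timestamp - atom;
    int hindfoot = rhythm[wrap(home)];
    long edge = 0;
    int width = 0, plateauing = 0;
    for (long i = home; i <= timestamp + atom; i++) {
        int forefoot = rhythm[wrap(i)];
        if (forefoot > hindfoot) {
            width = 1;
            edge = i;
            plateauing = 1;
        } else if (plateauing) {
            if (forefoot == hindfoot) {
                width += 1;
            } else {  /* forefoot < hindfoot: the peak has closed */
                double offset = (double)timestamp - (edge + width / 2.0);
                if (offset < 0) offset = -offset;
                if (offset <= TOLERANCE) return 1;
                plateauing = 0;
            }
        }
        hindfoot = forefoot;
    }
    return 0;
}
```

Projecting two remembered 500 ms intervals from `now = 1000` raises peaks centered near 1500 and 2000, after which `is_hit(1500)` returns 1 and `is_hit(1530)` returns 0. One design difference: the listing keeps scanning after a hit so the whole window can be copied into `salient` for graphing, whereas the sketch returns early because only the yes/no answer is needed.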

The upgrade to Max 8 was not without its bugs (addressed here). Why can't anything ever actually be backwards-compatible?
