COLOR GRADIENTS ON TRACKS AND SPANS FLASH IN TIME WITH THE AUDIO BEING PLAYED: a new feature for the open-source project The Amanuensis: Automated Songwriting and Recording

logo by @camiloferrua

Repository

https://github.com/to-the-sun/amanuensis

The Amanuensis is an automated songwriting and recording system aimed at ridding the process of anything left-brained, so one need never leave a creative, spontaneous and improvisational state of mind, from the inception of the song until its final master. The program will construct a cohesive song structure, using the best of what you give it, looping around you and growing in real-time as you play. All you have to do is jam and fully written songs will flow out behind you wherever you go.

If you're interested in trying it out, please get a hold of me! Playtesters wanted!

New Features

- What feature(s) did you add?

The renovated timeline GUI, written using OpenGL, has been a huge upgrade. It runs light as a feather and the mouse-oriented editing features really streamline the workflow. However, it still lacks some of the informational density I imagine it could have. This update takes one step in that direction by introducing dynamic color gradients for both tracks and spans, which flash in time with the monitoring and playback amplitudes, respectively. Watch the following video to see what I mean.

Although it may be difficult to parse out in this crazy little demo song, you can see that track number 2 flashes in the background as I'm actively laying down a new layer of beat boxing over the already-recorded material.

Because the new timeline displays track and instrument information separately, it used to be hard to tell exactly which track you were playing into until spans started recording. Now it's easy to see at a glance, even when multiple people are playing at once, because the continuous amplitude changes show which track corresponds to the instrument and noises you are making yourself.

In the same manner, it could previously be difficult to know which track was playing which instrument (and therefore which track you might want to start playing into yourself) just by listening and looking at the spans in a complex song. Now it's much easier to tell, as you can match the exact amplitude signatures with what you are listening for.

- How did you implement it/them?

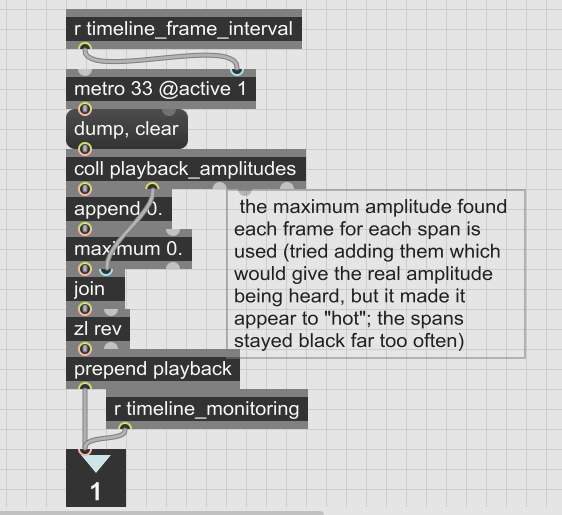

If you're not familiar, Max is a visual language, and textual representations like those shown for each commit on GitHub aren't particularly comprehensible to humans. You won't find any of the commenting there either. Therefore, I will present the completed work using images instead. Read the comments in those to get an idea of how the code works. I'll keep my description here focused on the process of writing that code.

These are the primary commits involved:

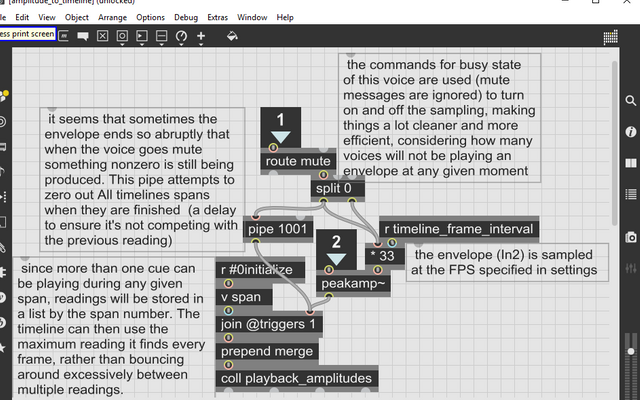

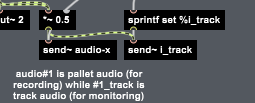

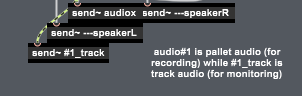

Audio readings had to be gathered from 4 separate places: the cues being played by polyplaybars~.maxpat and the monitoring for synths, samples and straight audio inputs. It then took some trial and error to figure out how best to convert the readings to color values so that the display was smooth, intuitive, the proper darkness, etc. See the commenting in the following screenshots for more detail on the various issues I ran into.

the new amplitude_to_timeline subpatcher in polyplaybars~.maxpat, complete with commenting

audio is now routed from polyplay~.maxpat (drum samples) by track, see commenting

audio is now routed from vsti.maxpat (synths) by track, see commenting

the new combined_monitoring subpatcher in sound.maxpat, complete with commenting

the new amplitudes_to_timeline subpatcher in timeline.maxpat, complete with commenting
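The real conversion from amplitude readings to color values lives in the Max subpatchers pictured above, so it cannot be reproduced here as text. As a rough illustration of the kind of smoothing such a display tends to need, here is a minimal JavaScript sketch. The name `makeEnvelope` and the `decayPerFrame` parameter are hypothetical and not part of the Amanuensis code; the approach (jump up immediately, fall off gradually) is an assumption about one way to avoid flicker, not a description of what the patchers do.

```javascript
// Hypothetical helper, not taken from the Amanuensis patchers.
// It follows the amplitude upward instantly and lets it fall off gradually,
// so a brightness derived from it does not flicker between frames.
function makeEnvelope(decayPerFrame) {
    var level = 0.0;
    return function (amplitude) {
        // Clamp the raw reading to the 0..1 range used for color values.
        var clamped = Math.min(1.0, Math.max(0.0, amplitude));
        // Rise immediately on a louder reading, otherwise decay toward zero.
        level = Math.max(clamped, level * decayPerFrame);
        return level;
    };
}

var follow = makeEnvelope(0.5);
follow(1.0); // 1.0
follow(0.0); // 0.5
follow(0.0); // 0.25
```

A decay factor closer to 1.0 gives a slower fade; a factor of 0 reduces the helper to simple clamping.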

In the process of determining what looked best and ran the smoothest, I found it easiest to implement a new "timeline FPS" setting in the settings menu, since this rate was now being used in multiple places throughout the program and not just in displaying the timeline itself. Adjustments can now be easily made in the menu and the results seen immediately.

the settings menu (notice the new "fps frame rate for timeline gui" option)
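To make the relationship between a frame rate setting and the timing it drives concrete, here is a small sketch. The function name `fpsToIntervalMs` is hypothetical; the post does not show how the setting is consumed inside the patchers, so this only illustrates the arithmetic a timer-driven display would rely on.

```javascript
// Hypothetical sketch: converts a frames-per-second setting into the
// millisecond interval that a timer-driven update would use.
function fpsToIntervalMs(fps) {
    if (!(fps > 0)) {
        throw new Error("fps must be a positive number");
    }
    return 1000 / fps;
}

fpsToIntervalMs(25); // 40
fpsToIntervalMs(50); // 20
```

Keeping the rate in one setting means that every consumer derives its interval from the same number, which is the convenience described above.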

Then comes the actual timelineGL.js code itself. First I thought I might be able to alter the color of tracks by referring to individual cells of the jit.gl.gridshape which constitutes the background of the timeline, showing the 16 separate tracks. Unfortunately this is not possible, so I wound up creating 16 new objects at the appropriate positions.

    for (var i = 1; i <= tracks; i++) { // create 16 individual track backgrounds
        track_fill[i] = new JitterObject("jit.gl.gridshape", "ListenWindow");
        track_fill[i].shape = "plane";
        track_fill[i].blend_enable = 1;
        track_fill[i].dim = [2, 2];
        track_fill[i].layer = -1;
        track_fill[i].enable = 1;
        track_fill[i].scale = [3, track_height]; // 3 is simply a number that causes the full track to be filled
        track_fill[i].position = [-1.2, 2 * (tracks - (i - 0.5)) / tracks - 1]; // -1.2 also causes correct positioning (don't fully understand why)
        track_fill[i].color = [0.0, 0.0, 0.0, 0.0]; // fully transparent black until amplitudes arrive
    }
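The vertical position formula in the loop above is easier to follow with numbers plugged in. The sketch below isolates it in a hypothetical function, `trackCenterY`, which is not part of timelineGL.js. It assumes the view spans -1 to 1 vertically, which is what the formula implies, since track 1 lands just below the top and the last track lands just above the bottom.

```javascript
// Hypothetical helper isolating the y-position formula from the loop above.
// Tracks are numbered from 1 at the top; the result is the vertical center
// of track i in a view assumed to run from -1 (bottom) to 1 (top).
function trackCenterY(i, tracks) {
    return 2 * (tracks - (i - 0.5)) / tracks - 1;
}

trackCenterY(1, 16);  // 0.9375  (top track)
trackCenterY(16, 16); // -0.9375 (bottom track)
trackCenterY(1, 16) - trackCenterY(2, 16); // 0.125, the spacing between neighbors
```

With 16 tracks the centers are 2/16 = 0.125 apart, so each background plane needs that much height to tile the view without gaps.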

The actual functions that alter the coloration are here:

    function monitoring(track, amplitude) { // alter color gradients of tracks
        track_fill[track].color = [0.0, 0.0, 0.0, amplitude]; // opacity of black is modified to reveal lighter color beneath
    }

    function playback(span, amplitude) { // alter color gradients of spans
        //post(spans_by_id[span], amplitude, "\n");
        var a = 1.0 - amplitude; // since 1.0 is white and zero is black, amplitudes must be inverted
        Spanfill[spans_by_id[span]].color = [beige[0] * a, beige[1] * a, beige[2] * a, 1.0]; // span number is different than id in the JS
    }
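The span coloring in `playback` can be tried outside Max as a pure function. In this sketch, `spanColor` is a hypothetical name, the clamping step is an addition that the original code does not have, and the base color passed in is a made-up value rather than the actual `beige` used in timelineGL.js.

```javascript
// Hypothetical, Max-free version of the span color calculation.
// base is an [r, g, b] array; amplitude is expected in the range 0..1.
function spanColor(base, amplitude) {
    // Clamping is an added safeguard, not part of the original function.
    var clamped = Math.min(1.0, Math.max(0.0, amplitude));
    // Invert so that louder audio gives a darker color.
    var a = 1.0 - clamped;
    return [base[0] * a, base[1] * a, base[2] * a, 1.0];
}

var exampleBase = [1.0, 0.5, 0.25]; // made-up color for illustration
spanColor(exampleBase, 0.0); // [1, 0.5, 0.25, 1]  silent: base color
spanColor(exampleBase, 0.5); // [0.5, 0.25, 0.125, 1]
spanColor(exampleBase, 1.0); // [0, 0, 0, 1]  full amplitude: black
```

Scaling all three channels by the same factor darkens the color without shifting its hue, which is why the span still reads as the same color while it flashes.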

As mentioned in the comments above, the numbering of the spans in Max is not the same as the numbering the JavaScript uses. The JavaScript keeps track of them in its dict simply by number, in the order of their creation. To work around this, a new Array needed to be created so I could reference them easily by their actual number (referred to in the JavaScript as their IDs). The following line of code was added to the function that reassesses the spans every time there is a change to them:

spans_by_id[d.get(active_seq+"::spans::sp"+sp+"::ID")] = sp;
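The idea behind that line is a reverse lookup from ID to creation-order number. Here is a minimal sketch of the same idea using a plain array in place of the Max dict. The function name `indexSpansById` and the shape of the `spans` array are assumptions made for illustration, and the array is zero-based, which may differ from how spans are numbered in the real dict.

```javascript
// Hypothetical stand-in for the dict: each entry's array position is its
// creation-order number, and its ID field is the number Max uses for it.
function indexSpansById(spans) {
    var byId = {};
    for (var sp = 0; sp < spans.length; sp++) {
        byId[spans[sp].ID] = sp; // same mapping direction as spans_by_id
    }
    return byId;
}

var lookup = indexSpansById([{ ID: 7 }, { ID: 3 }, { ID: 12 }]);
lookup[7];  // 0
lookup[3];  // 1
lookup[12]; // 2
```

Rebuilding the lookup whenever the spans change, as the original does, keeps it from pointing at stale positions after a span is added or removed.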

Those are most of the major changes, although there are certainly plenty of other small things, like the "gutter" on the top and bottom of the spans being enlarged to reveal more of the flashing track beneath and the disabling of the color changes of spans upon mouseover.

Thank you very much for your contribution.

- timelineGL.js: as I understand, you are modifying somebody else's code, so it would be nice to have a list of changes in the header comment.
- for(i = 1; i <= tracks; i++) { is a code smell: you forgot to put var or let, which could make i a global variable. The same goes for the variable a in line 737, which is just bad.
- new Array() could be shortened as [];

Your contribution has been evaluated according to Utopian policies and guidelines, as well as a predefined set of questions pertaining to the category.


Okay, thanks for all the tips. If you want to test it out, please do!

Thank you for your review, @justyy! Keep up the good work!

Hi @to-the-sun!

Your post was upvoted by @steem-ua, new Steem dApp, using UserAuthority for algorithmic post curation!

Your post is eligible for our upvote, thanks to our collaboration with @utopian-io!

Feel free to join our @steem-ua Discord server

Hey, @to-the-sun!

Thanks for contributing on Utopian.

We’re already looking forward to your next contribution!
