A brief history of machine learning

Long before artificial intelligence became a field, scientists such as Blaise Pascal and Gottfried Wilhelm Leibniz pondered how to build a machine as intelligent as a human. Famous storytellers such as Jules Verne, Frank Baum (The Wizard of Oz), Mary Shelley (Frankenstein) and George Lucas (Star Wars) imagined beings that behave like humans and can even defeat them in different settings.

Machine learning is one of the most important branches of artificial intelligence and a very active topic in both research and industry. Companies and universities invest substantial resources to advance it. Recently, the field has achieved impressive results on many different tasks, comparable to or better than human performance. In traffic sign recognition, for example, a machine learning system reached 98.98% accuracy, surpassing humans.

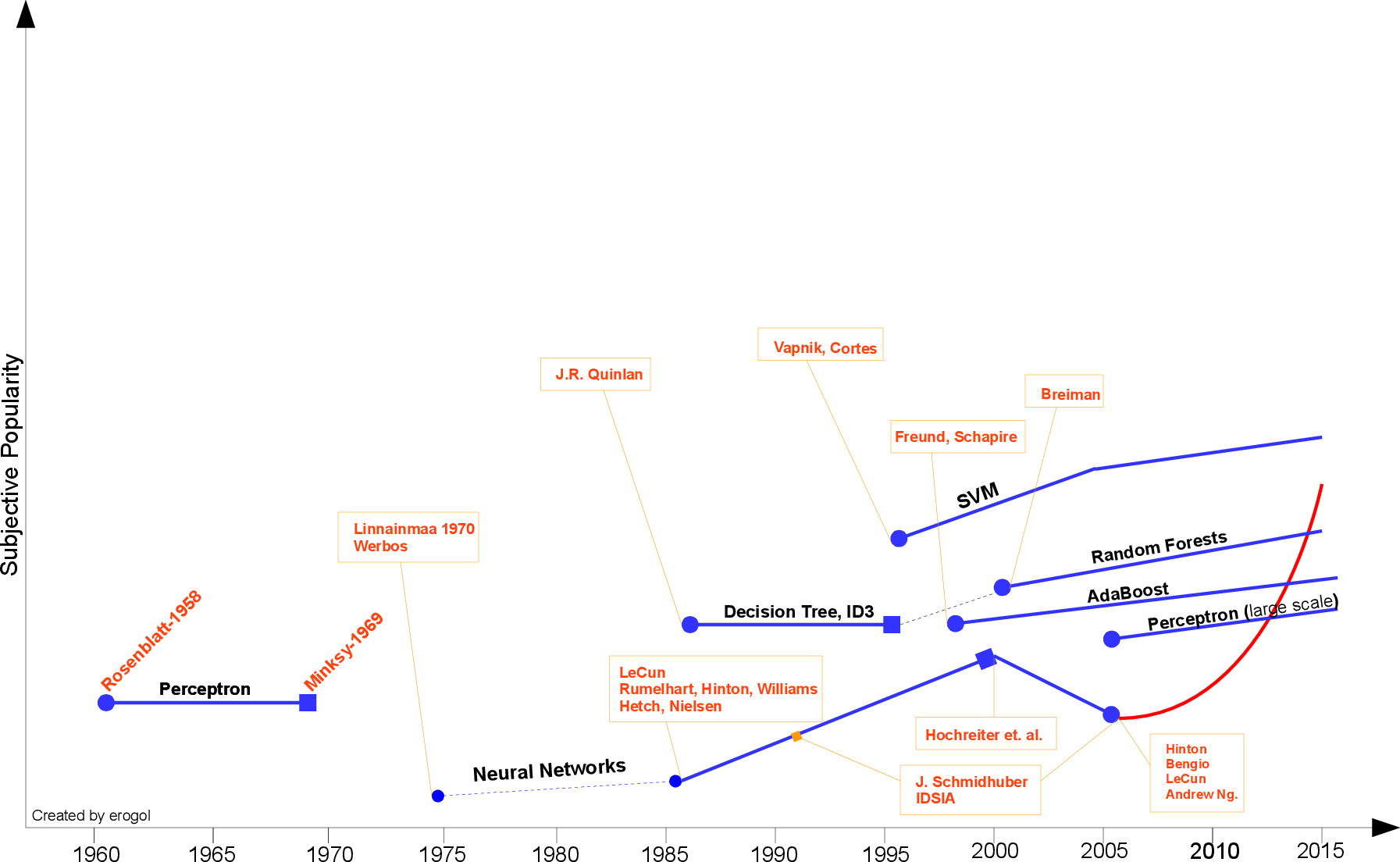

Here I would like to share a rough timeline of machine learning development and mark some of its milestones; it is not complete. Please also read every statement in this article as if it were prefixed with "as far as I know".

The first widely influential step toward machine learning came from Hebb, who in 1949 introduced a neuropsychological theory of learning now known as Hebbian learning. Put simply, it describes how correlations between the nodes of a recurrent neural network (RNN) are learned: the network records what its nodes have in common and later works like a memory. The idea is formally stated as follows:
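Hebb's rule is often paraphrased as a weight update proportional to the product of the activities of two connected units, Δw_ij = η·x_i·x_j. The minimal Python sketch below illustrates the "works like a memory" behaviour described above. It assumes a small fully connected recurrent network of ±1 units whose weights are set by that outer-product rule, which is the later Hopfield-style formalization rather than Hebb's own 1949 wording; the function names `hebbian_weights` and `recall` are hypothetical.

```python
import numpy as np

def hebbian_weights(patterns):
    """Hebbian rule: the weight between two units grows when they are active together."""
    n_units = patterns.shape[1]
    W = np.zeros((n_units, n_units))
    for p in patterns:
        W += np.outer(p, p)      # +1 where two units agree in sign, -1 where they disagree
    np.fill_diagonal(W, 0)       # no self-connections
    return W / len(patterns)

def recall(W, state, steps=5):
    """Let the recurrent network settle by updating every unit from its weighted input."""
    s = state.copy()
    for _ in range(steps):
        s = np.where(W @ s >= 0, 1, -1)
    return s

stored = np.array([[1, -1, 1, -1, 1, -1]])   # one memorized pattern of bipolar units
noisy = stored[0].copy()
noisy[0] = -noisy[0]                         # corrupt one unit
W = hebbian_weights(stored)
print(recall(W, noisy))                      # settles back to the stored pattern
```

Because the weights only encode which units tend to agree, flipping one unit of the stored pattern is undone after a single update: the other units "vote" the corrupted unit back to its remembered value.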

In 1952, Arthur Samuel at IBM developed a program that played checkers. The program could learn an implicit model from the positions of the pieces and use it to choose good next moves. Samuel played many games against the program and observed that it played better after a period of learning.

Samuel used the program to refute the claim that a machine cannot go beyond the code written for it and learn patterns the way humans do. He coined the term "machine learning" and defined it as:

In 1957, Rosenblatt, again drawing on neuroscience, proposed the second machine learning model, the perceptron, which is much closer to today's machine learning models. At the time this was a very exciting discovery, and it was easier to put into practice than Hebb's idea. Rosenblatt described the perceptron in a few words:
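Rosenblatt's perceptron learning rule can be sketched in a few lines of Python. This is a minimal version, assuming 0/1 labels, a hard threshold at zero, a learning rate of 1 and the logical AND function as a toy dataset; `train_perceptron` and `predict` are hypothetical names, not from any library. The weights change only when a sample is misclassified, by (target - prediction) times the input.

```python
import numpy as np

def train_perceptron(X, y, lr=1.0, max_epochs=100):
    """Perceptron learning rule; labels y must be 0 or 1."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for xi, target in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0   # hard threshold unit
            update = lr * (target - pred)       # zero when the prediction is correct
            w += update * xi
            b += update
            errors += int(update != 0)
        if errors == 0:                         # every sample classified correctly
            break
    return w, b

def predict(w, b, X):
    return (X @ w + b > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])                  # logical AND
w, b = train_perceptron(X, y_and)
print(predict(w, b, X), w, b)
```

On AND, which is linearly separable, the loop stops after a few epochs once every sample is classified correctly; the perceptron convergence theorem guarantees this for any linearly separable data.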

Three years later, in 1960, the delta learning rule introduced by Widrow [4] entered the history of machine learning and was quickly adopted as a practical procedure for training perceptrons. It is also known as the least mean squares (LMS) rule, because it minimizes the squared error of the output. Combining these two ideas yields a good linear classifier. However, the enthusiasm for the perceptron was cooled by Minsky [3] in 1969. He highlighted the famous XOR problem: a perceptron cannot classify data that are not linearly separable. This was Minsky's rebuttal to the neural network community. After that, neural network research stalled and did not reach a breakthrough until the 1980s.
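The delta rule differs from the perceptron rule in where the error is measured: on the raw linear output rather than on the thresholded prediction, which makes each update a gradient step on the squared error. The sketch below uses the batch (averaged) form of the Widrow-Hoff rule for simplicity, with ±1 targets, the bias folded in as an extra constant input, and hypothetical helper names (`add_bias`, `train_delta`, `classify`). Training the same linear unit on AND and on XOR illustrates the limitation Minsky pointed out.

```python
import numpy as np

def add_bias(X):
    """Append a constant-1 column so the bias is learned as an ordinary weight."""
    return np.hstack([X, np.ones((len(X), 1))])

def train_delta(X, t, lr=0.5, epochs=500):
    """Batch delta (Widrow-Hoff / LMS) rule on a single linear unit."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        error = t - Xb @ w                      # error on the linear output, before thresholding
        w += lr * (Xb.T @ error) / len(Xb)      # w += lr * error * x, averaged over samples
    return w

def classify(w, X):
    """Threshold the linear output only when making a decision."""
    return np.where(add_bias(X) @ w >= 0, 1, -1)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
t_and = np.array([-1, -1, -1, 1], dtype=float)  # linearly separable
t_xor = np.array([-1, 1, 1, -1], dtype=float)   # not linearly separable

w_and = train_delta(X, t_and)
w_xor = train_delta(X, t_xor)
print("AND:", classify(w_and, X), "weights:", w_and.round(3))
print("XOR:", classify(w_xor, X), "weights:", w_xor.round(3))
```

For AND the weights converge to about (1, 1, -1.5), the least-squares solution, which thresholds to the correct labels. For XOR the gradient is exactly zero from the start, because the best linear fit to XOR is the constant 0; no linear threshold can separate the two classes, so the output cannot reproduce the labels.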
