[Analysis] Does Money Vote For Money?

A billion here, a billion there; pretty soon you're talking about real money.

(Better formatted version available, as ever, on GitHub.)

During one of the discussions about the architecture and distribution of Reputation on the platform, @abh12345 said:

[However, I would be keen on a Vested Steem Power analysis of voting - does money vote for money?](https://steemit.com/utopian-io/@abh12345/steemit-steem-reputation-voting-analysis#@abh12345/re-lextenebris-re-abh12345-steemit-steem-reputation-voting-analysis-20180306t185234133z)

Which is a really good question, as far as it goes, and I'm thinking it's one that deserves a little poking at.

What we're going to do is to dig, aggressively, into the accounts that make up the moderate-to-high echelon of Reputation on the steem blockchain, try and get a handle on how often they send their votes within the group as opposed to outside it, and get a feel for what that support is shaped like. And I'm going to show you, step-by-step, how you can do it yourself and dig as much further as your heart may desire.

Terms and Conditions

We have to start looking into this by defining more of what we're talking about. Is it just the general question of whether accounts with extremely high SP tend to restrict their votes to other accounts with extremely high SP? I've already done the analysis for something like that, and the answer was, surprisingly, that most of the votes cast by the top 200 were going out of the network, and not into it.

But that says nothing about Reputation, which is an entirely orthogonal measure of account value. Accounts with high Reputation must have been upvoted by powerful accounts, at considerable effort and over a considerable stretch of time.

I don't really understand what Reputation even looks like on the platform in terms of distribution, so that's the place to start.

Building Your Rep

# Setting up the imports for our basic query tools

from pprint import pprint

from steemdata import SteemData

import datetime

from datetime import datetime as dt

import math

import pandas as pd

import bokeh

import bokeh.plotting as bplt

# Init connection to database

db = SteemData()

# Just a list of all accounts with Rep over 30

query = {'reputation': {'$gte': 30}}

proj = {'name': 1,

'reputation': 1,

'_id': 0}

%%time

result = list(db.Accounts.find(query,

projection=proj,

sort=[],

limit=10))

Wall time: 103 ms

len(result)

10

That seems a legitimate kind of number. Let's look inside.

result[:5]

[{'name': 'a-acehsteem', 'reputation': 1567944952},

{'name': 'a-aka-assassin', 'reputation': 1174954542},

{'name': 'a-ayman', 'reputation': 114463809},

{'name': 'a-b', 'reputation': 1998284910},

{'name': 'a-dars', 'reputation': 17769912}]

Reputation is stored as a big, big number. Which may not be what we're looking for. Let's dig around and see if someone else has calculated how to derive the displayed Reputation number rather than the raw value.

How reputation scores are calculated - the details explained with simple math

Lucky for us it's simple, I suppose. Effectively you throw it on a log 10 curve, fiddle around so that the bottom end is squashed a bit and moved up to 25, and generally mischief managed.

Let's write a function to do that, because that's the sensible thing.

The following code is a direct translation from JavaScript as found in both condenser and steem-js.

# See https://github.com/steemit/condenser/blob/ff705f7a413c64e513ecb7f1f6f66fee793a28c7/src/app/utils/ParsersAndFormatters.js

def steemLog10(Str):
    if Str == '0':
        return 0
    leadingDigits = int(Str[:4])
    pad = len(Str) - 1
    # This is not a perfect replication; the JS library has a constant for log[10]
    strLog = math.log(leadingDigits) / math.log(10)
    return pad + (strLog - int(strLog))

def steemRep2Rep(RawI):
    RepS = str(RawI)
    IsRepNeg = RawI < 0
    if IsRepNeg:
        # Drop the minus sign so only digits reach the string-based log
        RepS = RepS[1:]
    RepI = steemLog10(RepS)
    RepI = max(RepI - 9, 0)
    if IsRepNeg:
        RepI *= -1
    RepI = (RepI * 9) + 25
    return RepI

# And now, let's write it according to the stated intent in the comments

def rep2Rep(RawI):
    if RawI:
        if RawI > 0:
            return int(max((math.log(RawI, 10) - 9), 0) * 9) + 25
        else:
            return -int(max((math.log(-RawI, 10) - 9), 0) * 9) + 25
    else:
        # Because we arbitrarily center at 25
        return 25

rep2Rep(result[0]['reputation'])

26

This appears to work well enough for us. It does mean that we can't really query accounts for which ones have a minimum Reputation, not without doing some reverse engineering to find out what number would actually map to 30.

Maybe that would be worth doing.

Sometimes you go with what will get you the fastest answer, and when I need to do some sort of weird solve for a missing variable, I go to Wolfram-Alpha.

(I know, I know: there are plenty of tools that I could've used straight in Python, but they would've all taken more time to set up than simply going to a webpage. Also, this function as given to Wolfram Alpha isn't strictly correct. There is a weird bit regarding the absolute value of the Reputation as stored when it is a negative number that I didn't put in, but which has retroactively been worked into rep2Rep. See all the advantages you get when you read these things when they're done and not while I'm writing them?)

Still, this tells us the amount of in-database Reputation that will equate to a minimum level for figuring out who is at least Reputation 30.
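The same threshold can be recovered without leaving Python. What follows is a minimal sketch, assuming the display formula is exactly the one used in rep2Rep (base 10 logarithm, minus 9, times 9, plus 25). The name `min_raw_for_rep` is made up for this example and does not come from any Steem library.

```python
def min_raw_for_rep(display_rep):
    """Invert rep = (log10(raw) - 9) * 9 + 25 for positive raw values.

    Hypothetical helper: it assumes the display formula used in rep2Rep
    and only makes sense for display_rep >= 25.
    """
    return 10 ** ((display_rep - 25) / 9 + 9)

# Raw reputation needed to display as 30, truncated to an integer
threshold = int(min_raw_for_rep(30))
print(threshold)
```

Because rep2Rep truncates after multiplying by 9, raw values sitting right on the boundary can land on either side depending on floating point rounding, so treat the result as a cutoff to filter near rather than an exact edge.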

Let's plug that back in.

# Init connection to database

db = SteemData()

minRep = int(3.593813663804627302188167229038422636504 * 10**9)

minRep

3593813663

# Just a list of all accounts

query = {}

proj = {'name': 1,

'reputation': 1,

'_id': 0}

%%time

result = list(db.Accounts.find(query,

projection=proj,

sort=[]))

Wall time: 12.8 s

len(result)

788516

repCalc = [rep2Rep(int(e['reputation'])) for e in result]

len(repCalc)

788516

Data = pd.DataFrame({'name': [e['name'] for e in result],

'rep': [int(e['reputation']) for e in result],

'calcRep': [e for e in repCalc]

})

Data.sort_values('rep', ascending=False).head(3)

| | calcRep | name | rep |
|---|---|---|---|
| 513965 | 78 | steemsports | 913296035791209 |
| 293626 | 77 | knozaki2015 | 746658070499557 |
| 410136 | 77 | papa-pepper | 682990070870322 |

Data.sort_values('rep', ascending=False).tail(3)

| | calcRep | name | rep |
|---|---|---|---|
| 340682 | -15 | matrixdweller | -29377009310460 |
| 580751 | -16 | wang | -37765258368568 |
| 59937 | -17 | berniesanders | -53479962528315 |

Getting that list took a lot more gyration than expected – because the values for Reputation as stored in the database are so large, they change type at some point and stop being 64-bit integers and start being strings.

@furion , if you're listening, they probably ought to be stored as all strings or an even bigger int if possible. I would settle for strings, but one or the other should definitely happen. This mixed type solution is messy.
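For anyone following along, the mixed types can be flattened before doing any math. This is only a sketch of the idea behind the `int(e['reputation'])` calls above; `to_int_rep` is a hypothetical helper, the account names in the sample are invented, and treating missing values as 0 is my assumption rather than anything SteemData promises.

```python
def to_int_rep(value):
    """Coerce a stored reputation to int, whether it arrives as int or str.

    Hypothetical helper; None and empty strings are treated as 0 (an assumption).
    """
    if value is None or value == '':
        return 0
    return int(value)

# Made-up records imitating the mixed int/str storage described above
sample = [{'name': 'a', 'reputation': 1567944952},
          {'name': 'b', 'reputation': '913296035791209'},
          {'name': 'c', 'reputation': '-53479962528315'}]

raw_reps = [to_int_rep(e['reputation']) for e in sample]
print(raw_reps)
```

Python integers are arbitrary precision, so once the value is an int there is no overflow to worry about on this side of the database.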

Anyway, we have a list of all the accounts on the system sorted by reputation, and now we can actually do some stuff with it.

cData = Data[Data['calcRep'] >= 40].sort_values('calcRep', ascending=False)

nData = cData.set_index('name')

nData.head()

| name | calcRep | rep |
|---|---|---|
| steemsports | 78 | 913296035791209 |
| gavvet | 77 | 660761030588799 |
| knozaki2015 | 77 | 746658070499557 |
| papa-pepper | 77 | 682990070870322 |
| ozchartart | 76 | 467589219907553 |

nData.tail()

| name | calcRep | rep |
|---|---|---|
| chadwickthecrab | 40 | 58978979429 |
| yudiananda | 40 | 50125948491 |
| mahmut | 40 | 47287237996 |
| mahmoodhassan | 40 | 56958995698 |
| yohamartinez | 40 | 48050862479 |

len(nData)

55739

That is a much lower number than I expected to see. In fact, I'm starting to wonder if poking around in this part of the world doesn't invalidate a lot of the things we've been told about the number of live accounts on the platform. It's just over 55,000 accounts with a reputation of "you've at least done something to create content", covering the entire life of the platform.

That is not a great thing.

But before we go there, we need to talk about something you've already seen the evidence of up above…

Is JavaScript the Devil??

Look, I know it's been a long time since I had to maintain enterprise-class code. In the intervening years I may have become more cynical, more bitter, and even better at doing code analysis than I was in the heady years of my youth.

Which I deeply disliked, for the record. Going through someone else's code is like going through their sewer pipe. It's not even as dignified as going through their garbage. I enjoy going through the garbage.

The condenser and steem-js versions of this code are bad. As in, "not good," and they're out there in production systems.

I invite you to go and look at the article I linked earlier. You'll notice that it lightly outlines the Reputation calculation and then cites a piece of code and shows a piece of code. There is some reference to "details left out."

Someone decided that the best way to implement a base 10 logarithm was not to use floats or integer approximations, but to work on a string: snip off the first four characters, convert them to an int, take the natural logarithm of that int and divide it by the natural logarithm of 10 (the smart part of this algorithm), then crush the pieces back together. The code counts on JavaScript's parseInt to strip the integer part of that logarithm, so that what is left is the decimal portion of the base 10 logarithm, which is then added to the string length minus one and passed back.

parseInt, by the way, really should be called with the base whenever you use it, because it has a weird tendency to try to guess the base: a string starting with 0x is read as hexadecimal, and older engines would read a string with a leading zero as octal.

The actual Reputation calculation maintains some of the calculation in an integer state and some in a string state, mashes things together, and hopes that parseInt can save the day again when they go to return the ultimate value.

This is bad code. I'm sure it leads to some bad outcomes. Luckily, it's tied to a presentation-only part of the system. I'm definitely certain the code elsewhere is fine.

I can almost, kind of, if I tilt my head to the side 90° and then squint really hard, see what they were trying to do. There is an attempt to keep from passing around large integers. Rather than simply read the value and immediately convert it to something useful like a 64-bit integer and doing the calculations sensibly in that domain, they decided to write their own base 10 logarithm code which is effectively just "tell me how long this string is -1", pass around a whole pile of strings, build and deconstruct strings, parse strings multiple times, and hit the stack on top of all of that.

Out of curiosity, pure curiosity, I decided to do a quick set of time comparisons of my proper version of the Reputation code, a pretty straightforward version of their Reputation code, and the dumbest possible implementation I could imagine which was just literally taking the length of an integer turned into a string and returning that as the base 10 logarithm.

Here's what that looks like.

%timeit -n 10 [rep2Rep(i) for i in range(1, 100000)][:5]

75 ms ± 681 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

%timeit -n 10 [steemRep2Rep(i) for i in range(1, 100000)][:5]

209 ms ± 3.54 ms per loop (mean ± std. dev. of 7 runs, 10 loops each)

%timeit -n 10 [len(str(i)) - 1 + 25 for i in range(1, 100000)][:5]

34.6 ms ± 529 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

I was impressed; it turns out that the JavaScript-like-equivalent only ran a third the speed of properly designed code. Though I could double the speed of my own if I replaced it with a purely naïve implementation. I'm not sure we would actually lose much accuracy in the presentation as a result.
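How much accuracy the digit-count shortcut would cost can be checked directly. The sketch below is my own comparison, not the condenser code: `display_rep` uses a real base 10 logarithm in the same way as rep2Rep, while `naive_rep` uses only the string length as the logarithm and then applies the same minus 9, times 9, plus 25 steps (the timed one-liner above skips those steps, so this is the fair version of it). Both function names are made up for this example.

```python
import math

def display_rep(raw):
    """Display Reputation via a real base-10 logarithm (same idea as rep2Rep)."""
    if raw == 0:
        return 25
    magnitude = int(max(math.log10(abs(raw)) - 9, 0) * 9)
    return magnitude + 25 if raw > 0 else -magnitude + 25

def naive_rep(raw):
    """Display Reputation using only the digit count as the logarithm."""
    if raw == 0:
        return 25
    magnitude = max(len(str(abs(raw))) - 1 - 9, 0) * 9
    return magnitude + 25 if raw > 0 else -magnitude + 25

# Raw values taken from the tables in this post
for raw in (913296035791209, 58978979429, -53479962528315):
    print(raw, display_rep(raw), naive_rep(raw))
```

The digit-count version throws away the fractional part of the logarithm before the multiply by 9, so it can sit up to 8 points closer to 25 than the real thing. On the three values above the gap is 8, 6, and 6 points, which is more than a presentation layer could quietly absorb.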

If you're curious where this stuff lives, be my guest and go gaze upon it with thine own eyes.

https://github.com/steemit/steem-js/blob/master/src/formatter.js

Now that's out of the way, let's check out what the distribution of Reputation looks like.

Eye on the Prize(s)

At a certain point you just have to roll up your sleeves, flex your fingers, and get ready to get your hands dirty. I have a sneaking suspicion of what this is going to look like, right up front, but we'll take a look at how things actually shake out.

nData.head()

| name | calcRep | rep |
|---|---|---|
| steemsports | 78 | 913296035791209 |
| gavvet | 77 | 660761030588799 |
| knozaki2015 | 77 | 746658070499557 |
| papa-pepper | 77 | 682990070870322 |
| ozchartart | 76 | 467589219907553 |

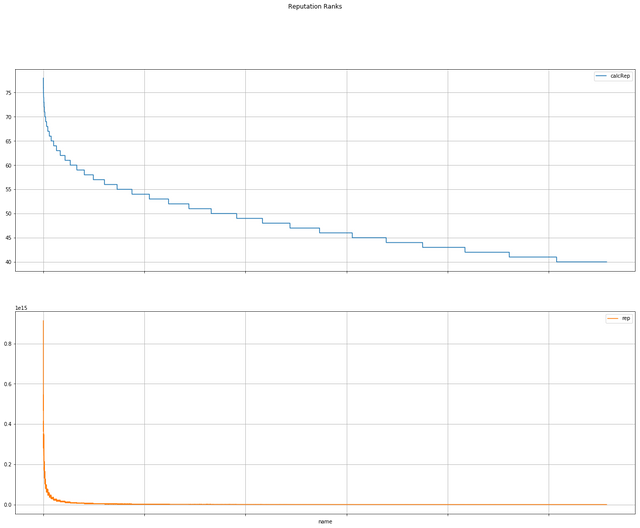

nData.plot(subplots=True,

figsize=(22, 18),

title='Reputation Ranks',

grid=True,

)

array([<matplotlib.axes._subplots.AxesSubplot object at 0x000001220C3478D0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000001220C3A1358>],

dtype=object)

Does this look familiar to you?

If you've been reading any of the analysis that I've been doing over the last few weeks, it should. It looks like every other distribution curve on Steemit. Every single one. The difference between the top and bottom is that the top plots the integer Reputation of all of the accounts with 40 Rep or more, and the lower curve is those exact same accounts with their raw reputation plotted.

Can you tell the immediate difference between the two?

If you've been following the functions I've been writing, you know – the calculated Reputation has an implicit logarithmic curve causing the vast slope of the actual underlying reputation to be somewhat masked. I've used logarithmic slopes before in showing SP distribution on the blockchain, and the results were exactly like this. It just lifted up the knee of the curve.

Is Reputation 40 high enough to count as "money," or should we take the limit even higher? Of course, as we raise our cutoff point, the population of people that we're talking about becomes very quickly much smaller. Let's redo this with Reputation 50+ and see where that puts us.

cData = Data[Data['calcRep'] >= 50].sort_values('calcRep', ascending=False)

nData = cData.set_index('name')

len(nData)

19129

nData.head()

| name | calcRep | rep |
|---|---|---|
| steemsports | 78 | 913296035791209 |
| papa-pepper | 77 | 682990070870322 |
| knozaki2015 | 77 | 746658070499557 |
| gavvet | 77 | 660761030588799 |
| krnel | 76 | 528772137201395 |

nData.tail()

| name | calcRep | rep |
|---|---|---|
| neesje | 50 | 738360211524 |
| ned-scott-videos | 50 | 629802131081 |
| tktk1023 | 50 | 732930735510 |
| neclatenekeci | 50 | 653698846700 |
| wefund | 50 | 680810981197 |

That takes us down to just over 19,000 of the users on the platform and probably puts us in the right place to start wondering if all of the votes from this group stay within the group or fall outside of it.

Of course, we need to figure out what the scope of the votes we're interested in actually is. All votes on the platform would be far too much data to crunch and not very interesting. The last week might be acceptable, so let's target that first.

Step one is querying the database for all of the votes which were done by any of the people in this list within the last week.

intL = nData.index.tolist()

# Init connection to database

db = SteemData()

# Let's put our accounts of interest here in a list. One day

# we might want to generalize to more than one.

intL = nData.index.tolist()

# We want only the vote transactions which have happened

# in the last week AND involve only our accounts of interest.

query = {

'type' : 'vote',

'timestamp' : {'$gte': dt.now() - datetime.timedelta(days=7)},

'$or': [{'voter': {'$in': intL}}

]}

proj = {'voter': 1, 'author': 1, 'weight': 1, 'permlink': 1,

'_id': 0}

%%time

result = db.Operations.find(query,

projection=proj)

votes = list(result)

Wall time: 1min 12s

votes[:5]

[{'author': 'appics',

'permlink': 're-claudiaz-re-appics-announcing-appics-or-the-next-generation-social-app-or-first-smart-media-token-20171018t233054132z',

'voter': 'sinner264',

'weight': 1000},

{'author': 'simone88',

'permlink': 'colored-drawing-mayor-mccheese',

'voter': 'biglipsmama',

'weight': 300},

{'author': 'novi',

'permlink': 'wanita-tangguh-b2e0e2732f87c',

'voter': 'good-karma',

'weight': 6},

{'author': 'edwardbernays',

'permlink': 'basic-needs',

'voter': 'youareyourowngod',

'weight': 10},

{'author': 'hninyu',

'permlink': 'grilled-shrimp-a3c0635fbd3cb',

'voter': 'cryptohustler',

'weight': 50}]

len(votes)

1913625

Almost 2 million votes in the last week from the accounts in this pile.

That is a lot of voting.

Worse, though, it's just too many edges to deal with. Maybe once we get this data into some sort of table that we can work with, structuring the output with some summaries and counts will cut things down, especially if there are a lot of commonalities.

What the Hell, let's give it a try.

vData = pd.DataFrame(votes)

vData.head()

| | author | permlink | voter | weight |
|---|---|---|---|---|
| 0 | appics | re-claudiaz-re-appics-announcing-appics-or-the... | sinner264 | 1000 |
| 1 | simone88 | colored-drawing-mayor-mccheese | biglipsmama | 300 |
| 2 | novi | wanita-tangguh-b2e0e2732f87c | good-karma | 6 |
| 3 | edwardbernays | basic-needs | youareyourowngod | 10 |
| 4 | hninyu | grilled-shrimp-a3c0635fbd3cb | cryptohustler | 50 |

veData = vData[vData['author'].isin(intL)]

len(veData)

1147353

len(veData) / len(vData)

0.5995704487556339

I suppose that we have an answer, really. Roughly 60% of the votes in the last week cast by accounts with a Reputation of 50 or more went to other accounts with a Reputation of 50 or more.

But maybe it's not as bad as it looks, right? I mean, maybe all of the votes going inside the group are tiny votes, and they tend to vote with heavier weight outside of the group. That should be relatively easy to find out. All we need to do is sum up the weights on all the votes inside and in total, and a quick divisor will let us know what the ratio is.

vSum = vData['weight'].sum()

vSum

7303280912

veSum = veData['weight'].sum()

veSum

5232713161

veSum / vSum

0.7164880036864177

Oh.

71% by weight went to authors inside the group as opposed to merely 60% by count.

At this point it's getting hard to avoid finding that money does, in fact, vote for money.

But soft, what light through yonder window breaks?

What percentage of these votes are actually self-votes? Those would count as votes which were cast for someone within the same group, right? What percentage of the total are simply not getting out of the group because they are going to the voter themselves?

We can easily work this out.

vsData = vData[vData['author'] == vData['voter']]

len(vsData)

90695

vsSum = vsData['weight'].sum()

vsSum

835090614

len(vsData) / len(vData), vsSum / vSum

(0.047394343196812336, 0.11434458349094377)

Not entirely expected.

Only 4.7% of the total number of votes cast by accounts with a Reputation of 50 or more were self-votes, which is relatively reassuring, I suppose. However, 11.4% of the total weight in votes cast by accounts with Reputation 50 or more went to self-voting.

I want to be clear at this point, I have no problem with people self-voting. I am a firm believer in "code is law," and the steem blockchain goes out of its way to reinforce at every turn that voting your own goods up with your own SP is one of the most efficient and effective ways of increasing your holdings.

You don't have to like it, but you do have to accept it.

This paints a very strange picture, in that 60% of votes in general go inside of the high reputation group, while 71% of the total vote weight stayed in the group. Only 4.7% of those votes were self votes, but they made up 11.4% of the total weight of votes cast.

Apparently, high Reputation accounts keep the SP in the family, with a tendency to vote other high Reputation accounts more aggressively. But self-votes are counted inside that 71%: the 11.4% of total vote weight that went to self-voting is roughly a sixth of everything that stayed in the group.

That's really, really interesting. Like most of my findings, I don't know what it actually means – but we found it.
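The four ratios above come from three separate filters, and it is easy to lose track of which denominator goes with which number. Here is a sketch that computes them in one pass over the raw vote list, so that everything is expressed as a share of all votes cast by the group. It mirrors the veData and vsData filters, but `vote_shares` and the sample accounts are invented for illustration, and like the original sums it adds signed weights as-is (flags count as negative).

```python
def vote_shares(votes, members):
    """Summarise where a group's votes go, by count and by weight.

    votes: iterable of dicts with 'voter', 'author' and 'weight' keys,
           the same shape as the query results above.
    members: collection of account names that define the group.
    Returns {'in_group': (count_share, weight_share), 'self': (...)}.
    """
    members = set(members)
    totals = {'all': [0, 0], 'in_group': [0, 0], 'self': [0, 0]}
    for v in votes:
        buckets = ['all']
        if v['author'] in members:
            buckets.append('in_group')
        if v['author'] == v['voter']:
            buckets.append('self')
        for b in buckets:
            totals[b][0] += 1
            totals[b][1] += v['weight']
    n_all, w_all = totals['all']
    if n_all == 0 or w_all == 0:
        raise ValueError('no votes, or total weight is zero')
    return {name: (n / n_all, w / w_all)
            for name, (n, w) in totals.items() if name != 'all'}

# Made-up votes; alice and bob form the group
sample_votes = [
    {'voter': 'alice', 'author': 'bob', 'weight': 1000},
    {'voter': 'alice', 'author': 'alice', 'weight': 5000},
    {'voter': 'bob', 'author': 'carol', 'weight': 2000},
    {'voter': 'bob', 'author': 'dave', 'weight': 2000},
]
shares = vote_shares(sample_votes, ['alice', 'bob'])
print(shares)
```

Since every voter in the list belongs to the group, every self-vote is also an in-group vote, so subtracting the 'self' shares from the 'in_group' shares gives the portion that went to other members.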

Out of curiosity, a truly perverse and probably self-destructive curiosity, what say we turn the breakpoint all the way up to Reputation 70 and see how the numbers fall out.

cData = Data[Data['calcRep'] >= 70].sort_values('calcRep', ascending=False)

nData = cData.set_index('name')

len(nData)

224

Only 224 accounts on the entire blockchain have a Reputation of 70 or more. I'm not sure if that's reassuring or terrifying. It is what it is.

nData.head()

| name | calcRep | rep |
|---|---|---|
| steemsports | 78 | 913296035791209 |
| gavvet | 77 | 660761030588799 |
| papa-pepper | 77 | 682990070870322 |
| knozaki2015 | 77 | 746658070499557 |
| ozchartart | 76 | 467589219907553 |

nData.tail()

| name | calcRep | rep |
|---|---|---|
| richman | 70 | 114253548771732 |
| reddust | 70 | 100083302723374 |
| rea | 70 | 116140932922857 |
| razvanelulmarin | 70 | 108790190309091 |
| fishingvideos | 70 | 100154736138756 |

intL = nData.index.tolist()

# Init connection to database

db = SteemData()

# Let's put our accounts of interest here in a list. One day

# we might want to generalize to more than one.

intL = nData.index.tolist()

# We want only the vote transactions which have happened

# in the last week AND involve only our accounts of interest.

query = {

'type' : 'vote',

'timestamp' : {'$gte': dt.now() - datetime.timedelta(days=7)},

'$or': [{'voter': {'$in': intL}}

]}

proj = {'voter': 1, 'author': 1, 'weight': 1, 'permlink': 1,

'_id': 0}

%%time

result = db.Operations.find(query,

projection=proj)

votes = list(result)

Wall time: 16.5 s

votes[:5]

[{'author': 'walidelhaddad',

'permlink': 'binance-offers-usd250-000-bounty-for-arrest-of-hackers',

'voter': 'cheetah',

'weight': 8},

{'author': 'carlitojoshua',

'permlink': 'march-fire-prevention-month',

'voter': 'juvyjabian',

'weight': 1000},

{'author': 'smilebot',

'permlink': 'remember-to-smile-a2db16b93296a',

'voter': 'cheetah',

'weight': -8},

{'author': 'wefund',

'permlink': 'wefund-ada-1-3',

'voter': 'krexchange',

'weight': 10000},

{'author': 'gabbybear',

'permlink': 'international-women-s-day',

'voter': 'juvyjabian',

'weight': 1000}]

len(votes)

91305

91,000 total votes cast by accounts with Reputation 70 or greater. Compared to the number of votes that we've been juggling along the way, this is very, very small. We are getting down into the realm of the graphable.

vData = pd.DataFrame(votes)

vData.head()

| | author | permlink | voter | weight |
|---|---|---|---|---|
| 0 | walidelhaddad | binance-offers-usd250-000-bounty-for-arrest-of... | cheetah | 8 |
| 1 | carlitojoshua | march-fire-prevention-month | juvyjabian | 1000 |
| 2 | smilebot | remember-to-smile-a2db16b93296a | cheetah | -8 |
| 3 | wefund | wefund-ada-1-3 | krexchange | 10000 |
| 4 | gabbybear | international-women-s-day | juvyjabian | 1000 |

veData = vData[vData['author'].isin(intL)]

len(veData)

12615

len(veData) / len(vData)

0.13816329883357975

vSum = vData['weight'].sum()

vSum

161766147

veSum = veData['weight'].sum()

veSum

54197247

veSum / vSum

0.3350345421777277

vsData = vData[vData['author'] == vData['voter']]

len(vsData)

2538

vsSum = vsData['weight'].sum()

vsSum

22746035

len(vsData) / len(vData), vsSum / vSum

(0.02779694430754066, 0.14061060006578507)

So there we have it.

Of accounts with Reputation 70+ who have voted in the last week:

- Nearly 14% by count of those votes went to other accounts with Reputation 70+.
- 33% by weight of those votes stayed with accounts with Reputation 70+.
- Only 2.8% of those votes by count were self-votes.
- But about 14% by weight of those votes were self-votes.

Looking at the list of accounts in the Reputation 70+ category, this is not surprising news.

Epilogue

So what have we learned here, today?

Money does, in fact, vote for money. Quite a lot of the time. Not only does it vote for money, it votes for itself quite often. The class of accounts with high Reputations focus on, whether intentionally or emergently, other accounts with high Reputations.

The percentages by count decrease as the Reputation breakpoint increases, but not faster than the number of accounts involved decreases. And when talking about vote weight, which is purely about percentages, there seems to be an active centralization as you go up.
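To see how the in-group share moves against group size without rerunning the whole notebook per breakpoint, the comparison can be sketched as a sweep. This is an illustration under my own assumptions: it filters one in-memory vote list instead of re-querying the database for each cutoff as the post does, and `in_group_share_by_threshold` and the sample accounts are invented.

```python
def in_group_share_by_threshold(display_reps, votes, thresholds):
    """For each cutoff, report group size and the in-group share of votes by count.

    display_reps: dict of account name -> displayed Reputation.
    votes: iterable of dicts with 'voter' and 'author' keys.
    Returns a list of (cutoff, members, votes_cast, in_group_share) tuples.
    """
    votes = list(votes)
    rows = []
    for cutoff in thresholds:
        members = {name for name, rep in display_reps.items() if rep >= cutoff}
        cast = [v for v in votes if v['voter'] in members]
        inside = sum(1 for v in cast if v['author'] in members)
        share = inside / len(cast) if cast else 0.0
        rows.append((cutoff, len(members), len(cast), share))
    return rows

# Made-up accounts and votes, just to show the shape of the output
sample_reps = {'alice': 72, 'bob': 55, 'carol': 51, 'dave': 30}
sample_votes = [
    {'voter': 'alice', 'author': 'bob'},
    {'voter': 'alice', 'author': 'alice'},
    {'voter': 'bob', 'author': 'carol'},
    {'voter': 'bob', 'author': 'dave'},
    {'voter': 'carol', 'author': 'alice'},
    {'voter': 'dave', 'author': 'alice'},
]
for row in in_group_share_by_threshold(sample_reps, sample_votes, [50, 70]):
    print(row)
```

Putting the member count next to the share makes it easier to judge whether a falling percentage is falling faster or slower than the group is shrinking.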

Of accounts with Reputation 40+, it's not surprising that the vast bulk of their vote weight goes within the group. That includes moderately successful and established small bloggers with communities who have managed to tough it out and are still around.

Once we start talking about 70+, however – it's an entirely different world. Keep in mind, 14% of their total votes for the week went to one of the other 224 accounts of comparable level.

We haven't even dealt with the question of how many of these accounts are automated as opposed to manual, which is an entirely orthogonal question that probably deserves some kind of attention. Is it possible to gain an extremely high Reputation via automated means?

There are definitely some bots in this group, particularly upvoting bots. That means that they have not only been upvoted by new users, but have a long history of being upvoted by higher Reputation accounts as well. It's not something that we can blame on uncouth newbies.

This is interesting stuff. Hopefully someone else finds it as interesting as I do.

Tools

- Python 3.6

- Jupyter Lab

- SteemData created by @furion

- MongoDB

- Pandas

And the letter "YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYY?!"

Posted on Utopian.io - Rewarding Open Source Contributors

Rather than percent-internality numbers, would it be possible to look at the "voting profiles" of different bands of users? It's hard for me to figure out what to think about the numbers you're presenting because I have no basis for comparison. But maybe seeing how different groups of users compare to each other might help. I was also thinking it might be interesting to see if there's a difference in the tendency to "vote for higher rep than me" vs. "vote for lower rep than me" as your rep changes, but that's complicated by the fact that expectations would change (because the size of the population above you and below you would be a function of your rep).

Exactly. The problem with doing that kind of analysis is that the population in various bands is either almost indistinguishable (which works in our favor), or vastly different (which means it would be an apples to oranges comparison) – and the places where the population is almost indistinguishable is at the bottom, where there is relatively little SP/voting power kicking around.

The problem is really that exponential graph that I dropped in about midway through, and the fact that anything that we want to talk about in terms of assessing what different groups on that curve are doing has to work with the really harsh truth that the different groups are going to be exponentially separated.

So we could talk about the activity of accounts in the Reputation 25 to 35 range pretty comfortably and maybe even 35 to 45 without too much trouble, though I would want to run the numbers on population counts before I really launched into something like that aggressively – but as we go up, we end up talking about so many fewer accounts that either our bands need to be very wide (which is why I literally said "this point and up" in this analysis) or we have to come up with something particularly clever to reveal what we want to find out.
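Running the numbers on population counts per band is cheap once the calculated Reputations are in hand. A sketch of what that could look like, assuming 10-point bands starting at the default Reputation of 25; `band_counts` is a made-up helper and the sample values are invented, not real account data.

```python
from collections import Counter

def band_counts(display_reps, width=10, floor=25):
    """Count accounts per Reputation band of the given width, starting at floor.

    Bands are keyed as (low, high) inclusive; accounts below floor
    are grouped together under the key None.
    """
    counts = Counter()
    for rep in display_reps:
        if rep < floor:
            counts[None] += 1
        else:
            low = floor + ((rep - floor) // width) * width
            counts[(low, low + width - 1)] += 1
    return counts

# Invented sample of displayed Reputations
sample = [25, 26, 34, 35, 44, 45, 70, 78, -17]
bands = band_counts(sample)
for band in sorted(b for b in bands if b is not None):
    print(band, bands[band])
print('below floor', bands[None])
```

With the real data, something like `band_counts(Data['calcRep'])` would show at a glance where the bands become too thin to compare.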

Doing a raw comparison of "have I voted for someone with a higher or lower Reputation?" might be interesting to visualize, but I'm not sure it would actually be informative. Maybe, though.

Maybe the place to start is with the likelihood of a given Reputation level being a consistent poster. My gut says that lower Reps provide the bulk of content creation, which is necessarily true simply by population – but is it true by percentage of population? There are some definite high Rep content creators, though there is always some question about the content they're creating.

Interesting. There might be something that can be done with this.

🕵🏻♂️💡

“Fascinating!” I whisper as I shrink, smaller and smaller until I am but a speck of sand on a planet of wealth.

Good stuff inspector gadget. 🔍 🕵🏻♂️

My goodness your brain must have been throbbing by the time you finally clicked post on this amazing compilation of research.💆🏻♂️✨ ⭐️⭐️⭐️⭐️⭐️✨

The last couple of weeks have just been a pile of data-digging through the depths of the database. It hasn't been good for having a personal sense of the grandiose or the important, I must say. From charting the curve of SP distribution to this -- it's like being the heir to the Total Perspective Vortex.

Which is, in part, to blame for the decreased frequency of my posting from the every other day to the every third day or so. There's only so much self-abuse that someone can do before they start feeling inflamed and sore.

I was rather glad to get this one out, however.


As a relative newbie on the platform this was pretty interesting (and disheartening). While I didn't understand all of the terms used in your analysis (again, newbie on the platform) or the math in your analysis, the conclusions help to explain why some of the posts that I follow have large dollar values for less than great content, while some new users that are writing (in my opinion) better content make dozens of pennies per post.

I guess the other way of looking at it is that the fact that the higher reputation users are at least passing some influence out of their orbit is a good thing, even if it would probably help things overall if it were higher.

At the same time, I imagine a lot of them have been around for a while producing content and have learned what kind of content and tone gets those votes from others with similar reputation, which makes it more likely that they'd vote for each other.

This is going to be advice that no one else on the platform is going to give you, but loosely because it is some of the best advice you are ever going to get.

If you want to remain happy with any community, no matter what kind of community that is, do not look and see how the sausage is made. Do everything you can do to stay out of administration, responsibility, a position of power, a position of inquiry, and generally avoid knowing how things work or why things work – just work with them in ways that give you gratified pleasure.

Because if you don't, if you see how the sausage is made, there is no going back. There is only suffering. You will become disenchanted, disheartened, and disengaged, while simultaneously getting sucked in if you show even the slightest amount of talent for understanding what you've seen.

There is no punishment like success.

You'll notice that in this entire analysis there is no discussion of types of content, what kind of content was being voted on, what kind of engagement was underway, just pure numbers.

That was no accident.

Because so many of the high Reputation accounts are fairly clearly bots or automated-augmented accounts of one sort or another, that is to say a lot of up vote bots, I'm not sure that a content analysis methodology would even be meaningful. It's questionable how useful looking at in group versus outgroup voting actually is, because I can't really determine how many of those votes were purchased. It may be that quite a lot of them were at the behest of another account, and votes placed willfully and with volition were even more incestuous.

There's just no way to know.

It's difficult to know what is going on entirely, but if you really want to touch the meaty goodness of the sausage while it's being ground, some of my code might make a good basis for starting to learn how to use Python to go rummaging around in the blockchain garbage cans to see what you can turn up.
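As a starting point for that kind of rummaging, here is a minimal sketch of the in-group versus out-group tally discussed above. It assumes you have already pulled votes into plain (voter, author) pairs by whatever query you prefer; the account names, the `votes` list, and the `vote_split` helper are all hypothetical and only there for illustration. It also inherits the limitation already mentioned: nothing in it can tell a purchased vote from a deliberate one.

```python
from collections import Counter

# Hypothetical sample data: (voter, author) pairs. In practice these would
# come from your own blockchain query; none of these accounts are real.
votes = [
    ("alice", "bob"),
    ("alice", "carol"),
    ("bob", "alice"),
    ("bob", "dave"),
    ("carol", "erin"),
]

# Hypothetical in-group, e.g. accounts above some Reputation cutoff.
in_group = {"alice", "bob", "carol"}


def vote_split(votes, group):
    """Count votes cast by group members, split by whether the author
    is also in the group ("in") or outside it ("out")."""
    counts = Counter()
    for voter, author in votes:
        if voter not in group:
            continue  # only votes cast by the group are of interest
        counts["in" if author in group else "out"] += 1
    return counts


split = vote_split(votes, in_group)
total = split["in"] + split["out"]
in_share = split["in"] / total if total else 0.0
print(f"in-group: {split['in']}, out-group: {split['out']}, in-share: {in_share:.0%}")
```

Swapping the `in_group` set for a different cutoff (Reputation, SP, account age) is the obvious next experiment.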

Yap!! well said!! The only thing closer to the true success is: ¡The absence of it! ;)

To further the points that Lex made... I honestly believe that on this platform (the same as any other social media platform) - it's far more about relationships than the quality of content.

Exceptional content may be found by curators like @curie, @ocd and @qurator, but vastly, quality of content is highly subjective, and relationships are key. YouTube is another great example of this.. YouTubers with 10 years experience often have much bigger followings than others producing 'better' videos because they've had longer to create relationships... but by the same token, people can go viral and rocket up the ranks for a short time.

Thank you for the contribution. It has been approved.

You can contact us on Discord.

[utopian-moderator]

I'm definitely in the group of people interested in this.

To a degree it does make sense that money votes for money... I think we could assume that everyone with a high reputation has been here a lot longer than the usual punter, and probably has, in the last year or two, formed quite strong relationships with others that they started with. I know I personally found quite a strong bond with 3 others that started in the same week as me.

The whole rancho/haejin thing must skew your results a touch... Haejin has a rep above 70 now, while Rancho is at 52 - given that he only has one post and few comments.. but it's still just 5% of the pool.

I've always been torn about self-voting. I'm currently at 0% self-votes, but I did read that if you continually upvote yourself, you double your account in something like 9 months... but the amazing @abh12345 personally gives rewards out of his own pocket for people highly engaged with the platform who don't self-vote. So I'll stick with that for the moment...
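For a rough sense of what "double in something like 9 months" would imply, here is a small back-of-the-envelope sketch. It assumes steady compounding and 30-day months, both of which are assumptions for illustration; the 9-month figure itself is only what was read elsewhere, not something verified here, and `implied_rate` is a made-up helper name.

```python
def implied_rate(doubling_periods):
    """Per-period growth rate that doubles a balance after
    `doubling_periods` compounding periods."""
    return 2 ** (1 / doubling_periods) - 1


monthly = implied_rate(9)       # rate per month if doubling takes 9 months
daily = implied_rate(9 * 30)    # rate per day, assuming 30-day months

print(f"implied monthly growth: {monthly:.2%}")
print(f"implied daily growth:   {daily:.3%}")
```

In other words, the claim amounts to roughly 8% growth per month, compounded.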

All in all, this was super interesting and I really do massively appreciate the effort you put into answering these interesting little behavioral questions.

Sorry, I've just realised you've had very similar conversations above. There is a huge amount of automation with votes, but that makes sense to me... if you're pumping out your own content, and don't have a lot of time for curation, setting up autovotes is a great way to collect curation rewards effectively for free. I'm not saying it's fair or right... but if you don't have a lot of time, it's almost crazy not to autovote or follow curation trails.

Personally, I don't really have a problem with people self voting, as I said. The system explicitly goes out of its way to invite it and allow it, and even sets it as the default on Steemit when you post and you have to go out of your way to make it otherwise. It's hard to argue that something else should be what you choose to do.

That said, the "advanced mode" trade-off understanding is recognizing that a big curation voter wants to be as early as possible as a voter, and if you take that first slot, you're denying it to a potential other voter who would bring it up. Maybe that matters, maybe it doesn't, depending on what you're doing and who follows your stuff.

Understanding how that interaction trade-off actually works? That might be above even my level of understanding. Actually, it's definitely above my level of understanding in any real sense. It may not even be possible to understand it because, Schrödinger's cat-like, the dissemination of that knowledge may change the behavior.

It's a complicated world.

From my perspective, the auto votes farming for curation rewards are a pretty effective subversion of the idea that "increased voting indicates quality of content." They don't really care about the actual content that they hit; it could be anything. The curation trail leader has a guaranteed following set of votes, assuring their curation reward. As such, it doesn't end up mattering what they vote on.

As a means of a form of passive income, turning what might otherwise be unmoving SP into active SP, it's a great system. As an indicator for a metric for deciding what content is good? Not so much.

It's differentiating these indicators that is becoming much more difficult as we go.

Oh... I completely agree...

I guess if autovotes didn't exist at all... and rich lazy people only had the options of following curation trails then it would be far more like a "proof of brain" concept, assuming the curation trails were only upvoting good quality stuff... which I feel is mostly true (Curie trail, Utopian trail, r-bot trail, qurator trail, photofeed, etc).

I'm constantly confused by the curation rewards, and have decided to not even worry about it and mainly upvote content I like and people who make comments on my posts.

What you said is correct, with the added complexity of the time. If you vote in the first 15 minutes of a post, then 100% of your vote goes to the author; if you vote at 15-30 minutes, then you share 50-50 with the author; and if you vote at 30+ minutes, then the 25% curation rewards are 100% yours... except when you have to share... and it's that breakup I don't truly understand.
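The three time bands described here can be written down as a tiny sketch. This only encodes the description in this comment; it is not taken from the chain's actual reward code, which may well be smoother than three flat steps, and it deliberately leaves out the "except when you have to share" part between multiple curators. The names `curator_share` and `CURATION_PORTION` are hypothetical.

```python
CURATION_PORTION = 0.25  # the "25% curation rewards" figure from the comment


def curator_share(minutes_after_post):
    """Fraction of the curation portion kept by the voter, following the
    three-step description in the comment (not verified chain logic)."""
    if minutes_after_post < 0:
        raise ValueError("a vote cannot precede the post")
    if minutes_after_post < 15:
        return 0.0   # everything goes to the author
    if minutes_after_post < 30:
        return 0.5   # split 50/50 with the author
    return 1.0       # curator keeps the full curation portion


for t in (5, 20, 45):
    kept = curator_share(t) * CURATION_PORTION
    print(f"vote at {t:>2} min: curator keeps {kept:.1%} of the payout attributed to that vote")
```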

I do understand that the most I've ever made in curation was $0.37 in a day, so it's not really a thing that is important to me.

I look at it this way: I'm a real big believer in provability. If I were to jump onto one of the curation trails, what proof do I have that tomorrow it wouldn't begin voting for things that I don't think are particularly good? Past performance is no predictor of future behavior, after all.

It would make sense for me to follow them and expect that they would resteem content that they think is of quality, and then I would be in a position to review it myself and vote it up myself if I thought it was worthwhile. But with curation trails, even those maintained by Curie, Utopian, anybody, I'm not really guaranteed of wanting to support everything that they support.

Of course, curation trails have a common quality with the existence of God; in the absence of their existence, we had to make them up. It's one of the easiest bot systems to implement, really. That they've developed interesting, nuanced methods to decide at least at some basic level how much VP to invest in a given vote reflects only that they've been around for over a year, now.

The whole curation system is weird and, I would argue, broken, because it explicitly does not do what "curation" as a term actually means. In the Steemit sense, curation is just trying to get to what you believe viral content will be, before it goes viral, and bet on its existence. The virality of that content doesn't have anything to do with the content itself, but only whether or not other people/entities will then subsequently vote it up.

All it takes at that point is a sufficient amount of collusion, between the first voter and subsequent voters or a swarm of bots, and we can prove that because we can empirically see that behavior. Trending and Hot are an endless cavalcade of examples.

Part of the problem is that there is this race to find content within the first 30 minutes. That means there's a continuous pressure, if you're going for curation rewards, to only look at the most recent, most immediate content – and God forbid you actually sleep. The curation architecture is not designed for a human use case. It's designed for something that doesn't sleep, doesn't eat, doesn't go to the bathroom, and really doesn't care much about the nature of the content at all, only whether or not something is going to get up voted later than it votes.

It's complicated. And it's annoying. And it's designed in a way that's antithetical to supporting creators who design things/write things/create things which are intended to be consumed for longer than a week, since no one can get rewarded for that content after that time.

Curation is only really valuable if you can do it in massive bulk or you already have a lot of SP, which means that all of your actions are scaled commensurately. You will notice that this is a recurring theme in this architecture.

That's something I hugely struggle with... I have a lot of content in draft-form, that would be excellent for the platform because it should appear in Google searches... but I haven't published yet because I'm not popular enough and can't produce the reward/effort ratio I'd want... of course, if payment didn't even exist I probably would have put it all up already.

You're right of course... curation is purely about predicting popularity at this point... but without it no one would vote on anything. I'm not sure there is a social media platform in existence yet where the "best" content rises to the top. Honestly, I've only ever seen it on websites and forums that are heavily moderated.

I don't know – I think I have a pretty good reason to vote on things:

"I like this thing and I'd like more of it, and so to get more of it I would like to reward the creator."

It's honestly the same reason to vote/+1/thumb up anything on any platform, it just so happens that it comes with a tiny hint of cryptocurrency on this one to sweeten the deal.

I think that people would be quite happy to vote on things without the ridiculously complicated system of "curation" which is effectively just a complex betting pool on trying to predict viral content, because we have empirical evidence that they will, pretty much everywhere on the Internet. The difference between most everywhere else and Steemit, at this point, is that when you up vote things elsewhere you would expect that those signals will help the system bring you more things that you like. On Steemit there is no direct assumption that that is the case at all; you just hope that enough signal is created so that particular creator will feel sufficiently motivated to create more things that you like.

Philosophically and mechanically it's a very different system of engagement. But it doesn't have to be.

Which is why I have written about Discovery systems a few times the last couple of weeks. Being able to turn those positive signals into some sort of machine indication which finds more content that you want and that you'll like – it's a hard problem, but it's one which would go a long way to improving the state of affairs on the platform.

First off, I don't know Coding or any sort of programming voodoo, so I know I missed some information above.

That being said, is it possible that the "70 crowd" is original (or darn near) users who merely have favorites or friends on Steemit?

For example, I only have about 30 followers, but more importantly - I only follow about 20, and not all of the ones I follow are following me (and vice versa).

Out of those 20-something that I follow, I don't upvote all of their posts, nor do I view all of them. Sometimes the content doesn't interest me, sometimes I'm prioritizing my time.

However, there are already a few of those that I follow that I do take the time to look at, and usually, upvote. Now, I'm not upvoting for money, but content. Yet, a lot of times, it's the higher Reputation accounts that get my time and upvote, but it isn't intentional (usually).

TL;DR: is it possible it is friends voting for friends, and not necessarily money voting for money - even if the outcome is?

Some of it is known figures voting for known figures; I hesitate to say "friends" because of a lot of different factors, but many of the people at the top are somewhat iconic in and of themselves, at least within the limited context of the steem blockchain.

But it's worth noting that quite a number of them are not actually producing that much in the way of content. That's an entirely different pile of analysis that needs to be done at some point, comparing the post output of people at the top of the SP pile as well as the Reputation pile. Those groups are not the same, interestingly enough.

Also, never forget that quite a lot of the votes on the steem blockchain are driven by two completely content-neutral methodologies: automatic vote following, where someone sets their account up to vote for everything that another account votes for, and vote purchasing from an automated bot. There is so much automation occurring at various levels of blockchain interaction that it is very difficult to determine what kind of signal is really communicating that a person is interested in content.

Personally, I do all of my own curation, just like my own stunts. I don't think it's actually very interesting to auto-vote after a community leader, but that can be a very effective way to get ahead of a number of other people chasing content. Likewise, I don't employ bots to vote up my stuff – it's perfectly capable of failing on its own. But bots can be a very effective way for individuals to convert pooled resources (whether SBD or steem itself) into someone else's SP/voting power, pointed right back at their own account for more of the above.

In this particular instance, and in particular the Reputation 70+ folks, I don't believe there's a whole lot of manual curation going on, though there is clearly some.

More than that, it's really hard to say.

Fair enough. When I was first looking into Steemit and nosing around some of the users, I felt that there may be a decent (if slow) business here, using multiple accounts to feed into each other for the benefit of...well, me.

However, once I stopped looking at it from a purely profit-driven mode, I found I was actually MORE excited to find not only a community that I could help grow, but also a media outlet that didn't feel so negative in scope.

Like you said above, don't find out how the sausage is made.

My advice as a newcomer? Just do it because you enjoy it. If you end up making some coin because of it, bonus. But don't do it thinking you'll get rich, because that's the path to discouragement.

Very good post lextenebris friend greetings i follow u from Amsterdam to Love :)

Keep it up... you can check my article about Crypto's best Hype Man and give me your feedback!
