If the Singularity Is Not Happening...


INTRODUCTION

Transhumanists pride themselves on basing their expectations on solid science. Karl Popper said that a theory is scientific only if it can, in principle, be proved wrong, and so we may ask: If the technological singularity is not happening, how would we know? I argue that online worlds like Second Life (SL) can serve as an indicator of whether we are on track or whether the underlying technologies are faltering.

Online worlds can serve this purpose because many of the technologies that enable the singularity also push the size and sophistication of online worlds. For example, Vernor Vinge identified improvements in communication as being something that could lead to superhuman intelligence, saying ‘every time our ability to access information and communicate it to others is improved, we have in some sense achieved an increase over natural intelligence’. Arguably, online worlds are first and foremost platforms for communication. If, as we head toward the future, online worlds enable more people to be online simultaneously, and to exchange knowledge more efficiently (or, better yet, in ways that were not possible in the past), that could be taken as a sign that progress is heading in the right direction.

WHAT SHOULD WE BE WATCHING?

If communication is fundamental to online worlds, what is fundamental to the Singularity? It is important to know, because we do not want to be distracted by tracking trends of little or no relevance. Some people think the Singularity is all about mind uploading, but it really is not. Admittedly, Singularity enthusiasts are often also uploading enthusiasts, and certainly the technologies that would enable one’s mind to be copied into an artificial brain/body would be very useful in Singularity research. However, even if we never develop uploading technologies, that in itself would not rule out the possibility of the Singularity happening. Simply put, ‘Singularity equals mind uploading’ is an incorrect definition.

Nor should the Singularity be seen as synonymous with humanlike AI. Again, if we ever find online worlds populated with autonomous avatars that anthropologists, psychologists and other experts in human behaviour agree are indistinguishable from avatars controlled by humans, we would very likely have technologies and knowledge that would be useful in Singularity research. But a complete lack of artificial intelligences that can ace the Turing test, or any other test of humanlike capability, would not rule out the Singularity.

OK, so what is essential? What can we point to and say, ‘progress in this area is faltering, therefore the Singularity will not happen’? One such thing would be software complexity. Progress in pushing the envelope of computing power can continue only so long as developers can design more sophisticated software tools. The last time a computer was designed entirely by hand was in the 1970s. Since then we have seen orders-of-magnitude increases in the complexity of our computers, and this could only have been achieved by automating more and more aspects of the design process. By the late 1990s, a few cellphone chips were designed almost entirely by machines. Humans set up the design space, and the system itself discovered the most elegant solution using techniques inspired by evolution.
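To make the division of labour concrete, here is a toy genetic algorithm in Python. It is only a minimal sketch under stated assumptions, not the method used to design any real chip: the "design" is a hypothetical 20-bit string, the fitness function (count the 1 bits) is an arbitrary stand-in for a real design objective, and the population size, generation count and mutation rate are illustrative guesses. The human part is defining the design space and the fitness function; selection, crossover and mutation then search that space automatically.

```python
import random

# Hypothetical design space (an assumption for illustration): a candidate
# "design" is a list of 20 bits. Humans choose this representation.
GENOME_LENGTH = 20

def fitness(design):
    # Toy objective chosen by the human designer: more 1 bits is better.
    return sum(design)

def mutate(design, rate, rng):
    # Flip each bit independently with probability `rate`.
    return [bit ^ 1 if rng.random() < rate else bit for bit in design]

def crossover(a, b, rng):
    # Single-point crossover: head of one parent, tail of the other.
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def evolve(pop_size=30, generations=200, mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: the fitter half survives unchanged as parents (elitism),
        # so the best fitness never decreases from one generation to the next.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), mutation_rate, rng))
        population = parents + children
    return max(population, key=fitness)

best = evolve()
print(fitness(best), "of", GENOME_LENGTH)
```

Keeping the fitter half unchanged is a simple design choice that guarantees the search never loses its best design so far; real evolutionary design tools use far richer representations and objectives.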

While it is true that we rely on a lot of automation in order to design and manufacture modern integrated circuits, we cannot yet say that humans can be taken completely out of the loop. Computers do not quite design and build themselves. One day, perhaps, one generation of computing technology will design and construct the next generation without any humans involved in the process. But not yet. For now, human ingenuity remains indispensable.

THE SOFTWARE COMPLEXITY PROBLEM

In an interview with Natasha Vita-More, Vernor Vinge identified a failure to solve the software complexity problem as the most likely non-catastrophic scenario preventing the Singularity. ‘That is, we never figure out how to automate the solution of large, distributed problems’. If this were to happen, progress in improving hardware would eventually level off, because software engineers would no longer be able to deliver the tools needed to develop the next generation of computers. With increases in the power of computers leveling off, progress in fields that rely on ever-more powerful computing and IT would continue only for as long as it takes to ‘max out’ the capabilities of the current generation. No further progress would be possible, because we could not move on to new generations of even more powerful IT.

Obviously, online worlds and the closely related field of videogames rely on ever-more sophisticated software tools and on more powerful computers in order to deliver better graphics, physics simulations, AI and so on. If we compare the online worlds and videogames of ‘today’ with previous years’ offerings and find it increasingly difficult to point out improvements, that could well be a sign that the software complexity problem is proving unsolvable.

We should, however, be aware that some improvements are impossible to see because they lie beyond the limits of human perception. Graphics is an obvious example. Perhaps one day realtime graphics will reach a fidelity that makes them completely indistinguishable from real life. It might then be possible to produce even more capable graphics cards, but the human eye would not be able to discern further improvements.

It is a fact that every individual technology can only be improved so far, and that we are closer to reaching the ultimate limits of some technologies than others. Isolated incidents of a technology leveling off might not be symptomatic of the software complexity problem, but if we notice a slowdown in progress across a broad range of technologies that rely on increasingly powerful computers, that would be compelling evidence.

THE DEFAULT POSITION OF DOUBT

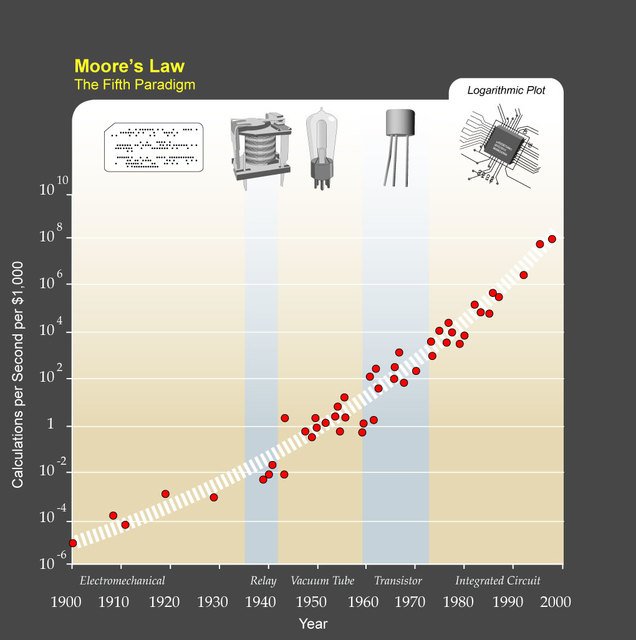

Look at Ray Kurzweil’s chart tracking progress in computing paradigms. Look how smooth progress has been so far. One could be forgiven for thinking the computer industry has so far improved its products with little difficulty.

This is not true, of course. R+D has always faced barriers to further progress. For instance, in the 1970s we were rapidly approaching the limits of the wavelength of light used to sculpt the chips, it was becoming increasingly difficult to deal with the heat the chips generated, and a host of other problems were seen as cause for pessimism by many experienced engineers. Well, with the benefit of hindsight we know Moore’s Law did not come to an end. Rather, human ingenuity found solutions to all these problems.

According to Hans Moravec, doubt over further progress is the norm within R+D:

‘The engineers directly involved in making integrated circuits tend to be pessimistic about further progress, because they can see all the problems with the current approaches, but are too busy to pay much attention to the far-out alternatives in research labs. As long as conventional approaches continue to be improved, the radical alternatives don’t stand a competitive chance. But, as soon as progress in conventional techniques falters, radical alternatives jump ahead and start a new cycle of refinement’.

Similarly, residents of SL tend to be pessimistic about their online world. It never seems to be good enough. Of course, in its current state SL does have many faults that prevent it from being fast, easy and fun, and if we do not have the skills to remedy these deficiencies, we might as well declare the Singularity impossible right now. But I would suggest that online worlds are doomed to remain ‘not quite good enough’, because what people can imagine doing will always be more ambitious than what the technologies of the day can deliver. That, after all, is why we continually strive to produce better technology. Yes, there may come a time when online worlds are advanced enough to allow anyone to easily do the activities that are typical today, but all the knowledge and technology that gets us to that point will broaden our horizons, and people will be complaining about not being able to easily perform feats we could not even imagine doing today.

At any point in time, the path to further progress seems blocked by no end of problems. It is probably true, therefore, that at every point in history there have been skeptical voices doubting that current technologies could be substantially improved. For some, the Technological Singularity has come to take on an almost mythical status as a deus ex machina that will arrive and solve all our problems for us. If, by ‘problems’, we mean only material concerns, perhaps a combination of advanced AI and nanosystems could elevate all people to a high standard of living. However, any technology will create problems as well as solve them. That is another reason why we continually strive to invent new things- to solve the problems caused by previous generations of inventions!

WHAT THE SINGULARITY CAN NEVER ACCOMPLISH

If you expect the Singularity to rid us of all problems, it will never manifest itself because such a utopian outcome is beyond the capability of any technologically-based system. But if we cannot use the eradication of all problems as the measure by which we judge the Singularity’s presence, what can we use?

AND WHAT IT CAN

One way to answer this is to consider again what an inability to solve the software complexity problem would mean. Vinge saw this developing into ‘a state in communication and cultural self-knowledge where artists can see almost anything any human has done before- and can’t do any better’. With invention itself unable to grow beyond the boundaries the software complexity problem imposes, we would (in Vinge’s words) ‘be left to refine and cross-pollinate what has already been refined and cross-pollinated…art will segue into a kind of noise [which is] a symptom of complete knowledge- where knowers are forever trapped within the human compass’. Novelty, true novelty, would be a thing of the past. Whatever came along in the future, we would have seen it all before and would be hard-pressed to discern any improvement.

But, if the Singularity does happen- if technological evolution can advance to a point where we create and/or become posthuman- there would be a veritable Cambrian explosion of creativity and novelty. We tend to be rather human-centric when thinking about intelligence, imagining that the spectrum runs from ‘village idiot’ to ‘Leonardo da Vinci’. But Darwin’s theory tells us that other species are our evolutionary cousins, connected to us by smooth gradients of extinct common ancestors. This tells us that the spectrum of intelligence must stretch beyond the ‘village idiot’ point towards progressively less intelligent minds, all the way down to a point where the term ‘intelligence’ (or even ‘mind’ or ‘brain’) is not in evidence at all. Think bacteria or viruses.

And what about the other direction? The Singularity is based on two assumptions: that intelligence fundamentally more capable than human intelligence (however you care to define it) is theoretically possible, and that with appropriate application of science and technology we (and/or our technologies) will have minds as far above our own as ours are above the rest of the animal kingdom.

Not everyone accepts these assumptions. Some argue that our minds are too mysterious and complex for us to fully understand, and how can we fundamentally improve something we don’t fully understand? Others see ‘spiritual machines’ as requiring an impoverished view of ‘spirituality’ rather than a transcendent view of ‘machines’. But let us assume that people like Hugo de Garis are correct and that miniaturization and self-assembly will progress to a point where we can store and process information on individual molecules (or even atoms) and build complex three-dimensional patterns out of molecules. When you consider that a single drop of water contains more molecules than all the transistors in all the computer chips ever manufactured, you get some idea of how staggeringly powerful even a sugar-cube-sized block of 3D molecular circuitry would be- even before that individual nanocomputer starts communicating with the gazillions of others throughout the environments of the world.

Let’s also assume that our efforts to understand what the brain is and how it functions eventually result in a fully reverse-engineered blueprint of the salient details of its operation. We combine the two: gazillions of nanocomputers, each sugar-cube-sized object capable of processing the equivalent of (at least) one hundred million human brains, running whole brain emulations at electronic speeds (millions of times faster than the speed at which biological neurons communicate). Each has the capacity to run millions of such emulations, perhaps networked together and able to take advantage of a computer’s superiority in data sharing, data mining and data retrieval. Millions of human-level agents networked together to make one posthuman mind. And there are gazillions of these minds making up whatever the world wide web has become.

Then what? Vinge said that, ‘before the invention of writing, almost every insight was happening for the first time (at least to the knowledge of the small groups of humans involved)’. Isolated tribes and nations may well have come up with inventions they considered to be completely new, unaware that other nations got there first. As information and communication technologies were invented and improved, so our ability to recall the past improved, until (in Vinge’s words) ‘in our era, almost everything we do in the arts is done with an awareness of what has been done before’.

But what we have never seen is anything that requires greater-than-human intelligence. Therefore, the Technological Singularity will usher in a posthuman era that (from our perspective) will have a profound sense of novelty about it. If we are on-track for a Singularity, it should become increasingly common to encounter things we never imagined would be possible. We will commonly encounter artworks that we struggle to fit into existing categories- truly extraordinary renderings of a fundamentally superior mind.

CONCLUSION

Ushering in the posthuman world has always been what Second Life was conceived for, at least in the mind of its founder, Philip Rosedale. It never was about recreating the real-world experience of shopping malls and fashionably slim young ladies. It was a dream that computing, communication, software tools and human imagination could be combined to achieve a transcendent state that is greater than the sum of its parts. ‘There are those who will say…God has to breathe life into SL for it to be magical and real’, Rosedale is on record as saying in Wagner James Au’s book ‘The Making of Second Life’. ‘My answer to that is…it’ll breathe by itself, if it’s big enough…simply the fact that if the system is big enough and has enough complexity, it will emerge with all these properties. People will come from out of the dust’.


(ahem) We, uh, kinda need an explanation of what you mean by using the term 'singularity'. I've read about it quite a bit and it means different things to different people. Some think of it as 'the rapture of the nerds' where the faithful will be transported to cyberspace....

huh...that sound familiar somehow. Where have I heard that before?

The singularity is the point where artificial intelligence surpasses human intelligence. Things will change rapidly at that point; humanity as we know it will not be the same anymore.

https://en.wikipedia.org/wiki/Technological_singularity

ah...well that kind of requires a definition of 'artificial intelligence', then. Will AI fit on the EQ (Encephalization Quotient) list, along with dogs and cats and ravens and everything else with a brain or something that they use as a brain?

Is an AI sapient? Is it sentient? Must it be sentient to be sapient?

Personally I don't think AI is possible for a very long time. Why? Because consciousness, awareness, is a result of programming. We might know how to build the machine, how to wire the circuits, but how do we program it? The right program has to be running before consciousness will emerge. Mind is an emergent property.

With regard to the Singularity, "artificial intelligence" is anything that can enhance its own design. Consciousness or awareness is not a requirement.

Actually, if you read Vernor Vinge's paper on the singularity, it is clear that he regards the development of artificial intelligence as one path among many that could take us to the Singularity. As well as AI, there are scenarios involving cyborgs, with people adopting brain prosthetics that make them smarter (using those smarts to make improved prosthetics, among other things), and scenarios involving greater cooperation between human and machine networks. This latter scenario may not involve any general AI but lots and lots of narrow AI. With regard to this scenario, Vinge wrote:

"Of all the items on the list, progress in this is proceeding the fastest. The power and influence of the Internet are vastly underestimated. The very anarchy of the worldwide net's development is evidence of its potential. As connectivity, bandwidth, archive size, and computer speed all increase, we are seeing something like Lynn Margulis' vision of the biosphere as data processor recapitulated, but at a million times greater speed and with millions of humanly intelligent agents (ourselves)".

I define the Singularity as 'using science and technology to create, or become, superhuman intelligence'.