Fractalnomics — An overview of the GET Protocol token economic utility road map

Word of caution: This publication is part of a rather technical & detailed blog series about the token economics of the GET Protocol. I would not consider the contents of this blog required reading if you are a casual follower of the project. In this blog I’ll go deep into the weeds of how token economic policy is set and what theories are used to formally describe such a policy. I’ll also cover how we model these policies and predict a policy’s effect on the projected future utility value of GET.

Aim of the blog :

After reading this blog it will be clear to the reader that utility value is not a feat that manifests itself in an instant. Utility grows as a function of time due to network & user growth, actor synergies and optimization and incremental addition & optimization of utility mechanisms/models.

Structure of the blog:

Just like the Mandelbrot set, I don’t think there will ever be an end when it comes to token economic improvements. Just like complex number theory and infinite fractal zooms, the ticketing sector is granular, complex (ha!) and fragmented, and changes in the space occur unexpectedly and chaotically. Math pun overload.

- Explaining what the topic of token economics entails and why it is an important endeavour to explore, test and discuss.

- Setting the general aim of what the token economic policy should ideally maximize and target by defining the protocol’s mandate.

- Tooling velocity: Formally describing a way to model the velocity of GET within the protocol by introducing an established model and then applying it to the crypto use case.

- Overview of future token economic blogs and publications.

Let’s start with this zoom, shall we!

Check out the article: Velocity reduction: The cryptoeconomic speed bumps of the GET Protocol.

1. What are token economics?

A few months ago we announced that in Q3 and Q4 of 2018 more than 140.000 tickets will be sold on the Ethereum main-net via the GET Protocol. This means that all these smart tickets, each assigned to an Ethereum address owned by a consumer buying tickets with FIAT (€, $, ¥), will require a certain amount of GET to fuel the state changes the ticket makes during its life cycle. The GET required to use the contracts of the protocol will have to be bought on the open market. The owners of GET on the open market will not hand over their GET without being compensated. In short: this acquisition of GET will cost somebody money somewhere.

Following this rather trivial train of thought (if I already lost you, I would advise against reading further) one might start pondering questions like: Who is actually paying for this GET acquired from the open market? What purpose does it serve in the process of ticketing? Where does the GET go after it is used and the event is over? What does a decentralized blockchain ledger add to this ticketing feature? What does the GET ERC20 token specifically add?

These are all valid questions. After all, businesses tend to only pay money for services that allow them to make more revenue or improve their profit margins themselves. As such, there must be a solid economic reason for these companies to acquire GET and use the functionalities offered by the protocol.

The mechanisms and logic behind this economic reason for ticketing companies to use the GET Protocol are what this token economics blog series and the GET Protocol yellow paper are all about.

While the premise and goal of this economic exploration might seem simple at face value, the actual formalization and eventual realization of a sound and stable economic model clearly aren’t. Governments, central banks and academia have been trying to perfect economic policy for ages and we still haven’t come close to a perfect system. Case in point: economic crashes, hyperinflation, bail-outs and societal unrest due to monetary policy.

Enough for the introduction, let me show you the hard stuff.*

*this line doesn’t work as a pick-up line in a bar-setting. Trust me on this one.

About our iterative approach

The usage of blockchain in the context of smart ticketing is uncharted territory. Tokenization and the fundamental value of utility tokens are also fields with few comparative data-sets. Because of this there are no ‘tried and tested’ economic models available that we can plug our ticketing solution into and be on our way. A lack of empirical data and comparable use-cases isn’t a reason to hold back on innovation. It just means that we will have to collect the data ourselves and draw conclusions on the effectiveness of the utility models and mechanisms accordingly. This way of approaching a problem, starting with an ideal outcome in mind and reverse engineering it back to the game’s players, is called reverse game theory, aka Mechanism Design.

What makes economics hard is the fact that the actors (players of a certain economic game) we are capturing in a model are all unique Homo sapiens (or companies that are led by this type of ape). These entities all act on what is referred to as ‘private information’ in the game theory field (i.e. information relevant to the game’s outcome that you as architect aren’t aware of). All actors thus have unique incentive functions (i.e. different business models) guiding their decisions. We can’t read the collective minds of all actors involved in every event-cycle, yet (still waiting on that mind-reading ICO, hit me up if you can get me an allocation in the pre-sale).

While humans generally act rationally, I think you will agree that this is not always the case. As such we have to account for random and counter-intuitive behavior. The outcomes resulting from these decisions shouldn’t break the system, even if they are extremely rare. The protocol should be formally certain that none of the outcomes result in a ‘failed’ event-cycle for any of the actors. Ever.

We already know a lot about the ticketing industry. Based on this practical experience we have several mechanisms of which we are fairly certain that they will improve (i.e. make more efficient) the outcomes of the economic game of ticketing.

Sculpting the GET Protocol sales pitch

If the GET Protocol wants to gain a foothold in the rather mature market of event ticketing we’ll need more than projections or promises. Those don’t hold up in a sales negotiation, especially with hawkish ticketing companies on the other side of the table. What we need is a data- and metrics-driven pitch based on real-life examples. The goal is to be able to state: ‘We know from experience that GET Protocol feature X will improve the show-up rate of your event by Y%’. Or, even better: ‘After 10 event-cycles on the GET Protocol our current users, on average, decrease their re-marketing costs per attendee by a minimum of 10%’, thus increasing the overall profits of these organizers. That is the goal here. You get the point.

So how do we get from cool sounding ideas to stone hard revenue improvement metrics & projections? Easy: mathematical models and a guinea pig ticketing company to test the models and validate their effectiveness.

Somebody has to be the first to take their solid ship into the volatile blockchain waters. Might as well be us. Failure is temporary, glory is eternal.

GUTS Tickets is taking the plunge

As the protocol has a fully operational ticketing company as its launching client, we have enough to get this plane in the air; we’ll build the rest while we are airborne. Concretely this means that we will incrementally add more and more use cases for our token and the blockchain in general. If we introduce a new model this doesn’t mean the previous one is void; it just means the new model is stacked on top of the previous utility model(s). The goal is to build a utility skyscraper!

TLDR: Economics is a tough field. Applying economic theory in the crypto sphere is even harder. Therefore we will start by applying simple utility models, collect data, improve based on what we learn from the outcomes and fine-tune accordingly. Reflect. Repeat.

2. The GET Protocol mandate

In earlier blogs we explained there are fundamental flaws in how the ticketing industry is set up. The sector is very inefficient (with 30% of tickets being resold above fair value, and all re-sale profits exiting the ecosystem). The sector’s current set-up pushes the costs of these inefficiencies on to the fans.

We believe a transparent ownership registry of tickets with an auditable value & revenue trail will ensure all actors act morally and fairly. Ticketing and blockchain are a perfect marriage here, as the technology excels at processing & registering cryptographically secure transfers of digital asset ownership based on certain criteria (like max re-sale margins, ticket validity and overall network state).

- The GET Protocol will be used by ticketing companies, consumers, promoters and decision-makers in this industry all over the world. All these actors need to have a place in this protocol and need to be able to add their value without disrupting elements of the industry that work well. The protocol aims to decentralize only what benefits from decentralization & its inherent transparency, maximizing added value and minimizing usage costs for the actors using the protocol for what it is intended to facilitate: fair and transparent ticketing.

- Incentives and benefits of early stage GET holders (i.e. speculators) and later stage GET buyers/users (i.e. event organizers) should be fairly aligned. Adoption is only accomplished if GET usage is as beneficial for the users of the token as it is for the speculators of the token (read the Token Discount Model paper for context).

- The protocol should be able to comply with any local law in the jurisdictions in which it is used. This means that ticketing companies around the world should be able to use only those GET Protocol functionalities that are compliant with their applicable local banking, trade and consumer protection/ownership laws. In short: the protocol should offer a buffet of functionalities for ticketing companies to pick and choose from, suiting all business models and jurisdictions.

Mandates and wish-lists are only useful if there is a quantitative metric to which they can be held accountable. In our eyes this mandate is best reflected in the goal for the GET Protocol to be predictable in its use by organizers, and therefore to be considered a stable and reliable infrastructure for ticketing companies to build value-adding businesses on top of. All over the world.

Learning from history

While building a decentralized protocol has little to do with managing a central bank, we do share some mandate end-goals with these banks. While there are several schools of thought in monetarism, there seems to be common ground in the premise that economies benefit most from stability for the actors within the economy (allowing entrepreneurs to take risks and innovate, create value, jobs & moons). Stability doesn’t mean that a currency’s (relative) price will stagnate, or that overall production should plateau. It mainly means the economic environment should stay within a predictable margin. While I am personally no fan of central banks, it would be foolish not to review their mandates (Mandate FED), their policies (ECB monetary policy), the results of these policies (Paper in the Journal of Monetary Economics) and external reflections on the long term effects of these policies (Athanasios Orphanides FED mandate paper). Warning upfront: sources are dry as ****.

Scratching the surface of economic theory

A high velocity of the protocol’s native asset (i.e. actors in the protocol don’t hold on to GET after usage but immediately convert the crypto into FIAT) creates an unstable, volatile and unpredictable ecosystem. The cause of this chaos is that the average hold time is low and the amount of GET in circulation has a high variance/range. Controlling for velocity is therefore important (this was already established in the first blog on token economics). For more information on the velocity problem read up on this CoinDesk/Multicoin Capital article.
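To make the link between hold time and velocity concrete, here is a minimal Python sketch. It assumes the common approximation that velocity over a period is roughly the length of the period divided by the average hold time. The function name and all hold times are hypothetical and only serve as illustration; they are not measurements from the protocol.

```python
from statistics import mean


def velocity_from_hold_times(hold_times_days, period_days=365.0):
    """Approximate velocity as period length / average hold time (an assumption)."""
    average_hold = mean(hold_times_days)
    if average_hold <= 0:
        raise ValueError("average hold time must be positive")
    return period_days / average_hold


# Hypothetical actors who convert back to FIAT within a few days of usage.
quick_converters = [1, 2, 3]
# Hypothetical actors who hold on to their GET for roughly ten weeks.
patient_holders = [73, 73, 73]

print("velocity, quick converters:", velocity_from_hold_times(quick_converters))
print("velocity, patient holders:", velocity_from_hold_times(patient_holders))
```

The shorter the average hold time, the more often the same token changes hands in a year, which is exactly the behavior the velocity reduction mechanisms are meant to dampen.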

Luckily a ticketing protocol is less complex than controlling the currency of a region’s economy. The whole point of using a protocol with a native token, as opposed to using only ether for blockchain ticketing, is that in a protocol with a native token (GET) the system is able to set certain rules for transacting and interacting. For example, a system can assert control by white-listing actors, setting staking requirements for usage of the protocol’s contracts or offering usage discounts to actors. There are many more such mechanisms that assert control and steer outcomes.

It comes down to controlling how and when a ticket is transferred **within the GET Protocol and who benefits from the transaction.** This allows us to more effectively restrict the flow of GET into the protocol, creating an external (open market) and an internal (event-cycles) environment. The value of these internal rules will be formalized further moving forward. Open market transfers of GET can’t be controlled or restricted in any way by these policies.

Modelling effects of mechanisms on the tokens velocity

As internal stability and predictability are paramount for any consumer-facing service/protocol we need to tackle this velocity problem head on. In its conclusion the first blog listed 5 velocity reduction methods that would ensure actors within the GET Protocol hold on to their GET after usage in a ticketing cycle.

In the first blog on token economics we introduced the concept of the equation of exchange and the importance of velocity control. In short we concluded that conversion from the token into FIAT should be controlled as without any control mechanisms risk-averse actors can create a token economy where the conversion-rate to FIAT is very high.

Unfortunately the model isn’t complete with just the equation of exchange. While the equation is correct from a high level point of view it is far from perfect when trying to quantify the effects of certain protocol mechanisms in practice (i.e. predict the effectiveness of velocity reducing mechanisms). Its main flaw comes from the fact that when used in the context of modelling the value of crypto assets (and not FIAT money/economies) the equation becomes circular. Let’s quickly cover the formula again to refresh your memory.

The equation of exchange isn’t the silver bullet

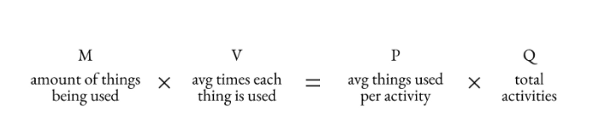

The equation of exchange rephrased to a more utility-focused description of demand.

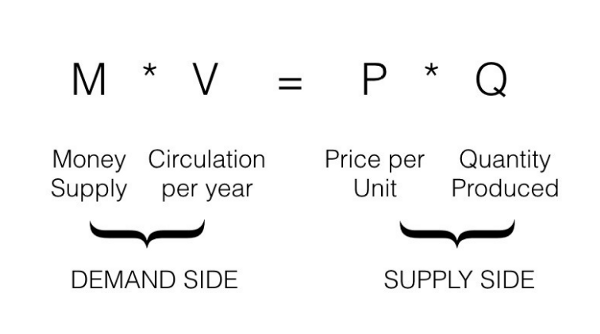

The Equation of Exchange was originally formulated by the economist Irving Fisher in the early 1900's. The equation describes the relationship between the demand side (M * V) and the supply side (P * Q) for the monetary base of a currency region.

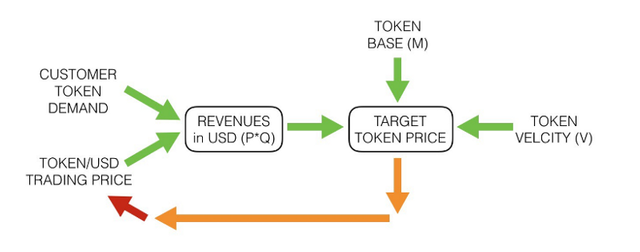

It should be clear to the reader that we differentiate between the external market of the protocol (i.e. the open market, over which we have no control) and the internal market (i.e. the event-cycles, their ticket market and the actors). These are two entirely different economies. While the external market is affected by the pull (demand) for GET coming from the internal market, this pull is controlled and set by the added value in the internal market. As the internal market of the GET Protocol has this control, this aspect of the protocol is the most interesting to model and formally describe.

Figure X MV = PQ (Image source: Austere Capital). More background information about the equation of exchange(SOURCE).

For those that are a bit lost, let’s go over the variables again in the context of ticketing in the GET Protocol.

- M — The total asset base. In our case this is all the GET that is being used, staked or held by actors within the protocol.

- V — The velocity of GET within the protocol. How many times a unit of an asset is spent, used or staked/locked by an actor within the protocol.

- P — The amount of work/utility a token can perform for an actor within the protocol. The more work a single GET can perform, the higher its value for the holder.

- Q—The total amount of tickets and event cycles occurring within the protocol.
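As a rough illustration of how these four variables hang together, the sketch below rearranges MV = PQ in Python. The function names and all numbers are hypothetical, and the units are deliberately left abstract; it only shows that fixing any three variables pins down the fourth.

```python
def implied_velocity(m: float, p: float, q: float) -> float:
    """Solve M * V = P * Q for V."""
    if m <= 0:
        raise ValueError("the asset base M must be positive")
    return (p * q) / m


def implied_p(m: float, v: float, q: float) -> float:
    """Solve M * V = P * Q for P."""
    if q <= 0:
        raise ValueError("Q must be positive")
    return (m * v) / q


# Hypothetical inputs, not protocol data.
M = 5_000_000    # GET used, staked or held within the protocol
P = 2.0          # utility delivered per unit of Q (abstract units)
Q = 10_000_000   # tickets and event-cycle interactions in the period

V = implied_velocity(M, P, Q)
print("implied velocity:", V)
print("P recovered from M, V and Q:", implied_p(M, V, Q))
```

Note that the second call simply hands back the P we started with. That is fine for bookkeeping, but it already hints at why the identity alone cannot predict anything.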

Equation dynamics & assumptions

If one adopts a theory from a different field it is important to review the assumptions made by the creators of said theory to verify whether these assumptions also hold up in our use case. In the case of the equation of exchange we need to review whether monetary policy is comparable with protocol policy.

When looking at the formula from a central bank point of view there is only one variable the bank can influence directly; the money supply(M). By printing extra money a central bank is able to trigger inflation (P) making the economy more

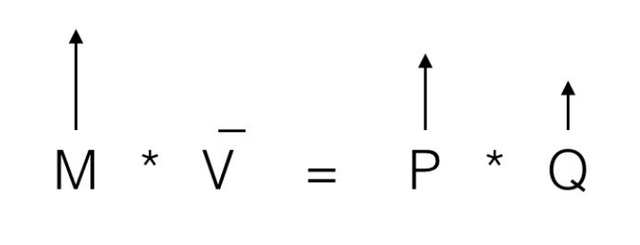

competitive (i.e. cheaper) and thus increasing Q rather directly. Velocity in the context of both quantitative easing or ordinary monetary policy (i.e. printing more money) is deemed to stay unchanged in the short term. Oops. Conclusion: due to this ‘V doesn’t change’ assumption the model on its own is rather useless in predicting changes in velocity.

Figure 2. By increasing the money supply a central bank directly increases P due to inflation of the currency, thereby boosting Q. Central banks can’t directly influence V in the short term and assume it to be inelastic, as they have no direct mechanisms at hand to make the actors in an economy circulate money faster.

Modelling effects on velocity

The equation (and the theory around it) seems to be built upon the assumption of inflation and thus the dynamic characteristics of the money supply(M). The problem is that crypto is generally built upon the idea that the ‘money/token/coin supply’ (M) is either capped(cannot change after minting, like with GET) or has a scheduled decreasing release (like Bitcoin). In other words crypto tends to prefer the deflationary train of thought.

Summary: Central banks make use of control tools that are not completely compatible with what we have at our disposal. Check this blog for a more complete analysis of this conclusion.

When using this model to quantify certain velocity reduction measures in the GET Protocol we run into another problem; circularity.

Circularity is bad!

We need to be wary of being completely reliant on the equation of exchange, as the equation was originally formed to model a different ecosystem than the one we are trying to model.

The MV = PQ equation is what’s called an identity equation: an equation constructed such that both sides are always equal. In this case, the sum of all purchases in an economy ought to equal the sum of all sales. MV represents the former, or the number of times money is ‘spent’. PQ represents the latter, or the sum of all production sold.

We need to be cautious in using MV = PQ free of its original context and constraints. The equation can be circular, as the terms aren’t exactly independent of each other. (SOURCE)

Figure 3. A crypto protocol is expected to generate FIAT revenues by buying and selling tokens at the traded token/USD price all year long. The crypto MV = PQ equation turns right around and **uses this very FIAT revenue** figure to try to determine a target token/FIAT price. This means that both the left side and the right side of the formula use the trading price to ensure the equation balances out. This is circularity and makes the formula in its current form useless in predicting velocity.

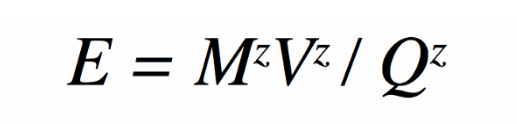

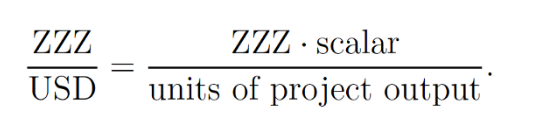

E is the price in USD of token ZZZ.

Looking at the notation of all the variables in this correct-looking formula one will find a flaw.

For the formula shown above to be a correct specification, the units on both sides of the equation have to be the same as is the case with equation of exchange. However, USD does not appear anywhere on the right hand side of this formula.

**Summary:** Without an independent means of calculating velocity the equation of exchange will not provide any insights, because the variables in the equation are not independent of each other. If you want to dive deeper into this conclusion read up on this blog on the quantity theory of money for tokens.
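The circularity can be demonstrated with a few lines of Python. This is one simplified reading of the argument, with hypothetical function names and made-up numbers: velocity is first measured from FIAT volume and market cap (which already contains the token price), and is then plugged back into the equation to ‘predict’ a price.

```python
from math import isclose

TOKEN_SUPPLY = 20_000_000   # hypothetical circulating supply
FIAT_VOLUME = 10_000_000    # hypothetical yearly transaction volume in USD


def observed_velocity(fiat_volume, token_price, token_supply):
    """Velocity measured as FIAT volume / market cap; the price is already baked in."""
    return fiat_volume / (token_price * token_supply)


def price_from_exchange_equation(fiat_volume, velocity, token_supply):
    """Token price 'predicted' by rearranging MV = PQ with the velocity above."""
    return fiat_volume / (velocity * token_supply)


def round_trip(token_price):
    """Measure velocity at a given price, then predict the price from that velocity."""
    velocity = observed_velocity(FIAT_VOLUME, token_price, TOKEN_SUPPLY)
    return price_from_exchange_equation(FIAT_VOLUME, velocity, TOKEN_SUPPLY)


for assumed_price in (0.05, 0.50, 5.00):
    recovered = round_trip(assumed_price)
    print(f"assumed {assumed_price:.2f} USD -> 'predicted' {recovered:.2f} USD")
    assert isclose(recovered, assumed_price)
```

Whatever price goes in comes straight back out, so the exercise carries no information. Any useful prediction needs a velocity estimate that does not depend on the price.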

Ok.. How to model and predict effects on velocity then?

In conclusion: we should find a way of estimating/calculating the projected velocity (or change in velocity) of the GET Protocol that is independent of the P * Q side of the equation. That way we can prevent the equation of exchange from becoming circular. I have used, tested and modeled a wide array of approaches. To mention a few:

- Calculating velocity with queuing theory (will not be covered in this blog, if ever).

- The Baumol-Tobin model to calculate velocity in a non-circular manner.

- A more expansive version of the Baumol-Tobin model to calculate velocity, also accounting for staked/locked tokens and alternative investment opportunities.

- The network-effect approach (also called the Evans approach) to velocity.

All viable approaches will be covered in future blogs, and if not there then certainly in the yellow paper of the GET Protocol. For starters I will describe the simplest means of independent velocity approximation: using the Baumol-Tobin model and applying it to the crypto protocol use case.

3. Calculating velocity with the Baumol-Tobin model

In this blog we are not going to cover why actors hold on to GET. For simplicity this behavior is assumed here (no worries, future blogs will cover this aspect in great detail). As GET costs FIAT money, holding on to GET is a deliberate, rational choice not to convert back to FIAT. Luckily for us there is an entire field surrounding the cost of holding non-cash inventory. Its core model is called the economic order quantity (EOQ), and it generally applies to physical items with holding costs like warehousing.

In the case of coins and cryptoassets, we don’t really have physical holding costs, but we do have the opportunity cost of keeping that money somewhere else where it can earn interest. So we need a refined version of EOQ that uses the interest rate as the holding cost.

For this calculation we use the Baumol-Tobin model, a model which uses the interest collected on liquid money and the transaction costs involved in converting this money into a particular asset. This blog is long enough as it is, and there is more than enough material online describing the model, so if you are interested in its applications and the history surrounding it, Google it :)!
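For readers who would rather not leave the page, the textbook form of the Baumol-Tobin trade-off can be sketched as follows. The symbols are generic textbook shorthand and an assumption on my part, so they will not necessarily match the notation in the figures of this blog: $Y$ is the total value to be converted over the period, $N$ the number of conversions, $F$ the fixed cost per conversion and $i$ the interest rate forgone on value that has already been converted.

```latex
\begin{align}
  % Total cost of converting in N equal batches: transaction costs plus forgone interest
  C(N) &= F\,N + i\,\frac{Y}{2N} \\
  % Minimising C(N) over N gives the optimal number of conversions
  N^{*} &= \sqrt{\frac{i\,Y}{2F}} \\
  % Average balance held in the converted asset at the optimum
  \bar{M} &= \frac{Y}{2N^{*}} = \sqrt{\frac{Y\,F}{2\,i}}
\end{align}
```

The square root is the key feature: doubling the value that flows through an actor does not double the balance that actor holds on average.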

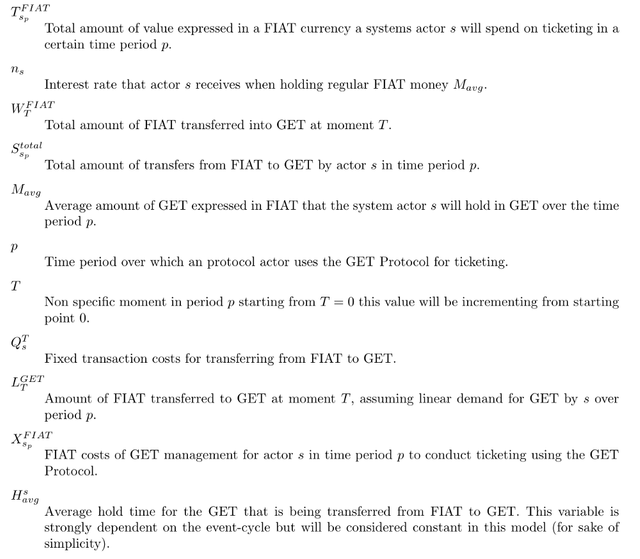

The variables of the simplified BT model applied to GET

The variables and formulas are said to be simplified because they make assumptions (i.e. considering certain variables to be static, predictable etc.). We’ll zoom in on these assumptions in a future fractalnomic zoom.

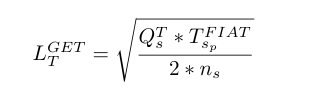

Logic formula 1

If a ticketing company chooses to use the protocol they will do so because they know it will add value to their business in a certain time period. This value is expressed (for their accounting department) in FIAT money. In order to access this added value they will convert FIAT to GET. But not all at once. After all, holding FIAT is preferred, as FIAT money in the bank will accrue interest and will make the company more solvent as a whole. As such we assume they will convert the minimum amount of FIAT to GET, maximizing the added value of staking/using GET and minimizing the interest and risk costs involved in converting to GET and holding crypto.

Amount of FIAT transferred to GET

Formula 1. Assuming a continuous demand for GET over time period p.

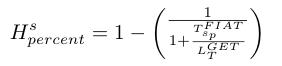

Logic formula 2

In a more general sense it is interesting to describe and model what percentage of all the added value of using the protocol on a yearly (or periodic) basis is held in GET at any given time. This metric will heavily correlate with the protocol’s internal velocity.

Holding percentage of added value in GET

Formula 2. Assuming a continuous demand for GET over time period p.
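To get a feel for both quantities, here is a minimal Python sketch based on the textbook Baumol-Tobin square-root rule. It assumes that the added value per period plays the role of the total amount to convert, that each FIAT-to-GET conversion carries a fixed cost, and that velocity can be read as added value divided by the average balance held in GET. All names and numbers are hypothetical, and this is not necessarily the exact form of Formula 1 and Formula 2.

```python
from math import sqrt


def optimal_conversions(added_value, interest_rate, conversion_cost):
    """Cost-minimising number of FIAT-to-GET conversions per period (textbook rule)."""
    if min(added_value, interest_rate, conversion_cost) <= 0:
        raise ValueError("all inputs must be positive")
    return sqrt(interest_rate * added_value / (2 * conversion_cost))


def holding_profile(added_value, interest_rate, conversion_cost):
    """Derive conversion size, average GET holdings and implied velocity."""
    n = optimal_conversions(added_value, interest_rate, conversion_cost)
    average_held = added_value / (2 * n)  # balance drains linearly between conversions
    return {
        "conversions": n,
        "fiat_per_conversion": added_value / n,
        "average_held_in_get": average_held,
        "holding_fraction": average_held / added_value,
        "implied_velocity": added_value / average_held,
    }


# Hypothetical organizer: 100,000 of added value per year, 2% interest on FIAT,
# and a fixed cost of 10 per conversion into GET.
profile = holding_profile(added_value=100_000, interest_rate=0.02, conversion_cost=10)
for name, value in profile.items():
    print(f"{name}: {value:,.2f}")
```

Under these assumptions a lower interest rate or a higher conversion cost means fewer, larger conversions, a larger share of added value held in GET and thus a lower velocity. That is the lever the velocity reduction mechanisms aim to pull.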

We are not there yet. But it’s a start. See ya later alligator.

Let’s do it live

As noted, such formulas add little value just by being stated in LaTeX or on Medium. They do however provide us with the certainty and confidence needed to take capital risks in testing these models in real-life ticketing use cases. It also keeps me off the streets, which is a win for society as a whole.

4. Upcoming blogs on token-economics

As already alluded to, this is quite a broad and nuanced subject. Most of the research and testing on everything covered was conducted a while ago. As I know it will scare everybody off if I drop all this theory at once, we will publish a token economic blog every 2 to 3 weeks.

- Part II — Quantifying value added by centralized and decentral innovation.

- Part III—The logic and strategy behind the GET Protocol open sourcing road map

- Part IV — The Fee Based Utility Model

- Part V — The Token Discount Utility Model

- Part VI — Circling back to the added value of decentralizing an industry.

- Part ∞ — Cover all the subjects.

So there is a lot of text to look forward to.

Show me the numbers

The whole point of the token-economic utility models is to create an ecosystem that is stable and predictable. Theories and frameworks are only part of the endeavour; the other part is quantitative models, both in Google Spreadsheets and as SimPy simulations using Python. The IPython notebooks will be made public in the GitHub repository of the GET Protocol at some point.

More about the GET Protocol

Any questions or want to know more about what we do? Join our active Telegram community, read our whitepaper, visit the website or join the discussion on the GET Protocol Reddit. Or get yourself a smart event ticket in our sandbox environment.