The Layers of the Internet

When you think of the internet, or the web, you probably think of the web pages you see when you open your browser. Images and text, and sometimes video, assault your senses, and that is what you perceive as "the internet". But underneath the pages and images, the internet is built on a stack of technologies, and most people only ever see the top of that stack. Even technical people rarely go very far down it.

The Layers

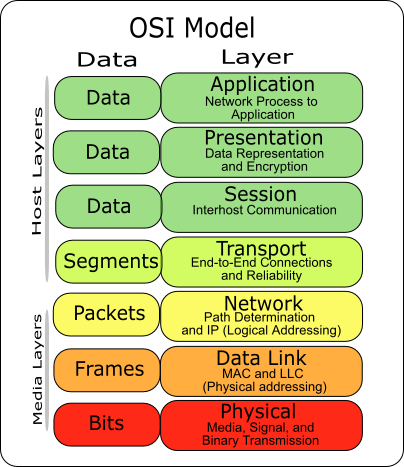

Networking is usually described as several layers of technology built on top of each other. At the top is the web page that you see. It is delivered using something called the Hypertext Transfer Protocol, or HTTP. This is the protocol you are referring to when you type "http://example.com/". HTTP connects your browser (the client) to the web host (the server) that provides the data your browser needs to render the web page.

HTTP is itself built on top of the Transmission Control Protocol, or TCP. TCP provides reliable, in-order delivery of data across a network that makes neither guarantee itself, at least most of the time (no protocol can give you a reliable connection when the cable is unplugged or the wireless is off). Two machines can maintain many separate streams between them by using different ports, of which there are 65,536 available. Some of these ports are reserved for specific uses. For example, the HTTP mentioned earlier uses port 80 by default. If you want a different port, the URL changes to specify the port: "http://example.com:8080/".
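
To make the relationship between a URL, a TCP port, and an HTTP request concrete, here is a minimal Python sketch. It only works out which host and port a client would connect to and what the first bytes of the request would look like; it does not open a connection. The helper names `endpoint` and `build_request` are made up for this illustration, and the HTTPS default of 443 is included only for completeness.

```python
from urllib.parse import urlsplit

# Default TCP ports for each URL scheme (80 is the one mentioned above).
DEFAULT_PORTS = {"http": 80, "https": 443}


def endpoint(url):
    """Return the (host, port) pair a client would open a TCP connection to."""
    parts = urlsplit(url)
    port = parts.port if parts.port is not None else DEFAULT_PORTS[parts.scheme]
    return parts.hostname, port


def build_request(url):
    """Build the bytes of a minimal HTTP/1.1 GET request for the URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {parts.netloc}",
        "Connection: close",
        "",  # blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


print(endpoint("http://example.com/"))       # ('example.com', 80)
print(endpoint("http://example.com:8080/"))  # ('example.com', 8080)
print(build_request("http://example.com:8080/"))
```

The request bytes are what would be written into the TCP stream once the connection to that host and port is open.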

TCP is built on top of the Internet Protocol, or IP. This is a packet-oriented protocol, where each packet has a source address, a destination address, and some data payload. When routing to the other machine, only the destination address is used to determine where the packet needs to be sent. IP has two versions in use, with the main difference between them being the size of the addresses. IPv4 uses 32-bit addresses, which allows for a maximum of roughly 4.3 billion addresses, while IPv6 uses 128-bit addresses. When Internet Protocol version 4 went into service on the ARPANET in 1983, there were only about 4.6 billion people on the planet and only a tiny fraction of those had computers. Now, there are over 7 billion people, some of whom have multiple computers connected to the internet.
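
The address-space arithmetic is easy to check. The short Python sketch below uses the standard `ipaddress` module; the two example addresses come from ranges reserved for documentation and are only there for illustration.

```python
import ipaddress

# Number of distinct addresses each version can express.
ipv4_total = 2 ** 32
ipv6_total = 2 ** 128

print(f"IPv4 addresses: {ipv4_total:,}")  # IPv4 addresses: 4,294,967,296
print(f"IPv6 addresses: {ipv6_total:,}")

# An address is just a number of the right width, written in a friendlier form.
v4 = ipaddress.ip_address("192.0.2.1")
v6 = ipaddress.ip_address("2001:db8::1")
print(int(v4), v4.max_prefixlen)  # 3221225985 32
print(int(v6), v6.max_prefixlen)
```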

IP is built on top of a variety of link-level protocols, but the one I'll be discussing is Ethernet. Originally, Ethernet used a shared physical medium on which everything was broadcast. Every terminal would receive all the packets, but would then discard everything not intended for that particular terminal. This worked for short distances, those not more than a couple hundred feet. Because it was a broadcast protocol, all the terminals had to be reachable within a certain amount of time, and the speed of light limits the maximum distance that information can propagate in a given time. Later, repeating hubs and then switches came along. A hub forwards every packet out of every interface other than the one it was received on. A switch keeps track of which terminals are present on each interface and forwards the packet only to the interface that terminal is on.
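
The "keep track of which terminal is on which interface" behaviour can be sketched in a few lines of Python. This is a toy model, not how any particular device is implemented: the class name `LearningSwitch` and the single-letter addresses are made up, and real hardware also expires old table entries.

```python
class LearningSwitch:
    """Toy model of a switch that learns where each terminal lives."""

    def __init__(self, num_ports):
        self.num_ports = num_ports
        self.table = {}  # terminal address -> interface it was last seen on

    def receive(self, in_port, src, dst):
        """Handle one packet and return the interfaces it is sent out of."""
        # Remember which interface the sender is reachable through.
        self.table[src] = in_port
        if dst in self.table:
            out_port = self.table[dst]
            # Nothing to do if the destination is on the incoming interface.
            return [] if out_port == in_port else [out_port]
        # Unknown destination: fall back to sending out of every other interface.
        return [p for p in range(self.num_ports) if p != in_port]


switch = LearningSwitch(num_ports=4)
print(switch.receive(0, src="A", dst="B"))  # [1, 2, 3]  B not yet known
print(switch.receive(1, src="B", dst="A"))  # [0]        A was learned above
print(switch.receive(0, src="A", dst="B"))  # [1]        B is now known too
```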

From there down, it is all electronics and physics until we reach the limits of human knowledge.

Another Way

This is how the internet is built now, but it is not the only way it could be built. Replacements for several of these layers are currently in development, each with its own advantages (and drawbacks), and each aiming to replace or supplement the technologies currently in use. Here are a couple of them:

InterPlanetary File System

IPFS is a peer-to-peer (P2P) content distribution network (CDN) for static files. It is built upon the ideas of BitTorrent and Git. Each file in the system gets stored under a hash of its contents, and this hash is used to fetch the document instead of a server+path pair. Because of this, it doesn't matter where the data comes from. Any server with the data can serve it, not just the original server.
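
Here is a rough Python sketch of the content-addressing idea. It is a simplification rather than IPFS's actual format: a plain SHA-256 hex digest stands in for the real content identifier, and `Peer`, `content_key`, and `fetch` are names invented for this example.

```python
import hashlib


def content_key(data: bytes) -> str:
    """Derive the lookup key from the content itself (plain SHA-256 here)."""
    return hashlib.sha256(data).hexdigest()


class Peer:
    """A node that stores blocks of data under the hash of their contents."""

    def __init__(self):
        self.blocks = {}

    def put(self, data: bytes) -> str:
        key = content_key(data)
        self.blocks[key] = data
        return key


def fetch(key, peers):
    """Ask peers in turn and accept the first copy whose hash matches the key."""
    for peer in peers:
        data = peer.blocks.get(key)
        if data is not None and content_key(data) == key:
            return data
    raise KeyError(key)


origin, mirror, empty = Peer(), Peer(), Peer()
key = origin.put(b"hello, world")
mirror.put(b"hello, world")  # same content, so it lands under the same key

print(fetch(key, [empty, mirror]))           # served by a peer other than the origin
print(origin.put(b"hello, world!") == key)   # False: changed content, new key
```

Because the key is checked against the data on arrival, the client does not need to trust whichever peer happened to answer.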

Unfortunately, this ability to serve from anywhere comes with a drawback: mutable or dynamic content doesn't really exist in the system. If you change a file, the hash changes, and the new hash must be found somehow. IPFS has a feature that is supposed to help with this, the self-certifying IPNS namespace, but the implementation as of the time of writing is effectively broken, because it can take a couple of minutes to resolve IPNS addresses. When people will spend no more than 10-20 seconds on a given web page, this is a serious issue.

Notes:

- All the images in this post are stored on the IPFS network.

- All the Wikipedia articles referenced in this post are from a snapshot mirrored on IPFS.

CJDNS / Hyperboria

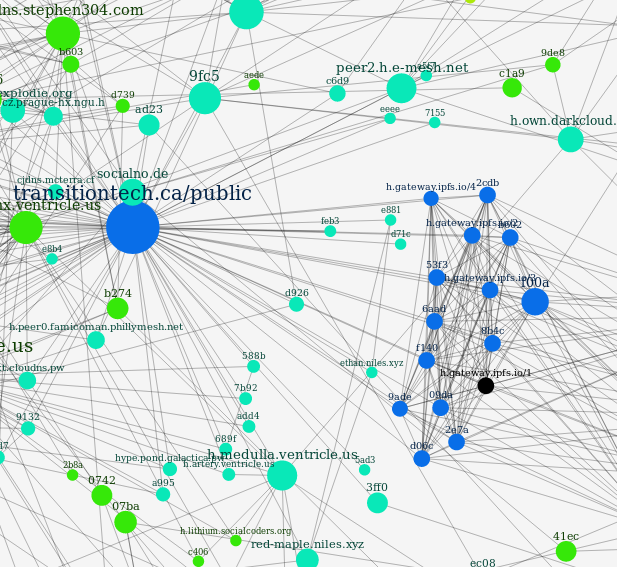

Network Explorer Hyperboria CJDNS Source Code

CJDNS is a mesh network routing protocol that uses cryptography for everything it can. All connections are encrypted by default, and each node's IP address is a 128-bit fingerprint hash of that node's public key. Once you have an IP address, a man-in-the-middle attack is impossible* by default. The routing table is stored in a distributed hash table (DHT).
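
To show what "the address is a fingerprint of the public key" means in practice, here is a hedged Python sketch. It assumes the scheme works the way I understand it: hash the public key twice with SHA-512, keep the first 16 bytes, and only accept keys whose address begins with `fc`. Treat those details as assumptions and check the CJDNS source before relying on them. The random 32 bytes are a stand-in for a real public key, and the function names are made up.

```python
import hashlib
import ipaddress
import os


def address_from_key(public_key: bytes) -> ipaddress.IPv6Address:
    """Derive a 128-bit address from a public key (assumed double SHA-512)."""
    digest = hashlib.sha512(hashlib.sha512(public_key).digest()).digest()
    return ipaddress.IPv6Address(digest[:16])


def generate_node():
    """Try stand-in keys until one maps to an address starting with fc."""
    while True:
        key = os.urandom(32)  # placeholder bytes, NOT a real key pair
        addr = address_from_key(key)
        if addr.packed[0] == 0xFC:
            return key, addr


key, addr = generate_node()
print(addr)
# Anyone holding the public key can recompute the address and compare.
print(address_from_key(key) == addr)  # True
```

The point is that an address cannot be claimed without the matching key: a node presenting a different key would hash to a different address.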

The routing protocol uses several layers internally: switching, routing, and encryption. At its base, each router is set up as a many-port switch, with port 1 being the node itself. Instead of using an IP address and a hierarchical routing table to get packets from one host to another, the exact path the packet will traverse is encoded in a switch label. The system can function with a partial routing table.

To get the paths between nodes, a distributed hash table is used to determine the switch label from the IP address. This is in contrast to the current internet, which requires every router without a default route to hold a complete copy of the global routing table, which is roughly 590,000 routes. Additionally, the routes are distributed in real time, and in a way that requires trusting that the routes are correct, which is not always a safe assumption. In 2008, Pakistan, in an attempt to block YouTube, configured a blackhole route that caused much of the internet to send YouTube's traffic to Pakistan, where it was discarded, instead of to the correct servers. In CJDNS, the network graph could be traversed in a secure manner thanks to cryptography.

Like IPFS, there is an outstanding issue with CJDNS: the horizon bug. The switch label used to route packets is a fixed length (64 bits), which forms a hard limit on the maximum number of hops, as each hop requires at least one bit. So far, this hasn't been much of an issue because the size of the network is small, at 570-590 nodes in total, but if it were to grow, this limit would become much more urgent to resolve.
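
The hop limit follows directly from packing a whole path into 64 bits. The Python sketch below is a simplified model, not the CJDNS encoding: it assumes every hop takes a fixed 4 bits, and it borrows port 1 as the "deliver to this node" marker from the description above. The constant and function names are made up.

```python
LABEL_BITS = 64   # fixed label size
BITS_PER_HOP = 4  # assumption for this sketch: every hop uses 4 bits
HOP_MASK = (1 << BITS_PER_HOP) - 1


def encode_route(ports):
    """Pack a list of outgoing port numbers into a single switch label."""
    if len(ports) * BITS_PER_HOP > LABEL_BITS:
        raise ValueError("route has too many hops to fit in the label")
    label = 0
    # The first hop must end up in the lowest bits, so pack in reverse order.
    for port in reversed(ports):
        if not 0 <= port <= HOP_MASK:
            raise ValueError(f"port {port} does not fit in {BITS_PER_HOP} bits")
        label = (label << BITS_PER_HOP) | port
    return label


def next_hop(label):
    """What one switch does: read its port from the low bits, shift the rest."""
    return label & HOP_MASK, label >> BITS_PER_HOP


# Leave via port 3, then 5, then 2; the final 1 means "this node".
label = encode_route([3, 5, 2, 1])
print(hex(label))  # 0x1253
while True:
    port, label = next_hop(label)
    print("forward out of port", port)
    if port == 1:
        break

print(LABEL_BITS // BITS_PER_HOP)  # 16 entries at most with these assumptions
```

With a fixed-size label, any destination further away than the label can describe is simply unreachable, which is the horizon described above.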

Notes:

- *Cryptography can technically be defeated by methods such as brute-forcing the keys. However, for the key sizes commonly used, breaking a single key would take decades even with the current computational capacity of the entire planet, provided there is no flaw in the key itself.

- IPFS has several of its gateway nodes on Hyperboria, as shown in the image.

What's Left to Do

There is still a lot of work left to do to have a fully secure internet system. In particular, a secure replacement for DNS is going to be required. Both CJDNS and IPFS are secure, but only so long as you can get the correct IP addresses or content hashes. If you can't get these from a secure source, a malicious actor could feed you data of their choice and eavesdrop on your communications.

IPFS works quite well for static content, but not at all for dynamic content. The web as it exists today is a combination of static and dynamic content, so some system would be required for server processing, as there are things that cannot be done with client-side scripting (such as periodic updates regardless of whether the user's computer is on).

There is currently no distributed, secure version of a search engine. An attempt at a P2P web indexer (YaCy, written in Java) exists, but it is mostly unusable, as only a tiny fraction of the internet is indexed. Indexing the entire internet requires large amounts of processing and data storage. IPFS could help with the storage, but the processing is missing.

Final Thoughts

I'm sure that I've left something out. The internet is one of the most complex systems ever created, of such a scale that it is impossible for one person to know everything about how it works and what it contains. I hope that something like what I've described is created, as it is more scalable, secure, and censorship-resistant than the current internet, and I suspect that those qualities are going to be needed much more in the near future.
