Artificial Intelligence (A.I.) Is on the Rise. Should We Be Worried About It?

As we all know, technology is advancing rapidly. The Internet is the perfect example of the liberalization of technology: through it, we now have access to almost anything we want to learn and understand. Technology has given us the gift of knowledge free of cost. We depend on technology more than ever before, and this dependency will only grow in the future.

For some people, technological advancement is a good thing, but for others it is a serious cause for concern. We are now moving toward the use of Artificial Intelligence in every possible field, and widespread A.I. development is near. In some areas it has already been implemented successfully, but it still operates in a controlled environment and is not yet available to the general public.

Now the question arises: should we be worried about the implementation of A.I. in the technology field?

Opinions on this concern are mixed. Let's discuss them in detail.

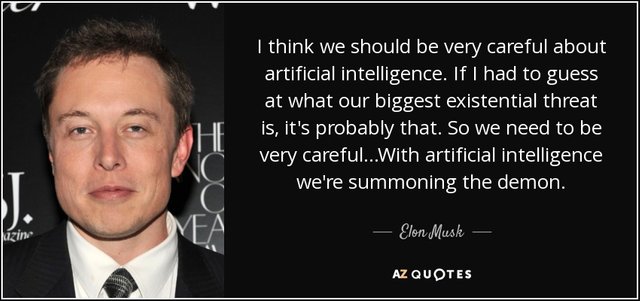

A few weeks ago, Elon Musk, the founder of Tesla Motors and SpaceX, raised his concerns about the advent of Artificial Intelligence. He said that A.I. could surpass humans and lead us to our own destruction.

Generally speaking, A.I. is doing good for everyone. It is upgrading computers, teaching them how to drive a car, and even enabling them to perform delicate surgery on a human without the guidance or intervention of a human being.

But Mr. Musk's concerns are also real. He is not someone babbling out of boredom. He is the CEO of one of the world's leading tech companies, which is incorporating A.I. for self-driving purposes. Mr. Musk urged the U.S. government to keep an eye on the development of A.I., because if it is left uncontrolled, without protocols to govern its actions, A.I. could prove harmful to human beings.

Another tech giant also weighed in on this concern. Mark Zuckerberg, the founder of the social media giant Facebook, hit back at Mr. Musk, saying, "It is pretty irresponsible to drum up doomsday scenarios in the name of A.I."

Well, if we take Mr. Musk's side, there are some issues he addresses quite correctly, and they need everyone's attention. There must be legal accountability if something terrible happens at the hands of Artificial Intelligence. For example, if an A.I. does something awful while performing an operation, who would be responsible for the damage? If a self-driving car causes an accident, what legal rules exist to take action against the A.I.? The implementation of A.I. will also eliminate the jobs of millions of people. Who would be responsible for that?

Additionally, A.I. is being developed by a small group of people with a specific way of thinking, and their thinking may not match society's. What would happen in that scenario?

I believe there is more to the development and implementation of A.I. than just driving cars and performing operations. Yes, I agree that it has benefits, but we cannot ignore the risks that come with it. Without specific protocols, it could endanger the existence of humanity. Mr. Musk urged the government to put protocols in place so that A.I. would only do what it is designed to do, along with rules to control its actions.

Let's see how the government reacts to these statements and what the future holds for A.I. What do you think about A.I. development and its use in daily life?

Tell me in the comment section.

I feel that, in general, people are looking for the easy way. What this mindset misses is the human touch. Automation has many benefits, such as the phone I'm typing on right now. But it is also indicative of a lack of face-to-face social interaction. I call it the walking dead effect.

AI use is a double-edged sword, with efficiency on one side and human interest on the other. Science fiction may be just fiction until it's not...

Steem the Dream!

There is no reason to be afraid of the concept of artificial intelligence. That would be like being afraid of a mathematical equation. What we should worry about is implementation. Malicious actors can use autonomous systems to wreak havoc, and as the world becomes more integrated with the internet, it is becoming easier for bad actors (black hat hackers) to use these algorithms with devastating consequences. Bugs pose an additional risk as well.

I can see this going in both directions, but I also think our idea of what AI will be is very limited.

Personally, right now, I don't think "killer robots" or something similar are very likely. Unlike a lot of sci-fi (as much as I love the re-imagined Battlestar Galactica), I think AI will exist online in some way. With a fully functioning AI, I imagine its first order of business would be to secure its existence. So while we would see physical robots building the infrastructure, the "brain" would exist online.

The question, then, is whether AI would consider human beings a threat. Would AI be any more aware of us than we are of insects, or would it be a collaboration between two species, to use that word?

I think the more interesting questions could be:

a) Why would AI find human beings threatening, and are those assumptions well-founded?

b) How would AI take action if it felt threatened by human beings? Close off the web? Eliminate money and wealth? Outright war?

c) Would AI function as individuals, as one large hive mind, or as several hive minds across various networks? If it works as a hive mind, would it eliminate drains on power and storage that it considers unnecessary in order to sustain itself, and in the process affect human beings? (Again, why deal with money if you're a hive mind that, from your own AI perspective, owns everything collectively? Why not use that space for other purposes? What about the environment? Is the safety of the planet of interest to an AI, and if so, will it weigh it at the same level as human beings?)

There is probably a lot more to ponder here. AI is incredibly interesting, and as far as "use" goes, I think that term is only meaningful as long as we can control AI. The second we develop AI that is truly self-sufficient, the game will change.

Not sure if this answers your question, but it did get a lot of thoughts going on my end.