How Machine Learning Can Fight the Fake News Frenzy

“Falsehood flies, and the truth comes limping after it.”

~ Jonathan Swift (1710)

At Stabilitas, we use Artificial Intelligence (AI) to understand what is happening in the world and we pass this insight on to our users. Our customers are security professionals, and they use the news as one way to understand events that may impact their people.

To achieve global awareness, we analyze and evaluate a near real-time cocktail of unstructured data. The heterogeneous ingredients in this cocktail include authoritative government advisories, weather forecasts, Twitter trends and a huge corpus of open source news reporting. For security managers in “no fail” positions, fake news isn’t just an annoyance — it can mean losing credibility with stakeholders, and even lead to injury or death.

As “fake news” has itself become a trending news topic over the last two weeks, it has been interesting to see the many suggestions and approaches being offered to combat this spread of false information.

Photo Courtesy of Flickr user Jim Killock

Though pundits and media outlets have recently popularized the moniker “fake news”, the issue is more nuanced than the phrase suggests. In a recent interview with NPR, Vivian Schiller, the former head of Twitter’s news operations, described the many characteristics of “fake news” [paraphrasing]:

- Stories that are intentionally misleading

- Stories intended as serious journalism that get the facts wrong

- Statements by public officials that are wrong, but get reported

- Stories that are missing context or are laden with innuendo

We evaluate tens of thousands of pieces of unstructured data each day. To assess the accuracy of this data and filter out the satire, we use the following approaches in our processing pipeline.

Statistical Analysis

By constantly collecting and analyzing simple metrics about news reporting, we have built a baseline model for evaluating the reputation of news outlets. The metrics answer questions such as: How many pieces does a particular news outlet publish each day? How long has our system ingested reporting from a particular source?
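A minimal sketch of how the two metrics named above (daily publishing volume and length of ingestion history) could feed a baseline score. The `SourceStats` fields, the thresholds, and the scoring formula are all assumptions made for illustration, not the production model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SourceStats:
    """Per-outlet metrics; the field names are illustrative."""
    name: str
    first_seen: date         # first day the system ingested this outlet
    articles_ingested: int   # total pieces ingested since first_seen


def days_tracked(stats: SourceStats, today: date) -> int:
    """Number of days the outlet has been tracked, counting today."""
    return max((today - stats.first_seen).days + 1, 1)


def articles_per_day(stats: SourceStats, today: date) -> float:
    """Average number of pieces published per tracked day."""
    return stats.articles_ingested / days_tracked(stats, today)


def baseline_score(stats: SourceStats, today: date,
                   mature_after_days: int = 365,
                   max_daily_volume: float = 500.0) -> float:
    """Toy reputation baseline in [0, 1].

    Assumption: a longer tracking history raises the score and an
    implausibly high daily volume lowers it. Both thresholds are made up.
    """
    history = min(days_tracked(stats, today) / mature_after_days, 1.0)
    volume_penalty = min(articles_per_day(stats, today) / max_daily_volume, 1.0)
    return history * (1.0 - 0.5 * volume_penalty)
```

Under these assumptions, an outlet tracked for a week that publishes a thousand pieces a day scores far lower than one tracked for two years at a modest volume.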

Topic Modeling

We use statistical semantics to analyze plain text, identify semantic structure, and connect semantically similar documents. Combined with the machine learning approaches explained below, this is a powerful way to compare news pieces about similar topics and to build confidence in a report.

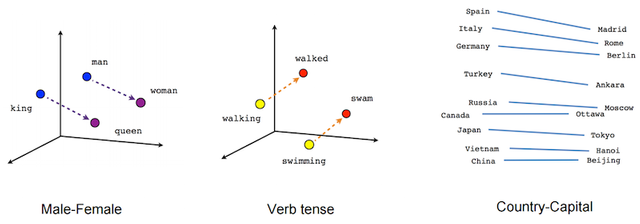

Vector Representations of Words — Linear Relationships
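One simple way to connect similar documents is to compare TF-IDF vectors by cosine similarity. The sketch below is a bag-of-words stand-in chosen for brevity, not the word-vector or topic model described here, and the function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep alphabetic tokens only."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Return one sparse TF-IDF vector (term -> weight) per document."""
    tokenized = [tokenize(d) for d in docs]
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    n_docs = len(docs)
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            # Smoothed IDF keeps weights positive even for ubiquitous terms.
            term: count * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1.0)
            for term, count in counts.items()
        })
    return vectors


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Made-up headlines: the first two describe the same event.
docs = [
    "Earthquake strikes coastal city, damaging buildings",
    "Buildings damaged after earthquake hits city on the coast",
    "Central bank raises interest rates again",
]
vectors = tfidf_vectors(docs)
same_topic = cosine(vectors[0], vectors[1])
different_topic = cosine(vectors[0], vectors[2])
```

Here `same_topic` comes out higher than `different_topic`, since the first two headlines share several terms and the third shares none. A word-vector model would also match "damaging" with "damaged", which plain term matching misses.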

Machine Learning — Pattern Recognition

We use supervised learning from labeled training data to identify word occurrences, language patterns, and the use of rhetorical devices in news reports. Each of these factors is factored into a baseline performance and reliability score for each news source.
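To make "supervised learning from labeled training data" concrete, here is a small multinomial Naive Bayes text classifier with Laplace smoothing. Naive Bayes is an illustrative choice, not necessarily the model in use here, and the four training sentences and their labels are invented.

```python
import math
import re
from collections import Counter, defaultdict


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes over word counts, with add-one smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.doc_counts = Counter()              # label -> number of documents
        self.vocab = set()

    @staticmethod
    def _tokens(text):
        return re.findall(r"[a-z']+", text.lower())

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = self._tokens(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def log_scores(self, text):
        """Unnormalized log-probability of each label given the text."""
        total_docs = sum(self.doc_counts.values())
        tokens = [t for t in self._tokens(text) if t in self.vocab]
        scores = {}
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # class prior
            for tok in tokens:
                score += math.log((counts[tok] + 1) / denom)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Invented training data, for illustration only.
train_texts = [
    "officials confirmed the storm closed two highways on tuesday",
    "the ministry said flights resumed after the inspection",
    "you won't believe this shocking secret they are hiding",
    "shocking miracle cure doctors don't want you to know",
]
train_labels = ["reliable", "reliable", "unreliable", "unreliable"]

classifier = NaiveBayesTextClassifier().fit(train_texts, train_labels)
label = classifier.predict("shocking secret cure they are hiding")
```

With this toy data, `label` is `"unreliable"` because every known word in the test sentence appears only in the unreliable examples. A real system would need far more labeled data and richer features, such as the rhetorical devices mentioned above.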

Machine Learning — Sentiment Analysis

Through sentiment analysis, we strive to understand the quantity of positive and negative views in each sentence, each paragraph, and overall in each report. In aggregate, these data points help us understand the tone and tenor of individual reports and media outlets. These metrics have allowed us to develop a model comparing the strength of sentiment to the reliability of the information being reported.
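A lexicon-based sketch of counting positive and negative terms per sentence and aggregating them over a report. The tiny word lists and the `polarity` and `strength` definitions are assumptions for illustration. A trained sentiment model would replace the lexicon lookup in practice.

```python
import re

# Tiny hypothetical lexicons; a real system would use a much larger resource.
POSITIVE = {"good", "safe", "calm", "success", "improved", "relief"}
NEGATIVE = {"bad", "attack", "fear", "crisis", "deadly", "outrage", "disaster"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def sentence_sentiment(sentence):
    """Return (positive_count, negative_count) for one sentence."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return pos, neg


def report_sentiment(text):
    """Aggregate sentence-level counts into report-level metrics."""
    per_sentence = [sentence_sentiment(s) for s in split_sentences(text)]
    pos = sum(p for p, _ in per_sentence)
    neg = sum(n for _, n in per_sentence)
    n_tokens = len(re.findall(r"[a-z]+", text.lower()))
    total = pos + neg
    return {
        "per_sentence": per_sentence,
        # Polarity runs from -1 (all negative) to +1 (all positive).
        "polarity": (pos - neg) / total if total else 0.0,
        # Strength is the share of tokens that carry sentiment.
        "strength": total / max(n_tokens, 1),
    }


result = report_sentiment(
    "The city was calm after the storm. "
    "Fear of a deadly attack caused outrage!"
)
```

The `strength` value is the kind of signal that could then be compared against a source's reliability, as the paragraph above describes.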

Machine Learning — Summarization

We use a convolutional neural network to sample salient concepts from each news report. This approach is based on recently published research by Edward H. Lee of Stanford, and allows us to distill long-form journalism down to key findings. We check these findings against summaries of similar pieces from different sources, and then assign a level of confidence to each report.
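The sketch below does not implement the neural summarizer. It only illustrates the cross-checking step, assuming summaries are already available. Key-term overlap (Jaccard similarity) stands in for whatever comparison is actually used, and the stopword list, the threshold, and the place name are invented.

```python
import re

# Small illustrative stopword list.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "and", "to", "was", "were",
             "is", "are", "after", "by", "at", "for"}


def key_terms(summary):
    """Set of lowercase non-stopword terms in a summary."""
    tokens = re.findall(r"[a-z0-9]+", summary.lower())
    return {t for t in tokens if t not in STOPWORDS}


def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def corroboration(summary, other_summaries, threshold=0.3):
    """Fraction of other sources whose summary overlaps enough with this one."""
    if not other_summaries:
        return 0.0
    terms = key_terms(summary)
    agreeing = sum(
        jaccard(terms, key_terms(other)) >= threshold
        for other in other_summaries
    )
    return agreeing / len(other_summaries)


# Made-up summaries about a fictional town.
confidence = corroboration(
    "Flooding closes main bridge in Riverton",
    [
        "Main bridge in Riverton closed by flooding",
        "Stock markets rally on earnings",
    ],
)
```

In this example one of the two other summaries shares most of its key terms with the target, so `confidence` is 0.5. Exact term matching treats "closes" and "closed" as different words, so stemming or embeddings would make the comparison more forgiving.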

Crowd-Sourcing

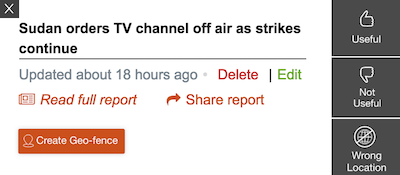

Stabilitas uses crowdsourcing as another way to determine whether breaking news reports are trustworthy. Users of our web dashboard and mobile apps vote information up or down based on its usefulness, and we build an anonymized profile of each user’s reliability. Mistakes happen, but the fact that users can be kicked off the platform (a system they pay to use) properly aligns incentives and encourages honesty in the evaluation of emerging information.

Harnessing the Crowd by Giving Stabilitas Users a Vote
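A rough sketch of how up and down votes might be weighted by each voter's track record. The `UserProfile` structure, the smoothing rule, and the opaque user IDs are assumptions for illustration, since the actual reliability profile is not described in detail.

```python
from dataclasses import dataclass


@dataclass
class UserProfile:
    """Anonymized voting record, keyed elsewhere by an opaque user ID."""
    agreed: int = 0   # votes that matched the eventual outcome
    total: int = 0    # votes cast on reports that were later resolved

    @property
    def reliability(self) -> float:
        # Laplace smoothing: a new user starts at 0.5 rather than 0 or 1.
        return (self.agreed + 1) / (self.total + 2)


def weighted_vote_score(votes, profiles):
    """Combine votes (user_id -> +1 or -1) into a score in [-1, 1]."""
    weight_sum = 0.0
    signed_sum = 0.0
    for user_id, vote in votes.items():
        weight = profiles.get(user_id, UserProfile()).reliability
        weight_sum += weight
        signed_sum += weight * vote
    return signed_sum / weight_sum if weight_sum else 0.0


def record_outcome(votes, profiles, report_was_accurate):
    """Update each voter's record once a report has been confirmed or refuted."""
    for user_id, vote in votes.items():
        profile = profiles.setdefault(user_id, UserProfile())
        profile.total += 1
        if (vote > 0) == report_was_accurate:
            profile.agreed += 1


# Hypothetical users: "a" has a strong track record, "b" a weak one.
profiles = {
    "a": UserProfile(agreed=9, total=10),
    "b": UserProfile(agreed=1, total=10),
}
votes = {"a": +1, "b": -1}
score = weighted_vote_score(votes, profiles)
```

Because user "a" has the stronger record, the combined `score` is positive even though the raw votes are tied. Calling `record_outcome` after a report is resolved keeps the profiles current.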

More than a century ago, yellow journalism presented a serious challenge to media transparency as editorial boards exercised control over much of the information consumed by the public. Today, misinformation, factual errors, and misconstrued context are the latest challenges facing free and open media. The constant creation of vast, unregulated new online information channels means that these problems will persist, but we are not without recourse.