[Programming] Beginning the Search for Discovery

I decided that today I'm going to tinker around with some code which may lead to a method for generating Discovery on the steem blockchain. No promises yet, but every new idea starts with experimentation.

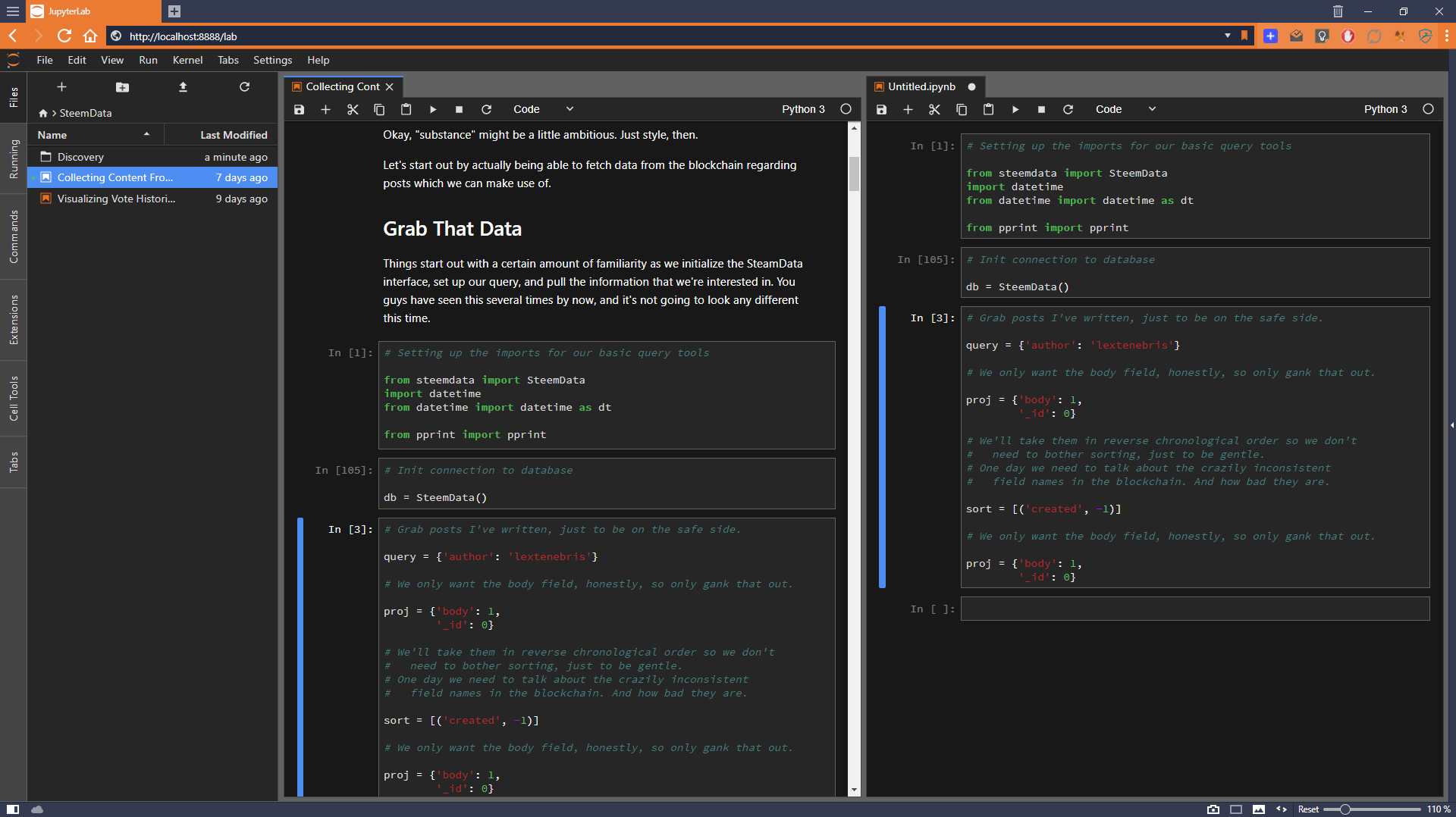

Frankly, I wouldn't even bother taking up this sort of thing if Jupyter Notebooks didn't exist – or I should say JupyterLab, now. They took the standard real-time editable Notebook interface and built a proper IDE around it, so that you can do multiple window management, text editing, and multiple views on the same document, all with the integrated data exploration tools from Notebooks.

In this case, I'm grabbing some code from one of my earlier posts in order to refactor it and rebuild it into a new post using entirely different tools. That's as easy as grabbing one of the blocks on the left and simply dragging it into the document on the right. Repeat until I have all of the stuff I need.

If you are a programmer and you're not using this, I hope you have an environment that is equally flexible to work in.

Oh, did you notice that it's all running in a browser window? That's right, it all lives inside your browser. (Okay, that's not completely true – there is a server program running on the backend on my system, and it interfaces through the browser. But that's not as impressive.)

Maybe I will come up with something through this. Maybe not. We'll just have to see.

I've built a couple tools that do basic word analysis (word cloud and sentiment) on a steemit account: https://evanmarshall.github.io/steemit-cloud/

You might be able to use this analysis to help people discover more content. For example, if you use and engage with certain keywords with high probability, you may want to see other posts that contain those keywords.

Similarly, you could use it to auto-follow people who talk a lot about things you're interested in.

I appreciate the tool reference, and I'll add it to my bag, but my current plan is to go a different direction with the analysis.

Right now I'm thinking I will extract the text of everything I have ever voted up, do some aggressive cleanup on it, break it into reasonable sentences with some nice filtering, and then treat that corpus as a single document. Then I'll hit it with latent semantic analysis from the gensim package to create a lexical/semantic eigenvector, and then use a nearness metric to figure out how "like" the things I've already liked any given document is.

This has the advantage of being able to update the LSA with new information without having to recompute the whole model, and should be relatively fast once that model is actually built. Doing all the text manipulation, stripping stuff, shifting around, filtering it – that's going to be, surprisingly, the slow part.
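The text preparation step can be roughed out with nothing but the standard library. This is only a minimal sketch, not the code actually used: the function names, the `MIN_WORDS` threshold, and the sample posts are all assumptions made up for illustration, and the sentence splitter is deliberately naive.

```python
import re

# Hypothetical threshold: sentences with fewer words than this are dropped.
MIN_WORDS = 4

def split_sentences(text):
    """Naively split text after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

def keep_sentence(sentence, min_words=MIN_WORDS):
    """Keep only sentences with enough alphabetic words to carry meaning."""
    words = re.findall(r"[A-Za-z']+", sentence)
    return len(words) >= min_words

def build_corpus(posts):
    """Pool the filtered sentences of every post into one flat list."""
    corpus = []
    for post in posts:
        corpus.extend(s for s in split_sentences(post) if keep_sentence(s))
    return corpus

# Made-up stand-ins for the text of up-voted posts and comments.
posts = [
    "Great post! I really enjoyed the section on sparse matrices. Thanks.",
    "Upvoted. The notebook workflow you describe is exactly what I needed.",
]
corpus = build_corpus(posts)
for sentence in corpus:
    print(sentence)
```

Short throwaway sentences like "Great post!" fall out at the filter, which is the kind of aggressive cleanup described above. A real splitter would also need to cope with abbreviations, ellipses, and markdown.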

I'm not really fond of "keyword searching" as a means of discovery. It is very difficult to hit the sweet spot between too many keywords, which don't actually give you enough content, and too few, which are overbroad. A semantic distance measure at least gives you a sort of general, multiaxis way to approach the problem.

It may not be a good one, but it's the one I'm starting with.

(In theory I could use some sort of neural net, but there is no good reason to tie myself to a single training event like that, and neural nets which get ongoing training are a real bear to code and deal with.)

Clearly I'm insane.

I certainly agree that an online algorithm will be much preferable to something that depends on large one-off runs.

It seems like you should also gather data from other users. Maybe a Netflix-like recommendation engine (https://github.com/Sam-Serpoosh/netflix_recommendation_system). That way it would be like an implicit follow. If someone has a similar engagement profile to you, you will be recommended content that they like.

All in all, it sounds like you know what you're doing and I'm excited to follow along for your updates.

See, here's the thing…

I don't care about other users.

That sounds pointed, but it is exactly true. If I wanted recommendations from other users, I would just accept the fact that up votes are some kind of crowd indicator. Of course, we know by looking at Trending and Hot that it's a broken measure. It clearly doesn't work.

But more importantly, the opinion of other users has nothing to do with my opinion. The whole idea here is that individuation is far more effective at bringing me things that I will like than looking at what other people like and assuming that they are like me.

The Netflix recommendation system starts with a basic assumption that your registered preferences are representative of what you really want. Then they look at the registered preferences of other people and try to find the ones that are nearest to your expressed preferences in their fairly limited measure space. Then they look for things which the people near you in preference space have found desirable, check against whether you've seen it and rated it, and go from there.

The reason that they use the intermediary of other people's preferences is that they don't have the technology, processing power or time that it would take to directly find features in movies that you have rated favorably. Image processing, audio processing – those are hard problems. It's much easier and less data intensive to look at the information which is easy for them to acquire: expressed ratings and preferences.

We don't actually have that problem. We could, if we wanted, create an eigenvector which describes the up vote tendencies based on word frequency of any individual's history. Then we could find the distance in our vector space of interesting words between those up vote tendencies.

But that wouldn't really be useful for actual discovery. It would only be useful for the discovery of content which someone else has already found and up voted.

I want to go the opposite direction. I want to be able to take a new post which no one has actually seen before and compare it to the eigenvector which describes the things I have historically liked in order to determine if this new piece of content is something that I might like. No amount of information from other people will actually make that more possible. Quite the opposite. It would only pollute the signal of my preferences.
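To make that comparison concrete, here is a minimal sketch that scores an unseen post against a profile built from liked posts. It swaps in plain cosine similarity over raw word counts for the LSA-derived vector described earlier, purely to keep the example dependency-free. The function names and sample texts are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and pull out runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Made-up stand-ins for the text of previously up-voted posts.
liked_posts = [
    "Sparse matrices make semantic analysis of text fast.",
    "Jupyter notebooks are great for exploring text data.",
]

# The profile is just the pooled word counts of everything liked.
profile = Counter()
for post in liked_posts:
    profile.update(tokenize(post))

def score(new_post):
    """How close a never-before-seen post sits to the liked profile."""
    return cosine(Counter(tokenize(new_post)), profile)

on_topic = "Exploring semantic analysis in Jupyter notebooks"
off_topic = "My cat slept on the couch all afternoon"
print(round(score(on_topic), 3), score(off_topic))
```

Nothing in `score` looks at anyone else's votes. The only inputs are the new post and the signal already emitted by one person's own history.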

Again, I am working actively to avoid an impositional process which originates on the system. I don't want the system to tell me what other people like, I want the system to be able to look at content and filter it for the things that I might like.

So far today I've managed to extract from the database my likes for an arbitrary period of time in the past, extract the text of those posts and comments, process them into word frequency lists, and I'm now preparing them to go into some kind of semantic analysis – but this is where things start getting hard.

What you're saying makes sense. I understand that you don't want to base the recommendations on other users.

I do think you need a specific way to characterize your feature vector though. N-grams is one way but it can blow up the dimensionality really quickly. You'll also have to think about how you want to handle images and other media. Do you also want to include some features representing the profile of the person voting?
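A quick way to see the dimensionality concern is to count features directly. The sketch below assumes word-level n-grams (character n-grams behave similarly) and uses a made-up sentence. It compares the n-grams actually observed with the number that could exist for the same vocabulary.

```python
def word_ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

text = "the cat sat on the mat and the dog sat on the rug"
tokens = text.split()
vocab_size = len(set(tokens))

# Distinct n-grams seen in this text versus the upper bound vocab_size ** n.
observed = {n: len(set(word_ngrams(tokens, n))) for n in (1, 2, 3)}
possible = {n: vocab_size ** n for n in (1, 2, 3)}

for n in (1, 2, 3):
    print(n, observed[n], possible[n])
```

The observed counts stay small on a toy sentence, but the space of possible features grows as a power of the vocabulary size, so a real corpus keeps filling in new columns as n increases.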

I would also be careful of creating your own filter bubble. Maybe create two models: one for maximizing the expected value of a recommendation and another for maximizing the maximum value of a set of recommendations. You could then mix core recommendations with discovery.

I understand that these suggestions only add complexity to any system you build so I only offer them as potential improvements.

In any case, I'd be happy to help you test and give feedback for what you build.

I've used N-grams before pretty successfully, and I'm really fond of the fact that they care nothing about source language or even source format if you filter the input properly. In this case, however, I'm just going with a bag of words solution which is essentially a giant dictionary with word frequency tags. That should work well enough for what I'm doing right now. I can always change the processing methodology to output a different vectorized descriptor if I really want to.

The actual dimensionality doesn't matter in this particular case because I have more than enough horsepower to throw at the problem and I'm working with sparse matrices anyway.
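As a rough illustration of why sparse storage takes the sting out of dimensionality, the sketch below turns a few made-up documents into bag-of-words rows that store only non-zero counts. The `to_sparse_rows` helper and its dictionary-of-dictionaries layout are assumptions for illustration, not the representation actually used.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and pull out runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())

def to_sparse_rows(docs):
    """Return (vocab, rows); each row maps a column id to a word count."""
    vocab = {}
    rows = []
    for doc in docs:
        row = {}
        for word, count in Counter(tokenize(doc)).items():
            # Assign the next free column id the first time a word is seen.
            col = vocab.setdefault(word, len(vocab))
            row[col] = count
        rows.append(row)
    return vocab, rows

docs = [
    "the firehose of posts never stops",
    "sparse matrices keep the word counts cheap",
    "posts about sparse matrices",
]
vocab, rows = to_sparse_rows(docs)

stored = sum(len(row) for row in rows)   # non-zero entries actually kept
total_cells = len(docs) * len(vocab)     # size of the equivalent dense matrix
print(len(vocab), stored, total_cells)
```

Each document only pays for the words it contains, so adding columns to the vocabulary costs nothing for rows that never use them.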

I'm stripping out images, HTML in general, URLs, pretty much anything that's not text. Those features are simply not interesting to me. The only features that are important about the person voting are the features from the things that they're voting for.

I want a filter bubble. That's the whole point. The vector space described by the up votes is going to be bulbous enough that effectively any sort of distance measure that involves it is going to involve some slop. That's more than enough to keep fuzzy match diversity fairly high – unless someone is extremely specific about what they vote for, in which case who am I to tell them what they want?

Again, the idea is to get away from the idea that anyone else has the right, ability, or insight to tell you what you like. You have emitted signals. Lots of them, actually, if you look at the features of things that you have voted up. A system shouldn't second guess you and your preferences.

I'm a technophile but I don't adhere to the cult of the machine. A discovery tool should do just that, and in this case specifically be a discovery tool for filtering the bloody firehose of posts which are created on a moment to moment basis by the steem blockchain.

This is a long way from any sort of testing or feedback. Right now it's in the early-prototype, feeling-around-the-model, looking-for-sharp-edges sort of place.