Be Careful! Digital Assistants Are Vulnerable to Hacker Attacks

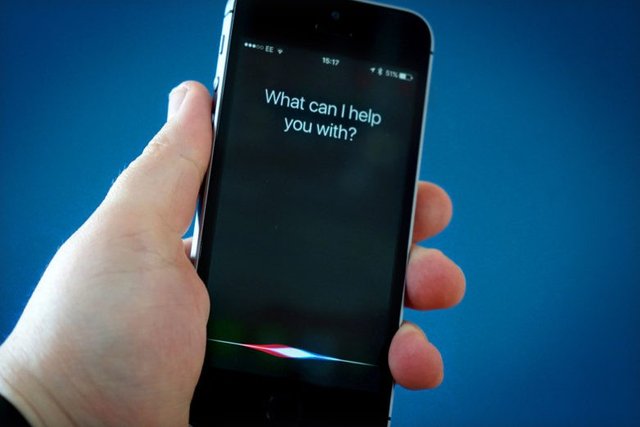

Voice assistants have recently become a technology that many vendors are developing. However, it turns out that voice assistants such as Siri and Alexa are potentially vulnerable to hackers.

This was revealed by a new vulnerability discovered by Chinese security researchers. The bug, which is essentially a design flaw, allows hackers to launch a new type of attack called DolphinAttack.

The attack lets cybercriminals "whisper" hidden commands to smartphones in order to hijack Siri and Alexa, as reported by IB Times on Friday, 8 September 2017.

Researchers at Zhejiang University said that DolphinAttack allows hackers to send voice commands to digital assistant apps using ultrasonic frequencies, which are too high for humans to hear but are picked up clearly by the device's microphone.
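How can a sound nobody hears still reach the assistant? The DolphinAttack researchers amplitude-modulate the voice command onto an ultrasonic carrier and rely on nonlinearity in the microphone hardware to shift the command back into the audible band. The Python sketch below illustrates that principle under heavily simplified assumptions: the "command" is a single 400 Hz tone rather than real speech, the 25 kHz carrier and 192 kHz sample rate are values chosen for illustration, and the microphone is modeled by a hypothetical toy function `mic_nonlinearity` (linear response plus a small quadratic term). It is not the researchers' implementation.

```python
import math

FS = 192_000         # sample rate in Hz (assumed); keeps all products, up to ~51 kHz, below Nyquist
CARRIER_HZ = 25_000  # ultrasonic carrier, above the ~20 kHz limit of human hearing (assumed value)
TONE_HZ = 400        # stand-in for a voice command; a real attack would modulate recorded speech
DURATION = 0.05      # seconds; every frequency used here completes a whole number of cycles


def baseband(n):
    """Hypothetical 'voice command': a 400 Hz sine in [-1, 1] at sample index n."""
    return math.sin(2 * math.pi * TONE_HZ * n / FS)


def am_modulate(n, depth=1.0):
    """Amplitude-modulate the baseband onto the ultrasonic carrier.

    The result only contains energy at 24.6, 25.0 and 25.4 kHz, i.e. inaudible.
    """
    return (1 + depth * baseband(n)) * math.cos(2 * math.pi * CARRIER_HZ * n / FS)


def mic_nonlinearity(x, a2=0.1):
    """Toy microphone model (assumption): linear term plus a small quadratic term.

    Squaring an AM signal recreates the baseband, which is the demodulation effect.
    """
    return x + a2 * x * x


def magnitude_at(signal, freq):
    """Single-bin DFT magnitude at `freq`, normalized by the number of samples."""
    re = sum(s * math.cos(2 * math.pi * freq * i / FS) for i, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * freq * i / FS) for i, s in enumerate(signal))
    return math.hypot(re, im) / len(signal)


samples = int(FS * DURATION)
transmitted = [am_modulate(n) for n in range(samples)]  # what travels through the air
recorded = [mic_nonlinearity(x) for x in transmitted]   # what the toy microphone outputs

print(f"{TONE_HZ} Hz in transmitted signal:   {magnitude_at(transmitted, TONE_HZ):.4f}")
print(f"{TONE_HZ} Hz after mic nonlinearity: {magnitude_at(recorded, TONE_HZ):.4f}")
```

Running it prints 0.0000 for the transmitted signal and 0.0500 for the recorded one: the 400 Hz "command" is absent from what is played through the air, yet it reappears once the quadratic term acts on the signal, which is what lets an inaudible broadcast reach the speech recognizer.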

The attack could let hackers hijack voice assistant apps such as Siri and Alexa and direct the device to malicious websites. The vulnerability reportedly affects voice assistant apps from Apple, Google, Amazon, Microsoft, Samsung, and Huawei.

The researchers say that with DolphinAttack, hackers can issue various types of commands, ranging from something as simple as saying "Hey Siri" to make an iPhone dial a particular number, to telling an Amazon Echo to "open the back door".

In several proof-of-concept attacks, the researchers issued inaudible voice commands that made Siri initiate FaceTime calls on an iPhone, made Google Now switch a phone into airplane mode, and manipulated the navigation system of an Audi car.

"The range of the attacks varies from 2 cm to a maximum of 175 cm and shows a large variation across devices. What's more, the maximum distance we can achieve for both attacks is 165 cm, on the Amazon Echo.

"We believe that the distance may increase with equipment that can produce sounds with higher pressure levels and better acoustic directionality, or by using shorter and more recognizable commands," the researchers said.

In other words, the effectiveness of DolphinAttack depends on the device. For example, using the technique to make an Amazon Echo open a door would be impractical, because the attacker would already need to be inside the target's house. Hacking an iPhone, by comparison, is simpler: the attacker only needs to get close to the target.

Because DolphinAttack depends on the victim having a voice assistant app switched on, the easiest way to stay safe from such attacks is to turn off Siri, Alexa, or Google Assistant. "I think Silicon Valley has blind spots in not thinking about how a product may be misused. It's not as robust a part of the product planning as it should be," said Ame Elliott, design director at SimplySecure.

Image source: 1. Reference: BetaNews.

I'll Be Back!

By following @geek-stuff, you will get up-to-date info.

"Geeks Are People Who Love Something So Much That All The Details Matter" - The Geek