Data as a Service (DaaS): Will it measure up in the long term?

For decades, the enterprise pipe dream has been to serve data like electricity: you consume it and are billed for exactly the amount used. In other words, data becomes a utility. Such a feat was all but unthinkable while the sun was shining on enterprise storage.

But how the mighty have fallen! Scour the storage networking conferences and you will be inundated with Data as a Service (DaaS) offerings from companies that are not traditional storage array vendors.

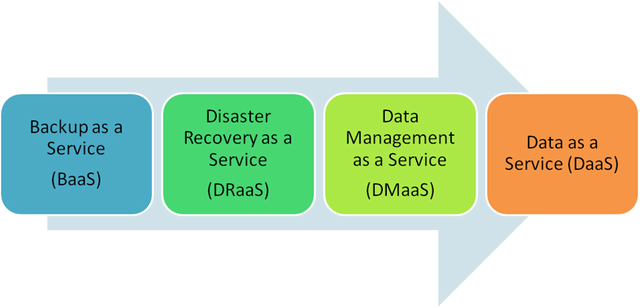

Ever since the cloud arrived, quite a few vendors have been beating the drum about DaaS. Simply put, DaaS is data when you need it, where you need it and how you need it. Naturally, the wise IT owls feel that it is just a new name for backup or disaster recovery.

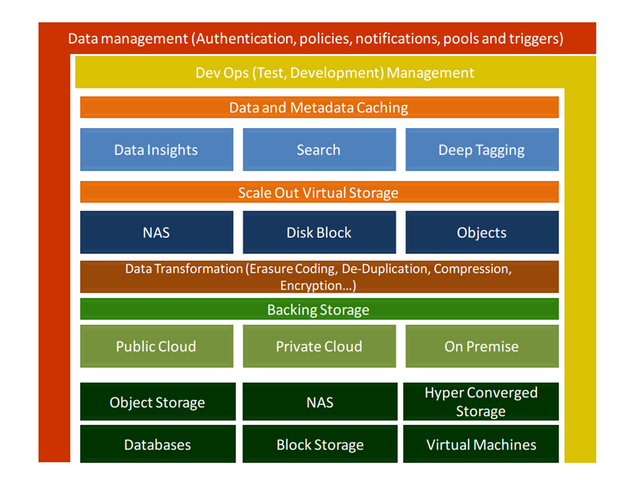

There is primary data and then there is everything else. This is the mantra of current DaaS providers, who offer scale-out secondary storage that can run to petabytes.

I find it ironic that Direct Attached Storage (DAS) vendors have been replaced by DaaS ones. Apparently, one extra letter in your service offering is transformational.

The DaaS argument to the CIO/IT manager is simple:

Primary data is precious. You decide its performance, its location, its availability and its entire maintenance. But your backup data is like dark fibre: it has practically no use apart from the eventual restore, which happens very rarely. Instead, once your data is copied to us, we will become the gold standard for all of it. We will bring the agile development model to DevOps, which means you can test application servers anytime, anywhere!

The reasons for going towards DaaS are far more mundane:

- The amount of data has skyrocketed and backups are not completing within the backup window

- Backup management with software agents, hardware array integration and archival devices is complex and time consuming

- A plethora of options such as snapshots, point-in-time copies, versioning and continuous replication has formed a hodgepodge of clashing and sometimes competing solutions that distract from application management

- Data being scattered around in different department silos rarely helps

- In this era of rapid application development and testing, storage infrastructure seems stuck in quicksand

- The processes that manage backup, disaster recovery and business continuity are inexplicably diverse

So what is the modus operandi for DaaS?

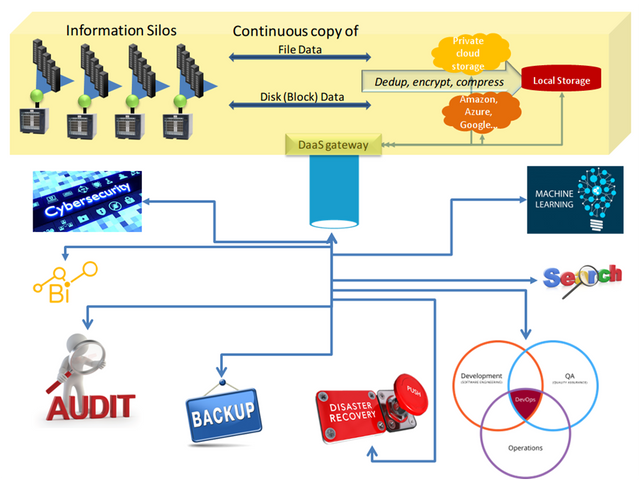

All DaaS vendors insist on becoming the gold copy of all data in the organization. Ambitious, you say? The principle is simple enough. They copy all data from primary application stores and become the universal gateway for the entire organization. Whatever your purpose, data has to be accessed through their appliances (software or hardware).

Data can rest on storage devices on your premises, sink into your private clouds or be scattered across one or many public clouds. The caveat is that IT administrators leave the primary application alone and unaffected.

I have to confess that when you see the beautiful animations that illustrate each vendor’s expertise, it almost seems like Moses willing manna from heaven!

The basic principle is to copy all data continuously over to data stores that are managed by the DaaS provider. Once the data is committed, it can be versioned, backed up to tape, ferried over to remote disaster recovery sites, encrypted and subjected to virtually any kind of data manipulation. In a virtual machine environment, even workloads can be spun up from saved VM files.
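The continuous-copy-then-version idea can be illustrated with a toy write journal. This is a minimal sketch, assuming writes arrive from the primary in timestamp order; `VersionedStore`, `commit` and `as_of` are hypothetical names, not any vendor's API. Replaying the journal up to a timestamp yields the point-in-time copy that a restore or a test environment would mount.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedStore:
    """Toy secondary store that keeps every committed write (hypothetical)."""

    # Each entry is (timestamp, key, value), appended in timestamp order.
    journal: list = field(default_factory=list)

    def commit(self, timestamp: float, key: str, value: bytes) -> None:
        # Assumption: the primary streams writes in timestamp order.
        self.journal.append((timestamp, key, value))

    def as_of(self, timestamp: float) -> dict:
        """Rebuild the point-in-time view by replaying writes up to `timestamp`."""
        view = {}
        for ts, key, value in self.journal:
            if ts > timestamp:
                break  # later writes are not part of this version
            view[key] = value
        return view


store = VersionedStore()
store.commit(1.0, "orders.db", b"v1")
store.commit(2.0, "orders.db", b"v2")
store.commit(3.0, "users.db", b"u1")
print(store.as_of(1.5))  # {'orders.db': b'v1'}
print(store.as_of(3.0))  # {'orders.db': b'v2', 'users.db': b'u1'}
```

Keeping every write is what makes any past version recoverable; real products bound the journal with retention policies, which this sketch ignores.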

Since there is minimal impact on primary workload performance, the solution can be assimilated easily into any enterprise datacenter.

The value proposition for DevOps is very clear. Testing, development and troubleshooting can all happen on copies of production workloads that are just a few measly minutes behind the original.

Remember those days when we used to sit and test against a 90 day old copy of production data? Obviously our fix would work and then even more obviously, the whole thing would blow up in our faces when deployed in production.

There are a whole bunch of DaaS vendors who use the cloud as a giant backing store. Most of them evolved from either backup software stacks or copy data management techniques. Maybe a more colloquial description will help to separate them:

- Disk gateways: They copy disk blocks from your production servers, and it does not matter whether you are running file or block storage protocols. They become a pass-through gateway where all storage objects are instantiated and shared with the rest of the datacenter. Once the data is copied, they become a massively scalable virtual storage appliance. They typically offer most of the functions of a file + block (SAN+NAS) storage appliance

- File gateways: They inhabit the known file storage universe. Your NAS servers or file systems on servers are copied over and they turn into a universal file system gateway. They can take file level snapshots, versioning, application checkpoints, tag per file metadata, etc. Even virtual machines are nothing but files in their world.
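For disk gateways, one simple way to keep a secondary copy current is to hash fixed-size blocks and ship only the blocks whose hashes differ. This is a hedged sketch of that generic technique, not a description of how any named vendor works (hypervisor changed-block-tracking interfaces are a common alternative); the 4 KiB block size and all function names are assumptions.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size in bytes


def block_hashes(disk: bytes, block_size: int = BLOCK_SIZE) -> list:
    """SHA-256 digest of every fixed-size block in a disk image."""
    return [
        hashlib.sha256(disk[i:i + block_size]).hexdigest()
        for i in range(0, len(disk), block_size)
    ]


def changed_blocks(primary: bytes, replica_hashes: list,
                   block_size: int = BLOCK_SIZE) -> dict:
    """Return {block_index: data} for blocks that differ from the replica."""
    changes = {}
    for index, digest in enumerate(block_hashes(primary, block_size)):
        if index >= len(replica_hashes) or replica_hashes[index] != digest:
            start = index * block_size
            changes[index] = primary[start:start + block_size]
    return changes


def apply_changes(replica: bytearray, changes: dict,
                  block_size: int = BLOCK_SIZE) -> None:
    """Patch the replica in place (assumes both images have the same size)."""
    for index, data in changes.items():
        start = index * block_size
        replica[start:start + len(data)] = data


# Usage: a 16 KiB "disk" where a single byte changes after the initial copy.
primary = bytearray(b"A" * BLOCK_SIZE * 4)
replica = bytearray(primary)            # initial full copy
primary[BLOCK_SIZE * 2] = ord("B")      # one byte changes inside block 2
delta = changed_blocks(bytes(primary), block_hashes(bytes(replica)))
print(sorted(delta))                    # [2]
apply_changes(replica, delta)
print(replica == primary)               # True
```

Only one 4 KiB block crosses the wire instead of the whole image, which is why block-level copying stays cheap regardless of the file or block protocol in use above it.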

The two approaches have much in common. Data that is copied over can be stored in the cloud or on any other commodity appliances you throw at them. They are also the single window for all your data. That means every secondary data activity flows through them: cyber surveillance, backup/restore, disaster recovery, application testing, solution development, audits, machine learning and business intelligence. They save space by using deduplication and compression. They secure information with encryption and scatter data across multiple destinations using erasure coding.
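The space-saving and resilience features listed above can be sketched in a few lines. This is a hypothetical illustration: fixed-size chunks are deduplicated by SHA-256 digest and compressed with zlib, and the erasure-coding part uses single XOR parity, the simplest erasure code (production systems typically use Reed-Solomon codes that survive several lost fragments). `DedupStore`, `xor_parity` and `recover` are made-up names.

```python
import hashlib
import zlib
from functools import reduce


class DedupStore:
    """Toy content-addressed chunk store (hypothetical, not a vendor API)."""

    def __init__(self, chunk_size: int = 8):
        # 8-byte chunks keep the demo readable; real systems use KB-sized
        # chunks (zlib actually enlarges inputs this small).
        self.chunk_size = chunk_size
        self.chunks = {}  # SHA-256 digest -> zlib-compressed chunk

    def put(self, data: bytes) -> list:
        """Store data and return its 'recipe': the ordered list of digests."""
        recipe = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:                   # deduplication
                self.chunks[digest] = zlib.compress(chunk)  # compression
            recipe.append(digest)
        return recipe

    def get(self, recipe: list) -> bytes:
        return b"".join(zlib.decompress(self.chunks[d]) for d in recipe)


def xor_parity(shards: list) -> bytes:
    """Single-parity erasure code: byte-wise XOR of equal-length shards."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*shards))


def recover(surviving: list, parity: bytes) -> bytes:
    """Rebuild the one missing shard from the survivors plus parity."""
    return xor_parity(surviving + [parity])


dedup = DedupStore()
r1 = dedup.put(b"ABCDEFGH" * 4)           # four identical chunks
r2 = dedup.put(b"ABCDEFGH" + b"12345678")
print(len(dedup.chunks))                  # 2

shards = [b"disk", b"data", b"here"]      # e.g. sent to three destinations
parity = xor_parity(shards)               # sent to a fourth
print(recover([shards[0], shards[2]], parity))  # b'data'
```

Six chunk references end up as two stored chunks, and any one of the four destinations can be lost without losing data.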

DaaS vendors differ subtly in how they position themselves, and it would be remiss of us not to attempt to categorize them:

The hardware guys

Quite a few of them provide scale-out appliances with SSDs as well as spinning disks. The cluster can be grown from one node to as many as desired. Companies like Cohesity and Rubrik fit the bill. If I were a customer, I would see myself buying another massive storage appliance to store secondary data, with a whole lot of added features. If you use Cohesity’s TCO calculator or their precomputed calculations, you will see that 250 terabytes costs an upfront $5/GB. Rubrik, on the other hand, seems to be less expensive. Although both are available as software deployments, their hardware avatars are more popular.

They support file as well as disk protocols and are hence the premium offerings in this space.

Software all-in-ones

A few vendors mimic the functionality of the hardware vendors with custom-built software solutions. Actifio is easily one of the more recognized players in this space because they were the first to introduce copy data virtualization techniques. Catalogic’s ECX software does pretty much the same thing, while Delphix limits itself to databases. The storage backend can be virtually anything, including public clouds. Software costs are around a quarter of the hardware product pricing.
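The quoted prices translate into rough totals as follows. This is back-of-envelope arithmetic under two loudly stated assumptions: decimal units (1 TB = 1,000 GB), and that the "around a quarter" software ratio can be applied to this particular hardware quote. Real TCO calculators include support, power and growth terms that are ignored here.

```python
# Back-of-envelope only. Assumptions: decimal units (1 TB = 1,000 GB) and
# that the ~1/4 software ratio applies to this specific hardware quote.
capacity_gb = 250 * 1_000      # 250 TB
hardware_per_gb = 5.00         # upfront $/GB quoted for Cohesity
software_ratio = 0.25          # software ~ 1/4 of hardware pricing

hardware_cost = capacity_gb * hardware_per_gb
software_cost = hardware_cost * software_ratio

print(f"Hardware: ${hardware_cost:,.0f}")   # Hardware: $1,250,000
print(f"Software: ${software_cost:,.0f}")   # Software: $312,500
```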

Actifio’s offering is particularly interesting because they are able to create thousands of versions of a single disk at different points in time with absolutely no wasted storage.

Their ability to be a pure-play software provider and bring the power of the cloud into on-premises solutions makes them stand out.
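How can thousands of versions avoid wasted storage? The article does not describe Actifio's internals, so the following is only a generic sketch of block sharing: each snapshot copies a map of block pointers rather than the data, and a write redirects the live map to a freshly allocated block (redirect-on-write, a close cousin of copy-on-write). `SharedBlockVolume` and its methods are hypothetical.

```python
class SharedBlockVolume:
    """Toy volume whose snapshots share unchanged blocks (hypothetical)."""

    def __init__(self, num_blocks: int):
        self.store = {}        # physical block id -> data
        self.next_id = 0
        self.live = [self._alloc(b"\x00") for _ in range(num_blocks)]
        self.snapshots = []    # each snapshot is a list of block ids

    def _alloc(self, data: bytes) -> int:
        block_id = self.next_id
        self.store[block_id] = data
        self.next_id += 1
        return block_id

    def snapshot(self) -> int:
        # Copy only the pointer map, never the data itself.
        self.snapshots.append(list(self.live))
        return len(self.snapshots) - 1

    def write(self, index: int, data: bytes) -> None:
        # Redirect-on-write: the live map points at a new block, while
        # older snapshots keep pointing at the old one.
        old_id = self.live[index]
        self.live[index] = self._alloc(data)
        if not any(old_id in snap for snap in self.snapshots):
            del self.store[old_id]  # no version needs it, so reclaim it

    def read(self, index: int, snap=None) -> bytes:
        block_map = self.live if snap is None else self.snapshots[snap]
        return self.store[block_map[index]]


vol = SharedBlockVolume(num_blocks=4)
for _ in range(1000):
    vol.snapshot()
vol.write(1, b"new")
print(len(vol.snapshots), len(vol.store))  # 1000 5
print(vol.read(1), vol.read(1, snap=0))    # b'new' b'\x00'
```

A thousand versions cost a thousand small pointer maps plus one extra data block: physical storage grows only with what actually changed.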

Universal filers

Almost 90% of the solutions in this space fall into this category. They pull file data from any server or file server, consolidate it, tag it and present a universal view of all the data. This is quite a different approach. Just imagine: if your datacenter held only file objects, these appliances could map all of your data and we would be drinking Kool-Aid from the Holy Grail, no less!
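The "universal view" these filers build can be pictured as a single namespace merged from many servers, with metadata tags layered on top. A minimal sketch, assuming each source reports a `{path: size_in_bytes}` listing; the server names, paths, tagging rule and function names are all hypothetical. Note that a VM disk image is just another file to be tagged.

```python
def build_universal_view(sources: dict) -> dict:
    """Merge per-server listings {path: size_in_bytes} into one namespace."""
    view = {}
    for server, files in sources.items():
        for path, size in files.items():
            view[f"/{server}{path}"] = {"size": size, "tags": set()}
    return view


def tag_by_suffix(view: dict, suffix: str, label: str) -> None:
    """Attach a metadata tag to every file whose path ends with `suffix`."""
    for path, meta in view.items():
        if path.endswith(suffix):
            meta["tags"].add(label)


def find(view: dict, label: str) -> list:
    """List every path in the universal view carrying `label`."""
    return sorted(path for path, meta in view.items() if label in meta["tags"])


# Hypothetical servers and files.
sources = {
    "nas01": {"/finance/q3.xlsx": 52_000, "/vm/web01.vmdk": 40_000_000},
    "hr-files": {"/policies/leave.pdf": 9_000},
}
view = build_universal_view(sources)
tag_by_suffix(view, ".vmdk", "virtual-machine")
tag_by_suffix(view, ".xlsx", "finance")
print(find(view, "virtual-machine"))  # ['/nas01/vm/web01.vmdk']
print(len(view))                      # 3
```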

Nasuni deserves special mention here because they are the file server of file servers. Their appliances cache data from different sources and function as a single file storage window for the entire enterprise. In fact, Nasuni is a complete file storage lifecycle provider, from primary storage to archives.

As far as secondary data management goes, many companies offer scale-out NAS services. Cloudian appliances are object storage gateways. Others, like Igneous, Aparavi, Druva and Scality, are file storage gateways. Some of them act as routers and others cache data locally.
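The gateways that cache data locally behave roughly like a read-through cache in front of cloud storage. This sketch assumes a least-recently-used (LRU) eviction policy and write-through semantics, both chosen purely for illustration; the actual caching policies of Nasuni or the other vendors are not described here, and `CachingGateway` is a hypothetical name.

```python
from collections import OrderedDict


class CachingGateway:
    """Read-through LRU cache in front of a slow backing store (hypothetical)."""

    def __init__(self, backing_store: dict, capacity: int):
        self.backing = backing_store      # stands in for cloud object storage
        self.capacity = capacity
        self.cache = OrderedDict()        # ordered from least to most recent
        self.hits = 0
        self.misses = 0

    def _evict_if_full(self) -> None:
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # drop the least recently used

    def read(self, key: str) -> bytes:
        if key in self.cache:
            self.cache.move_to_end(key)   # mark as most recently used
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        value = self.backing[key]         # slow path: fetch from the cloud
        self.cache[key] = value
        self._evict_if_full()
        return value

    def write(self, key: str, value: bytes) -> None:
        # Write-through: update the backing store and the local cache.
        self.backing[key] = value
        self.cache[key] = value
        self.cache.move_to_end(key)
        self._evict_if_full()


cloud = {"a": b"1", "b": b"2", "c": b"3"}
gw = CachingGateway(cloud, capacity=2)
for key in ["a", "b", "a", "c", "b"]:
    gw.read(key)
print(gw.hits, gw.misses)  # 1 4
```

A router-style gateway would simply forward every read to the backing store; the cache trades local capacity for fewer round trips to the cloud.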

Software offerings are easy to deploy, low cost and widely applicable, from enterprises to SMEs.

Is DaaS here to stay, or is it just a step in the evolution of the datacenter?

Source: ZDNet

This is the million-dollar question, because for a long time we have been used to data being married to the application that produces it. Unstructured data, however, has no parents and no children. We can safely assume that unstructured data will keep growing until modern-day applications change. There are some compelling indicators that help predict whether DaaS will measure up in the coming decade.

ZDNet's IT budget survey for 2017-2018 is very informative.

IT budgets are not aligned for DaaS

IT spending priorities indicate that disaster recovery is still the lowest priority for datacenter managers, while cyber security does not even make the list. This is significant because DaaS spending comes directly out of DR/BC funds. Even though DaaS is eating into the primary storage market, it remains to be seen whether CIOs will see it that way in the long run.

DaaS software forms a very minor part of the enterprise software market

The combined might of software that deals with management of information, storage and IT is 15% of the total enterprise budget. This sector is filled with business analytics, primary infrastructure administration, business continuity, backup, datacenter management, etc. DaaS vendors are still seen as software or hardware providers and this will not change because the cloud behind them is the real infrastructure.

Cyber security or business resilience will not be strong use cases for DaaS in the long term

In-flight cyber surveillance has been around forever if you consider anti-virus scanning, intrusion detection, network monitoring, etc. What remains is deeper scanning, anomaly detection on cold data and tighter integration with disaster recovery. As persistent memory grows (read Optane!), in-flight scanning and prevention will take center stage.

Point use cases will not work

The DaaS vendors that survive will not do so by focusing only on GDPR, database protection, cyber security or test/development enablement. They need to be all-in-one solutions, because it does not make sense (or cents) in ROI terms for the enterprise to invest in point solutions for secondary storage management.

DaaS in its current form will not add value to Big Data

Even though the rise of DaaS is driven by unstructured data, insights can only be gained after several ETL cycles. This means that raw data is of very little use until it has been transformed. Big Data exists without DaaS today and will continue in isolated form for the foreseeable future.

The danger of being the golden copy

Eventually, the entire onus of data resilience will fall on the shoulders of the DaaS provider. Can the enterprise IT manager trust a single product to own all of the data? Remember that the DaaS guys will obfuscate the data in order to achieve their ends, and this will be an extreme case of vendor lock-in. What if there is a catastrophic failure? If the data is corrupted, it can never be recreated. Will the DaaS provider have the pedigree to outlast the deeply entrenched backup software vendors?

Cloud providers will have their say

Just imagine if Google Cloud Filestore offered data management features. It is already a native scale-out NAS. AWS could do the same with a souped-up version of its EFS. Cloud providers are watching this space very closely, and you can bet that they understand the massive potential of being the gold copy of data. All it takes is a single acquisition and DaaS companies will be out of the market.

Conclusions

It is fair to say that:

- DaaS vendors are carving up the enterprise storage market and their real impact is on existing storage vendors

- It is still unclear whether DaaS as secondary storage will survive in the long run. It seems like a band-aid at the moment

- Cloud providers will control this market eventually

Hope you enjoyed reading. Your comments are welcome.
